Add a preview option `uv init --build-backend uv --preview` that uses

the uv build backend when generating the project. The uv build backend

is in preview, so the option is also guarded by preview and hidden from

the help message and docs.

For https://github.com/astral-sh/uv/issues/3957#issuecomment-2518757563

## Summary

A lot of good new lints, and most importantly, error stabilizations. I

tried to find a few usages of the new stabilizations, but I'm sure there

are more.

IIUC, this _does_ require bumping our MSRV.

When performing a no-op sync, we don't need the rayon thread pool, yet we pay for its initialization. By making the initialization lazy, we avoid that cost.

This code runs every time before user code in `uv run`.

This means that before calling rayon, one now needs to call

`LazyLock::force(&RAYON_INITIALIZE);`.
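A minimal sketch of the lazy-initialization pattern, using `std::sync::LazyLock` (stable since Rust 1.80). The real static configures rayon's global thread pool; here a hypothetical `init_pool` stands in for that setup and only counts how often it runs, so the example has no dependencies.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::LazyLock;

/// Counts how many times the (hypothetical) pool setup has run.
pub static INIT_CALLS: AtomicUsize = AtomicUsize::new(0);

/// Stand-in for building rayon's global thread pool (hypothetical).
fn init_pool() {
    INIT_CALLS.fetch_add(1, Ordering::SeqCst);
}

/// Nothing runs at startup; `init_pool` runs on the first `force`.
pub static RAYON_INITIALIZE: LazyLock<()> = LazyLock::new(init_pool);

/// A call site that needs parallelism forces the lock first.
pub fn parallel_work() -> usize {
    LazyLock::force(&RAYON_INITIALIZE);
    INIT_CALLS.load(Ordering::SeqCst)
}
```

Because the closure runs at most once, forcing the lock at every rayon call site is cheap, and a no-op sync that never reaches rayon never pays for the setup.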

Performance mode (CPU 0 is a perf core):

```

$ taskset -c 0 hyperfine --warmup 5 -N "/home/konsti/projects/uv/uv-main sync" "/home/konsti/projects/uv/target/profiling/uv sync"

Benchmark 1: /home/konsti/projects/uv/uv-main sync

Time (mean ± σ): 4.5 ms ± 0.1 ms [User: 2.7 ms, System: 1.8 ms]

Range (min … max): 4.4 ms … 6.4 ms 640 runs

Warning: Statistical outliers were detected. Consider re-running this benchmark on a quiet system without any interferences from other programs. It might help to use the '--warmup' or '--prepare' options.

Benchmark 2: /home/konsti/projects/uv/target/profiling/uv sync

Time (mean ± σ): 4.4 ms ± 0.1 ms [User: 2.7 ms, System: 1.6 ms]

Range (min … max): 4.3 ms … 5.0 ms 679 runs

Summary

/home/konsti/projects/uv/target/profiling/uv sync ran

1.03 ± 0.04 times faster than /home/konsti/projects/uv/uv-main sync

```

Power saver mode:

```

$ hyperfine --warmup 5 -N "/home/konsti/projects/uv/uv-main sync" "/home/konsti/projects/uv/target/profiling/uv sync"

Benchmark 1: /home/konsti/projects/uv/uv-main sync

Time (mean ± σ): 28.1 ms ± 1.2 ms [User: 15.5 ms, System: 20.3 ms]

Range (min … max): 25.7 ms … 31.9 ms 102 runs

Benchmark 2: /home/konsti/projects/uv/target/profiling/uv sync

Time (mean ± σ): 24.0 ms ± 1.2 ms [User: 13.8 ms, System: 9.9 ms]

Range (min … max): 22.2 ms … 28.2 ms 122 runs

Summary

/home/konsti/projects/uv/target/profiling/uv sync ran

1.17 ± 0.08 times faster than /home/konsti/projects/uv/uv-main sync

```


## Summary

Resolves #9333

This pull request introduces support for the `--no-extra` command-line flag and the corresponding `no-extra` uv setting.

### Behavior

- When `--all-extras` is supplied, the specified extras in `--no-extra`

will be excluded from the installation.

- If `--all-extras` is not supplied, `--no-extra` has no effect and is

safely ignored.
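The rules above can be sketched as a small function. This is a hypothetical helper, not the actual `ExtrasSpecification::from_args` / `extra_names` API: it takes the package's extras, the `--extra` values, the `--all-extras` flag, and the `--no-extra` values, and returns the extras to install.

```rust
/// Hypothetical helper modeling the `--no-extra` rules.
pub fn selected_extras(
    package_extras: &[&str], // extras declared by the package
    extra: &[&str],          // values passed to `--extra`
    all_extras: bool,        // `--all-extras`
    no_extra: &[&str],       // values passed to `--no-extra`
) -> Vec<String> {
    let candidates: &[&str] = if all_extras { package_extras } else { extra };
    let mut selected: Vec<String> = Vec::new();
    for name in candidates {
        // `--no-extra` only filters the `--all-extras` set; otherwise it is ignored.
        // Names that match no available extra simply never appear here.
        if all_extras && no_extra.contains(name) {
            continue;
        }
        // Tolerate duplicate entries without erroring.
        if !selected.iter().any(|s: &String| s == name) {
            selected.push(name.to_string());
        }
    }
    selected
}
```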

## Test Plan

Since `ExtrasSpecification::from_args` and

`ExtrasSpecification::extra_names` are the most important parts in the

implementation, I added the following tests in the

`uv-configuration/src/extras.rs` module:

- **`test_no_extra_full`**: Verifies behavior when `no_extra` includes

the entire list of extras.

- **`test_no_extra_partial`**: Tests partial exclusion, ensuring only

specified extras are excluded.

- **`test_no_extra_empty`**: Confirms that no extras are excluded if

`no_extra` is empty.

- **`test_no_extra_excessive`**: Ensures the implementation ignores

`no_extra` values that don't match any available extras.

- **`test_no_extra_without_all_extras`**: Validates that `no_extra` has

no effect when `--all-extras` is not supplied.

- **`test_no_extra_without_package_extras`**: Confirms correct behavior

when no extras are available in the package.

- **`test_no_extra_duplicates`**: Verifies that duplicate entries in

`pkg_extras` or `no_extra` do not cause errors.

---------

Co-authored-by: Charlie Marsh <charlie.r.marsh@gmail.com>


## Summary


PR #4965 added `*-manylinux_2_31` as a target triple, and issue #4966

described the need for a more general solution.

In lieu of a general solution, this PR adds further explicit manylinux target triples for different glibc versions, up to the one used by the latest Ubuntu release (glibc 2.40 in Ubuntu 24.10).

## Test Plan


Local, manual testing with a Python wheel targeting

`x86_64-manylinux_2_35`.

## Summary

These were moved as part of a broader refactor to create a single

integration test module. That "single integration test module" did

indeed have a big impact on compile times, which is great! But we aren't

seeing any benefit from moving these tests into their own files (despite the claim in [this blog post](https://matklad.github.io/2021/02/27/delete-cargo-integration-tests.html), I see the same compilation pattern regardless of where the tests are located). Plus, we don't have many of these, and same-file tests are such a strong Rust convention.

Fixes #9164

Using clap's `default_value_t` makes the `flag` function unhappy, so we just set the default when we unwrap. Tested with no flags, `--verify-hashes`, `--no-verify-hashes`, and the setting in `uv.toml`.
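A sketch of the approach, under the assumption that hash verification is on by default. `flag` here mirrors the idea of collapsing a `--verify-hashes` / `--no-verify-hashes` pair into an `Option<bool>`; the names and signatures are illustrative, not copied from uv.

```rust
/// Collapse a `--flag` / `--no-flag` pair into a tri-state (sketch).
pub fn flag(yes: bool, no: bool) -> Option<bool> {
    match (yes, no) {
        (true, false) => Some(true),
        (false, true) => Some(false),
        (false, false) => None,
        // Assumes the CLI parser already rejects passing both flags.
        (true, true) => unreachable!("conflicting flags"),
    }
}

/// The CLI beats `uv.toml`; the default is applied only when unwrapping,
/// so "unset" can still fall through to the config file.
pub fn resolve_verify_hashes(cli: Option<bool>, config: Option<bool>) -> bool {
    cli.or(config).unwrap_or(true)
}
```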

---------

Co-authored-by: Charlie Marsh <charlie.r.marsh@gmail.com>

This PR adds support for `tool.uv.default-groups`, which defaults to

`["dev"]` for backwards-compatibility. These represent the groups we

sync by default.

Part of #8090

Unblocks https://github.com/astral-sh/uv/pull/8274

Refactors `DevMode` and `DevSpecification` into a shared type

`DevGroupsSpecification` that allows us to track if `--dev` was

implicitly or explicitly provided.

Part of #8090

Adds the ability to include a group (`--group`) in the sync or _only_

sync a group (`--only-group`). Includes all groups in the resolution,

which will have the same limitations as extras as described in #6981.
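A rough model of how the group flags could combine with `tool.uv.default-groups`. The `GroupFlags` type and `groups_to_sync` function are hypothetical, and the assumption that `--group` adds to the default groups (rather than replacing them) is mine.

```rust
use std::collections::BTreeSet;

/// Hypothetical model of the group flags; not uv's actual types.
pub struct GroupFlags<'a> {
    pub group: Vec<&'a str>,      // `--group`
    pub only_group: Vec<&'a str>, // `--only-group`
}

/// `default_groups` stands in for `tool.uv.default-groups`.
/// Returns the groups to sync and whether the project itself is included.
pub fn groups_to_sync<'a>(
    flags: &GroupFlags<'a>,
    default_groups: &[&'a str],
) -> (BTreeSet<&'a str>, bool) {
    if !flags.only_group.is_empty() {
        // `--only-group`: sync just these groups, without the project's own dependencies.
        return (flags.only_group.iter().copied().collect(), false);
    }
    // Otherwise start from the defaults and add any `--group` values.
    let mut groups: BTreeSet<&'a str> = default_groups.iter().copied().collect();
    groups.extend(flags.group.iter().copied());
    (groups, true)
}
```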

There's a great deal of refactoring of the "development" concept into

"groups" behind the scenes that I am continuing to defer here to

minimize the diff.

Additionally, this does not yet resolve interactions with the existing

`dev` group — we'll tackle that separately as well. I probably won't

merge the stack until that design is resolved. The current proposal is that we'll just "combine" the `dev-dependencies` contents into the `dev` group.

Part of #8090

Adds the ability to add and remove dependencies from arbitrary groups

using `uv add` and `uv remove`. Does not include resolving with the new

dependencies — tackling that in #8110.

Additionally, this does not yet resolve interactions with the existing

`dev` group — we'll tackle that separately as well. I probably won't

merge the stack until that design is resolved.

## Summary

We shouldn't show these in `uv add`, especially when the thing we're

adding is about to have a lower-bound put on it. Now, we only show these

when the user runs `uv lock` or `uv sync`.

## Summary

This PR declares and documents all environment variables that are used

in one way or another in `uv`, either internally, or externally, or

transitively under a common struct.

I think over time, as uv has grown, many environment variables have been introduced. It's harder to know which ones exist, which ones are missing, what they're used for, or where they are used across the code. The docs only document a handful of them; for the others, you'd have to dive into the code and inspect across crates to know which crates they're used in or where they're relevant.

This PR is a starting attempt to unify them, make it easier to discover which ones we have, and maybe unlock future possibilities in automatically generating documentation for them.

I think we can split them into multiple structs later for better organization, but given the high influx of PRs (and possibly new or re-used environment variables), it would be hard to organize them all into properly namespaced structs right now without constant merge conflicts.

I don't think this has any impact on performance as they all should

still be inlined, although it may affect local build times on changes to

the environment vars as more crates would likely need a rebuild. Lastly,

some of them are declared but not used in the code, for example those in

`build.rs`. I left them declared because I still think it's useful to at

least have a reference.
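A minimal sketch of the pattern: a unit struct whose associated constants name each variable, each with a doc comment. The struct name and doc strings are illustrative; the variable names are ones that appear elsewhere in this document. Associated `const`s are inlined at each use site, which is why the change shouldn't affect runtime performance.

```rust
/// All environment variables declared in one place (sketch).
pub struct EnvVars;

impl EnvVars {
    /// Maximum number of concurrent downloads.
    pub const UV_CONCURRENT_DOWNLOADS: &'static str = "UV_CONCURRENT_DOWNLOADS";
    /// Maximum number of concurrent builds.
    pub const UV_CONCURRENT_BUILDS: &'static str = "UV_CONCURRENT_BUILDS";
    /// Hosts for which certificate verification is disabled.
    pub const UV_INSECURE_HOST: &'static str = "UV_INSECURE_HOST";
}

/// Call sites reference the constant instead of a string literal.
pub fn concurrent_downloads() -> Option<usize> {
    std::env::var(EnvVars::UV_CONCURRENT_DOWNLOADS).ok()?.parse().ok()
}
```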

Did I miss any? Are their initial docs cohesive?

Note, `uv-static` is a terrible name for a new crate, thoughts? Others

considered `uv-vars`, `uv-consts`.

## Test Plan

Existing tests

Allow `*` as a value to match all hosts, and provide `reqwest_blocking_get` for uv tests, so that they also respect `UV_INSECURE_HOST` (since they respect `ALL_PROXY`).

This lets those tests pass with a forward proxy - we can think about

setting a root certificate later so that we don't need to disable

certificate verification at all.
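A sketch of how an entry might be parsed and matched, assuming entries take the form `*`, `host`, or `host:port`. `TrustedHost` is a hypothetical type for illustration, and the naive `rsplit_once(':')` split does not handle bracketed IPv6 addresses.

```rust
/// Hypothetical parsed form of one `UV_INSECURE_HOST` entry.
#[derive(Debug, PartialEq)]
pub enum TrustedHost {
    /// `*` matches every host.
    Wildcard,
    /// A host with an optional port, e.g. `localhost` or `localhost:8080`.
    Host { host: String, port: Option<u16> },
}

impl TrustedHost {
    /// Returns `None` if a port is present but not a valid `u16`.
    pub fn parse(s: &str) -> Option<TrustedHost> {
        if s == "*" {
            return Some(TrustedHost::Wildcard);
        }
        match s.rsplit_once(':') {
            Some((host, port)) => Some(TrustedHost::Host {
                host: host.to_string(),
                port: Some(port.parse().ok()?),
            }),
            None => Some(TrustedHost::Host { host: s.to_string(), port: None }),
        }
    }

    /// An entry without a port matches the host on any port.
    pub fn matches(&self, host: &str, port: Option<u16>) -> bool {
        match self {
            TrustedHost::Wildcard => true,
            TrustedHost::Host { host: h, port: p } => h == host && (p.is_none() || *p == port),
        }
    }
}
```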

---

I tested this locally by running:

```bash

GIT_SSL_NO_VERIFY=true ALL_PROXY=localhost:8080 UV_INSECURE_HOST="*" cargo nextest run sync_wheel_path_source_error

```

With my forward proxy showing:

```

2024-10-09T18:20:16.300188Z INFO fopro: Proxied GET cc2fedbd88a6546c1727ae13fa977a/cffi-1.17.1-cp310-cp310-macosx_11_0_arm64.whl (headers 480.024958ms + body 92.345666ms)

2024-10-09T18:20:16.913298Z INFO fopro: Proxied GET https://pypi.org/simple/pycparser/ (headers 509.664834ms + body 269.291µs)

2024-10-09T18:20:17.383975Z INFO fopro: Proxied GET 5f610ebe4298517d912eb1c76e1a53/pycparser-2.21-py2.py3-none-any.whl.metadata (headers 443.184208ms + body 2.094792ms)

```

This follows https://matklad.github.io/2021/02/27/delete-cargo-integration-tests.html. Before this change, there were 91 separate integration test binaries.

(As discussed on Discord: I've done the `uv` crate, but there are still a few more commits coming before this is mergeable, and I want to see how it performs in CI and locally.)

## Summary

Similar to `cargo init --vcs <VCS>`, this PR adds the `--vcs <VCS>` flag for `uv init`, allowing users to initialize a version control repository when creating a project. By default, `uv init` will create a Git repository if the `--vcs` flag is not provided. Use `--vcs none` to disable this feature.

Currently, only Git is supported. While Cargo also supports hg, pijul,

and fossil, this initial PR only includes Git. We can add more later if

there are any user requests.
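A possible model of the flag value, assuming it is parsed from the strings `git` and `none`. The `VersionControlSystem` name and `resolve_vcs` helper are hypothetical.

```rust
use std::str::FromStr;

/// Hypothetical model of the `--vcs` value; only Git is supported so far.
#[derive(Debug, Clone, Copy, PartialEq, Eq, Default)]
pub enum VersionControlSystem {
    #[default]
    Git,
    None,
}

impl FromStr for VersionControlSystem {
    type Err = String;

    fn from_str(s: &str) -> Result<Self, Self::Err> {
        match s {
            "git" => Ok(Self::Git),
            "none" => Ok(Self::None),
            other => Err(format!("unsupported VCS: {other}")),
        }
    }
}

/// `flag` is `None` when `--vcs` is absent, so the default (Git) applies.
pub fn resolve_vcs(flag: Option<&str>) -> Result<VersionControlSystem, String> {
    flag.map_or(Ok(VersionControlSystem::default()), |s| s.parse())
}
```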

---------

Co-authored-by: Charlie Marsh <charlie.r.marsh@gmail.com>

## Summary

We need to apply the `--no-install` filters earlier, such that we don't

error if we only have a source distribution for a given package when

`--no-build` is provided but that package is _omitted_.

Closes #7247.
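A sketch of the reordering, using a hypothetical `Package` type: packages omitted via the `--no-install` options are filtered out first, and only the remaining packages are checked for a wheel when `--no-build` is set.

```rust
/// Minimal stand-in for a locked package (hypothetical).
pub struct Package {
    pub name: &'static str,
    pub has_wheel: bool,
}

/// Drop omitted packages *before* the `--no-build` check, so a source-only
/// package the user excluded no longer triggers an error.
pub fn plan_install<'a>(
    packages: &'a [Package],
    no_install: &[&str],
    no_build: bool,
) -> Result<Vec<&'a str>, String> {
    let mut plan = Vec::new();
    for package in packages.iter().filter(|p| !no_install.contains(&p.name)) {
        if no_build && !package.has_wheel {
            return Err(format!("{} has no wheel and `--no-build` is set", package.name));
        }
        plan.push(package.name);
    }
    Ok(plan)
}
```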

## Summary

This PR adds a more flexible cache invalidation abstraction for uv, and

uses that new abstraction to improve support for dynamic metadata.

Specifically, instead of relying solely on a timestamp, we now pass

around a `CacheInfo` struct which (as of now) contains

`Option<Timestamp>` and `Option<Commit>`. The `CacheInfo` is saved in

`dist-info` as `uv_cache.json`, so we can test already-installed

distributions for cache validity (along with testing _cached_

distributions for cache validity).
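A simplified sketch of the idea. Field types are assumptions (`SystemTime` for the timestamp, a commit hash as a `String`), and `CacheInfo::new` and `is_fresh` are hypothetical helpers; the real struct is also serialized to `uv_cache.json`, which this example omits.

```rust
use std::time::SystemTime;

/// Simplified mirror of the idea; field types are assumptions.
#[derive(Debug, Clone, PartialEq, Eq, Default)]
pub struct CacheInfo {
    /// Latest modification time among the watched files.
    pub timestamp: Option<SystemTime>,
    /// Current Git commit, for `setuptools_scm`-style versioning.
    pub commit: Option<String>,
}

impl CacheInfo {
    /// Build from the modification times of the cache-key files and an optional commit.
    pub fn new(mtimes: &[SystemTime], commit: Option<&str>) -> Self {
        Self {
            timestamp: mtimes.iter().max().copied(),
            commit: commit.map(str::to_string),
        }
    }
}

/// A cached or installed entry is fresh only if every recorded component is unchanged.
pub fn is_fresh(stored: &CacheInfo, current: &CacheInfo) -> bool {
    stored == current
}
```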

Beyond the defaults (`pyproject.toml`, `setup.py`, and `setup.cfg`

changes), users can also specify additional cache keys, and it's easy

for us to extend support in the future. Right now, cache keys can either

be instructions to include the current commit (for `setuptools_scm` and

similar) or file paths (for `hatch-requirements-txt` and similar):

```toml

[tool.uv]

cache-keys = [{ file = "requirements.txt" }, { git = true }]

```

This change should be fully backwards compatible.

Closes https://github.com/astral-sh/uv/issues/6964.

Closes https://github.com/astral-sh/uv/issues/6255.

Closes https://github.com/astral-sh/uv/issues/6860.

## Summary

Like `uv sync`, you can omit the current project (`--no-emit-project`),

a specific package (`--no-emit-package`), or the entire workspace

(`--no-emit-workspace`).

Closes https://github.com/astral-sh/uv/issues/6960.

Closes #6995.

## Summary

The interface here is intentionally a bit more limited than `uv pip compile`, because we don't want `requirements.txt` to be a system of record -- it's just an export format. So, we don't write annotation comments (i.e., which package requested which dependency), we don't allow writing extras, etc. It's just a flat list of requirements, with their markers and hashes.

Closes #6007.

Closes #6668.

Closes #6670.
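A sketch of the flat output, using a hypothetical `Locked` type. The exact layout (`name==version ; marker` followed by indented `--hash=` lines joined with backslash continuations) is an assumption about what such an export looks like, not a copy of uv's writer.

```rust
/// One resolved package (hypothetical shape).
pub struct Locked<'a> {
    pub name: &'a str,
    pub version: &'a str,
    pub marker: Option<&'a str>,
    pub hashes: Vec<&'a str>,
}

/// Render a flat `requirements.txt`: no annotation comments, no extras,
/// just pins with their markers and hashes.
pub fn export(packages: &[Locked]) -> String {
    let mut out = String::new();
    for p in packages {
        out.push_str(&format!("{}=={}", p.name, p.version));
        if let Some(marker) = p.marker {
            out.push_str(&format!(" ; {marker}"));
        }
        for hash in &p.hashes {
            // Continue the requirement onto an indented hash line.
            out.push_str(&format!(" \\\n    --hash={hash}"));
        }
        out.push('\n');
    }
    out
}
```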

## Summary

This PR revives https://github.com/astral-sh/uv/pull/4944, which I think

was a good start towards adding `--trusted-host`. Last night, I tried to

add `--trusted-host` with a custom verifier, but we had to vendor a lot

of `reqwest` code and I eventually hit some private APIs. I'm not

confident that I can implement it correctly with that mechanism, and since this is security-sensitive, correctness is the priority.

So, instead, we now use two clients and multiplex between them.
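A sketch of the multiplexing, with a stand-in `Client` that only records whether TLS verification is enabled. `MultiplexedClient` and `for_host` are hypothetical; a trusted-host entry is assumed to match either the bare host or `host:port`, which is consistent with the manual tests in this PR but not taken from the implementation.

```rust
/// Stand-in for an HTTP client; only records whether TLS is verified.
#[derive(Debug)]
pub struct Client {
    pub verify_tls: bool,
}

/// Hold two clients and pick one per request (hypothetical sketch).
pub struct MultiplexedClient {
    secure: Client,
    insecure: Client,
    trusted_hosts: Vec<String>,
}

impl MultiplexedClient {
    pub fn new(trusted_hosts: Vec<String>) -> Self {
        Self {
            secure: Client { verify_tls: true },
            insecure: Client { verify_tls: false },
            trusted_hosts,
        }
    }

    /// Route to the non-verifying client only for trusted hosts.
    pub fn for_host(&self, host: &str, port: Option<u16>) -> &Client {
        let authority = match port {
            Some(p) => format!("{host}:{p}"),
            None => host.to_string(),
        };
        if self.trusted_hosts.iter().any(|t| t == host || *t == authority) {
            &self.insecure
        } else {
            &self.secure
        }
    }
}
```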

Closes https://github.com/astral-sh/uv/issues/1339.

## Test Plan

Created a self-signed certificate, and ran `python3 -m http.server --bind 127.0.0.1 4443 --directory . --certfile cert.pem --keyfile key.pem` from the packse index directory.

Verified that `cargo run pip install transitive-yanked-and-unyanked-dependency-a-0abad3b6 --index-url https://127.0.0.1:8443/simple-html` failed with:

```
error: Request failed after 3 retries
Caused by: error sending request for url (https://127.0.0.1:8443/simple-html/transitive-yanked-and-unyanked-dependency-a-0abad3b6/)
Caused by: client error (Connect)
Caused by: invalid peer certificate: Other(OtherError(CaUsedAsEndEntity))
```

Verified that `cargo run pip install transitive-yanked-and-unyanked-dependency-a-0abad3b6 --index-url 'https://127.0.0.1:8443/simple-html' --trusted-host '127.0.0.1:8443'` failed with the expected error (invalid resolution) and made valid requests.

Verified that `cargo run pip install transitive-yanked-and-unyanked-dependency-a-0abad3b6 --index-url 'https://127.0.0.1:8443/simple-html' --trusted-host '127.0.0.2' -n` also failed.

This is a fallback mode that we supported when we decided to use PEP 517

builds by default. I can't find a single reference to it on GitHub or in

our issue tracker, so I want to drop support for it as part of v0.3.0.

## Summary

We now persist the `ResolverInstallerOptions` when writing out a tool receipt. When upgrading, we grab the saved options and merge them with the command-line arguments and user-level filesystem settings (CLI > receipt > filesystem).
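The precedence can be expressed with `Option::or`: each field is taken from the first source that sets it. `Options` is a hypothetical two-field stand-in for `ResolverInstallerOptions`.

```rust
/// Hypothetical subset of resolver options; every field is optional so
/// that "unset" can fall through to the next source.
#[derive(Debug, Clone, Default, PartialEq)]
pub struct Options {
    pub index_url: Option<String>,
    pub prerelease: Option<bool>,
}

impl Options {
    /// Fill unset fields in `self` from `other`; `self` has priority.
    pub fn combine(self, other: Options) -> Options {
        Options {
            index_url: self.index_url.or(other.index_url),
            prerelease: self.prerelease.or(other.prerelease),
        }
    }
}

/// CLI > receipt > filesystem.
pub fn upgrade_options(cli: Options, receipt: Options, filesystem: Options) -> Options {
    cli.combine(receipt).combine(filesystem)
}
```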

## Summary

This PR rewrites the `MarkerTree` type to use algebraic decision

diagrams (ADD). This has many benefits:

- The diagram is canonical for a given marker function. It is impossible

to create two functionally equivalent marker trees that don't refer to

the same underlying ADD. This also means that any trivially true or

unsatisfiable markers are represented by the same constants.

- The diagram can handle complex operations (conjunction/disjunction) in

polynomial time, as well as constant-time negation.

- The diagram can be converted to a simplified DNF form for user-facing

output.
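To illustrate the canonicity property, here is a toy reduced ordered binary decision diagram with a hash-consing table. It is not uv's implementation: uv's diagram branches on marker values such as version ranges, whereas this sketch branches on plain boolean variables, and it omits the memoization of `apply` that makes conjunction and disjunction polynomial, as well as any trick for constant-time negation. Because `mk` never creates a redundant or duplicate node, equivalent formulas end up as the same `NodeId`.

```rust
use std::collections::HashMap;

/// Node IDs; 0 and 1 are the terminals FALSE and TRUE.
pub type NodeId = usize;
pub const FALSE: NodeId = 0;
pub const TRUE: NodeId = 1;

/// Toy reduced ordered BDD over boolean variables (not uv's ADD).
pub struct Bdd {
    nodes: Vec<(usize, NodeId, NodeId)>,              // (var, low, high)
    unique: HashMap<(usize, NodeId, NodeId), NodeId>, // hash-consing table
}

impl Bdd {
    pub fn new() -> Self {
        // Terminals use var = usize::MAX so they sort below every real variable.
        Bdd { nodes: vec![(usize::MAX, 0, 0), (usize::MAX, 1, 1)], unique: HashMap::new() }
    }

    /// Create (or reuse) a node, skipping tests whose branches are identical.
    fn mk(&mut self, var: usize, low: NodeId, high: NodeId) -> NodeId {
        if low == high {
            return low;
        }
        if let Some(&id) = self.unique.get(&(var, low, high)) {
            return id;
        }
        let id = self.nodes.len();
        self.nodes.push((var, low, high));
        self.unique.insert((var, low, high), id);
        id
    }

    pub fn var(&mut self, var: usize) -> NodeId {
        self.mk(var, FALSE, TRUE)
    }

    /// Shannon expansion on the lowest variable of either operand.
    fn apply(&mut self, op: fn(bool, bool) -> bool, u: NodeId, v: NodeId) -> NodeId {
        if u <= TRUE && v <= TRUE {
            return if op(u == TRUE, v == TRUE) { TRUE } else { FALSE };
        }
        let (uv, ul, uh) = self.nodes[u];
        let (vv, vl, vh) = self.nodes[v];
        let var = uv.min(vv);
        // An operand that doesn't test `var` is the same on both branches.
        let (ul, uh) = if uv == var { (ul, uh) } else { (u, u) };
        let (vl, vh) = if vv == var { (vl, vh) } else { (v, v) };
        let low = self.apply(op, ul, vl);
        let high = self.apply(op, uh, vh);
        self.mk(var, low, high)
    }

    pub fn and(&mut self, u: NodeId, v: NodeId) -> NodeId {
        self.apply(|a, b| a && b, u, v)
    }

    pub fn or(&mut self, u: NodeId, v: NodeId) -> NodeId {
        self.apply(|a, b| a || b, u, v)
    }

    /// Negation as XOR with TRUE.
    pub fn not(&mut self, u: NodeId) -> NodeId {
        self.apply(|a, b| a != b, u, TRUE)
    }
}
```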

The new representation gives us a lot more confidence in our marker

operations and simplification, which is proving to be very important

(see https://github.com/astral-sh/uv/pull/5733 and

https://github.com/astral-sh/uv/pull/5163).

Unfortunately, it is not easy to split this PR into multiple commits

because it is a large rewrite of the `marker` module. I'd suggest

reading through the `marker/algebra.rs`, `marker/simplify.rs`, and

`marker/tree.rs` files for the new implementation, as well as the

updated snapshots to verify how the new simplification rules work in

practice. However, a few other things were changed:

- [We now use release-only comparisons for `python_full_version`, where we previously only did so for `python_version`](https://github.com/astral-sh/uv/blob/ibraheem/canonical-markers/crates/pep508-rs/src/marker/algebra.rs#L522). I'm unsure how marker operations should work in the presence of pre-release versions if we decide that this is incorrect.

- [Meaningless marker expressions are now ignored](https://github.com/astral-sh/uv/blob/ibraheem/canonical-markers/crates/pep508-rs/src/marker/parse.rs#L502). This means that a marker such as `'x' == 'x'` will always evaluate to `true` (as if the expression did not exist), whereas we previously treated this as always `false`. Its negation, however, remains `false`.

- [Unsatisfiable markers are written as `python_version < '0'`](https://github.com/astral-sh/uv/blob/ibraheem/canonical-markers/crates/pep508-rs/src/marker/tree.rs#L1329).

- The `PubGrubSpecifier` type has been moved to the new `uv-pubgrub` crate, shared by `pep508-rs` and `uv-resolver`. `pep508-rs` also depends on the `pubgrub` crate for the `Range` type; we probably want to move `pubgrub::Range` into a separate crate to break this, but I don't think that should block this PR (cc @konstin).

There is still some remaining work here that I decided to leave for now

for the sake of unblocking some of the related work on the resolver.

- We still use `Option<MarkerTree>` throughout uv, which is unnecessary

now that `MarkerTree::TRUE` is canonical.

- The `MarkerTree` type is now interned globally and can potentially

implement `Copy`. However, it's unclear if we want to add more

information to marker trees that would make it `!Copy`. For example, we

may wish to attach extra and requires-python environment information to

avoid simplifying after construction.

- We don't currently combine `python_full_version` and `python_version`

markers.

- I also have not spent too much time investigating performance and

there is probably some low-hanging fruit. Many of the test cases I did

run actually saw large performance improvements due to the markers being

simplified internally, reducing the stress on the old `normalize`

routine, especially for the extremely large markers seen in

`transformers` and other projects.

Resolves https://github.com/astral-sh/uv/issues/5660,

https://github.com/astral-sh/uv/issues/5179.

## Summary

I think this seems reasonable... Otherwise, we might not go back to PyPI

to revalidate the list of available versions despite the user passing

`--upgrade`.

## Summary

It's hard for me to imagine a scenario in which a user passed

`--reinstall`, but wanted us to keep respecting cached data for a

package. For example, to actually "rebuild and reinstall" an editable

today, you have to pass both `--reinstall` and `--refresh`.

This PR makes `--reinstall` imply `--refresh`, so we always validate

that the cached data is fresh.

Closes https://github.com/astral-sh/uv/issues/5424.

## Summary

This PR modifies the lockfile to include the impactful resolution

settings, like the resolution and pre-release mode. If any of those

values change, we want to ignore the existing lockfile. Otherwise,

`--resolution lowest-direct` will typically have no effect, which is

really unintuitive.

Closes https://github.com/astral-sh/uv/issues/5226.
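A sketch of the check, with hypothetical types covering only the resolution and pre-release modes (and only some of their variants). Treating a lockfile that records no settings as stale is an assumption.

```rust
/// Hypothetical subset of the settings recorded in the lockfile.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum ResolutionMode {
    Highest,
    Lowest,
    LowestDirect,
}

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum PrereleaseMode {
    Disallow,
    Allow,
    IfNecessary,
}

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct LockOptions {
    pub resolution: ResolutionMode,
    pub prerelease: PrereleaseMode,
}

/// Reuse the existing lockfile only if it was produced with the same
/// settings; `None` means the lockfile recorded no settings (treated as stale).
pub fn can_reuse_lock(locked: Option<LockOptions>, requested: LockOptions) -> bool {
    locked == Some(requested)
}
```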

## Summary

This is an alternative to `--require-hashes` which will validate a hash

if it's present, but ignore requirements that omit hashes or are absent

from the lockfile entirely.

So, e.g., transitive dependencies that are missing will _not_ error; nor

will dependencies that are included but lack a hash.

Closes https://github.com/astral-sh/uv/issues/3305.
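A sketch of the difference between the modes. `HashPolicy` and `check` are hypothetical, and `expected` maps each requirement name to the hashes listed for it; a name with no entry (or an empty list) has no hash to compare.

```rust
use std::collections::HashMap;

/// Hypothetical hash-checking modes.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum HashPolicy {
    /// No hash checking at all.
    Off,
    /// `--verify-hashes`: check hashes that are present, skip the rest.
    Verify,
    /// `--require-hashes`: every requirement must have a matching hash.
    Require,
}

pub fn check(
    policy: HashPolicy,
    expected: &HashMap<&str, Vec<&str>>,
    name: &str,
    actual: &str,
) -> Result<(), String> {
    let hashes = expected.get(name).filter(|h| !h.is_empty());
    match (policy, hashes) {
        (HashPolicy::Off, _) => Ok(()),
        // Nothing to compare against, so `--verify-hashes` accepts it.
        (HashPolicy::Verify, None) => Ok(()),
        (HashPolicy::Require, None) => Err(format!("missing hash for {name}")),
        (_, Some(h)) if h.contains(&actual) => Ok(()),
        (_, Some(_)) => Err(format!("hash mismatch for {name}")),
    }
}
```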

This is an attempt to solve https://github.com/astral-sh/uv/issues/ by

applying the extra marker of the requirement to overrides and

constraints.

Say that `a` has the requirements

```

b==1; python_version < "3.10"

c==1; extra == "feature"

```

and overrides

```

b==2; python_version < "3.10"

b==3; python_version >= "3.10"

c==2; python_version < "3.10"

c==3; python_version >= "3.10"

```

Our current strategy is to discard the markers in the original

requirements. This means that on 3.12 for `a` we install `b==3`, but it

also means that we add `c` to `a` without `a[feature]`, causing #4826.

With this PR, the new requirements become:

```

b==2; python_version < "3.10"

b==3; python_version >= "3.10"

c==2; python_version < "3.10" and extra == "feature"

c==3; python_version >= "3.10" and extra == "feature"

```

This allows overriding markers while preserving optional dependencies as optional.

Fixes #4826
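A string-level sketch of the rule: the override keeps its own marker, and the original requirement's `extra` clause is ANDed onto it. `apply_override` is hypothetical; real code operates on parsed marker trees rather than strings, which is also why this version has to parenthesize a disjunction by hand.

```rust
/// Combine an override's marker with the `extra` of the requirement it replaces.
pub fn apply_override(override_marker: Option<&str>, original_extra: Option<&str>) -> Option<String> {
    let extra = original_extra.map(|e| format!("extra == \"{e}\""));
    match (override_marker, extra) {
        // Keep `a or b` grouped so the extra applies to the whole marker.
        (Some(m), Some(e)) if m.contains(" or ") => Some(format!("({m}) and {e}")),
        (Some(m), Some(e)) => Some(format!("{m} and {e}")),
        (Some(m), None) => Some(m.to_string()),
        (None, e) => e,
    }
}
```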

Downstack PR: #4515. Upstack PR: #4481.

Consider these two cases:

A:

```

werkzeug==2.0.0

werkzeug @ 960bb4017c4aed12b5ed8b78e0153e/Werkzeug-2.0.0-py3-none-any.whl

```

B:

```toml

dependencies = [

"iniconfig == 1.1.1 ; python_version < '3.12'",

"iniconfig @ git+https://github.com/pytest-dev/iniconfig@93f5930e668c0d1ddf4597e38dd0dea4e2665e7a ; python_version >= '3.12'",

]

```

In the first case, `werkzeug==2.0.0` should be overridden by the url. In

the second case `iniconfig == 1.1.1` is in a different fork and must

remain a registry distribution.

That means the conversion from `Requirement` to `PubGrubPackage` is

dependent on the other requirements of the package. We can either look

into the other packages immediately, or we can move the forking before

the conversion to `PubGrubDependencies` instead of after. Either approach requires a flat list of `Requirement`s to use. This refactoring gives us this list.

I'll add support for both of the above cases in the forking urls branch

before merging this PR. I also have to move constraints over to this.

With the change, we remove the special casing of workspace dependencies

and resolve `tool.uv` for all git and directory distributions. This

gives us support for non-editable workspace dependencies and path

dependencies in other workspaces. It removes a lot of special casing

around workspaces. These changes are the groundwork for supporting

`tool.uv` with dynamic metadata.

The basis for this change is moving `Requirement` from `distribution-types` to `pypi-types` and the lowering logic from `uv-requirements` to `uv-distribution`. These changes should be split out into separate PRs.

I've included an example workspace `albatross-root-workspace2` where

`bird-feeder` depends on `a` from another workspace `ab`. There's a

bunch of failing tests and regressed error messages that still need

fixing. It does fix the audited package count for the workspace tests.

## Summary

I haven't tested on Windows yet, but the idea here is that we should use

a portable representation when printing paths.

I decided to limit the scope here to paths that we write to output

files.

Closes https://github.com/astral-sh/uv/issues/3800.

## Summary

This PR takes the functions used in `pip install`, moves them into a common module, and then replaces all the `pip sync` logic with calls into those functions. The net effect is that `pip install` and `pip sync` share far more code and demonstrate much more consistent behavior.

Closes https://github.com/astral-sh/uv/issues/3555.

## Summary

This PR adds editables using a new source type (`editable+...`), and

then extracts the editables from the lockfile in `uv sync`.

Closes https://github.com/astral-sh/uv/issues/3695.

## Summary

This PR consolidates the concurrency limits used throughout `uv` and

exposes two limits, `UV_CONCURRENT_DOWNLOADS` and

`UV_CONCURRENT_BUILDS`, as environment variables.

Currently, `uv` has a number of concurrent streams that it buffers using

relatively arbitrary limits for backpressure. However, many of these

limits are conflated. We run a relatively small number of tasks overall

and should start most things as soon as possible. What we really want to

limit are three separate operations:

- File I/O. This is managed by tokio's blocking pool and we should not

really have to worry about it.

- Network I/O.

- Python build processes.

Because the current limits span a broad range of tasks, it's possible

that a limit meant for network I/O is occupied by tasks performing

builds, reading from the file system, or even waiting on a `OnceMap`. We

also don't limit build processes that end up being required to perform a

download. While this may not pose a performance problem because our

limits are relatively high, it does mean that the limits do not do what

we want, making it tricky to expose them to users

(https://github.com/astral-sh/uv/issues/1205,

https://github.com/astral-sh/uv/issues/3311).

After this change, the limits on network I/O and build processes are

centralized and managed by semaphores. All other tasks are unbuffered

(note that these tasks are still bounded, so backpressure should not be

a problem).
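A blocking sketch of the design, since the example uses only the standard library (uv itself is async). Two independent semaphores mean a build can never hold a download slot. The `Semaphore`, `Limits`, and `limit_from_env` names are hypothetical, and the fallback values `50` and `4` are placeholders rather than uv's actual defaults.

```rust
use std::sync::{Condvar, Mutex};

/// A counting semaphore built from std primitives.
pub struct Semaphore {
    permits: Mutex<usize>,
    available: Condvar,
}

/// Holding a permit occupies one slot; dropping it frees the slot.
pub struct Permit<'a>(&'a Semaphore);

impl Semaphore {
    pub fn new(permits: usize) -> Self {
        Semaphore { permits: Mutex::new(permits), available: Condvar::new() }
    }

    /// Block until a permit is free, then take it.
    pub fn acquire(&self) -> Permit<'_> {
        let mut permits = self.permits.lock().unwrap();
        while *permits == 0 {
            permits = self.available.wait(permits).unwrap();
        }
        *permits -= 1;
        Permit(self)
    }
}

impl Drop for Permit<'_> {
    /// Return the permit and wake one waiter.
    fn drop(&mut self) {
        *self.0.permits.lock().unwrap() += 1;
        self.0.available.notify_one();
    }
}

/// Read a limit from the environment, ignoring unparsable or zero values
/// (a zero limit would deadlock) and falling back to `default`.
pub fn limit_from_env(var: &str, default: usize) -> usize {
    std::env::var(var)
        .ok()
        .and_then(|v| v.parse().ok())
        .filter(|&n| n > 0)
        .unwrap_or(default)
}

/// Separate limits for network I/O and build processes.
pub struct Limits {
    pub downloads: Semaphore,
    pub builds: Semaphore,
}

impl Limits {
    pub fn from_env() -> Self {
        Limits {
            downloads: Semaphore::new(limit_from_env("UV_CONCURRENT_DOWNLOADS", 50)),
            builds: Semaphore::new(limit_from_env("UV_CONCURRENT_BUILDS", 4)),
        }
    }
}
```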

We now use the getters and setters everywhere.

There were some places where we wanted to build a `MarkerEnvironment`

out of whole cloth, usually in tests. To facilitate those use cases, we

add a `MarkerEnvironmentBuilder` that provides a convenient constructor.

It's basically like a `MarkerEnvironment::new`, but with named parameters. That's useful here because there are so many fields (and many of them have the same type).
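A trimmed-down sketch of the builder idea, with three fields instead of the full set and no validation. The types are hypothetical stand-ins; the point is that a struct literal with named `&str` fields can't silently swap two arguments the way a positional constructor can.

```rust
/// Hypothetical, trimmed-down environment with private fields and getters.
#[derive(Debug)]
pub struct MarkerEnvironment {
    python_version: String,
    sys_platform: String,
    platform_machine: String,
}

impl MarkerEnvironment {
    pub fn python_version(&self) -> &str {
        &self.python_version
    }
    pub fn sys_platform(&self) -> &str {
        &self.sys_platform
    }
    pub fn platform_machine(&self) -> &str {
        &self.platform_machine
    }
}

/// Every field has the same type, so naming them prevents mix-ups.
pub struct MarkerEnvironmentBuilder<'a> {
    pub python_version: &'a str,
    pub sys_platform: &'a str,
    pub platform_machine: &'a str,
}

impl From<MarkerEnvironmentBuilder<'_>> for MarkerEnvironment {
    fn from(b: MarkerEnvironmentBuilder<'_>) -> Self {
        MarkerEnvironment {
            python_version: b.python_version.to_string(),
            sys_platform: b.sys_platform.to_string(),
            platform_machine: b.platform_machine.to_string(),
        }
    }
}
```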

## Summary

This is a universal environment variable used to determine the macOS deployment target. We now respect it in `--python-platform`, so we default to 12.0, but users can override it as needed.

## Summary

This PR follows Cargo's strategy for merging configuration, albeit in a

more limited way (we don't support as many configuration locations).

Specifically, we merge the user configuration with the workspace

configuration if both are present. The workspace configuration has

priority, such that we take values from the workspace configuration and

ignore those in the user configuration if both are specified for a given

setting -- with the exception of arrays and maps, which are

concatenated.

For now, if a user provides a configuration file with `--config-file`,

we _don't_ merge in the user settings.

See:

https://doc.rust-lang.org/cargo/reference/config.html#hierarchical-structure.

Closes #3420.
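A sketch of the merge rule with a hypothetical two-field `Settings` type: scalars take the workspace value when present, and arrays are concatenated. Placing workspace entries before user entries is an assumption.

```rust
/// Hypothetical subset of settings: one scalar and one array.
#[derive(Debug, Default)]
pub struct Settings {
    pub index_url: Option<String>,
    pub extra_index_url: Option<Vec<String>>,
}

/// Merge `user` into `workspace`; the workspace has priority for scalars.
pub fn merge(workspace: Settings, user: Settings) -> Settings {
    let extra_index_url = match (workspace.extra_index_url, user.extra_index_url) {
        // Both set: concatenate instead of overriding.
        (Some(mut ws), Some(us)) => {
            ws.extend(us);
            Some(ws)
        }
        (ws, us) => ws.or(us),
    };
    Settings {
        index_url: workspace.index_url.or(user.index_url),
        extra_index_url,
    }
}
```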

## Introduction

PEP 621 is limited. Specifically, it lacks

* Relative path support

* Editable support

* Workspace support

* Index pinning or any sort of index specification

The semantics of URLs are a custom extension: PEP 440 does not specify how to use Git references or subdirectories; instead, pip has a custom stringly-typed format. We need to somehow support these while still staying compatible with PEP 621.

## `tool.uv.sources`

Drawing inspiration from Cargo, Poetry, and Rye, we add `tool.uv.sources` and (for now, as a stub only) `tool.uv.workspace`:

```toml

[project]

name = "albatross"

version = "0.1.0"

dependencies = [

"tqdm >=4.66.2,<5",

"torch ==2.2.2",

"transformers[torch] >=4.39.3,<5",

"importlib_metadata >=7.1.0,<8; python_version < '3.10'",

"mollymawk ==0.1.0"

]

[tool.uv.sources]

tqdm = { git = "https://github.com/tqdm/tqdm", rev = "cc372d09dcd5a5eabdc6ed4cf365bdb0be004d44" }

importlib_metadata = { url = "https://github.com/python/importlib_metadata/archive/refs/tags/v7.1.0.zip" }

torch = { index = "torch-cu118" }

mollymawk = { workspace = true }

[tool.uv.workspace]

include = [

"packages/mollymawk"

]

[tool.uv.indexes]

torch-cu118 = "https://download.pytorch.org/whl/cu118"

```

See `docs/specifying_dependencies.md` for a detailed explanation of the format. The basic gist is that `project.dependencies` is what ends up on PyPI, while `tool.uv.sources` are your non-published additions. We do support the full range of PEP 508; we just hide it in the docs and prefer the exploded table for easier readability and less confusion with actual URL parts.
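A sketch of the lowering step: a `project.dependencies` entry stays a registry requirement unless `tool.uv.sources` has an entry for the same name. The `Source` and `Lowered` types are hypothetical simplifications of the formats in the example above, and package-name normalization is skipped.

```rust
use std::collections::HashMap;

/// A subset of `tool.uv.sources` entries (hypothetical shapes).
#[derive(Debug, Clone, PartialEq)]
pub enum Source {
    Git { git: String, rev: Option<String> },
    Url { url: String },
    Path { path: String, editable: bool },
    Registry { index: String },
    Workspace,
}

/// What a dependency lowers to.
#[derive(Debug, PartialEq)]
pub enum Lowered {
    /// Plain requirement from `project.dependencies`, optionally pinned to an index.
    Registry { name: String, specifier: String, index: Option<String> },
    /// Direct URL reference built from the source table.
    Direct { name: String, url: String },
    /// Local path, kept relative here; resolving it is out of scope.
    Path { name: String, path: String, editable: bool },
    Workspace { name: String },
}

/// A source entry wins over the published requirement; in this sketch the
/// version specifier is dropped for non-registry sources.
pub fn lower(name: &str, specifier: &str, sources: &HashMap<String, Source>) -> Lowered {
    let name_s = name.to_string();
    match sources.get(name) {
        None => Lowered::Registry { name: name_s, specifier: specifier.to_string(), index: None },
        Some(Source::Registry { index }) => Lowered::Registry {
            name: name_s,
            specifier: specifier.to_string(),
            index: Some(index.clone()),
        },
        Some(Source::Git { git, rev }) => {
            let url = match rev {
                Some(rev) => format!("git+{git}@{rev}"),
                None => format!("git+{git}"),
            };
            Lowered::Direct { name: name_s, url }
        }
        Some(Source::Url { url }) => Lowered::Direct { name: name_s, url: url.clone() },
        Some(Source::Path { path, editable }) => {
            Lowered::Path { name: name_s, path: path.clone(), editable: *editable }
        }
        Some(Source::Workspace) => Lowered::Workspace { name: name_s },
    }
}
```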

This format should eventually be able to subsume requirements.txt's current use cases. While we will continue to support the legacy `uv pip` interface, this is a piece of uv's own top-level interface. Together with `uv run` and a lockfile format, you should only need to write `pyproject.toml` and do `uv run`, which generates/uses/updates your lockfile behind the scenes, with no more pip-style requirements involved. It also lays the groundwork for implementing index pinning.

## Changes

This PR implements:

* Reading and lowering `project.dependencies`,

`project.optional-dependencies` and `tool.uv.sources` into a new

requirements format, including:

* Git dependencies

* Url dependencies

* Path dependencies, including relative and editable

* `pip install` integration

* Error reporting for invalid `tool.uv.sources`

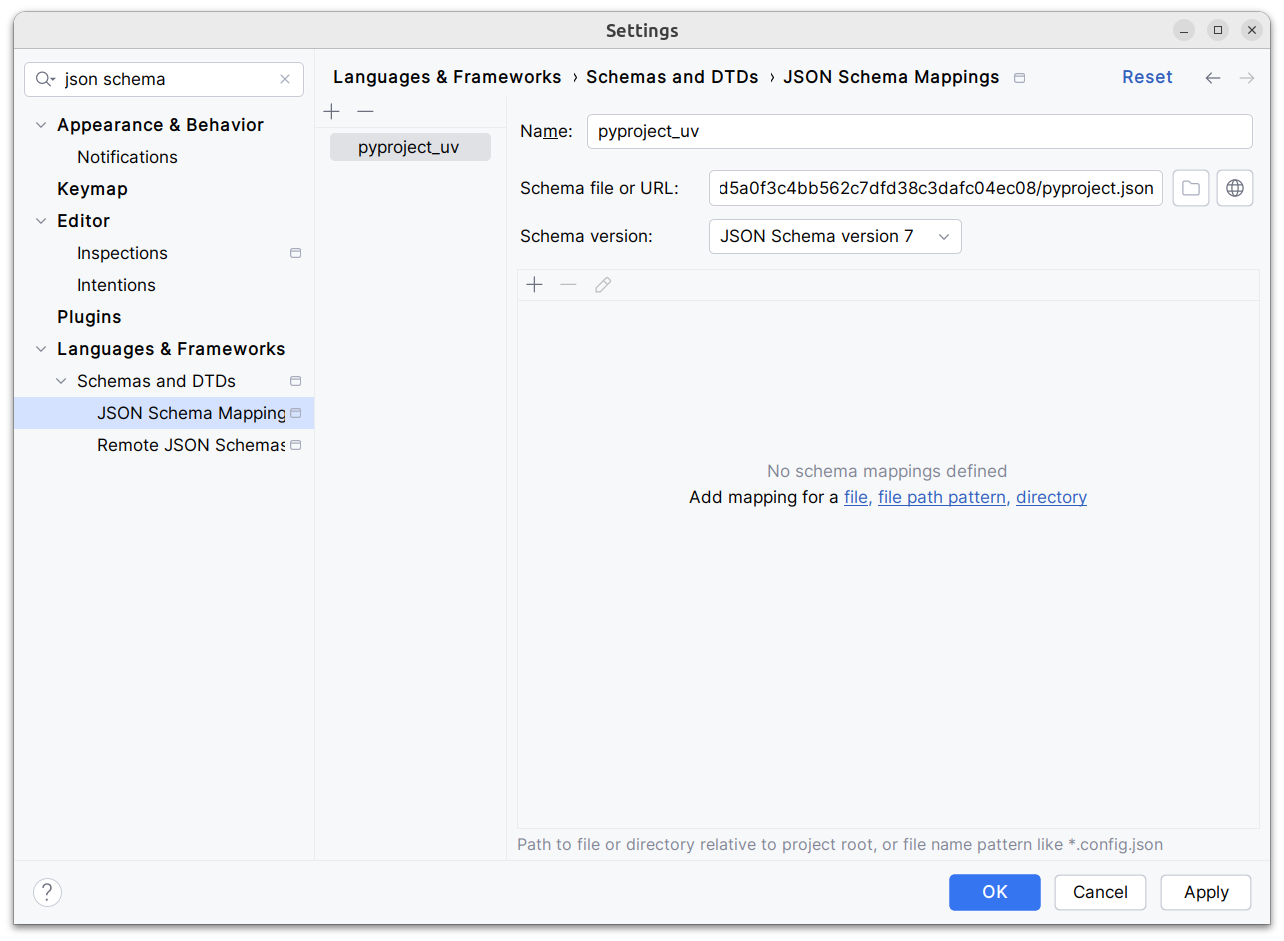

* JSON schema integration (works in PyCharm; see below)

* Draft user-level docs (see `docs/specifying_dependencies.md`)

It does not implement:

* `pip compile` testing (deprioritized in favor of our own lockfile)

* Index pinning (stub definitions only)

* Development dependencies

* Workspace support (stub definitions only)

* Overrides in pyproject.toml

* Patching/replacing dependencies

One technically breaking change is that we now require user-provided `pyproject.toml` files to be valid with respect to PEP 621. Included files still fall back to PEP 517. That means `pip install -r pyproject.toml` requires it to be valid, while `pip install -r requirements.txt` with `-e .` as content falls back to PEP 517 as before.

## Implementation

The `pep508` requirement is replaced by a new `UvRequirement` (name up

for bikeshedding, not particularly attached to the uv prefix). The still

existing `pep508_rs::Requirement` type is a url format copied from pip's

requirements.txt and doesn't appropriately capture all features we

want/need to support. The bulk of the diff is changing the requirement

type throughout the codebase.

We still use `VerbatimUrl` in many places, where we would expect a

parsed/decomposed url type, specifically:

* Reading core metadata, except for top-level `pyproject.toml` files: if the URL isn't supported, we fail a step later instead.

* Allowed `Urls`.

* `PackageId` with a custom `CanonicalUrl` comparison, instead of

canonicalizing urls eagerly.

* `PubGrubPackage`: We eventually convert the `VerbatimUrl` back to a

`Dist` (`Dist::from_url`), instead of remembering the url.

* Source dist types: We use verbatim url even though we know and require

that these are supported urls we can and have parsed.

I tried to improve the situation by replacing `VerbatimUrl`, but these changes would require massive, invasive changes (see e.g. https://github.com/astral-sh/uv/pull/3253). A main problem is the `VersionOrUrl` type and applying overrides, which assume the same requirement/URL type everywhere. In its current form, this PR increases this tech debt.

I've tried to split off PRs and commits, but the main refactoring is

still a single monolith commit to make it compile and the tests pass.

## Demo

Adding d1ae3b85d5/pyproject.json as a JSON schema (v7) to PyCharm for `pyproject.toml`, you can try the IDE support already:

[dove.webm](https://github.com/astral-sh/uv/assets/6826232/c293c272-c80b-459d-8c95-8c46a8d198a1)

In *some* places in our crates, `serde` (and `rkyv`) are optional

dependencies. I believe this was done out of reasons of "good sense,"

that is, it follows a Rust ecosystem pattern where serde integration

tends to be an opt-in crate feature. (And similarly for `rkyv`.)

However, ultimately, `uv` itself requires `serde` and `rkyv` to

function. Since our crates are strictly internal, there are limited

consumers for our crates without `serde` (and `rkyv`) enabled. I think

one possibility is that optional `serde` (and `rkyv`) integration means

that someone can do this:

```
cargo test -p pep440_rs
```

And this will run tests _without_ `serde` or `rkyv` enabled. That, in turn, could lead to faster iteration by reducing compile times. But I'm not sure this is worth supporting. The iterative compilation times of individual crates are probably fast enough in debug mode, even with `serde` and `rkyv` enabled, because `serde` and `rkyv` themselves shouldn't need to be recompiled in most cases. On `main`:

```
from-scratch: `cargo test -p pep440_rs --lib` 0.685s
incremental: `cargo test -p pep440_rs --lib` 0.278s
from-scratch: `cargo test -p pep440_rs --features serde,rkyv --lib` 3.948s
incremental: `cargo test -p pep440_rs --features serde,rkyv --lib` 0.321s
```

So while a from-scratch build does take significantly longer (3.948s vs. 0.685s), an incremental build is about the same (0.321s vs. 0.278s).

The benefit of this change is twofold:

1. It brings our crates into alignment with "reality." In particular, some crates were _implicitly_ relying on `serde` being enabled without explicitly declaring it. This technically means that our `Cargo.toml`s were wrong in some cases, but it is hard to observe because of feature unification in a Cargo workspace.
2. We no longer need to deal with the cognitive burden of writing `#[cfg_attr(feature = "serde", ...)]` everywhere.

## Summary

This index strategy resolves every package to the latest possible

version across indexes. If a version is in multiple indexes, the first

available index is selected.

Implements #3137

This closely matches pip.
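The selection rule can be sketched as follows. The sketch assumes each index is modeled as the list of versions it offers for a single package, with indexes given in priority order. `Index`, `best_match`, and the `(u64, u64, u64)` version tuples are hypothetical simplifications rather than uv's real types.

```rust
/// Hypothetical package index: a name plus the versions it offers for a
/// single package. Versions are simplified to numeric tuples.
struct Index {
    name: &'static str,
    versions: Vec<(u64, u64, u64)>,
}

/// Pick the latest version available across all indexes. If the same
/// version appears in several indexes, the first index (in priority
/// order) that provides it is kept.
fn best_match(indexes: &[Index]) -> Option<((u64, u64, u64), &'static str)> {
    let mut best: Option<((u64, u64, u64), &'static str)> = None;
    for index in indexes {
        for &version in &index.versions {
            // Strictly greater: an equal version found in a later index
            // does not replace the earlier index's entry.
            if best.map_or(true, |(current, _)| version > current) {
                best = Some((version, index.name));
            }
        }
    }
    best
}
```

The strict `>` comparison implements the tie-breaking rule. An equal version from a later index never displaces the earlier one, but a strictly newer version does.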

## Test Plan

Good question. I'm hesitant to use my certifi example here, since that

would inevitably break when torch removes this package. Please comment!

## Summary

Based on PEP 508, `platform_machine` should be the same as the output of `platform.machine()`:

https://peps.python.org/pep-0508/#environment-markers

On my M2 Mac:

```python

In [1]: import platform

In [2]: platform.machine()

Out[2]: 'arm64'

```

In napari, we also use `arm64` in requirements, and it works as expected:

9fcf63e69a/pyproject.toml (L120)
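A minimal sketch of the expected mapping follows. It assumes a target is described by an OS and an architecture. The `platform_machine` marker should carry whatever `platform.machine()` reports on that OS: `arm64` on Apple Silicon macOS, but `aarch64` on ARM Linux. `Os`, `Arch`, and `platform_machine` are hypothetical names for illustration.

```rust
#[derive(Clone, Copy)]
enum Os {
    Linux,
    Macos,
}

#[derive(Clone, Copy)]
enum Arch {
    Aarch64,
    X86_64,
}

/// The value `platform.machine()` would report for a target, which is
/// what PEP 508's `platform_machine` marker is compared against.
fn platform_machine(os: Os, arch: Arch) -> &'static str {
    match (os, arch) {
        // macOS reports Apple Silicon as `arm64`, not `aarch64`.
        (Os::Macos, Arch::Aarch64) => "arm64",
        (Os::Linux, Arch::Aarch64) => "aarch64",
        (_, Arch::X86_64) => "x86_64",
    }
}
```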


## Summary

I initially implemented this by allowing arbitrary

`x86_64-manylinux_x_y`, but it makes the Clap, Serde, and Schemars

definitions more complicated, _and_ makes it harder for the user.

Ultimately, manylinux itself only provides images for 2_17 and 2_28, so

this seems like it should be sufficient.
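The closed-set approach can be sketched as an enum with one variant per published manylinux image, so only two spellings ever need to be parsed, serialized, or documented. `ManylinuxTarget` is a hypothetical name. The hand-written `FromStr`/`Display` impls (standard library only) stand in for the Clap, Serde, and Schemars derives, which could be attached to a similar enum.

```rust
use std::fmt;
use std::str::FromStr;

/// Hypothetical closed set of manylinux targets: only the glibc versions
/// for which manylinux publishes images (2_17 and 2_28).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum ManylinuxTarget {
    X86_64Manylinux217,
    X86_64Manylinux228,
}

impl FromStr for ManylinuxTarget {
    type Err = String;

    fn from_str(s: &str) -> Result<Self, Self::Err> {
        match s {
            "x86_64-manylinux_2_17" => Ok(Self::X86_64Manylinux217),
            "x86_64-manylinux_2_28" => Ok(Self::X86_64Manylinux228),
            // Arbitrary `x86_64-manylinux_x_y` values are rejected.
            other => Err(format!("unsupported target: {other}")),
        }
    }
}

impl fmt::Display for ManylinuxTarget {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        let name = match self {
            Self::X86_64Manylinux217 => "x86_64-manylinux_2_17",
            Self::X86_64Manylinux228 => "x86_64-manylinux_2_28",
        };
        f.write_str(name)
    }
}
```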

Closes https://github.com/astral-sh/uv/issues/3222.

## Summary

macOS 11 has been EOL for 7 months, and it seems to be common to publish ARM-only wheels targeting macOS 12.0+. I think 12.0 is therefore a better default for ARM Macs.

Closes https://github.com/astral-sh/uv/issues/3227.
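The reasoning can be illustrated with a simplified sketch of macOS platform-tag compatibility. It assumes, as for macOS 11 and later, that a target with major version N accepts arm64 wheels tagged for every major version from N down to 11. The helper name `arm64_platform_tags` is hypothetical. Real tag generation also includes `universal2` and other binary formats, which are omitted here. With an 11.0 default, a wheel tagged `macosx_12_0_arm64` is never considered compatible.

```rust
/// Simplified sketch: arm64 platform tags accepted by a macOS target with
/// the given major version, most specific first. macOS 11 is the first
/// release with arm64 support, so the list stops there.
fn arm64_platform_tags(target_major: u16) -> Vec<String> {
    (11..=target_major)
        .rev()
        .map(|major| format!("macosx_{major}_0_arm64"))
        .collect()
}
```

For example, `arm64_platform_tags(12)` returns `["macosx_12_0_arm64", "macosx_11_0_arm64"]`, while `arm64_platform_tags(11)` returns only `["macosx_11_0_arm64"]`.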

## Test Plan

```
cargo run pip install scikit-learn==1.3.2 --python-platform macos --no-build
```

Adds hidden `--preview` / `--no-preview` flags with `UV_PREVIEW`

environment variable support. Copies the `PreviewMode` type from Ruff.

Does a little bit of extra work to port `uv run` to the new settings

model.

Note that we allow `uv run` invocations without preview enabled and only use the flag's presence to toggle an experimental warning.
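A minimal sketch of the settings resolution follows. It assumes an explicit flag takes precedence over the environment variable, and that `1` or `true` in `UV_PREVIEW` enables preview. `PreviewMode` is assumed here to be a two-state enum like Ruff's. `resolve_preview`, `run_warning`, and the accepted environment values are hypothetical. The environment value is passed in as a parameter rather than read from the process, so the logic is easy to test.

```rust
/// Whether preview features are enabled (assumed two-state, as in Ruff).
#[derive(Debug, Clone, Copy, PartialEq, Eq, Default)]
enum PreviewMode {
    #[default]
    Disabled,
    Enabled,
}

impl PreviewMode {
    fn is_enabled(self) -> bool {
        matches!(self, Self::Enabled)
    }
}

/// Hypothetical resolution: `--preview` / `--no-preview` win over the
/// environment; otherwise fall back to the value of `UV_PREVIEW`.
fn resolve_preview(preview: bool, no_preview: bool, env: Option<&str>) -> PreviewMode {
    if preview {
        PreviewMode::Enabled
    } else if no_preview {
        PreviewMode::Disabled
    } else {
        match env {
            // Assumed truthy values; anything else leaves preview off.
            Some("1") | Some("true") => PreviewMode::Enabled,
            _ => PreviewMode::default(),
        }
    }
}

/// `uv run` works either way; preview only suppresses the warning.
fn run_warning(preview: PreviewMode) -> Option<&'static str> {
    if preview.is_enabled() {
        None
    } else {
        Some("`uv run` is experimental and may change without warning.")
    }
}
```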

## Test plan

```
❯ cargo run -q -- run --no-workspace -- python --version
warning: `uv run` is experimental and may change without warning.
Python 3.12.2
❯ cargo run -q -- run --no-workspace --preview -- python --version
Python 3.12.2
❯ UV_PREVIEW=1 cargo run -q -- run --no-workspace -- python --version
Python 3.12.2
```

## Summary

This PR adds basic struct definitions along with a "workspace" concept

for discovering settings. (The "workspace" terminology is used to match

Ruff; I did not invent it.)

A few notes:

- We discover any `pyproject.toml` or `uv.toml` file in any parent directory of the current working directory. (We could adjust this to look at the directories of the input files.)

- We don't actually do anything with the configuration yet; the follow-up PRs are large, and I want this one to be reviewed in isolation.
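The discovery step can be sketched as a walk over the ancestors of the working directory that stops at the nearest directory containing a candidate file. Several details are assumptions, not necessarily what this PR implements:

- the function name `find_settings_file`;
- the existence-check callback, used so the sketch runs without touching the filesystem;
- checking `uv.toml` before `pyproject.toml` within a single directory.

```rust
use std::path::{Path, PathBuf};

/// Hypothetical settings discovery: starting at `cwd` and moving up through
/// its parents, return the first `uv.toml` or `pyproject.toml` found.
/// `exists` abstracts the filesystem check; pass `|p| p.is_file()` for real use.
fn find_settings_file(cwd: &Path, exists: impl Fn(&Path) -> bool) -> Option<PathBuf> {
    for dir in cwd.ancestors() {
        // Assumed precedence within one directory: `uv.toml` first.
        for name in ["uv.toml", "pyproject.toml"] {
            let candidate = dir.join(name);
            if exists(candidate.as_path()) {
                return Some(candidate);
            }
        }
    }
    None
}
```

Because the walk returns at the first hit, a file in a nearer directory always shadows one further up the tree.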

When running the `uv-client` tests, I would previously get:

```
warning: field `0` is never read
  --> crates/uv-configuration/src/config_settings.rs:43:27
   |
43 | pub struct ConfigSettings(BTreeMap<String, ConfigSettingValue>);
   |            -------------- ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
   |            |
   |            field in this struct
   |
   = note: `ConfigSettings` has derived impls for the traits `Clone` and `Debug`, but these are intentionally ignored during dead code analysis
   = note: `#[warn(dead_code)]` on by default
help: consider changing the field to be of unit type to suppress this warning while preserving the field numbering, or remove the field
   |
43 | pub struct ConfigSettings(());
   |                           ~~

warning: `uv-configuration` (lib) generated 1 warning
```