## Summary

Discovered while working on https://github.com/astral-sh/uv/issues/9516.

In the linked repo, the root uses a `../dependency` path for the

workspace member, which we weren't normalizing.
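To illustrate what normalizing a `../dependency` member path involves, here is a minimal sketch of purely lexical normalization. The `normalize_path` helper is hypothetical and is not taken from the uv codebase; it only uses `std::path` and never touches the filesystem.

```rust
use std::path::{Component, Path, PathBuf};

/// Lexically normalize a path: drop `.` components and resolve `..` against
/// the preceding component. Hypothetical helper, for illustration only.
fn normalize_path(path: &Path) -> PathBuf {
    let mut normalized = PathBuf::new();
    for component in path.components() {
        match component {
            Component::CurDir => {}
            Component::ParentDir => {
                let last = normalized.components().next_back();
                let can_pop = matches!(last, Some(Component::Normal(_)));
                let at_root = matches!(last, Some(Component::RootDir));
                if can_pop {
                    // `root/..` cancels out.
                    normalized.pop();
                } else if !at_root {
                    // Keep a leading `..` so relative paths stay correct.
                    normalized.push("..");
                }
            }
            other => normalized.push(other.as_os_str()),
        }
    }
    normalized
}
```

With this, a member declared as `/workspace/root/../dependency` and one declared as `/workspace/dependency` compare equal after normalization.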

This updates the surrounding code to use the new ResolverEnvironment

type. In some cases, this simplifies caller code by removing case

analysis. There *shouldn't* be any behavior changes here. Some test

snapshots were updated to account for some minor tweaks to error

messages.

I didn't split this up into separate commits because it would have been

too difficult/costly.

## Summary

This is similar to https://github.com/astral-sh/uv/pull/6171 but more

expansive... _Anywhere_ that we test requirements for platform

compatibility, we _need_ to respect the resolver-friendly markers. In

fixing the motivating issue (#6621), I also realized that we had a bunch

of bugs here around `pip install` with `--python-platform` and

`--python-version`, because we always performed our `satisfy` and `Plan`

operations on the interpreter's markers, not the adjusted markers!

Closes https://github.com/astral-sh/uv/issues/6621.

* Use a dedicated `ResolverMarkers` check in the fork state. This is

better than the `MarkerTree::And(Vec::new())` check.

* Report the timing correctly, naming universal resolution instead of
printing two spaces around an empty string when there are no markers.

* On resolution error, show the split that we're in. I'm not sure how to

word this, since we're doing a universal resolution until we fork, so

the trace may contain information from requirements that are not part of

this fork.

Downstack PR: #4481

## Introduction

We support forking the dependency resolution to handle conflicting
registry requirements for different platforms: say, one package range is
required for an older Python version while a newer range is required for
newer Python versions, or dependencies that differ per platform. We
need to extend this support to direct URL requirements.

```toml

dependencies = [

"iniconfig @ 62565a6e1ceac6173dc9db836a5b46/iniconfig-2.0.0-py3-none-any.whl ; python_version >= '3.12'",

"iniconfig @ b3c12c6d70988d7baea9578f3c48f3/iniconfig-1.1.1-py2.py3-none-any.whl ; python_version < '3.12'"

]

```

This did not work because `Urls` was built on the assumption that there

is a single allowed URL per package. We collect all allowed URLs ahead of

resolution by following direct URL dependencies (including path

dependencies) transitively, i.e. a registry distribution can't require a

URL.

## The same package can have Registry and URL requirements

Consider the following two cases:

requirements.in:

```text

werkzeug==2.0.0

werkzeug @ 960bb4017c4aed12b5ed8b78e0153e/Werkzeug-2.0.0-py3-none-any.whl

```

pyproject.toml:

```toml

dependencies = [

"iniconfig == 1.1.1 ; python_version < '3.12'",

"iniconfig @ git+https://github.com/pytest-dev/iniconfig@93f5930e668c0d1ddf4597e38dd0dea4e2665e7a ; python_version >= '3.12'",

]

```

In the first case, we want the URL to override the registry dependency;
in the second case, we want to fork and have one branch use the registry

and the other the URL. We have to know about this in

`PubGrubRequirement::from_registry_requirement`, but we only fork after

the current method.

Consider the following case too:

a:

```

c==1.0.0

b @ https://b.zip

```

b:

```

c @ https://c_new.zip ; python_version >= '3.12'

c @ https://c_old.zip ; python_version < '3.12'

```

When we convert the requirements of `a`, we can't know the url of `c`

yet. The solution is to remove the `Url` from `PubGrubPackage`: The

`Url` is redundant with `PackageName`, there can be only one url per

package name per fork. We now do the following: We track the urls from

requirements in `PubGrubDependency`. After forking, we call

`add_package_version_dependencies` where we apply override URLs, check

if the URL is allowed and check if the url is unique in this fork. When

we request a distribution, we ask the fork urls for the real URL. Since

we prioritize url dependencies over registry dependencies and skip

packages with `Urls` entries in pre-visiting, we know when fetching
a package whether it has a URL or not.

## URL conflicts

pyproject.toml (invalid):

```toml

dependencies = [

"iniconfig @ e96292c7f723f1fa332fe4ed6dfbec/iniconfig-1.1.0.tar.gz",

"iniconfig @ b3c12c6d70988d7baea9578f3c48f3/iniconfig-1.1.1-py2.py3-none-any.whl ; python_version < '3.12'",

"iniconfig @ 62565a6e1ceac6173dc9db836a5b46/iniconfig-2.0.0-py3-none-any.whl ; python_version >= '3.12'",

]

```

On the fork state, we keep `ForkUrls` that check for conflicts after

forking, rejecting the third case because we added two packages of the

same name with different URLs.
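The per-fork conflict check can be sketched as a map from package name to URL that refuses a second, different URL. This is a simplified illustration, not the real `ForkUrls` type: package names and URLs are plain strings here, and `ConflictingUrls` is a hypothetical error type.

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for the per-fork URL bookkeeping.
#[derive(Debug, Default, Clone)]
struct ForkUrls {
    urls: HashMap<String, String>,
}

/// Hypothetical error carrying both URLs seen for one package.
#[derive(Debug, PartialEq)]
struct ConflictingUrls {
    package: String,
    existing: String,
    new: String,
}

impl ForkUrls {
    /// Record the URL for a package in this fork, rejecting a second,
    /// different URL for the same package name.
    fn insert(&mut self, package: &str, url: &str) -> Result<(), ConflictingUrls> {
        if let Some(existing) = self.urls.get(package) {
            if existing.as_str() != url {
                return Err(ConflictingUrls {
                    package: package.to_string(),
                    existing: existing.clone(),
                    new: url.to_string(),
                });
            }
            // Seeing the same URL again is fine.
            return Ok(());
        }
        self.urls.insert(package.to_string(), url.to_string());
        Ok(())
    }
}
```

Because each fork owns its own map, two forks can hold different URLs for `iniconfig`, while a single fork that sees two different URLs fails.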

We need to flatten out the requirements before transformation into

pubgrub requirements to get the full list of other requirements which

may contain a URL, which was changed in a previous PR: #4430.

## Complex Example

a:

```toml

dependencies = [

# Force a split

"anyio==4.3.0 ; python_version >= '3.12'",

"anyio==4.2.0 ; python_version < '3.12'",

# Include URLs transitively

"b"

]

```

b:

```toml

dependencies = [

# Only one is used in each split.

"b1 ; python_version < '3.12'",

"b2 ; python_version >= '3.12'",

"b3 ; python_version >= '3.12'",

]

```

b1:

```toml

dependencies = [

"iniconfig @ b3c12c6d70988d7baea9578f3c48f3/iniconfig-1.1.1-py2.py3-none-any.whl",

]

```

b2:

```toml

dependencies = [

"iniconfig @ 62565a6e1ceac6173dc9db836a5b46/iniconfig-2.0.0-py3-none-any.whl",

]

```

b3:

```toml

dependencies = [

"iniconfig @ e96292c7f723f1fa332fe4ed6dfbec/iniconfig-1.1.0.tar.gz",

]

```

In this example, all packages are url requirements (directory

requirements) and the root package is `a`. We first split on `a`, `b`

being in each split. In the first fork, we reach `b1`, the fork URLs are

empty, we insert the iniconfig 1.1.1 URL, and then we skip over `b2` and

`b3` since the marker is disjoint with the fork markers. In the second

fork, we skip over `b1`, visit `b2`, insert the iniconfig 2.0.0 URL into

the again empty fork URLs, then visit `b3` and try to insert the

iniconfig 1.1.0 URL. At this point we find a conflict for the iniconfig

URL and error.

## Closing

The git tests are slow, but they make the best example for different URL

types I could find.

Part of #3927. This PR does not handle `Locals` or pre-releases yet.

## Summary

This is what I consider to be the "real" fix for #8072. We now treat

directory and path URLs as separate `ParsedUrl` types and

`RequirementSource` types. This removes a lot of `.is_dir()` forking

within the `ParsedUrl::Path` arms and makes some states impossible

(e.g., you can't have a `.whl` path that is editable). It _also_ fixes

the `direct_url.json` for direct URLs that refer to files. Previously,

we wrote out to these as if they were installed as directories, which is

just wrong.
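As a rough sketch of how separate variants make the invalid state unrepresentable, consider the simplified enum below. The variant fields and the `parse_local` helper are assumptions made for illustration; the real `ParsedUrl` carries more data.

```rust
use std::path::PathBuf;

/// Hypothetical, simplified shapes of the two local variants.
#[derive(Debug, PartialEq)]
enum ParsedUrl {
    /// A file on disk, such as a wheel or source archive. There is no
    /// `editable` field, so an editable `.whl` cannot be represented.
    Path { install_path: PathBuf },
    /// A source tree, which may be installed as editable.
    Directory { install_path: PathBuf, editable: bool },
}

/// Hypothetical error for an editable request that points at a file.
#[derive(Debug, PartialEq)]
struct EditableFileError;

/// Classify a local path once, up front. `is_dir` is passed in rather than
/// read from the filesystem so the sketch stays deterministic.
fn parse_local(path: &str, is_dir: bool, editable: bool) -> Result<ParsedUrl, EditableFileError> {
    let install_path = PathBuf::from(path);
    match (is_dir, editable) {
        (true, _) => Ok(ParsedUrl::Directory { install_path, editable }),
        (false, false) => Ok(ParsedUrl::Path { install_path }),
        (false, true) => Err(EditableFileError),
    }
}
```

Downstream code can then match on the variant instead of calling `.is_dir()` inside a shared `Path` arm.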

By splitting `path` into a lockable path, which may be relative or
absolute, and an absolute installable path, and by splitting URLs and
paths by dist type, we can store relative paths in the lockfile.

## Summary

Should be no behavior changes, but one piece of technical debt I noticed

left over in the URL resolver. We already have structured paths, so we

shouldn't need to compare verbatim URLs.

## Summary

If the user requests a package as both editable and non-editable, the

editable now "wins".

Previously, `pip install -e . .` would install as editable. However,

`pip install -e . -r requirements.txt` would _not_ if `requirements.txt`

contained `.`, because we ignored `editable` when deduplicating and the

order of iteration was just dependent on internals.
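A minimal sketch of order-independent deduplication where the editable request wins is shown below. `LocalRequirement` and `dedupe_editable_wins` are hypothetical names, and requirements are keyed by a plain path string for simplicity.

```rust
use std::collections::BTreeMap;

/// Hypothetical, simplified local requirement.
#[derive(Debug, Clone, PartialEq)]
struct LocalRequirement {
    path: String,
    editable: bool,
}

/// Deduplicate by path. If any request for a path is editable, the merged
/// requirement is editable, regardless of the order of the inputs.
fn dedupe_editable_wins(requirements: &[LocalRequirement]) -> Vec<LocalRequirement> {
    let mut by_path: BTreeMap<&str, bool> = BTreeMap::new();
    for requirement in requirements {
        let editable = by_path.entry(requirement.path.as_str()).or_insert(false);
        *editable |= requirement.editable;
    }
    by_path
        .into_iter()
        .map(|(path, editable)| LocalRequirement { path: path.to_string(), editable })
        .collect()
}
```

Merging with a logical OR, rather than keeping whichever entry is visited first, is what removes the dependence on iteration order.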

Closes https://github.com/astral-sh/uv/issues/4053.

When creating a lockfile, lock the combined dependencies for all

packages in a workspace. This makes the lockfile independent of where you

are in the workspace.

Fixes #3983

## Summary

This PR replaces the static resolver map:

```rust
static RESOLVED_GIT_REFS: Lazy<Mutex<FxHashMap<RepositoryReference, GitSha>>> =
    Lazy::new(Mutex::default);
```

with a `GitResolver` struct that we now pass around on the

`BuildContext`. There should be no behavior changes here; it's purely an

internal refactor with an eye towards making it cleaner for us to

"pre-populate" the list of resolved SHAs.

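One possible shape for such a resolver is a cheaply cloneable handle around a shared map, sketched below. The internals are an assumption for illustration (a standard `HashMap` behind `Arc<Mutex<..>>`), and `RepositoryReference` and `GitSha` are reduced to simple stand-ins.

```rust
use std::collections::HashMap;
use std::sync::{Arc, Mutex};

/// Simplified stand-in: a repository URL plus a requested reference.
#[derive(Debug, Clone, PartialEq, Eq, Hash)]
struct RepositoryReference {
    url: String,
    reference: String,
}

/// Simplified stand-in for a resolved commit SHA.
#[derive(Debug, Clone, PartialEq, Eq)]
struct GitSha(String);

/// Hypothetical resolver handle; clones share the same underlying map.
#[derive(Debug, Default, Clone)]
struct GitResolver(Arc<Mutex<HashMap<RepositoryReference, GitSha>>>);

impl GitResolver {
    /// Record (or pre-populate) the SHA that a reference resolved to.
    fn insert(&self, reference: RepositoryReference, sha: GitSha) {
        self.0.lock().unwrap().insert(reference, sha);
    }

    /// Look up a previously resolved SHA, if any.
    fn get(&self, reference: &RepositoryReference) -> Option<GitSha> {
        self.0.lock().unwrap().get(reference).cloned()
    }
}
```

Compared with a global `static`, an explicit handle can be constructed per invocation and seeded with known SHAs before resolution starts.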
With the change, we remove the special casing of workspace dependencies

and resolve `tool.uv` for all git and directory distributions. This

gives us support for non-editable workspace dependencies and path

dependencies in other workspaces. It removes a lot of special casing

around workspaces. These changes are the groundwork for supporting

`tool.uv` with dynamic metadata.

The basis for this change is moving `Requirement` from

`distribution-types` to `pypi-types` and the lowering logic from

`uv-requirements` to `uv-distribution`. These changes should be split out

in separate PRs.

I've included an example workspace `albatross-root-workspace2` where

`bird-feeder` depends on `a` from another workspace `ab`. There's a

bunch of failing tests and regressed error messages that still need

fixing. It does fix the audited package count for the workspace tests.

## Summary

There are a few behavior changes in here:

- We now enforce `--require-hashes` for editables, like pip. So if you

use `--require-hashes` with an editable requirement, we'll reject it. I

could change this if it seems off.

- We now treat source tree requirements, editable or not (e.g., both `-e

./black` and `./black`) as if `--refresh` is always enabled. This

doesn't mean that we _always_ rebuild them; but if you pass

`--reinstall`, then yes, we always rebuild them. I think this is an

improvement and is close to how editables work today.

Closes #3844.

Closes #2695.

When parsing requirements from any source, directly parse the url parts

(and reject unsupported urls) instead of parsing url parts at a later

stage. This removes a bunch of error branches and concludes the work of
parsing URL parts once and passing them around everywhere.

Many usages of the assembled `VerbatimUrl` remain, but these can be

removed incrementally.

Please review commit-by-commit.

This is split out from workspaces support, which needs editables in the

bluejay commands. It consists mainly of refactorings:

* Move the `editable` module one level up.

* Introduce a `BuiltEditableMetadata` type for `(LocalEditable,

Metadata23, Requirements)`.

* Add editables to `InstalledPackagesProvider` so we can use

`EmptyInstalledPackages` for them.

This commit touches a lot of code, but the conceptual change here is

pretty simple: make it so we can run the resolver without providing a

`MarkerEnvironment`. This also indicates that the resolver should run in

universal mode. That is, the effect of a missing marker environment is

that all marker expressions that reference the marker environment are

evaluated to `true`. That is, they are ignored. (The only markers we

evaluate in that context are extras, which are the only markers that

aren't dependent on the environment.)
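The evaluation rule described above can be sketched with a heavily simplified marker model. The `Marker` enum, the `MarkerEnvironment` struct and the `evaluate` function below are hypothetical stand-ins, not the real `pep508_rs` types.

```rust
/// Hypothetical, heavily simplified marker model.
#[derive(Debug, Clone)]
enum Marker {
    /// An environment-dependent check, e.g. `sys_platform == "linux"`.
    SysPlatform(String),
    /// An extra check, e.g. `extra == "torch"`; independent of the environment.
    Extra(String),
    /// All sub-markers must hold.
    And(Vec<Marker>),
}

/// Simplified stand-in holding only the platform.
struct MarkerEnvironment {
    sys_platform: String,
}

/// With `env == None` (universal mode), environment-dependent markers are
/// treated as `true`; extras are still evaluated.
fn evaluate(marker: &Marker, env: Option<&MarkerEnvironment>, extras: &[&str]) -> bool {
    match marker {
        Marker::SysPlatform(expected) => match env {
            Some(env) => env.sys_platform == *expected,
            None => true,
        },
        Marker::Extra(name) => extras.iter().any(|extra| *extra == name.as_str()),
        Marker::And(markers) => markers.iter().all(|m| evaluate(m, env, extras)),
    }
}
```

Under this rule, both the `linux` and the `darwin` requirement are active at once when no environment is given, which is why conflicting version ranges surface as an unsatisfiable resolution until forking is added.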

One interesting change here is that a `Resolver` no longer needs an

`Interpreter`. Previously, it had only been using it to construct a

`PythonRequirement`, by filling in the installed version from the

`Interpreter` state. But we now construct a `PythonRequirement`

explicitly since its `target` Python version should no longer be tied to

the `MarkerEnvironment`. (Currently, the marker environment is mutated

such that its `python_full_version` is derived from multiple sources,

including the CLI, which I found a touch confusing.)

The change in behavior can now be observed through the

`--unstable-uv-lock-file` flag. First, without it:

```

$ cat requirements.in

anyio>=4.3.0 ; sys_platform == "linux"

anyio<4 ; sys_platform == "darwin"

$ cargo run -qp uv -- pip compile -p3.10 requirements.in

anyio==4.3.0

exceptiongroup==1.2.1

# via anyio

idna==3.7

# via anyio

sniffio==1.3.1

# via anyio

typing-extensions==4.11.0

# via anyio

```

And now with it:

```

$ cargo run -qp uv -- pip compile -p3.10 requirements.in --unstable-uv-lock-file

x No solution found when resolving dependencies:

`-> Because you require anyio>=4.3.0 and anyio<4, we can conclude that the requirements are unsatisfiable.

```

This is expected at this point because the marker expressions are being

explicitly ignored, *and* there is no forking done yet to account for

the conflict.

## Introduction

PEP 621 is limited. Specifically, it lacks

* Relative path support

* Editable support

* Workspace support

* Index pinning or any sort of index specification

The semantics of urls are a custom extension, PEP 440 does not specify

how to use git references or subdirectories, instead pip has a custom

stringly format. We need to somehow support these while still staying

compatible with PEP 621.

## `tool.uv.sources`

Drawing inspiration from cargo, poetry and rye, we add `tool.uv.sources`

or (for now stub only) `tool.uv.workspace`:

```toml

[project]

name = "albatross"

version = "0.1.0"

dependencies = [

"tqdm >=4.66.2,<5",

"torch ==2.2.2",

"transformers[torch] >=4.39.3,<5",

"importlib_metadata >=7.1.0,<8; python_version < '3.10'",

"mollymawk ==0.1.0"

]

[tool.uv.sources]

tqdm = { git = "https://github.com/tqdm/tqdm", rev = "cc372d09dcd5a5eabdc6ed4cf365bdb0be004d44" }

importlib_metadata = { url = "https://github.com/python/importlib_metadata/archive/refs/tags/v7.1.0.zip" }

torch = { index = "torch-cu118" }

mollymawk = { workspace = true }

[tool.uv.workspace]

include = [

"packages/mollymawk"

]

[tool.uv.indexes]

torch-cu118 = "https://download.pytorch.org/whl/cu118"

```

See `docs/specifying_dependencies.md` for a detailed explanation of the

format. The basic gist is that `project.dependencies` is what ends up on

pypi, while `tool.uv.sources` are your non-published additions. We do

support the full range of PEP 508, we just hide it in the docs and
prefer the exploded table for easier readability and less confusion with
actual URL parts.

This format should eventually be able to subsume requirements.txt's

current use cases. While we will continue to support the legacy `uv pip`

interface, this is a piece of uv's own top-level interface. Together

with `uv run` and a lockfile format, you should only need to write

`pyproject.toml` and do `uv run`, which generates/uses/updates your

lockfile behind the scenes, no more pip-style requirements involved. It

also lays the groundwork for implementing index pinning.

## Changes

This PR implements:

* Reading and lowering `project.dependencies`,

`project.optional-dependencies` and `tool.uv.sources` into a new

requirements format, including:

* Git dependencies

* Url dependencies

* Path dependencies, including relative and editable

* `pip install` integration

* Error reporting for invalid `tool.uv.sources`

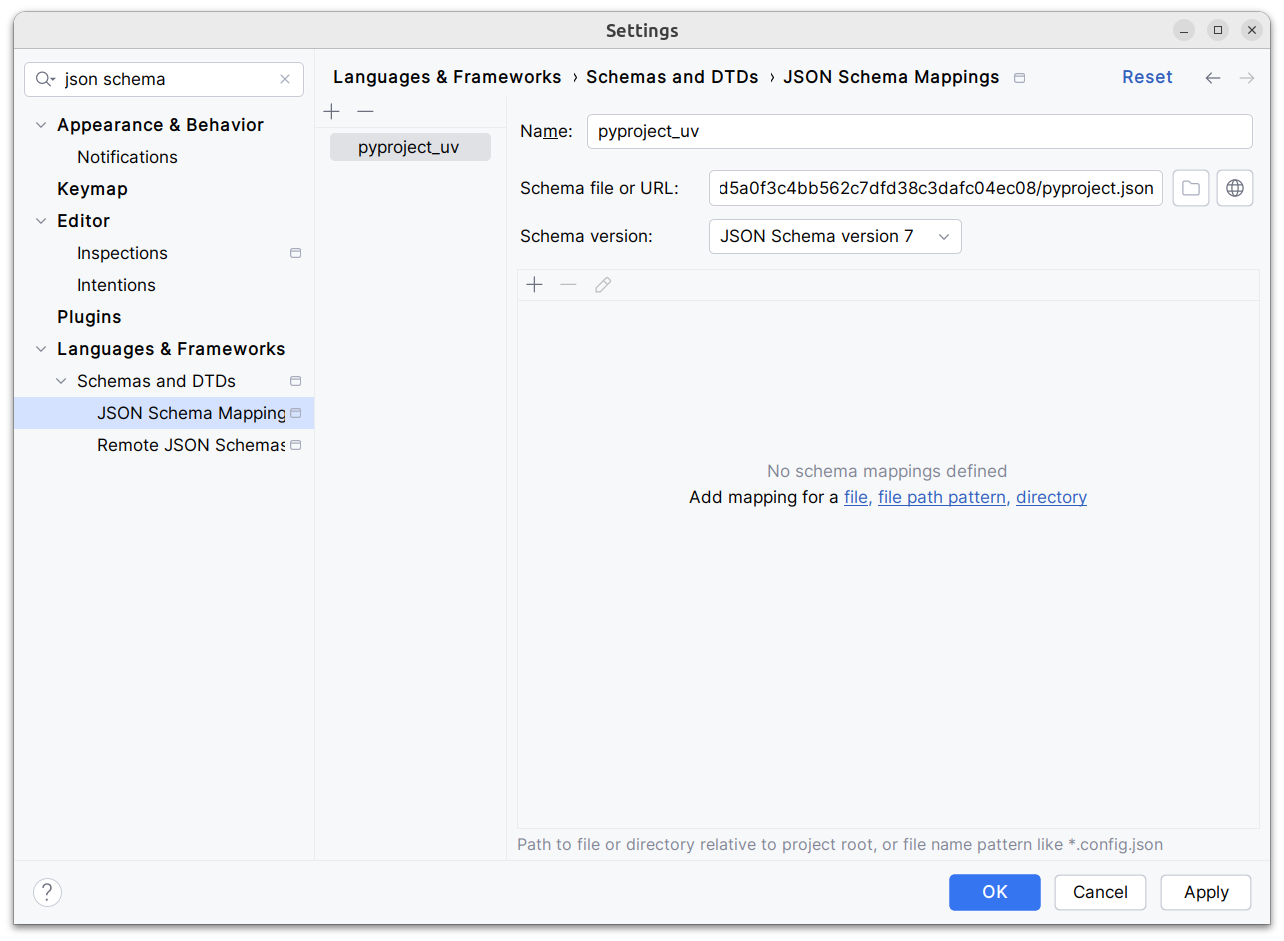

* Json schema integration (works in pycharm, see below)

* Draft user-level docs (see `docs/specifying_dependencies.md`)

It does not implement:

* `pip compile` testing (deprioritized in favor of our own lockfile)

* Index pinning (stub definitions only)

* Development dependencies

* Workspace support (stub definitions only)

* Overrides in pyproject.toml

* Patching/replacing dependencies

One technically breaking change is that we now require user provided

pyproject.toml to be valid with respect to PEP 621. Included files still
fall back to PEP 517. That means `pip install -r pyproject.toml` requires
it to be valid, while `pip install -r requirements.txt` with `-e .` as
content falls back to PEP 517 as before.

## Implementation

The `pep508` requirement is replaced by a new `UvRequirement` (name up

for bikeshedding, not particularly attached to the uv prefix). The still

existing `pep508_rs::Requirement` type is a url format copied from pip's

requirements.txt and doesn't appropriately capture all features we

want/need to support. The bulk of the diff is changing the requirement

type throughout the codebase.

We still use `VerbatimUrl` in many places, where we would expect a

parsed/decomposed url type, specifically:

* Reading core metadata except top level pyproject.toml files, we fail a

step later instead if the url isn't supported.

* Allowed `Urls`.

* `PackageId` with a custom `CanonicalUrl` comparison, instead of

canonicalizing urls eagerly.

* `PubGrubPackage`: We eventually convert the `VerbatimUrl` back to a

`Dist` (`Dist::from_url`), instead of remembering the url.

* Source dist types: We use verbatim url even though we know and require

that these are supported urls we can and have parsed.

I tried to improve the situation by replacing `VerbatimUrl`, but

these changes would require massive invasive changes (see e.g.

https://github.com/astral-sh/uv/pull/3253). A main problem is the ref

`VersionOrUrl` and applying overrides, which assume the same

requirement/url type everywhere. In its current form, this PR increases

this tech debt.

I've tried to split off PRs and commits, but the main refactoring is

still a single monolith commit to make it compile and the tests pass.

## Demo

Adding

d1ae3b85d5/pyproject.json

as json schema (v7) to pycharm for `pyproject.toml`, you can try the IDE

support already:

[dove.webm](https://github.com/astral-sh/uv/assets/6826232/c293c272-c80b-459d-8c95-8c46a8d198a1)

## Summary

This PR avoids: (1) using the lookahead resolver when `--no-deps` is

specified (we'll never use those requirements), and (2) including any

transitive requirements when searching for allowed URLs, etc., when

`--no-deps` is specified.

Closes https://github.com/astral-sh/uv/issues/3183.

## Summary

This PR leverages our lookahead direct URL resolution to significantly

improve the range of Git URLs that we can accept (e.g., if a user

provides the same requirement, once as a direct dependency, and once as

a tag). We did some of this in #2285, but the solution here is more

general and works for arbitrary transitive URLs.

Closes https://github.com/astral-sh/uv/issues/2614.

## Summary

We iterate over the project "requirements" directly in a variety of

places. However, it's not always the case that an input "requirement" on

its own will _actually_ be part of the resolution, since we support

"overrides".

Historically, then, overrides haven't worked as expected for _direct_

dependencies (and we have some tests that demonstrate the current,

"wrong" behavior). This is just a bug, but it's not really one that

comes up in practice, since it's rare to apply an override to your _own_

dependency.

However, we're now considering expanding the lookahead concept to

include local transitive dependencies. In this case, it's more and more

important that overrides and constraints are handled consistently.

This PR modifies all the locations in which we iterate over requirements

directly, and modifies them to respect overrides (and constraints, where

necessary).
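A minimal sketch of iterating over requirements while respecting overrides follows. The `Requirement` struct and `apply_overrides` function are hypothetical simplifications: a requirement is just a name and a specifier string, and any override with a matching name replaces the original entry.

```rust
/// Hypothetical, simplified requirement: a name and a specifier string.
#[derive(Debug, Clone, PartialEq)]
struct Requirement {
    name: String,
    specifier: String,
}

/// Yield the requirements that will actually take part in the resolution:
/// if any override matches a requirement's name, the overrides replace it.
fn apply_overrides(requirements: &[Requirement], overrides: &[Requirement]) -> Vec<Requirement> {
    let mut resolved = Vec::new();
    for requirement in requirements {
        let matching: Vec<&Requirement> = overrides
            .iter()
            .filter(|candidate| candidate.name == requirement.name)
            .collect();
        if matching.is_empty() {
            resolved.push(requirement.clone());
        } else {
            resolved.extend(matching.into_iter().cloned());
        }
    }
    resolved
}
```

Routing every call site through one helper like this is what keeps direct and transitive requirements consistent.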

## Summary

This PR enables the resolver to "accept" URLs, prereleases, and local

version specifiers for direct dependencies of path dependencies. As a

result, `uv pip install .` and `uv pip install -e .` now behave

identically, in that neither has a restriction on URL dependencies and

the like.

Closes https://github.com/astral-sh/uv/issues/2643.

Closes https://github.com/astral-sh/uv/issues/1853.

## Summary

This PR ensures that we expand environment variables _before_ sniffing

for the URL scheme (e.g., `file://` vs. `https://` vs. something else).
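To show why the order matters, here is a small sketch that expands variables first and only then inspects the scheme. The `${NAME}` syntax, the `expand_env_vars` and `sniff_scheme` helpers, and the `PROJECT_ROOT` variable are assumptions made for illustration; variables are passed in as a map rather than read from the process environment so the example is deterministic.

```rust
use std::collections::HashMap;

/// Expand `${NAME}` references using `vars`; unknown names are left as-is.
fn expand_env_vars(input: &str, vars: &HashMap<&str, &str>) -> String {
    let mut output = String::new();
    let mut rest = input;
    while let Some(start) = rest.find("${") {
        output.push_str(&rest[..start]);
        let after = &rest[start + 2..];
        match after.find('}') {
            Some(end) => {
                let name = &after[..end];
                match vars.get(name) {
                    Some(value) => output.push_str(value),
                    None => {
                        output.push_str("${");
                        output.push_str(name);
                        output.push('}');
                    }
                }
                rest = &after[end + 1..];
            }
            None => {
                // Unterminated `${`: copy the remainder verbatim.
                output.push_str(&rest[start..]);
                rest = "";
            }
        }
    }
    output.push_str(rest);
    output
}

#[derive(Debug, PartialEq)]
enum Scheme {
    File,
    Https,
    Other,
}

/// Classify a URL by its scheme prefix.
fn sniff_scheme(url: &str) -> Scheme {
    if url.starts_with("file://") {
        Scheme::File
    } else if url.starts_with("https://") {
        Scheme::Https
    } else {
        Scheme::Other
    }
}
```

Sniffing the raw string `${PROJECT_ROOT}/wheels/pkg.whl` finds no scheme at all, whereas sniffing the expanded string sees the `file://` prefix.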

Closes https://github.com/astral-sh/uv/issues/2375.

## Summary

This PR removes the URL conflict errors when the output of a `uv pip

compile` is used as a constraint to a subsequent `uv pip compile`.

If you run `uv pip compile`, the output file will contain your Git

dependencies, but pinned to a specific commit, like:

```

git+https://github.com/pallets/werkzeug@32e69512134c2f8183c6438b2b2e13fd24e9d19f

```

If you then use the output as a constraint to a subsequent resolution

(e.g., perhaps you require

`git+https://github.com/pallets/werkzeug@main`), we currently fail. I

think this is a reasonable workflow to support when all of these

requirements are coming from _your own_ dependencies. So we now assume

when resolving that the former is a more precise variant of the latter.
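The "more precise variant" check can be sketched as follows. This is an illustrative simplification, not uv's actual logic: `GitUrl`, `parse_git_url`, `is_full_sha` and `is_more_precise` are hypothetical names, and the parser ignores URLs with user info (which also contain `@`).

```rust
/// Hypothetical, simplified Git requirement URL.
#[derive(Debug, Clone, PartialEq)]
struct GitUrl {
    repository: String,
    reference: Option<String>,
}

/// Parse `git+<scheme>://<repo>[@<reference>]`. URLs with user info are
/// out of scope for this sketch.
fn parse_git_url(url: &str) -> Option<GitUrl> {
    let rest = url.strip_prefix("git+")?;
    let scheme_end = rest.find("://")? + 3;
    match rest[scheme_end..].rfind('@') {
        Some(at) => Some(GitUrl {
            repository: rest[..scheme_end + at].to_string(),
            reference: Some(rest[scheme_end + at + 1..].to_string()),
        }),
        None => Some(GitUrl { repository: rest.to_string(), reference: None }),
    }
}

/// A full commit SHA is 40 hexadecimal characters.
fn is_full_sha(reference: &str) -> bool {
    reference.len() == 40 && reference.chars().all(|c| c.is_ascii_hexdigit())
}

/// True if `pinned` names the same repository as `requested` and is pinned
/// to a full commit SHA, so it can stand in for the looser reference.
fn is_more_precise(pinned: &GitUrl, requested: &GitUrl) -> bool {
    pinned.repository == requested.repository
        && pinned.reference.as_deref().map_or(false, is_full_sha)
}
```

Here the constraint pinned to `32e69512134c2f8183c6438b2b2e13fd24e9d19f` would be accepted alongside a requirement on `@main`, but not the other way around.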

Closes https://github.com/astral-sh/uv/issues/1903.

Closes https://github.com/astral-sh/uv/issues/2266.

## Test Plan

`cargo test`

## Summary

This revives a PR from long ago

(https://github.com/astral-sh/uv/pull/383 and

https://github.com/zanieb/pubgrub/pull/24) that modifies how we deal

with dependencies that are declared multiple times within a single

package.

To quote from the originating PR:

> Uses an experimental pubgrub branch (#370) that allows us to handle
> multiple version ranges for a single dependency to the solver which
> results in better error messages because the derivation tree contains
> all of the relevant versions. Previously, the version ranges were merged
> (by us) in the resolver before handing them to pubgrub since only one
> range could be provided per package. Since we don't merge the versions
> anymore, we no longer give the solver an empty range for conflicting
> requirements; instead the solver comes to that conclusion from the
> provided versions. You can see the improved error message for direct
> dependencies in [this
> snapshot](https://github.com/astral-sh/puffin/pull/383/files#diff-a0437f2c20cde5e2f15199a3bf81a102b92580063268417847ec9c793a115bd0).

The main issue with that PR was around its handling of URL dependencies,

so this PR _also_ refactors how we handle those. Previously, we stored

URL dependencies on `PubGrubPackage`, but they were omitted from the

hash and equality implementations of `PubGrubPackage`. This led to some

really careful codepaths wherein we had to ensure that we always visited

URLs before non-URL packages, so that the URL-inclusive versions were

included in any hashmaps, etc. I considered preserving this approach,

but it would require us to rely on lots of internal details of PubGrub

(since we'd now be relying on PubGrub to merge those packages in the

"right" order).

So, instead, we now _always_ set the URL on a given package, whenever

that package was _given_ a URL upfront. I think this is easier to reason

about: if the user provided a URL for `flask`, then we should just

always add the URL for `flask`. If we see some other URL for `flask`, we

error, like before. If we see some unknown URL for `flask`, we error,

like before.

Closes https://github.com/astral-sh/uv/issues/1522.

Closes https://github.com/astral-sh/uv/issues/1821.

Closes https://github.com/astral-sh/uv/issues/1615.