## Introduction

PEP 621 is limited. Specifically, it lacks:

* Relative path support
* Editable support
* Workspace support
* Index pinning or any sort of index specification

The semantics of URLs are a custom extension: PEP 440 does not specify how to use git references or subdirectories; instead, pip has a custom stringly format. We need to support these while still staying compatible with PEP 621.

## `tool.uv.sources`

Drawing inspiration from Cargo, Poetry and Rye, we add `tool.uv.sources` and (for now, stub only) `tool.uv.workspace`:

```toml
[project]
name = "albatross"
version = "0.1.0"
dependencies = [
  "tqdm >=4.66.2,<5",
  "torch ==2.2.2",
  "transformers[torch] >=4.39.3,<5",
  "importlib_metadata >=7.1.0,<8; python_version < '3.10'",
  "mollymawk ==0.1.0"
]

[tool.uv.sources]
tqdm = { git = "https://github.com/tqdm/tqdm", rev = "cc372d09dcd5a5eabdc6ed4cf365bdb0be004d44" }
importlib_metadata = { url = "https://github.com/python/importlib_metadata/archive/refs/tags/v7.1.0.zip" }
torch = { index = "torch-cu118" }
mollymawk = { workspace = true }

[tool.uv.workspace]
include = [
  "packages/mollymawk"
]

[tool.uv.indexes]
torch-cu118 = "https://download.pytorch.org/whl/cu118"
```

See `docs/specifying_dependencies.md` for a detailed explanation of the format. The basic gist is that `project.dependencies` is what ends up on PyPI, while `tool.uv.sources` holds your non-published additions. We do support the full range of PEP 508, but we keep it out of the main docs and prefer the exploded table, which is easier to read and less easily confused with actual URL parts.
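To make the equivalence concrete, here is a minimal sketch of rendering an exploded `tool.uv.sources` entry back into pip's stringly PEP 508 direct-reference form (`name @ git+<url>@<rev>#subdirectory=<dir>`). The `Source` enum and `to_pep508` function are hypothetical illustrations, not uv's types, and only git and URL sources are modeled.

```rust
/// Hypothetical, simplified model of a `tool.uv.sources` entry (not uv's real type).
#[derive(Debug, Clone, PartialEq, Eq)]
pub enum Source {
    /// `{ git = "...", rev = "..." }`, optionally with a subdirectory.
    Git { git: String, rev: Option<String>, subdirectory: Option<String> },
    /// `{ url = "..." }`
    Url { url: String },
}

/// Render a package name plus its source as a pip-style PEP 508 direct reference.
pub fn to_pep508(name: &str, source: &Source) -> String {
    match source {
        Source::Git { git, rev, subdirectory } => {
            // pip's stringly format: `git+<url>@<rev>#subdirectory=<dir>`
            let mut url = format!("git+{git}");
            if let Some(rev) = rev {
                url.push('@');
                url.push_str(rev);
            }
            if let Some(dir) = subdirectory {
                url.push_str("#subdirectory=");
                url.push_str(dir);
            }
            format!("{name} @ {url}")
        }
        Source::Url { url } => format!("{name} @ {url}"),
    }
}
```

The table form keeps `rev` and `subdirectory` as separate keys, so nothing has to be encoded into, or re-parsed out of, the URL string.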

This format should eventually be able to subsume the current use cases of `requirements.txt`. While we will continue to support the legacy `uv pip` interface, this is a piece of uv's own top-level interface. Together with `uv run` and a lockfile format, you should only need to write `pyproject.toml` and run `uv run`, which generates, uses, and updates your lockfile behind the scenes, with no pip-style requirements involved. It also lays the groundwork for implementing index pinning.

## Changes

This PR implements:

* Reading and lowering `project.dependencies`,

`project.optional-dependencies` and `tool.uv.sources` into a new

requirements format, including:

* Git dependencies

* Url dependencies

* Path dependencies, including relative and editable

* `pip install` integration

* Error reporting for invalid `tool.uv.sources`

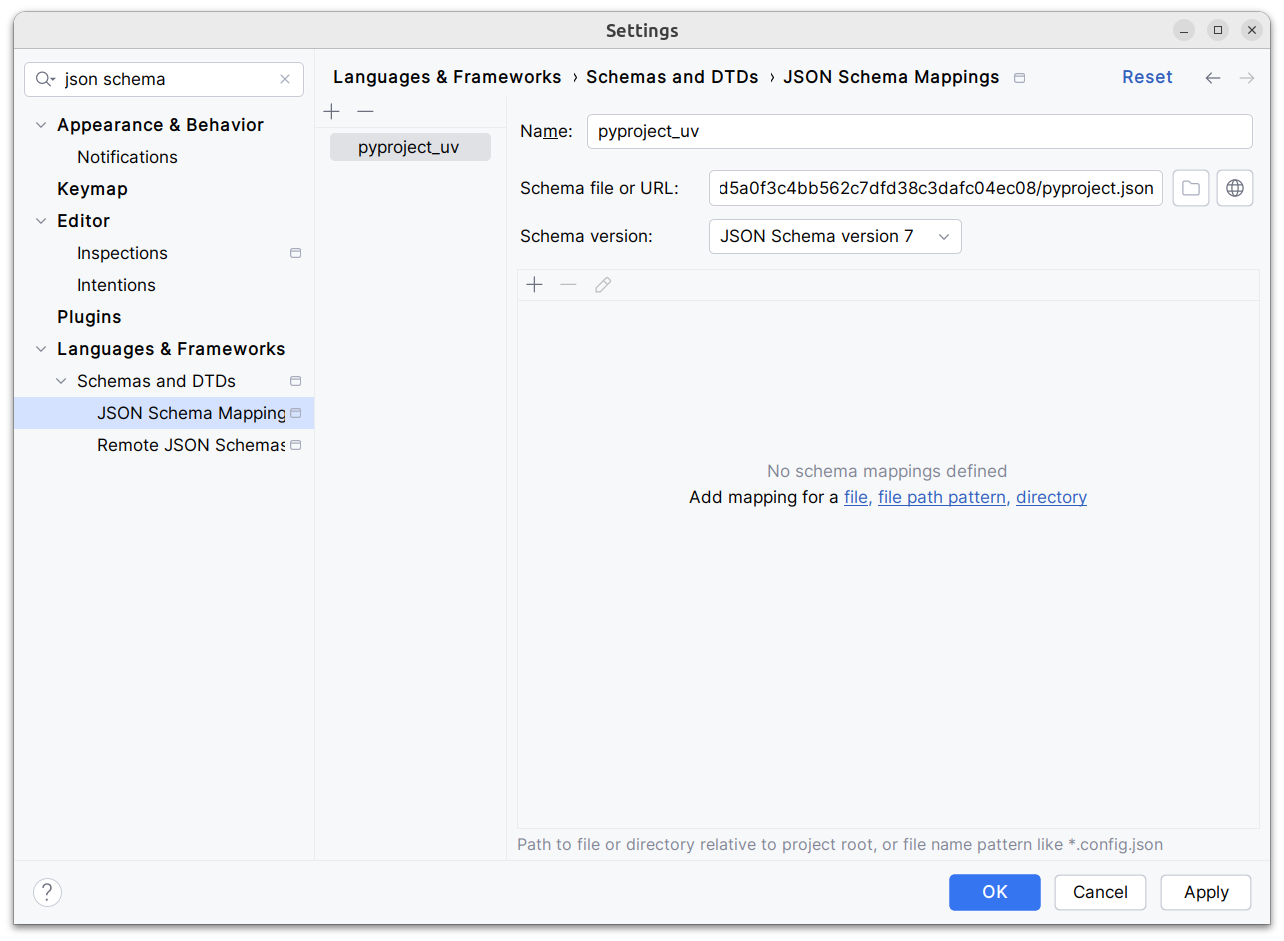

* Json schema integration (works in pycharm, see below)

* Draft user-level docs (see `docs/specifying_dependencies.md`)

It does not implement:

* No `pip compile` testing, deprioritizing towards our own lockfile

* Index pinning (stub definitions only)

* Development dependencies

* Workspace support (stub definitions only)

* Overrides in pyproject.toml

* Patching/replacing dependencies

One technically breaking change is that we now require a user-provided `pyproject.toml` to be valid with respect to PEP 621. Included files still fall back to PEP 517. That means `pip install -r pyproject.toml` requires it to be valid, while `pip install -r requirements.txt` with `-e .` as its content falls back to PEP 517 as before.

## Implementation

The `pep508` requirement is replaced by a new `UvRequirement` (name up for bikeshedding; I'm not particularly attached to the uv prefix). The still-existing `pep508_rs::Requirement` type is a URL format copied from pip's `requirements.txt` and doesn't appropriately capture all the features we want or need to support. The bulk of the diff is changing the requirement type throughout the codebase.

We still use `VerbatimUrl` in many places where we would expect a parsed/decomposed URL type, specifically:

* Reading core metadata (except top-level `pyproject.toml` files): if the URL isn't supported, we fail a step later instead.
* Allowed `Urls`.
* `PackageId`, with a custom `CanonicalUrl` comparison instead of canonicalizing URLs eagerly.
* `PubGrubPackage`: we eventually convert the `VerbatimUrl` back to a `Dist` (`Dist::from_url`) instead of remembering the URL.
* Source dist types: we use verbatim URLs even though we know and require that these are supported URLs that we can parse and have already parsed.

I tried to improve the situation by replacing `VerbatimUrl`, but that would require massive, invasive changes (see e.g. https://github.com/astral-sh/uv/pull/3253). A main problem is `VersionOrUrl` and applying overrides, both of which assume the same requirement/URL type everywhere. In its current form, this PR increases this tech debt.

I've tried to split off PRs and commits, but the main refactoring is still a single monolithic commit so that it compiles and the tests pass.

## Demo

Adding

d1ae3b85d5/pyproject.json

as a JSON schema (v7) for `pyproject.toml` in PyCharm, you can already try the IDE support:

[dove.webm](https://github.com/astral-sh/uv/assets/6826232/c293c272-c80b-459d-8c95-8c46a8d198a1)

In *some* places in our crates, `serde` (and `rkyv`) are optional dependencies. I believe this was done out of "good sense": it follows a Rust ecosystem pattern where serde integration tends to be an opt-in crate feature. (And similarly for `rkyv`.)

However, ultimately, `uv` itself requires `serde` and `rkyv` to function. Since our crates are strictly internal, there are few consumers of our crates without `serde` (and `rkyv`) enabled. One possible benefit of optional `serde` (and `rkyv`) integration is that someone can run `cargo test -p pep440_rs`, and this will run tests _without_ `serde` or `rkyv` enabled. That in turn could lead to faster iteration by reducing compile times. But I'm not sure this is worth supporting. The iterative compilation times of individual crates are probably fast enough in debug mode, even with `serde` and `rkyv` enabled. Namely, `serde` and `rkyv` themselves shouldn't need to be recompiled in most cases. On `main`:

```
from-scratch: `cargo test -p pep440_rs --lib` 0.685s
incremental: `cargo test -p pep440_rs --lib` 0.278s
from-scratch: `cargo test -p pep440_rs --features serde,rkyv --lib` 3.948s
incremental: `cargo test -p pep440_rs --features serde,rkyv --lib` 0.321s
```

So while a from-scratch build does take significantly longer, an incremental build is about the same.
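Working through the timings above (reading the unitless `0.685` as seconds, like the other entries), enabling `serde` and `rkyv` makes a from-scratch build roughly 5.8× slower, while the incremental build is only about 15% (43 ms) slower:

```math
\frac{3.948\,\mathrm{s}}{0.685\,\mathrm{s}} \approx 5.76 \quad \text{(from scratch)}, \qquad
\frac{0.321\,\mathrm{s}}{0.278\,\mathrm{s}} \approx 1.15 \quad \text{(incremental)}
```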

The benefit of doing this change is two-fold:

1. It brings our crates into alignment with "reality." In particular, some crates were _implicitly_ relying on `serde` being enabled without explicitly declaring it. This technically means that our `Cargo.toml`s were wrong in some cases, but it is hard to observe because of feature unification in a Cargo workspace.
2. We no longer need to deal with the cognitive burden of writing `#[cfg_attr(feature = "serde", ...)]` everywhere.

This is meant to be a base on which to build. There are some parts that are implicitly incomplete and others that are explicitly incomplete. The latter are indicated by TODO comments.

Here is a non-exhaustive list of incomplete things. In many cases, these are incomplete simply because the data isn't present in a `ResolutionGraph`. Future work will need to refactor our resolver so that this data is correctly passed down.

* Not all wheels are included. Only the "selected" wheel for the current distribution is included.
* Marker expressions are always absent.
* We don't emit hashes for certain kinds of distributions (direct URLs, git, and path).
* We don't capture git information from a dependency specification. Right now, we just always emit "default branch."

There are perhaps also other, more cosmetic changes we might want to make to the format. Right now, all arrays are encoded using whatever the `toml` crate decides to do, but we might want to exert more control over this, for example by using inline tables or squashing more things into strings (like I did for `Source` and `Hash`). I think the main trade-off here is that table arrays are somewhat difficult to read (especially without indentation), whereas squashing things down into a more condensed format potentially makes future compatible additions harder.
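As a sketch of the squashed-string trade-off, assuming the `kind+url` shape of `source = "registry+https://pypi.org/simple"` and the `algorithm:digest` shape of the `hash` values in the `uv.lock` example, parsing comes down to splitting on a delimiter. `parse_source` and `parse_hash` are hypothetical helpers, not uv's parser.

```rust
/// Hypothetical sketch: split a squashed `kind+url` source string such as
/// `registry+https://pypi.org/simple` into its kind and URL.
pub fn parse_source(s: &str) -> Option<(&str, &str)> {
    // Split on the first `+` only; the URL part may itself contain `+`.
    s.split_once('+')
}

/// Hypothetical sketch: split a squashed `algorithm:digest` hash string.
pub fn parse_hash(s: &str) -> Option<(&str, &str)> {
    let (algorithm, digest) = s.split_once(':')?;
    // Reject obviously malformed digests (empty or non-hex).
    if digest.is_empty() || !digest.chars().all(|c| c.is_ascii_hexdigit()) {
        return None;
    }
    Some((algorithm, digest))
}
```

Any new piece of information has to be threaded into this string grammar (the delimiters above), which is the cost to future compatible additions; a table would simply gain a key.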

I also went lighter on the documentation here than I normally would. That's primarily because I think this code is going to go through some evolution, and I didn't want to spend too much time documenting something that is likely to change.

Finally, here's an example of the lock file format in TOML for the `anyio` dependency. I generated it with the following command:

```
cargo run -p uv -- pip compile -p3.10 ~/astral/tmp/reqs/anyio.in --unstable-uv-lock-file
```

And that writes out a `uv.lock` file:

```toml
version = 1

[[distribution]]
name = "anyio"
version = "4.3.0"
source = "registry+https://pypi.org/simple"

[[distribution.wheel]]
url = "2f20c40b45242c0b33774da0e2e34f/anyio-4.3.0-py3-none-any.whl"
hash = "sha256:048e05d0f6caeed70d731f3db756d35dcc1f35747c8c403364a8332c630441b8"

[[distribution.dependencies]]
name = "exceptiongroup"
version = "1.2.1"
source = "registry+https://pypi.org/simple"

[[distribution.dependencies]]
name = "idna"
version = "3.7"
source = "registry+https://pypi.org/simple"

[[distribution.dependencies]]
name = "sniffio"
version = "1.3.1"
source = "registry+https://pypi.org/simple"

[[distribution.dependencies]]
name = "typing-extensions"
version = "4.11.0"
source = "registry+https://pypi.org/simple"

[[distribution]]
name = "exceptiongroup"
version = "1.2.1"
source = "registry+https://pypi.org/simple"

[[distribution.wheel]]
url = "79fe92dd414cadab75055b0ae00b33/exceptiongroup-1.2.1-py3-none-any.whl"
hash = "sha256:5258b9ed329c5bbdd31a309f53cbfb0b155341807f6ff7606a1e801a891b29ad"

[[distribution]]
name = "idna"
version = "3.7"
source = "registry+https://pypi.org/simple"

[[distribution.wheel]]
url = "741d8c8280948df2ea0eda2c8b79e8/idna-3.7-py3-none-any.whl"
hash = "sha256:82fee1fc78add43492d3a1898bfa6d8a904cc97d8427f683ed8e798d07761aa0"

[[distribution]]
name = "sniffio"
version = "1.3.1"
source = "registry+https://pypi.org/simple"

[[distribution.wheel]]
url = "75a9c94214239abab1ea2cc8f38b40/sniffio-1.3.1-py3-none-any.whl"
hash = "sha256:2f6da418d1f1e0fddd844478f41680e794e6051915791a034ff65e5f100525a2"

[[distribution]]
name = "typing-extensions"
version = "4.11.0"
source = "registry+https://pypi.org/simple"

[[distribution.wheel]]
url = "936e2092671b43269810cd589ceaf5/typing_extensions-4.11.0-py3-none-any.whl"
hash = "sha256:c1f94d72897edaf4ce775bb7558d5b79d8126906a14ea5ed1635921406c0387a"
```

The only thing a `OnceMap` really needs to be able to do with a value is clone it. All extant uses benefited from having this done for them by automatically wrapping values in an `Arc`. But this isn't necessarily true for all things. For example, a value might have an `Arc` internally to make cloning cheap in other contexts, and it doesn't make sense to re-wrap it in an `Arc` just to use it with a `OnceMap`. Or, alternatively, cloning might just be cheap enough on its own that an `Arc` isn't worth it.
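A minimal, synchronous sketch of the idea: the map only requires `V: Clone`, so cheap values are stored directly and values that need sharing are wrapped in `Arc` by the caller. This is a hypothetical stand-in built on `Mutex<HashMap>`, not uv's concurrent `OnceMap`, and it holds the lock while initializing, which serializes initialization.

```rust
use std::collections::HashMap;
use std::hash::Hash;
use std::sync::Mutex;

/// Hypothetical, simplified `OnceMap`: computes each value at most once per key.
/// The only requirement on `V` is `Clone`; whether that means wrapping in `Arc`
/// is left to the caller.
pub struct OnceMap<K, V> {
    items: Mutex<HashMap<K, V>>,
}

impl<K: Eq + Hash, V: Clone> OnceMap<K, V> {
    pub fn new() -> Self {
        Self { items: Mutex::new(HashMap::new()) }
    }

    /// Return the cached value for `key`, computing and storing it on first use.
    pub fn get_or_init(&self, key: K, init: impl FnOnce() -> V) -> V {
        let mut items = self.items.lock().unwrap();
        items.entry(key).or_insert_with(init).clone()
    }
}
```

A `OnceMap<_, u32>` clones the integer directly, while a `OnceMap<_, Arc<String>>` hands out clones of the same `Arc`.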

## Summary

Resolves https://github.com/astral-sh/uv/issues/3106

## Test Plan

Added a simple test where the password provided in `UV_INDEX_URL` is hidden in the output, as expected.
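A std-only sketch of the behavior under test, assuming credentials appear in the userinfo part of the URL (`scheme://user:password@host/...`). The `redact_password` helper and the `****` mask are hypothetical; production code should use a real URL parser.

```rust
/// Hypothetical helper: replace the password in `scheme://user:password@host/...`
/// with `****` so the URL can be shown in output.
pub fn redact_password(url: &str) -> String {
    // Locate the authority section, between `://` and the next `/`.
    let Some(scheme_end) = url.find("://") else {
        return url.to_string();
    };
    let authority_start = scheme_end + 3;
    let rest = &url[authority_start..];
    let authority_len = rest.find('/').unwrap_or(rest.len());
    let authority = &rest[..authority_len];

    // Credentials live before the last `@` in the authority.
    let Some(at) = authority.rfind('@') else {
        return url.to_string();
    };
    let userinfo = &authority[..at];
    let Some(colon) = userinfo.find(':') else {
        // A username without a password is left untouched.
        return url.to_string();
    };

    let password_start = authority_start + colon + 1;
    let password_end = authority_start + at;
    format!("{}****{}", &url[..password_start], &url[password_end..])
}
```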

## Summary

This PR adds basic struct definitions along with a "workspace" concept for discovering settings. (The "workspace" terminology is used to match Ruff; I did not invent it.)

A few notes:

- We discover any `pyproject.toml` or `uv.toml` file in any parent directory of the current working directory. (We could adjust this to look at the directories of the input files.)
- We don't actually do anything with the configuration yet, but those PRs are large and I want this to be reviewed in isolation.
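A sketch of the discovery walk described in the notes, using `Path::ancestors` to visit the starting directory and each parent. `discover_settings_files` is a hypothetical helper; it returns every match nearest-first and deliberately leaves precedence between `uv.toml` and `pyproject.toml` unspecified.

```rust
use std::path::{Path, PathBuf};

/// Hypothetical sketch of settings discovery: walk from `start` up through its
/// ancestors and collect every `uv.toml` / `pyproject.toml`, nearest first.
pub fn discover_settings_files(start: &Path) -> Vec<PathBuf> {
    let mut found = Vec::new();
    // `ancestors()` yields `start` itself, then each parent up to the root.
    for dir in start.ancestors() {
        for name in ["uv.toml", "pyproject.toml"] {
            let candidate = dir.join(name);
            if candidate.is_file() {
                found.push(candidate);
            }
        }
    }
    found
}
```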

## Summary

This PR enables `--require-hashes` with unnamed requirements. The key change is that `PackageId` becomes `VersionId` (since it refers to a package at a specific version), and the new `PackageId` consists of _either_ a package name _or_ a URL. The hashes are keyed by `PackageId`, so we can generate the `RequiredHashes` before we have names for all packages, and enforce them throughout.
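A sketch of keying hashes by an identifier that exists before names are known. `PackageId` here is a simplified, hypothetical version (plain `String`s for the name and URL), and `RequiredHashes` is a stand-in map, not uv's definition.

```rust
use std::collections::HashMap;

/// Hypothetical sketch: a package is identified either by name or, for unnamed
/// requirements, by URL, so hashes can be keyed before names are known.
#[derive(Debug, Clone, PartialEq, Eq, Hash)]
pub enum PackageId {
    Name(String),
    Url(String),
}

/// Hypothetical stand-in for `RequiredHashes`: allowed digests per package.
#[derive(Debug, Default)]
pub struct RequiredHashes(HashMap<PackageId, Vec<String>>);

impl RequiredHashes {
    pub fn insert(&mut self, id: PackageId, digest: &str) {
        self.0.entry(id).or_default().push(digest.to_string());
    }

    /// `true` if `digest` is one of the hashes required for `id`.
    pub fn allows(&self, id: &PackageId, digest: &str) -> bool {
        self.0.get(id).map_or(false, |digests| digests.iter().any(|d| d == digest))
    }
}
```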

Closes #2979.

## Summary

This PR enables hash generation for URL requirements when the user provides `--generate-hashes` to `pip compile`. We already include the hashes from the registry, but today we omit hashes for URLs.

To power hash generation, we introduce a `HashPolicy` abstraction:

```rust
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum HashPolicy<'a> {
    /// No hash policy is specified.
    None,
    /// Hashes should be generated (specifically, a SHA-256 hash), but not validated.
    Generate,
    /// Hashes should be validated against a pre-defined list of hashes. If necessary, hashes should
    /// be generated so as to ensure that the archive is valid.
    Validate(&'a [HashDigest]),
}
```

All of the methods on the distribution database now accept this policy, instead of accepting `&'a [HashDigest]`.
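A sketch of how a caller might act on the policy after hashing an archive. The enum mirrors the one above; `HashDigest` is a simplified hypothetical stand-in, and the `needs_hashing` and `check` helpers are illustrative, not methods from the PR.

```rust
/// Hypothetical, simplified digest type (uv's real `HashDigest` differs).
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct HashDigest {
    pub algorithm: String,
    pub digest: String,
}

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum HashPolicy<'a> {
    None,
    Generate,
    Validate(&'a [HashDigest]),
}

impl HashPolicy<'_> {
    /// Whether the archive needs to be hashed at all under this policy.
    pub fn needs_hashing(&self) -> bool {
        !matches!(self, HashPolicy::None)
    }
}

/// Hypothetical check after hashing: under `Validate`, the computed digest
/// must match one of the expected digests; other policies always pass.
pub fn check(policy: HashPolicy<'_>, computed: &HashDigest) -> Result<(), String> {
    match policy {
        HashPolicy::None | HashPolicy::Generate => Ok(()),
        HashPolicy::Validate(expected) if expected.contains(computed) => Ok(()),
        HashPolicy::Validate(_) => Err(format!(
            "hash mismatch: {}:{}",
            computed.algorithm, computed.digest
        )),
    }
}
```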

Closes #2378.

## Summary

This PR modifies the distribution database to return both the `Metadata23` and the computed hashes when clients request metadata. No behavior changes, but this will be necessary to power `--generate-hashes`.

## Summary

If the user runs with `--generate-hashes`, and the lockfile doesn't contain _any_ hashes for a package (despite it being pinned), we should add new hashes. This mirrors running `uv pip compile --generate-hashes` for the first time with an existing lockfile.

Closes #2962.

## Summary

Right now, we have a `Hashes` representation that looks like:

```rust
use serde::Deserialize;

/// A dictionary mapping a hash name to a hex encoded digest of the file.
///
/// PEP 691 says multiple hashes can be included and the interpretation is left to the client.
#[derive(Debug, Clone, Eq, PartialEq, Default, Deserialize)]
pub struct Hashes {
    pub md5: Option<Box<str>>,
    pub sha256: Option<Box<str>>,
    pub sha384: Option<Box<str>>,
    pub sha512: Option<Box<str>>,
}
```

It stems from the PyPI API, which returns a dictionary of hashes.

We tend to pass these around as a `Vec<Hashes>`. But that's a bit strange, because each entry in that vector could contain multiple hashes. It also makes it difficult to ask questions like "Is `sha256:ab21378ca980a8` in the set of hashes?"

This PR instead treats `Hashes` as the PyPI-internal type, and uses a new `Vec<HashDigest>` everywhere in our own APIs.
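A sketch of flattening the PyPI dictionary into one digest per entry, which turns the membership question into a simple scan. `HashDigest`, `into_digests`, and `contains` are hypothetical simplifications; serde derives are omitted so the block builds with the standard library alone.

```rust
/// PyPI-style hash dictionary (mirrors the `Hashes` struct above, minus serde).
#[derive(Debug, Clone, Default, PartialEq, Eq)]
pub struct Hashes {
    pub md5: Option<Box<str>>,
    pub sha256: Option<Box<str>>,
    pub sha384: Option<Box<str>>,
    pub sha512: Option<Box<str>>,
}

/// Hypothetical flattened representation: one algorithm/digest pair per entry.
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct HashDigest {
    pub algorithm: &'static str,
    pub digest: Box<str>,
}

impl Hashes {
    /// Flatten the dictionary into individual digests, skipping absent ones.
    pub fn into_digests(self) -> Vec<HashDigest> {
        vec![
            ("md5", self.md5),
            ("sha256", self.sha256),
            ("sha384", self.sha384),
            ("sha512", self.sha512),
        ]
        .into_iter()
        .filter_map(|(algorithm, digest)| digest.map(|digest| HashDigest { algorithm, digest }))
        .collect()
    }
}

/// Answer "is `algorithm:digest` in the set of hashes?" for a flat list.
pub fn contains(digests: &[HashDigest], needle: &str) -> bool {
    digests
        .iter()
        .any(|h| format!("{}:{}", h.algorithm, h.digest) == needle)
}
```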

## Summary

This partially revives https://github.com/astral-sh/uv/pull/2135 (with some modifications) to let users opt in to looking for packages across multiple indexes.

The behavior is such that, in version selection, we take _any_ compatible version from a "higher-priority" index over the compatible versions of a "lower-priority" index, even if that means we might accept an "older" version.
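A sketch of the selection rule, with indexes ordered from highest to lowest priority and versions simplified to `(major, minor, patch)` tuples. `select_version` is hypothetical; real version handling would use PEP 440 versions and specifiers.

```rust
/// Hypothetical version, simplified to a comparable tuple.
pub type Version = (u32, u32, u32);

/// Pick a version from the first (highest-priority) index that has any
/// compatible version, even if a lower-priority index offers something newer.
pub fn select_version<'a>(
    indexes: &'a [(&'a str, Vec<Version>)],
    is_compatible: impl Fn(&Version) -> bool,
) -> Option<(&'a str, Version)> {
    for (index, versions) in indexes {
        // Within a single index, prefer the newest compatible version.
        if let Some(best) = versions.iter().filter(|&v| is_compatible(v)).max() {
            return Some((*index, *best));
        }
    }
    None
}
```

With a predicate that accepts everything below 3.0, an internal index offering 1.2.0 wins over a lower-priority index offering 2.0.0.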

Closes https://github.com/astral-sh/uv/issues/2775.

## Summary

Rather than storing the `redirects` on the resolver, this PR just reuses the "convert this URL to precise" logic when we convert to a `Resolution` after the fact. I think this is a lot simpler: it removes state from the resolver, and simplifies a lot of the hooks around distribution fetching (e.g., `get_or_build_wheel_metadata` no longer returns `(Metadata23, Option<Url>)`).

## Summary

This fixes a potential bug that revealed itself in https://github.com/astral-sh/uv/pull/2761. We don't run into this now because we always use "allowed URLs", which stores the "last" compatible URL in the map. But the use of the "raw" URL (rather than the "canonical" URL) means that other writers have to follow that same assumption and iterate over dependencies in order.

## Summary

We iterate over the project "requirements" directly in a variety of places. However, it's not always the case that an input "requirement" on its own will _actually_ be part of the resolution, since we support "overrides".

Historically, then, overrides haven't worked as expected for _direct_ dependencies (and we have some tests that demonstrate the current, "wrong" behavior). This is just a bug, but it's not really one that comes up in practice, since it's rare to apply an override to your _own_ dependency.

However, we're now considering expanding the lookahead concept to include local transitive dependencies. In that case, it becomes increasingly important that overrides and constraints are handled consistently.

This PR updates all the locations in which we iterate over requirements directly so that they respect overrides (and constraints, where necessary).
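A sketch of routing every requirement, direct or not, through the same override lookup. `Requirement` and `apply_overrides` are hypothetical simplifications (overrides keyed by package name); constraints are left out.

```rust
use std::collections::HashMap;

/// Hypothetical simplified requirement: a name plus a version specifier string.
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct Requirement {
    pub name: String,
    pub specifier: String,
}

/// Iterate over requirements, replacing any requirement whose name has an
/// override with the override(s) instead. Direct dependencies go through the
/// same path as transitive ones, so overrides apply uniformly.
pub fn apply_overrides<'a>(
    requirements: &'a [Requirement],
    overrides: &'a HashMap<String, Vec<Requirement>>,
) -> impl Iterator<Item = &'a Requirement> + 'a {
    requirements.iter().flat_map(move |requirement| {
        match overrides.get(&requirement.name) {
            Some(replacements) => replacements.iter().collect::<Vec<_>>(),
            None => vec![requirement],
        }
    })
}
```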

Previously, we did not consider installed distributions as candidates while performing resolution. Here, we update the resolver to use installed distributions that satisfy requirements instead of pulling new distributions from the registry.

The implementation details are as follows:

- We now provide `SitePackages` to the `CandidateSelector`
- If an installed distribution satisfies the requirement, we prefer it over remote distributions
- We do not want to allow installed distributions in some cases, i.e., upgrade and reinstall
  - We address this by introducing an `Exclusions` type which tracks installed packages to ignore during selection
- There's a new `ResolvedDist` wrapper with `Installed(InstalledDist)` and `Installable(Dist)` variants
  - This lets us pass already installed distributions throughout the resolver
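A sketch of the selection rule in the list above: prefer a satisfying installed distribution unless the package is in `Exclusions`, otherwise fall back to the remote candidate. The structs and the `select` function are simplified, hypothetical versions of the names mentioned, with version checks reduced to a predicate.

```rust
use std::collections::HashSet;

/// Hypothetical, simplified distributions: a name and a version string.
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct InstalledDist { pub name: String, pub version: String }

#[derive(Debug, Clone, PartialEq, Eq)]
pub struct Dist { pub name: String, pub version: String }

/// Mirrors the `ResolvedDist` idea: already installed, or to be installed.
#[derive(Debug, Clone, PartialEq, Eq)]
pub enum ResolvedDist {
    Installed(InstalledDist),
    Installable(Dist),
}

/// Packages whose installed copies must be ignored (e.g. upgrade, reinstall).
#[derive(Debug, Default)]
pub struct Exclusions(HashSet<String>);

impl Exclusions {
    pub fn exclude(&mut self, name: &str) { self.0.insert(name.to_string()); }
    pub fn contains(&self, name: &str) -> bool { self.0.contains(name) }
}

/// Prefer a satisfying installed distribution unless it is excluded.
pub fn select(
    installed: Option<&InstalledDist>,
    remote: Option<Dist>,
    satisfies: impl Fn(&str) -> bool,
    exclusions: &Exclusions,
) -> Option<ResolvedDist> {
    if let Some(dist) = installed {
        if !exclusions.contains(&dist.name) && satisfies(&dist.version) {
            return Some(ResolvedDist::Installed(dist.clone()));
        }
    }
    remote.map(ResolvedDist::Installable)
}
```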

The user-facing behavior is thoroughly covered in the tests, but briefly:

- Installing a package that depends on an already-installed package prefers the local version over the index
- Installing a package with a name that matches an already-installed URL package does not reinstall from the index
- Reinstalling (`--reinstall`) a package by name _will_ pull from the index even if an already-installed URL package is present
- To reinstall the URL package, you must specify the URL in the request

Closes https://github.com/astral-sh/uv/issues/1661

Addresses:

- https://github.com/astral-sh/uv/issues/1476
- https://github.com/astral-sh/uv/issues/1856
- https://github.com/astral-sh/uv/issues/2093
- https://github.com/astral-sh/uv/issues/2282
- https://github.com/astral-sh/uv/issues/2383
- https://github.com/astral-sh/uv/issues/2560

## Test plan

- [x] Reproduction at `charlesnicholson/uv-pep420-bug` passes
- [x] Unit test for editable package ([#1476](https://github.com/astral-sh/uv/issues/1476))
- [x] Unit test for previously installed package with empty registry
- [x] Unit test for local non-editable package
- [x] Unit test for new version available locally but not in registry ([#2093](https://github.com/astral-sh/uv/issues/2093))
- ~[ ] Unit test for wheel not available in registry but already installed locally ([#2282](https://github.com/astral-sh/uv/issues/2282))~ (seems complicated and not worthwhile)
- [x] Unit test for install from URL dependency then with matching version ([#2383](https://github.com/astral-sh/uv/issues/2383))
- [x] Unit test for install of new package that depends on installed package does not change version ([#2560](https://github.com/astral-sh/uv/issues/2560))
- [x] Unit test that `pip compile` does _not_ consider installed packages

## Summary

This PR removes the custom `DistFinder` that we use in `pip sync`. This originally existed because `VersionMap` wasn't lazy, and so we saved a lot of time in `DistFinder` by reading distribution data lazily. But now, AFAICT, there's really no benefit. Maintaining `DistFinder` means we effectively have to maintain two resolvers, and end up fixing bugs in `DistFinder` that don't exist in the `Resolver` (like #2688).

Closes #2694.

Closes #2443.

## Test Plan

I ran this benchmark a bunch. It's basically a wash: sometimes one is faster than the other.

```
❯ python -m scripts.bench \
    --uv-path ./target/release/main \
    --uv-path ./target/release/uv \
    scripts/requirements/compiled/trio.txt --min-runs 50 --benchmark install-warm --warmup 25
Benchmark 1: ./target/release/main (install-warm)
  Time (mean ± σ): 54.0 ms ± 10.6 ms [User: 8.7 ms, System: 98.1 ms]
  Range (min … max): 45.5 ms … 94.3 ms 50 runs

  Warning: Statistical outliers were detected. Consider re-running this benchmark on a quiet PC without any interferences from other programs. It might help to use the '--warmup' or '--prepare' options.

Benchmark 2: ./target/release/uv (install-warm)
  Time (mean ± σ): 50.7 ms ± 9.2 ms [User: 8.7 ms, System: 98.6 ms]
  Range (min … max): 44.0 ms … 98.6 ms 50 runs

  Warning: The first benchmarking run for this command was significantly slower than the rest (77.6 ms). This could be caused by (filesystem) caches that were not filled until after the first run. You should consider using the '--warmup' option to fill those caches before the actual benchmark. Alternatively, use the '--prepare' option to clear the caches before each timing run.

Summary
  './target/release/uv (install-warm)' ran
    1.06 ± 0.29 times faster than './target/release/main (install-warm)'
```

With https://github.com/pubgrub-rs/pubgrub/pull/190, pubgrub attaches all types to a dependency provider to reduce the number of generics. We now need a dummy dependency provider to emulate this. On the plus side, `pep440_rs` drops its pubgrub dependency.

## Summary

We strip extras by default, but there are some valid use cases in which they're required (see the linked issue). This PR doesn't change our default, but it does add `--no-strip-extras`, which lets users preserve extras in the output requirements.
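For illustration, stripping extras turns `transformers[torch]==4.39.3` into `transformers==4.39.3`, while `--no-strip-extras` keeps the bracketed part. The `strip_extras` helper is a hypothetical sketch that only handles this simple `name[extras]==version` shape; real requirements need a PEP 508 parser.

```rust
/// Hypothetical sketch: drop the `[...]` extras from a simple pinned
/// requirement line such as `name[extra1,extra2]==1.0`.
pub fn strip_extras(line: &str) -> String {
    match (line.find('['), line.find(']')) {
        // Only strip a well-formed bracket pair.
        (Some(open), Some(close)) if open < close => {
            format!("{}{}", &line[..open], &line[close + 1..])
        }
        _ => line.to_string(),
    }
}
```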

Closes https://github.com/astral-sh/uv/issues/1595.

## Summary

When a user runs with `--output-file` and `--generate-hashes`, we should _only_ update the hashes if the pinned version itself changes.
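A sketch of the rule, assuming we know the previously pinned version and its hashes from the existing output file. `hashes_to_write` is hypothetical; it also regenerates when the existing entry has no hashes at all, matching the earlier behavior for pinned packages without any hashes.

```rust
/// Hypothetical sketch: keep existing hashes when the pinned version is
/// unchanged and the existing entry already lists hashes; otherwise generate.
pub fn hashes_to_write(
    previous: Option<(&str, &[String])>,
    new_version: &str,
    generate: impl FnOnce() -> Vec<String>,
) -> Vec<String> {
    match previous {
        Some((old_version, old_hashes))
            if old_version == new_version && !old_hashes.is_empty() =>
        {
            old_hashes.to_vec()
        }
        // New package, version bump, or a pinned entry with no hashes.
        _ => generate(),
    }
}
```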

Closes https://github.com/astral-sh/uv/issues/1530.

## Summary

It turns out that when we iterate over the incompatibilities of a package, PubGrub will _also_ show us the inverse dependencies. I suspect this was rare, because we have a version check at the bottom... So this specifically required that you had some dependency that didn't end up appearing in the output resolution, but that matched the version constraints of the package you care about.

In this case, `langchain-community` depends on `langchain-core`. So we were seeing an incompatibility like:

```rust
FromDependencyOf(Package(PackageName("langchain-community"), None, None), Range { segments: [(Included("0.0.10"), Included("0.0.10")), (Included("0.0.11"), Included("0.0.11"))] }, Package(PackageName("langchain-core"), None, None), Range { segments: [(Included("0.1.8"), Excluded("0.2"))] })
```

where we were iterating over `langchain-core` and looking for version `0.0.11`... which happens to match `langchain-community`. (`langchain-community` was omitted from the resolution; hence, it didn't exist in the map.)

Closes https://github.com/astral-sh/uv/issues/2358.

## Summary

PyPI now supports Metadata 2.2, which means distributions with Metadata 2.2-compliant metadata will start to appear. The upside is that if a source distribution includes a `PKG-INFO` file with (1) a metadata version of 2.2 or greater, and (2) no dynamic fields (at least, of the fields we rely on), we can read the metadata from the `PKG-INFO` file directly rather than running _any_ of the PEP 517 build hooks.
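A sketch of the check, assuming `PKG-INFO` is read as `Key: value` headers up to the first blank line. The set of fields we "rely on" is an assumption here (`Requires-Dist`, `Requires-Python`, `Provides-Extra`), as is the `pkg_info_is_static` name; header keys are matched case-sensitively for brevity.

```rust
/// Assumed, illustrative list of fields the resolver relies on.
const RELIED_ON: [&str; 3] = ["Requires-Dist", "Requires-Python", "Provides-Extra"];

/// Hypothetical sketch: `true` if `PKG-INFO` declares Metadata-Version >= 2.2
/// and none of the relied-on fields are marked `Dynamic`.
pub fn pkg_info_is_static(pkg_info: &str) -> bool {
    let mut version: Option<(u32, u32)> = None;
    let mut dynamic: Vec<String> = Vec::new();

    // Headers end at the first blank line; the body (description) follows.
    for line in pkg_info.lines() {
        if line.is_empty() {
            break;
        }
        let Some((key, value)) = line.split_once(':') else { continue };
        let value = value.trim();
        match key.trim() {
            "Metadata-Version" => {
                let mut parts = value.split('.').map(|p| p.parse::<u32>().ok());
                if let (Some(Some(major)), Some(Some(minor))) = (parts.next(), parts.next()) {
                    version = Some((major, minor));
                }
            }
            // Field names are case-insensitive, so compare lowercased.
            "Dynamic" => dynamic.push(value.to_ascii_lowercase()),
            _ => {}
        }
    }

    let recent_enough = matches!(version, Some(v) if v >= (2, 2));
    let relied_on_static = RELIED_ON
        .iter()
        .all(|field| !dynamic.contains(&field.to_ascii_lowercase()));
    recent_enough && relied_on_static
}
```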

Closes https://github.com/astral-sh/uv/issues/2009.

## Summary

In uv, we're going to use `--no-emit-package` for this, to convey that the package will be included in the resolution but not in the output file. It also mirrors flags like `--emit-index-url`.

We're also including an `--unsafe-package` alias.

Closes https://github.com/astral-sh/uv/issues/1415.

## Summary

This revives a PR from long ago (https://github.com/astral-sh/uv/pull/383 and https://github.com/zanieb/pubgrub/pull/24) that modifies how we deal with dependencies that are declared multiple times within a single package.

To quote from the originating PR:

> Uses an experimental pubgrub branch (#370) that allows us to handle multiple version ranges for a single dependency to the solver which results in better error messages because the derivation tree contains all of the relevant versions. Previously, the version ranges were merged (by us) in the resolver before handing them to pubgrub since only one range could be provided per package. Since we don't merge the versions anymore, we no longer give the solver an empty range for conflicting requirements; instead the solver comes to that conclusion from the provided versions. You can see the improved error message for direct dependencies in [this snapshot](https://github.com/astral-sh/puffin/pull/383/files#diff-a0437f2c20cde5e2f15199a3bf81a102b92580063268417847ec9c793a115bd0).

The main issue with that PR was around its handling of URL dependencies, so this PR _also_ refactors how we handle those. Previously, we stored URL dependencies on `PubGrubPackage`, but they were omitted from the hash and equality implementations of `PubGrubPackage`. This led to some really careful codepaths wherein we had to ensure that we always visited URLs before non-URL packages, so that the URL-inclusive versions were included in any hashmaps, etc. I considered preserving this approach, but it would require us to rely on lots of internal details of PubGrub (since we'd now be relying on PubGrub to merge those packages in the "right" order).

So, instead, we now _always_ set the URL on a given package whenever that package was _given_ a URL upfront. I think this is easier to reason about: if the user provided a URL for `flask`, then we should just always add the URL for `flask`. If we see some other URL for `flask`, we error, like before. If we see some unknown URL for `flask`, we error, like before.
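A sketch of the URL rule, with the URLs given upfront recorded by package name. `attach_url` and its error messages are hypothetical; it returns the URL to attach to the package, or an error for a conflicting or unknown URL.

```rust
use std::collections::HashMap;

/// Hypothetical sketch: every occurrence of a package that was given a URL
/// upfront gets that URL attached; other URLs are errors.
pub fn attach_url<'a>(
    allowed: &'a HashMap<String, String>,
    name: &str,
    declared_url: Option<&str>,
) -> Result<Option<&'a str>, String> {
    match (allowed.get(name), declared_url) {
        // Given a URL upfront: always attach it, even if this edge omits it.
        (Some(url), None) => Ok(Some(url.as_str())),
        (Some(url), Some(declared)) if url == declared => Ok(Some(url.as_str())),
        (Some(url), Some(declared)) => {
            Err(format!("conflicting URLs for `{name}`: `{url}` vs `{declared}`"))
        }
        // A URL we were never told about.
        (None, Some(declared)) => Err(format!("disallowed URL for `{name}`: `{declared}`")),
        // Plain registry dependency.
        (None, None) => Ok(None),
    }
}
```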

Closes https://github.com/astral-sh/uv/issues/1522.

Closes https://github.com/astral-sh/uv/issues/1821.

Closes https://github.com/astral-sh/uv/issues/1615.

## Summary

Hello there! The motivation for this feature is described in #1678.

## Test Plan

I've added unit tests and also tested this manually on my work project by comparing it to the original `pip-compile` output; the result closely matches the lock file generated by `pip-compile`.

## Summary

If you're developing on a package like `attrs` locally, and it has a recursive extra like `attrs[dev]`, it turns out that we then try to find the `attrs` in `attrs[dev]` from the registry, rather than recognizing that it's part of the editable.

This PR fixes the issue by making editables slightly more first-class throughout the resolver. Instead of mocking metadata, we explicitly check for extras in various places. Part of the problem here is that we treated editables as URL dependencies, but when we saw an _extra_ like `attrs[dev]`, we didn't map that back to the URL. So now, we treat them as registry dependencies, but with the appropriate guardrails throughout.

Closes https://github.com/astral-sh/uv/issues/1447.

## Test Plan

- Cloned `attrs`.
- Ran `cargo run venv && cargo run pip install -e ".[dev]" -v`.

First, replace all usages in files in-place. I used my editor for this. If someone wants to add a one-liner, that'd be fun.

Then, update directory and file names:

```
# Run twice for nested directories
find . -type d -print0 | xargs -0 rename s/puffin/uv/g
find . -type d -print0 | xargs -0 rename s/puffin/uv/g

# Update files
find . -type f -print0 | xargs -0 rename s/puffin/uv/g
```

Then add all the files again:

```
# Add all the files again
git add crates
git add python/uv

# This one needs a force-add
git add -f crates/uv-trampoline
```