The only pubgrub error that can occur is a `NoSolutionError`, and the

only place it can occur is `unit_propagation`; all other variants of

`PubGrubError` are unreachable. By changing the return type on pubgrub's

side (https://github.com/astral-sh/pubgrub/pull/28), we can remove the

pattern matching and the `unreachable!()` asserts on `PubGrubError`.
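
A minimal sketch of what the narrower return type buys us. The names below (`NoSolutionError` as a plain struct, the `conflict` parameter) are simplified stand-ins for illustration, not the actual pubgrub or uv definitions:

```rust
use std::fmt;

/// Hypothetical stand-in for the one error the solver can actually produce.
#[derive(Debug, PartialEq)]
pub struct NoSolutionError {
    pub derivation: String,
}

impl fmt::Display for NoSolutionError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        write!(f, "no solution found: {}", self.derivation)
    }
}

impl std::error::Error for NoSolutionError {}

/// Stand-in for unit propagation: it returns the narrow error type directly,
/// so callers have no other variants to rule out.
pub fn unit_propagation(conflict: Option<&str>) -> Result<(), NoSolutionError> {
    match conflict {
        Some(derivation) => Err(NoSolutionError {
            derivation: derivation.to_string(),
        }),
        None => Ok(()),
    }
}

/// The caller propagates with `?`; there is no `match` with `unreachable!()` arms.
pub fn solve(conflict: Option<&str>) -> Result<&'static str, NoSolutionError> {
    unit_propagation(conflict)?;
    Ok("resolved")
}
```

With a single error type, the error wrapper can be built in one step at the call site instead of being patched up later.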

Our pubgrub error wrapper used to have a two-phase initialization,

first mostly stubs in `solve[_tracked]()` and then adding the actual

context in `resolve()`. When constructing the error in `solve` we

already have all this context, so we can unify this to a regular

constructor and remove the special casing in `resolve()` and `hints()`.

Downstack PR: #4481

## Introduction

We support forking the dependency resolution to support conflicting

registry requirements for different platforms, say one package range is

required for an older python version while a newer is required for newer

python versions, or dependencies that are different per platform. We

need to extend this support to direct URL requirements.

```toml

dependencies = [

"iniconfig @ 62565a6e1ceac6173dc9db836a5b46/iniconfig-2.0.0-py3-none-any.whl ; python_version >= '3.12'",

"iniconfig @ b3c12c6d70988d7baea9578f3c48f3/iniconfig-1.1.1-py2.py3-none-any.whl ; python_version < '3.12'"

]

```

This did not work because `Urls` was built on the assumption that there

is a single allowed URL per package. We collect all allowed URLs ahead of

resolution by following direct URL dependencies (including path

dependencies) transitively, i.e. a registry distribution can't require a

URL.

## The same package can have Registry and URL requirements

Consider the following two cases:

requirements.in:

```text

werkzeug==2.0.0

werkzeug @ 960bb4017c4aed12b5ed8b78e0153e/Werkzeug-2.0.0-py3-none-any.whl

```

pyproject.toml:

```toml

dependencies = [

"iniconfig == 1.1.1 ; python_version < '3.12'",

"iniconfig @ git+https://github.com/pytest-dev/iniconfig@93f5930e668c0d1ddf4597e38dd0dea4e2665e7a ; python_version >= '3.12'",

]

```

In the first case, we want the URL to override the registry dependency;

in the second case we want to fork and have one branch use the registry

and the other the URL. We have to know about this in

`PubGrubRequirement::from_registry_requirement`, but we only fork after

the current method.

Consider the following case too:

a:

```

c==1.0.0

b @ https://b.zip

```

b:

```

c @ https://c_new.zip ; python_version >= '3.12'

c @ https://c_old.zip ; python_version < '3.12'

```

When we convert the requirements of `a`, we can't know the url of `c`

yet. The solution is to remove the `Url` from `PubGrubPackage`: The

`Url` is redundant with `PackageName`, there can be only one url per

package name per fork. We now do the following: We track the urls from

requirements in `PubGrubDependency`. After forking, we call

`add_package_version_dependencies` where we apply override URLs, check

if the URL is allowed and check if the url is unique in this fork. When

we request a distribution, we ask the fork urls for the real URL. Since

we prioritize url dependencies over registry dependencies and skip

packages with `Urls` entries in pre-visiting, we already know when fetching

a package whether it has a url or not.

## URL conflicts

pyproject.toml (invalid):

```toml

dependencies = [

"iniconfig @ e96292c7f723f1fa332fe4ed6dfbec/iniconfig-1.1.0.tar.gz",

"iniconfig @ b3c12c6d70988d7baea9578f3c48f3/iniconfig-1.1.1-py2.py3-none-any.whl ; python_version < '3.12'",

"iniconfig @ 62565a6e1ceac6173dc9db836a5b46/iniconfig-2.0.0-py3-none-any.whl ; python_version >= '3.12'",

]

```

On the fork state, we keep `ForkUrls` that check for conflicts after

forking, rejecting the third case because we added two packages of the

same name with different URLs.
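
A minimal sketch of the per-fork check, assuming URLs are compared as plain strings. The real `ForkUrls` works on parsed URL types, and the error type and method names here are hypothetical:

```rust
use std::collections::HashMap;

/// Simplified per-fork URL table: at most one URL per package name.
#[derive(Debug, Default, Clone)]
pub struct ForkUrls {
    urls: HashMap<String, String>,
}

/// Hypothetical error for two different URLs of the same package in one fork.
#[derive(Debug, PartialEq)]
pub struct ConflictingUrls {
    pub package: String,
    pub existing: String,
    pub new: String,
}

impl ForkUrls {
    /// Record `url` for `package`, failing if this fork already uses a different URL.
    pub fn insert(&mut self, package: &str, url: &str) -> Result<(), ConflictingUrls> {
        match self.urls.get(package) {
            Some(existing) if existing.as_str() != url => Err(ConflictingUrls {
                package: package.to_string(),
                existing: existing.clone(),
                new: url.to_string(),
            }),
            // Same URL seen again: nothing to do.
            Some(_) => Ok(()),
            None => {
                self.urls.insert(package.to_string(), url.to_string());
                Ok(())
            }
        }
    }

    /// The URL this fork uses for `package`, if any.
    pub fn get(&self, package: &str) -> Option<&str> {
        self.urls.get(package).map(String::as_str)
    }
}
```

Each fork starts from its own copy of the table, so two forks may hold different URLs for the same name while a single fork may not.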

We need to flatten out the requirements before transformation into

pubgrub requirements to get the full list of other requirements which

may contain a URL, which was changed in a previous PR: #4430.

## Complex Example

a:

```toml

dependencies = [

# Force a split

"anyio==4.3.0 ; python_version >= '3.12'",

"anyio==4.2.0 ; python_version < '3.12'",

# Include URLs transitively

"b"

]

```

b:

```toml

dependencies = [

# Only one is used in each split.

"b1 ; python_version < '3.12'",

"b2 ; python_version >= '3.12'",

"b3 ; python_version >= '3.12'",

]

```

b1:

```toml

dependencies = [

"iniconfig @ b3c12c6d70988d7baea9578f3c48f3/iniconfig-1.1.1-py2.py3-none-any.whl",

]

```

b2:

```toml

dependencies = [

"iniconfig @ 62565a6e1ceac6173dc9db836a5b46/iniconfig-2.0.0-py3-none-any.whl",

]

```

b3:

```toml

dependencies = [

"iniconfig @ e96292c7f723f1fa332fe4ed6dfbec/iniconfig-1.1.0.tar.gz",

]

```

In this example, all packages are url requirements (directory

requirements) and the root package is `a`. We first split on `a`, `b`

being in each split. In the first fork, we reach `b1`, the fork URLs are

empty, we insert the iniconfig 1.1.1 URL, and then we skip over `b2` and

`b3` since the marker is disjoint with the fork markers. In the second

fork, we skip over `b1`, visit `b2`, insert the iniconfig 2.0.0 URL into

the again empty fork URLs, then visit `b3` and try to insert the

iniconfig 1.1.0 URL. At this point we find a conflict for the iniconfig

URL and error.

## Closing

The git tests are slow, but they make the best example for different URL

types I could find.

Part of #3927. This PR does not handle `Locals` or pre-releases yet.

## Summary

This PR adds a lowering similar to that seen in

https://github.com/astral-sh/uv/pull/3100, but this time, for markers.

Like `PubGrubPackageInner::Extra`, we now have

`PubGrubPackageInner::Marker`. The dependencies of the `Marker` are

`PubGrubPackageInner::Package` with and without the marker.

As an example of why this is useful: assume we have `urllib3>=1.22.0` as

a direct dependency. Later, we see `urllib3 ; python_version > '3.7'` as

a transitive dependency. As-is, we might (for some reason) pick a very

old version of `urllib3` to satisfy `urllib3 ; python_version > '3.7'`,

then attempt to fetch its dependencies, which could even involve

building a very old version of `urllib3 ; python_version > '3.7'`. Once

we fetch the dependencies, we would see that `urllib3` at the same

version is _also_ a dependency (because we tack it on). In the new

scheme though, as soon as we "choose" the very old version of `urllib3 ;

python_version > '3.7'`, we'd then see that `urllib3` (the base package)

is also a dependency; so we see a conflict before we even fetch the

dependencies of the old variant.

With this, I can successfully resolve the case in #4099.

Closes https://github.com/astral-sh/uv/issues/4099.

Follow-up to #4016.

This exposes `Range` and `PubGrubSpecifier` outside the resolver in order to

use pubgrub's union, creating a dependency edge we don't really want.

## Summary

Externally, development dependencies are currently structured as a flat

list of PEP 508-compatible requirements:

```toml

[tool.uv]

dev-dependencies = ["werkzeug"]

```

When locking, we lock all development dependencies; when syncing, users

can provide `--dev`.

Internally, though, we model them as dependency groups, similar to

Poetry, PDM, and [PEP 735](https://peps.python.org/pep-0735). This

enables us to change out the user-facing frontend without changing the

internal implementation, once we've decided how these should be exposed

to users.

A few important decisions encoded in the implementation (which we can

change later):

1. Groups are enabled globally, for all dependencies. This differs from

extras, which are enabled on a per-requirement basis. Note, however,

that we'll only discover groups for uv-enabled packages anyway.

2. Installing a group requires installing the base package. We rely on

this in PubGrub to ensure that we resolve to the same version (even

though we only expect groups to come from workspace dependencies anyway,

which are unique). But anyway, that's encoded in the resolver right now,

just as it is for extras.

## Summary

I believe this is no longer necessary. Part of the problem here is that

we can't _know_ the full set of available Python versions, especially

once we start resolving against a `Requires-Python` rather than a fixed

set of two versions.

When parsing requirements from any source, directly parse the url parts

(and reject unsupported urls) instead of parsing url parts at a later

stage. This removes a bunch of error branches and concludes the work of

parsing url parts once and passing them around everywhere.

Many usages of the assembled `VerbatimUrl` remain, but these can be

removed incrementally.

Please review commit-by-commit.

Pubgrub stores incompatibilities as (package name, version range)

tuples, meaning it needs to clone the package name for each

incompatibility, and each non-borrowed operation on incompatibilities.

https://github.com/astral-sh/uv/pull/3673 made me realize that

`PubGrubPackage` has gotten large (expensive to copy), so like `Version`

and other structs, I've added an `Arc` wrapper around it.

It's a pity clippy forbids `.deref()`, it's less opaque than `&**` and

has IDE support (clicking on `.deref()` jumps to the right impl).
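
For reference, the wrapper pattern looks roughly like this. The variants of `PubGrubPackageInner` are cut down to a hypothetical minimum here, and `shares_allocation` exists only to make the effect observable:

```rust
use std::ops::Deref;
use std::sync::Arc;

/// Simplified payload; the real type carries more fields and variants.
#[derive(Debug, Clone, PartialEq, Eq, Hash)]
pub enum PubGrubPackageInner {
    Root,
    Package { name: String, extra: Option<String> },
}

/// Cheap-to-clone handle: cloning bumps a reference count instead of
/// copying the payload.
#[derive(Debug, Clone, PartialEq, Eq, Hash)]
pub struct PubGrubPackage(Arc<PubGrubPackageInner>);

impl From<PubGrubPackageInner> for PubGrubPackage {
    fn from(inner: PubGrubPackageInner) -> Self {
        Self(Arc::new(inner))
    }
}

impl Deref for PubGrubPackage {
    type Target = PubGrubPackageInner;

    fn deref(&self) -> &Self::Target {
        &self.0
    }
}

/// Matching on the inner enum goes through `&**package`.
pub fn name(package: &PubGrubPackage) -> Option<&str> {
    match &**package {
        PubGrubPackageInner::Root => None,
        PubGrubPackageInner::Package { name, .. } => Some(name.as_str()),
    }
}

/// True if both handles point at the same allocation.
pub fn shares_allocation(a: &PubGrubPackage, b: &PubGrubPackage) -> bool {
    Arc::ptr_eq(&a.0, &b.0)
}
```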

## Benchmarks

It looks like this matters most for complex resolutions, I assume

because they carry larger `PubGrubPackageInner::Package` and

`PubGrubPackageInner::Extra` types.

```bash

hyperfine --warmup 5 "./uv-main pip compile -q ./scripts/requirements/jupyter.in" "./uv-branch pip compile -q ./scripts/requirements/jupyter.in"

hyperfine --warmup 5 "./uv-main pip compile -q ./scripts/requirements/airflow.in" "./uv-branch pip compile -q ./scripts/requirements/airflow.in"

hyperfine --warmup 5 "./uv-main pip compile -q ./scripts/requirements/boto3.in" "./uv-branch pip compile -q ./scripts/requirements/boto3.in"

```

```

Benchmark 1: ./uv-main pip compile -q ./scripts/requirements/jupyter.in

Time (mean ± σ): 18.2 ms ± 1.6 ms [User: 14.4 ms, System: 26.0 ms]

Range (min … max): 15.8 ms … 22.5 ms 181 runs

Benchmark 2: ./uv-branch pip compile -q ./scripts/requirements/jupyter.in

Time (mean ± σ): 17.8 ms ± 1.4 ms [User: 14.4 ms, System: 25.3 ms]

Range (min … max): 15.4 ms … 23.1 ms 159 runs

Summary

./uv-branch pip compile -q ./scripts/requirements/jupyter.in ran

1.02 ± 0.12 times faster than ./uv-main pip compile -q ./scripts/requirements/jupyter.in

```

```

Benchmark 1: ./uv-main pip compile -q ./scripts/requirements/airflow.in

Time (mean ± σ): 153.7 ms ± 3.5 ms [User: 165.2 ms, System: 157.6 ms]

Range (min … max): 150.4 ms … 163.0 ms 19 runs

Benchmark 2: ./uv-branch pip compile -q ./scripts/requirements/airflow.in

Time (mean ± σ): 123.9 ms ± 4.6 ms [User: 152.4 ms, System: 133.8 ms]

Range (min … max): 118.4 ms … 138.1 ms 24 runs

Summary

./uv-branch pip compile -q ./scripts/requirements/airflow.in ran

1.24 ± 0.05 times faster than ./uv-main pip compile -q ./scripts/requirements/airflow.in

```

```

Benchmark 1: ./uv-main pip compile -q ./scripts/requirements/boto3.in

Time (mean ± σ): 327.0 ms ± 3.8 ms [User: 344.5 ms, System: 71.6 ms]

Range (min … max): 322.7 ms … 334.6 ms 10 runs

Benchmark 2: ./uv-branch pip compile -q ./scripts/requirements/boto3.in

Time (mean ± σ): 311.2 ms ± 3.1 ms [User: 339.3 ms, System: 63.1 ms]

Range (min … max): 307.8 ms … 317.0 ms 10 runs

Summary

./uv-branch pip compile -q ./scripts/requirements/boto3.in ran

1.05 ± 0.02 times faster than ./uv-main pip compile -q ./scripts/requirements/boto3.in

```


## Summary

This PR introduces parallelism to the resolver. Specifically, we can

perform PubGrub resolution on a separate thread, while keeping all I/O

on the tokio thread. We already have the infrastructure set up for this

with the channel and `OnceMap`, which makes this change relatively

simple. The big change needed to make this possible is removing the

lifetimes on some of the types that need to be shared between the

resolver and pubgrub thread.

A related PR, https://github.com/astral-sh/uv/pull/1163, found that

adding `yield_now` calls improved throughput. With optimal scheduling we

might be able to get away with everything on the same thread here.

However, in the ideal pipeline with perfect prefetching, the resolution

and prefetching can run completely in parallel without depending on one

another. While this would be very difficult to achieve, even with our

current prefetching pattern we see a consistent performance improvement

from parallelism.

This does also require reverting a few of the changes from

https://github.com/astral-sh/uv/pull/3413, but not all of them. The

sharing is isolated to the resolver task.
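
The shape of the split can be sketched with only the standard library. This is an illustration under simplifying assumptions, not the actual implementation: the calling thread stands in for the tokio runtime, `fetch_dependencies` fakes the I/O, and the `Request` type is hypothetical:

```rust
use std::sync::mpsc;
use std::thread;

/// Hypothetical request sent from the solver thread to the I/O side.
pub enum Request {
    Metadata {
        package: String,
        reply: mpsc::Sender<Vec<String>>,
    },
}

/// Stand-in for network I/O: returns the dependencies of a package.
pub fn fetch_dependencies(package: &str) -> Vec<String> {
    match package {
        "a" => vec!["b".to_string(), "c".to_string()],
        "b" => vec!["c".to_string()],
        _ => Vec::new(),
    }
}

/// Run the CPU-bound solver loop on its own thread while the calling
/// thread serves metadata requests.
pub fn resolve(root: &str) -> Vec<String> {
    let (requests, inbox) = mpsc::channel::<Request>();
    let root = root.to_string();

    let solver = thread::spawn(move || {
        let mut resolved: Vec<String> = Vec::new();
        let mut queue = vec![root];
        while let Some(package) = queue.pop() {
            if resolved.contains(&package) {
                continue;
            }
            let (reply, response) = mpsc::channel();
            requests
                .send(Request::Metadata {
                    package: package.clone(),
                    reply,
                })
                .expect("I/O side hung up");
            queue.extend(response.recv().expect("no metadata reply"));
            resolved.push(package);
        }
        resolved.sort();
        // `requests` is dropped when this closure returns, which ends the
        // serving loop on the other thread.
        resolved
    });

    // The calling thread plays the role of the I/O runtime.
    for request in inbox {
        match request {
            Request::Metadata { package, reply } => {
                // Ignore send errors: the solver may have stopped waiting.
                let _ = reply.send(fetch_dependencies(&package));
            }
        }
    }

    solver.join().expect("solver thread panicked")
}
```

Everything moved into the spawned closure has to be owned and `Send`, which is why the borrowed lifetimes on the shared types had to go.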

## Test Plan

On smaller tasks performance is mixed with ~2% improvements/regressions

on both sides. However, on medium-large resolution tasks we see the

benefits of parallelism, with improvements anywhere from 10-50%.

```

./scripts/requirements/jupyter.in

Benchmark 1: ./target/profiling/baseline (resolve-warm)

Time (mean ± σ): 29.2 ms ± 1.8 ms [User: 20.3 ms, System: 29.8 ms]

Range (min … max): 26.4 ms … 36.0 ms 91 runs

Benchmark 2: ./target/profiling/parallel (resolve-warm)

Time (mean ± σ): 25.5 ms ± 1.0 ms [User: 19.5 ms, System: 25.5 ms]

Range (min … max): 23.6 ms … 27.8 ms 99 runs

Summary

./target/profiling/parallel (resolve-warm) ran

1.15 ± 0.08 times faster than ./target/profiling/baseline (resolve-warm)

```

```

./scripts/requirements/boto3.in

Benchmark 1: ./target/profiling/baseline (resolve-warm)

Time (mean ± σ): 487.1 ms ± 6.2 ms [User: 464.6 ms, System: 61.6 ms]

Range (min … max): 480.0 ms … 497.3 ms 10 runs

Benchmark 2: ./target/profiling/parallel (resolve-warm)

Time (mean ± σ): 430.8 ms ± 9.3 ms [User: 529.0 ms, System: 77.2 ms]

Range (min … max): 417.1 ms … 442.5 ms 10 runs

Summary

./target/profiling/parallel (resolve-warm) ran

1.13 ± 0.03 times faster than ./target/profiling/baseline (resolve-warm)

```

```

./scripts/requirements/airflow.in

Benchmark 1: ./target/profiling/baseline (resolve-warm)

Time (mean ± σ): 478.1 ms ± 18.8 ms [User: 482.6 ms, System: 205.0 ms]

Range (min … max): 454.7 ms … 508.9 ms 10 runs

Benchmark 2: ./target/profiling/parallel (resolve-warm)

Time (mean ± σ): 308.7 ms ± 11.7 ms [User: 428.5 ms, System: 209.5 ms]

Range (min … max): 287.8 ms … 323.1 ms 10 runs

Summary

./target/profiling/parallel (resolve-warm) ran

1.55 ± 0.08 times faster than ./target/profiling/baseline (resolve-warm)

```

## Summary

I think this is an overall good change because it explicitly encodes (in

the type system) something that was previously implicit. I'm not a huge

fan of the names here, open to input.

It covers some of https://github.com/astral-sh/uv/issues/3506 but I

don't think it _closes_ it.

Pubgrub got a new feature where all unavailability is a custom type, instead

of the reasonless `UnavailableDependencies` and our custom `String` type

previously (https://github.com/pubgrub-rs/pubgrub/pull/208). This PR

introduces an `UnavailableReason` that tracks either an entire package

being unusable, or a specific version. The error messages now also track

this difference properly.

The pubgrub commit is our main rebased onto the merged

https://github.com/pubgrub-rs/pubgrub/pull/208, I'll push

`konsti/main-rebase-generic-reason` to `main` after checking for rebase

problems.

## Summary

All of the resolver code is run on the main thread, so a lot of the

`Send` bounds and uses of `DashMap` and `Arc` are unnecessary. We could

also switch to using single-threaded versions of `Mutex` and `Notify` in

some places, but there isn't really a crate that provides those I would

be comfortable with using.

The `Arc` in `OnceMap` can't easily be removed because of the uv-auth

code which uses the

[reqwest-middleware](https://docs.rs/reqwest-middleware/latest/reqwest_middleware/trait.Middleware.html)

crate, which seems to add unnecessary `Send` bounds because of

`async-trait`. We could duplicate the code and create a `OnceMapLocal`

variant, but I don't feel that's worth it.

## Introduction

PEP 621 is limited. Specifically, it lacks

* Relative path support

* Editable support

* Workspace support

* Index pinning or any sort of index specification

The semantics of urls are a custom extension, PEP 440 does not specify

how to use git references or subdirectories, instead pip has a custom

stringly-typed format. We need to somehow support these while still staying

compatible with PEP 621.

## `tool.uv.sources`

Drawing inspiration from cargo, poetry and rye, we add `tool.uv.sources`

and (for now stub only) `tool.uv.workspace`:

```toml

[project]

name = "albatross"

version = "0.1.0"

dependencies = [

"tqdm >=4.66.2,<5",

"torch ==2.2.2",

"transformers[torch] >=4.39.3,<5",

"importlib_metadata >=7.1.0,<8; python_version < '3.10'",

"mollymawk ==0.1.0"

]

[tool.uv.sources]

tqdm = { git = "https://github.com/tqdm/tqdm", rev = "cc372d09dcd5a5eabdc6ed4cf365bdb0be004d44" }

importlib_metadata = { url = "https://github.com/python/importlib_metadata/archive/refs/tags/v7.1.0.zip" }

torch = { index = "torch-cu118" }

mollymawk = { workspace = true }

[tool.uv.workspace]

include = [

"packages/mollymawk"

]

[tool.uv.indexes]

torch-cu118 = "https://download.pytorch.org/whl/cu118"

```

See `docs/specifying_dependencies.md` for a detailed explanation of the

format. The basic gist is that `project.dependencies` is what ends up on

pypi, while `tool.uv.sources` are your non-published additions. We do

support the full range of PEP 508, we just hide it in the docs and

prefer the exploded table for easier readability and less confusion with

actual url parts.

This format should eventually be able to subsume requirements.txt's

current use cases. While we will continue to support the legacy `uv pip`

interface, this is a piece of uv's own top-level interface. Together

with `uv run` and a lockfile format, you should only need to write

`pyproject.toml` and do `uv run`, which generates/uses/updates your

lockfile behind the scenes, no more pip-style requirements involved. It

also lays the groundwork for implementing index pinning.

## Changes

This PR implements:

* Reading and lowering `project.dependencies`,

`project.optional-dependencies` and `tool.uv.sources` into a new

requirements format, including:

* Git dependencies

* Url dependencies

* Path dependencies, including relative and editable

* `pip install` integration

* Error reporting for invalid `tool.uv.sources`

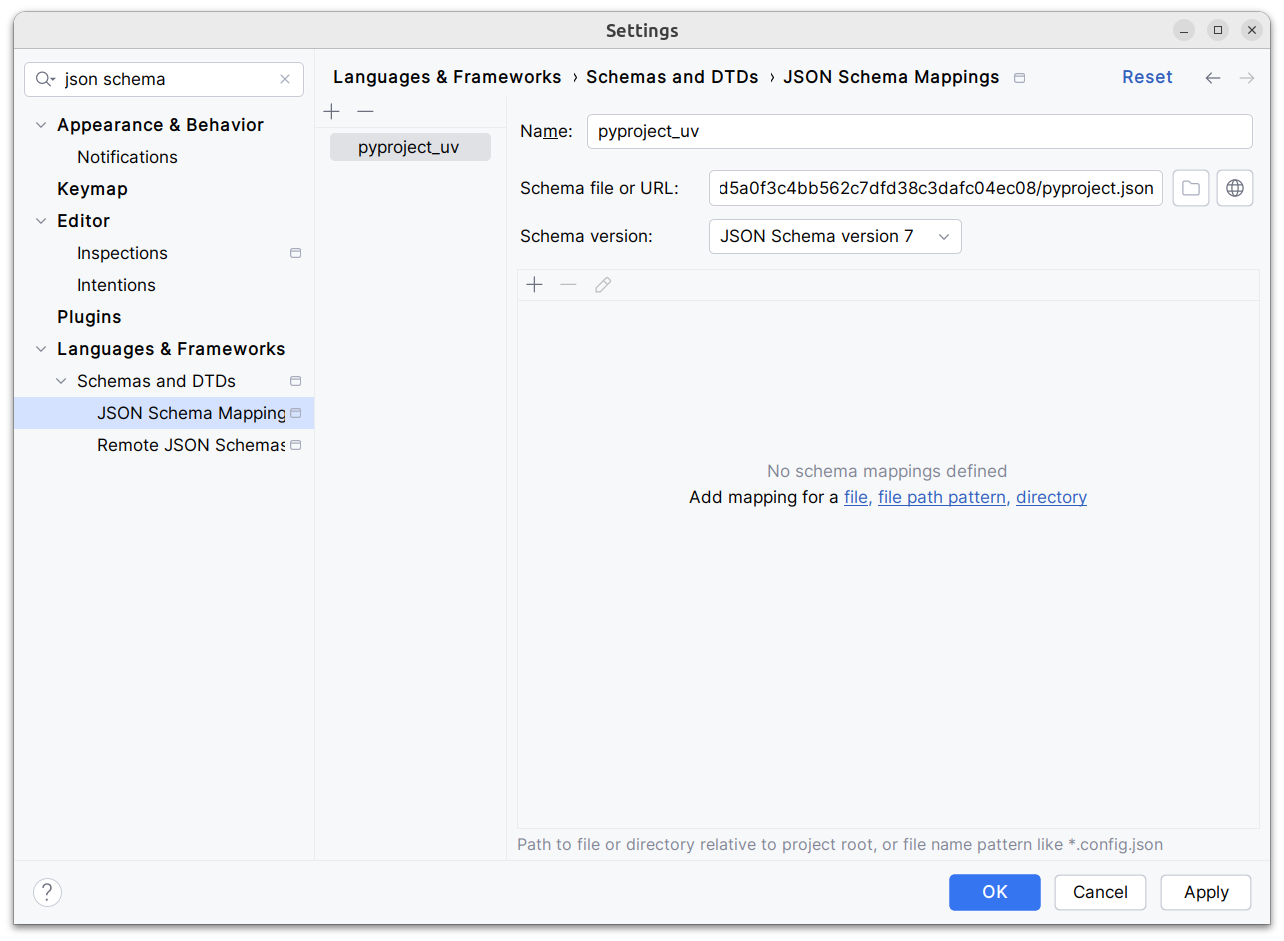

* Json schema integration (works in pycharm, see below)

* Draft user-level docs (see `docs/specifying_dependencies.md`)

It does not implement:

* `pip compile` testing (deprioritized in favor of our own lockfile)

* Index pinning (stub definitions only)

* Development dependencies

* Workspace support (stub definitions only)

* Overrides in pyproject.toml

* Patching/replacing dependencies

One technically breaking change is that we now require user provided

pyproject.toml to be valid with respect to PEP 621. Included files still fall

back to PEP 517. That means `pip install -r pyproject.toml` requires

it to be valid while `pip install -r requirements.txt` with `-e .` as

content falls back to PEP 517 as before.

## Implementation

The `pep508` requirement is replaced by a new `UvRequirement` (name up

for bikeshedding, not particularly attached to the uv prefix). The still

existing `pep508_rs::Requirement` type is a url format copied from pip's

requirements.txt and doesn't appropriately capture all features we

want/need to support. The bulk of the diff is changing the requirement

type throughout the codebase.

We still use `VerbatimUrl` in many places, where we would expect a

parsed/decomposed url type, specifically:

* Reading core metadata except top level pyproject.toml files, we fail a

step later instead if the url isn't supported.

* Allowed `Urls`.

* `PackageId` with a custom `CanonicalUrl` comparison, instead of

canonicalizing urls eagerly.

* `PubGrubPackage`: We eventually convert the `VerbatimUrl` back to a

`Dist` (`Dist::from_url`), instead of remembering the url.

* Source dist types: We use verbatim url even though we know and require

that these are supported urls we can and have parsed.

I tried to improve the situation by replacing `VerbatimUrl`, but

these changes would require massive invasive changes (see e.g.

https://github.com/astral-sh/uv/pull/3253). A main problem is the ref

`VersionOrUrl` and applying overrides, which assume the same

requirement/url type everywhere. In its current form, this PR increases

this tech debt.

I've tried to split off PRs and commits, but the main refactoring is

still a single monolith commit to make it compile and the tests pass.

## Demo

Adding

d1ae3b85d5/pyproject.json

as json schema (v7) to pycharm for `pyproject.toml`, you can try the IDE

support already:

[dove.webm](https://github.com/astral-sh/uv/assets/6826232/c293c272-c80b-459d-8c95-8c46a8d198a1)

The only thing a `OnceMap` really needs to be able to do with the value

is to clone it. All extant uses benefited from having this done for them

by automatically wrapping values in an `Arc`. But this isn't necessarily

true for all things. For example, a value might have an `Arc` internally

to make cloning cheap in other contexts, and it doesn't make sense to

re-wrap it in an `Arc` just to use it with a `OnceMap`. Or

alternatively, cloning might just be cheap enough on its own that an

`Arc` isn't worth it.
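
To illustrate the relaxed bound, here is a deliberately simplified, synchronous stand-in. The real `OnceMap` is async and lets waiters block on in-flight computations; the only point of this sketch is that the map asks nothing of `V` beyond `Clone`, so the caller decides whether an `Arc` is involved:

```rust
use std::collections::HashMap;
use std::hash::Hash;
use std::sync::Mutex;

/// Simplified stand-in for a once-map: values are handed out by cloning.
pub struct OnceMap<K, V> {
    items: Mutex<HashMap<K, V>>,
}

impl<K: Eq + Hash, V: Clone> OnceMap<K, V> {
    pub fn new() -> Self {
        Self {
            items: Mutex::new(HashMap::new()),
        }
    }

    /// Store the value for `key` unless one is already present, then return
    /// a clone of whichever value the map holds.
    pub fn get_or_insert_with(&self, key: K, init: impl FnOnce() -> V) -> V {
        let mut items = self.items.lock().expect("lock poisoned");
        items.entry(key).or_insert_with(init).clone()
    }

    /// Return a clone of the stored value, if any.
    pub fn get(&self, key: &K) -> Option<V> {
        self.items.lock().expect("lock poisoned").get(key).cloned()
    }
}
```

A caller with an expensive value can instantiate `OnceMap<K, Arc<T>>` and get the old behavior back; a caller whose value is already cheap to clone uses `OnceMap<K, T>` directly.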

## Summary

I found some of these too bare (e.g., when they _just_ show a package

name with no other information). For me, this makes it easier to

differentiate error message copy from data. But open to other opinions.

Take a look at the fixture changes and LMK!

Given requirements like:

```

black==23.1.0

black[colorama]

```

The resolver will (on `main`) add a dependency on Black, and then try to

use the most recent version of Black to satisfy `black[colorama]`. For

sake of example, assume `black==24.0.0` is the most recent version. Once

the resolver selects this most recent version, it'll fetch the metadata, then

return the dependencies for `black==24.0.0` with the `colorama` extra

enabled. Finally, it will tack on `black==24.0.0` (a dependency on the

base package). The resolver will then detect a conflict between

`black==23.1.0` and `black==24.0.0`, and throw out

`black[colorama]==24.0.0`, trying the next most-recent version.

This is both wasteful and can cause problems, since we're fetching

metadata for versions that will _never_ satisfy the resolver. In the

`apache-airflow[all]` case, I also ran into an issue whereby we were

attempting to build very old versions of `apache-airflow` due to

`apache-airflow[pandas]`, which in turn led to resolution failures.

The solution proposed here is that we create a new proxy package with

exactly two dependencies: one on `black` and one on `black[colorama]`.

Both of these packages must be at the same version as the proxy package,

so the resolver knows much _earlier_ that (in the above example) the

extra variant _must_ match `23.1.0`.
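
A sketch of the proxy idea with simplified, hypothetical types (versions are plain strings and the `Package` enum is not the real `PubGrubPackage`):

```rust
/// Simplified package identity as seen by the solver.
#[derive(Debug, Clone, PartialEq, Eq)]
pub enum Package {
    /// The base package, e.g. `black`.
    Base(String),
    /// The package with an extra enabled, e.g. `black[colorama]`.
    Extra(String, String),
    /// Proxy that ties the base package and the extra variant together.
    Proxy(String, String),
}

/// A dependency on `package` at exactly `version`.
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct Dependency {
    pub package: Package,
    pub version: String,
}

/// Dependencies of a proxy at `version`. No metadata fetch is needed: the
/// proxy always depends on the base package and on the extra variant, both
/// pinned to the proxy's own version. Returns `None` for non-proxy packages.
pub fn proxy_dependencies(package: &Package, version: &str) -> Option<Vec<Dependency>> {
    match package {
        Package::Proxy(name, extra) => Some(vec![
            Dependency {
                package: Package::Base(name.clone()),
                version: version.to_string(),
            },
            Dependency {
                package: Package::Extra(name.clone(), extra.clone()),
                version: version.to_string(),
            },
        ]),
        _ => None,
    }
}
```

Because the pin on the base package is visible as soon as a proxy version is chosen, a conflict with `black==23.1.0` surfaces before any metadata for the extra variant is requested.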

## Summary

This PR adds support for hash-checking mode in `pip install` and `pip

sync`. It's a large change, both in terms of the size of the diff and

the modifications in behavior, but it's also one that's hard to merge in

pieces (at least, with any test coverage) since it needs to work

end-to-end to be useful and testable.

Here are some of the most important highlights:

- We store hashes in the cache. Where we previously stored pointers to

unzipped wheels in the `archives` directory, we now store pointers with

a set of known hashes. So every pointer to an unzipped wheel also

includes its known hashes.

- By default, we don't compute any hashes. If the user runs with

`--require-hashes`, and the cache doesn't contain those hashes, we

invalidate the cache, redownload the wheel, and compute the hashes as we

go. For users that don't run with `--require-hashes`, there will be no

change in performance. For users that _do_, the only change will be if

they don't run with `--generate-hashes` -- then they may see some

repeated work between resolution and installation, if they use `pip

compile` then `pip sync`.

- Many of the distribution types now include a `hashes` field, like

`CachedDist` and `LocalWheel`.

- Our behavior is similar to pip, in that we enforce hashes when pulling

any remote distributions, and when pulling from our own cache. Like pip,

though, we _don't_ enforce hashes if a distribution is _already_

installed.

- Hash validity is enforced in a few different places:

1. During resolution, we enforce hash validity based on the hashes

reported by the registry. If we need to access a source distribution,

though, we then enforce hash validity at that point too, prior to

running any untrusted code. (This is enforced in the distribution

database.)

2. In the install plan, we _only_ add cached distributions that have

matching hashes. If a cached distribution is missing any hashes, or the

hashes don't match, we don't return them from the install plan.

3. In the downloader, we _only_ return distributions with matching

hashes.

4. The final combination of "things we install" are: (1) the wheels from

the cache, and (2) the downloaded wheels. So this ensures that we never

install any mismatching distributions.

- Like pip, if `--require-hashes` is provided, we require that _all_

distributions are pinned with either `==` or a direct URL. We also

require that _all_ distributions have hashes.
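
The up-front validation and the digest comparison can be sketched as follows. The `Requirement` struct, the string-based pin check, and the "any expected digest matches" rule are simplifying assumptions for illustration, not uv's actual types or policy code:

```rust
/// Simplified requirement entry (not uv's actual type).
#[derive(Debug, Clone, Default)]
pub struct Requirement {
    pub name: String,
    /// Version specifier such as `==1.2.3`, if any.
    pub specifier: Option<String>,
    /// Direct URL, if any.
    pub url: Option<String>,
    /// Digests listed for this requirement, e.g. `sha256:...`.
    pub hashes: Vec<String>,
}

#[derive(Debug, PartialEq, Eq)]
pub enum HashCheckError {
    Unpinned(String),
    MissingHashes(String),
}

/// A requirement counts as pinned if it has a direct URL or a single `==`
/// specifier without a wildcard.
pub fn is_pinned(requirement: &Requirement) -> bool {
    requirement.url.is_some()
        || requirement.specifier.as_deref().map_or(false, |s| {
            s.starts_with("==") && !s.contains(',') && !s.contains('*')
        })
}

/// Reject the request before resolving if any requirement is unpinned or
/// has no hashes.
pub fn validate(requirements: &[Requirement]) -> Result<(), HashCheckError> {
    for requirement in requirements {
        if !is_pinned(requirement) {
            return Err(HashCheckError::Unpinned(requirement.name.clone()));
        }
        if requirement.hashes.is_empty() {
            return Err(HashCheckError::MissingHashes(requirement.name.clone()));
        }
    }
    Ok(())
}

/// A distribution is accepted if at least one computed digest is among the
/// expected digests.
pub fn hashes_match(expected: &[String], computed: &[String]) -> bool {
    computed.iter().any(|digest| expected.contains(digest))
}
```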

There are a few notable TODOs:

- We don't support hash-checking mode for unnamed requirements. These

should be _somewhat_ rare, though? Since `pip compile` never outputs

unnamed requirements. I can fix this, it's just some additional work.

- We don't automatically enable `--require-hashes` when a hash exists in

the requirements file. We require `--require-hashes`.

Closes #474.

## Test Plan

I'd like to add some tests for registries that report incorrect hashes,

but otherwise: `cargo test`

## Summary

This partially revives https://github.com/astral-sh/uv/pull/2135 (with

some modifications) to enable users to opt-in to looking for packages

across multiple indexes.

The behavior is such that, in version selection, we take _any_

compatible version from a "higher-priority" index over the compatible

versions of a "lower-priority" index, even if that means we might accept

an "older" version.
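
In code, the selection rule amounts to something like the sketch below. Versions are modeled as `(major, minor, patch)` tuples and each index as a plain list, which is an assumption made purely to keep the example self-contained:

```rust
/// Pick a version under the rule described above. `indexes` is ordered from
/// highest to lowest priority. The first index that has any compatible
/// version wins, and within that index the newest compatible version is
/// chosen. Returns the position of the winning index and the version.
pub fn select_version(
    indexes: &[Vec<(u32, u32, u32)>],
    is_compatible: impl Fn(&(u32, u32, u32)) -> bool,
) -> Option<(usize, (u32, u32, u32))> {
    indexes.iter().enumerate().find_map(|(position, versions)| {
        versions
            .iter()
            .copied()
            .filter(|version| is_compatible(version))
            .max()
            .map(|version| (position, version))
    })
}
```

For example, with `1.0.0` and `1.1.0` on the first index and `1.2.0` on the second, a requirement that all three satisfy resolves to `1.1.0` from the first index, even though the second index has something newer.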

Closes https://github.com/astral-sh/uv/issues/2775.

Previously, we did not consider installed distributions as candidates

while performing resolution. Here, we update the resolver to use

installed distributions that satisfy requirements instead of pulling new

distributions from the registry.

The implementation details are as follows:

- We now provide `SitePackages` to the `CandidateSelector`

- If an installed distribution satisfies the requirement, we prefer it

over remote distributions

- We do not want to allow installed distributions in some cases, i.e.,

upgrade and reinstall

- We address this by introducing an `Exclusions` type which tracks

installed packages to ignore during selection

- There's a new `ResolvedDist` wrapper with `Installed(InstalledDist)`

and `Installable(Dist)` variants

- This lets us pass already installed distributions throughout the

resolver
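
A rough sketch of how these pieces fit together. Distributions are reduced to version strings and the `select` function and its signature are hypothetical; only the names `ResolvedDist` and `Exclusions` and the two variants come from the change itself:

```rust
use std::collections::HashSet;

/// Either something already in the environment or something we would have
/// to fetch (both simplified to a version string).
#[derive(Debug, Clone, PartialEq, Eq)]
pub enum ResolvedDist {
    Installed(String),
    Installable(String),
}

/// Names of installed packages to ignore during selection, e.g. when
/// upgrading or reinstalling.
#[derive(Debug, Default)]
pub struct Exclusions(HashSet<String>);

impl Exclusions {
    pub fn exclude(&mut self, name: &str) {
        self.0.insert(name.to_string());
    }

    pub fn contains(&self, name: &str) -> bool {
        self.0.contains(name)
    }
}

/// Prefer a satisfying installed version unless the package is excluded;
/// otherwise fall back to a satisfying remote version.
pub fn select(
    name: &str,
    installed: Option<&str>,
    remote: Option<&str>,
    satisfies: impl Fn(&str) -> bool,
    exclusions: &Exclusions,
) -> Option<ResolvedDist> {
    if !exclusions.contains(name) {
        if let Some(version) = installed.filter(|v| satisfies(*v)) {
            return Some(ResolvedDist::Installed(version.to_string()));
        }
    }
    remote
        .filter(|v| satisfies(*v))
        .map(|v| ResolvedDist::Installable(v.to_string()))
}
```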

The user-facing behavior is thoroughly covered in the tests, but

briefly:

- Installing a package that depends on an already-installed package

prefers the local version over the index

- Installing a package with a name that matches an already-installed URL

package does not reinstall from the index

- Reinstalling (--reinstall) a package by name _will_ pull from the

index even if an already-installed URL package is present

- To reinstall the URL package, you must specify the URL in the request

Closes https://github.com/astral-sh/uv/issues/1661

Addresses:

- https://github.com/astral-sh/uv/issues/1476

- https://github.com/astral-sh/uv/issues/1856

- https://github.com/astral-sh/uv/issues/2093

- https://github.com/astral-sh/uv/issues/2282

- https://github.com/astral-sh/uv/issues/2383

- https://github.com/astral-sh/uv/issues/2560

## Test plan

- [x] Reproduction at `charlesnicholson/uv-pep420-bug` passes

- [x] Unit test for editable package

([#1476](https://github.com/astral-sh/uv/issues/1476))

- [x] Unit test for previously installed package with empty registry

- [x] Unit test for local non-editable package

- [x] Unit test for new version available locally but not in registry

([#2093](https://github.com/astral-sh/uv/issues/2093))

- ~[ ] Unit test for wheel not available in registry but already

installed locally

([#2282](https://github.com/astral-sh/uv/issues/2282))~ (seems

complicated and not worthwhile)

- [x] Unit test for install from URL dependency then with matching

version ([#2383](https://github.com/astral-sh/uv/issues/2383))

- [x] Unit test for install of new package that depends on installed

package does not change version

([#2560](https://github.com/astral-sh/uv/issues/2560))

- [x] Unit test that `pip compile` does _not_ consider installed

packages

With https://github.com/pubgrub-rs/pubgrub/pull/190, pubgrub attaches

all types to a dependency provider to reduce the number of generics. We

need a dummy dependency provider now to emulate this. On the plus side,

pep440_rs drops its pubgrub dependency.

## Summary

This PR adds limited support for PEP 440-compatible local version

testing. Our behavior is _not_ comprehensively in-line with the spec.

However, it does fix by _far_ the biggest practical limitation, and

resolves all the issues that've been raised on uv related to local

versions without introducing much complexity into the resolver, so it

feels like a good tradeoff for me.

I'll summarize the change here, but for more context, see [Andrew's

write-up](https://github.com/astral-sh/uv/issues/1855#issuecomment-1967024866)

in the linked issue.

Local version identifiers are really tricky because of asymmetry.

`==1.2.3` should allow `1.2.3+foo`, but `==1.2.3+foo` should not allow

`1.2.3`. It's very hard to map them to PubGrub, because PubGrub doesn't

think of things in terms of individual specifiers (unlike the PEP 440

spec) -- it only thinks in terms of ranges.

Right now, resolving PyTorch and friends fails, because...

- The user provides requirements like `torch==2.0.0+cu118` and

`torchvision==0.15.1+cu118`.

- We then match those exact versions.

- We then look at the requirements of `torchvision==0.15.1+cu118`, which

includes `torch==2.0.0`.

- Under PEP 440, this is fine, because `torch @ 2.0.0+cu118` should be

compatible with `torch==2.0.0`.

- In our model, though, it's not, because these are different versions.

If we change our comparison logic in various places to allow this, we

risk breaking some fundamental assumptions of PubGrub around version

continuity.

- Thus, we fail to resolve, because we can't accept both `torch @ 2.0.0`

and `torch @ 2.0.0+cu118`.

As compared to the solutions we explored in

https://github.com/astral-sh/uv/issues/1855#issuecomment-1967024866, at

a high level, this approach differs in that we lie about the

_dependencies_ of packages that rely on our local-version-using package,

rather than lying about the versions that exist, or the version we're

returning, etc.

In short:

- When users specify local versions upfront, we keep track of them. So,

above, we'd take note of `torch` and `torchvision`.

- When we convert the dependencies of a package to PubGrub ranges, we

check if the requirement matches `torch` or `torchvision`. If it's

an `==`, we check whether it matches (in the above example)

`torch==2.0.0`. If so, we _change_ the requirement to

`torch==2.0.0+cu118`. (If it's `==` some other version, we return an

incompatibility.)

In other words, we selectively override the declared dependencies by

making them _more specific_ if a compatible local version was specified

upfront.
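The rewriting step could be sketched roughly as follows. This is an illustration under simplifying assumptions (string versions, no normalization), not the resolver's actual code; `narrow_requirement`, `Incompatible`, and the `local_versions` mapping are hypothetical names.

```python
class Incompatible(Exception):
    """Raised when a dependency pins a version that conflicts with the local version."""


def narrow_requirement(name, operator, version, local_versions):
    """Make an `==` requirement more specific if a local version was given upfront."""
    pinned = local_versions.get(name)
    if pinned is None or operator != "==":
        # Not a tracked package, or not an exact pin: leave the requirement alone.
        return (name, operator, version)
    public = pinned.partition("+")[0]
    if version in (pinned, public):
        # `torch==2.0.0` becomes `torch==2.0.0+cu118`.
        return (name, "==", pinned)
    raise Incompatible(f"{name}=={version} conflicts with {name}=={pinned}")


# Local versions the user specified upfront (from the example above).
local_versions = {"torch": "2.0.0+cu118", "torchvision": "0.15.1+cu118"}
print(narrow_requirement("torch", "==", "2.0.0", local_versions))
```

Only `==` requirements on tracked packages are touched; everything else passes through unchanged.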

The net effect here is that the motivating PyTorch resolutions all work.

And, in general, transitive local versions work as expected.

The thing that still _doesn't_ work is: imagine if there were _only_

local versions of `torch` available. Like, `torch @ 2.0.0` didn't exist,

but `torch @ 2.0.0+cpu` did, and `torch @ 2.0.0+gpu` did, and so on.

`pip install torch==2.0.0` would arbitrarily choose one of `2.0.0+cpu`

or `2.0.0+gpu`, and that's correct as per PEP 440 (local version

segments should be completely ignored on `torch==2.0.0`). However, uv

would fail to identify a compatible version. I'd _probably_ prefer to

fix this, although candidly I think our behavior is _ok_ in practice,

and it's never been reported as an issue.

Closes https://github.com/astral-sh/uv/issues/1855.

Closes https://github.com/astral-sh/uv/issues/2080.

Closes https://github.com/astral-sh/uv/issues/2328.

## Summary

Per [PEP 508](https://peps.python.org/pep-0508/), `python_version` is

just major and minor (e.g., `3.11`, not `3.11.8`).

Right now, we're using the provided version directly, so if it's, e.g.,

`-p 3.11.8`, we'll inject the wrong marker. This was causing `pandas` to

omit `numpy` when `-p 3.11.8` was provided, since its markers look like:

```text

Requires-Dist: numpy<2,>=1.22.4; python_version < "3.11"

Requires-Dist: numpy<2,>=1.23.2; python_version == "3.11"

Requires-Dist: numpy<2,>=1.26.0; python_version >= "3.12"

```
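A small sketch of why the full version falls through all three markers. It uses a simplified, zero-padded tuple comparison as a stand-in for PEP 440 semantics; `compare`, `matching_markers`, and `marker_python_version` are hypothetical helpers, not uv functions.

```python
def parse(version):
    """Parse '3.11.8' into (3, 11, 8)."""
    return tuple(int(part) for part in version.split("."))


def compare(lhs, operator, rhs):
    """Compare two release versions, zero-padding the shorter one."""
    a, b = parse(lhs), parse(rhs)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return {"<": a < b, "==": a == b, ">=": a >= b}[operator]


# The three `python_version` markers from the `pandas` metadata above.
MARKERS = [("<", "3.11"), ("==", "3.11"), (">=", "3.12")]


def matching_markers(python_version):
    return [(op, v) for op, v in MARKERS if compare(python_version, op, v)]


def marker_python_version(full_version):
    """Truncate a full version to major.minor, as PEP 508 defines `python_version`."""
    return ".".join(full_version.split(".")[:2])


print(matching_markers("3.11.8"))                         # full version injected
print(matching_markers(marker_python_version("3.11.8")))  # truncated to major.minor
```

With the full version, none of the markers apply, so `numpy` is dropped; after truncation, the `== "3.11"` marker applies.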

Closes https://github.com/astral-sh/uv/issues/2392.

## Summary

This is a more robust fix for

https://github.com/astral-sh/uv/issues/2300.

The basic issue is:

- When we resolve, we attempt to pre-fetch the distribution metadata for

candidate packages.

- It's possible that the resolution completes _without_ those pre-fetch

responses. (In the linked issue, this was mainly because we were running

with `--no-deps`, but the pre-fetch was causing us to attempt to build a

package to get its dependencies. The resolution would then finish before

the build completed.)

- In that case, the `Index` will be marked as "waiting" for that

response -- but it'll never come through.

- If there's a subsequent call to the `Index`, to see if we should fetch

or are waiting for that response, we'll end up waiting for it forever,

since it _looks_ like it's in-flight (but isn't). (In the linked issue,

we had to build the source distribution for the install phase of `pip

install`, but `setuptools` was in this bad state from the _resolve_

phase.)

This PR modifies the resolver to ensure that we flush the stream of

requests before returning. Specifically, we now `join` rather than

`select` between the resolution and request-handling futures.

This _could_ be wasteful, since we don't _need_ those requests, but it

at least ensures that every `.wait` is followed by `.done`. In

practice, I expect this not to have any significant effect on

performance, since we end up using the pre-fetched distributions almost

every time.
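The difference between `select` and `join` can be illustrated with a toy model. This sketch uses Python's `asyncio` rather than the Rust futures the resolver uses, and the `index` dictionary, `resolver`, and `handler` are hypothetical stand-ins for the real `Index` and request stream.

```python
import asyncio


async def run(join):
    index = {}               # stand-in for the in-flight `Index`
    queue = asyncio.Queue()  # stand-in for the stream of pre-fetch requests

    async def resolver():
        index["setuptools"] = "waiting"  # mark the pre-fetch as in-flight
        await queue.put("setuptools")
        await queue.put(None)            # close the request stream
        # The resolution finishes here without needing the response.

    async def handler():
        while (name := await queue.get()) is not None:
            await asyncio.sleep(0.05)    # simulate a slow fetch or build
            index[name] = "done"

    if join:
        # "join": wait for both futures, so every request is flushed.
        await asyncio.gather(resolver(), handler())
    else:
        # "select": stop as soon as either future completes.
        tasks = [asyncio.ensure_future(resolver()), asyncio.ensure_future(handler())]
        _, pending = await asyncio.wait(tasks, return_when=asyncio.FIRST_COMPLETED)
        for task in pending:
            task.cancel()
        await asyncio.gather(*pending, return_exceptions=True)
    return index


print(asyncio.run(run(join=False)))  # entry is left marked as "waiting"
print(asyncio.run(run(join=True)))   # entry reaches "done"
```

In the `select` variant, the entry stays in the "waiting" state after the resolver returns, which is the state a later caller would block on.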

## Test Plan

I ran through the test plan from

https://github.com/astral-sh/uv/pull/2373, but ran the build 10 times

and ensured it never crashed. (I reverted

https://github.com/astral-sh/uv/pull/2373, since that _also_ fixes the

issue in the proximate case, by never fetching `setuptools` during the

resolve phase.)

I also added logging to verify that requests are being handled _after_

the resolution completes, as expected.

I also introduced an arbitrary error in `fetch` to ensure that the error

was immediately propagated.

Address a few pedantic lints

The lints are split into separate commits so they can be reviewed

individually.

I've not added enforcement for any of these lints, but that could be

added if desirable.

## Summary

This revives a PR from long ago

(https://github.com/astral-sh/uv/pull/383 and

https://github.com/zanieb/pubgrub/pull/24) that modifies how we deal

with dependencies that are declared multiple times within a single

package.

To quote from the originating PR:

> Uses an experimental pubgrub branch (#370) that allows us to handle

multiple version ranges for a single dependency to the solver which

results in better error messages because the derivation tree contains

all of the relevant versions. Previously, the version ranges were merged

(by us) in the resolver before handing them to pubgrub since only one

range could be provided per package. Since we don't merge the versions

anymore, we no longer give the solver an empty range for conflicting

requirements; instead the solver comes to that conclusion from the

provided versions. You can see the improved error message for direct

dependencies in [this

snapshot](https://github.com/astral-sh/puffin/pull/383/files#diff-a0437f2c20cde5e2f15199a3bf81a102b92580063268417847ec9c793a115bd0).
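As a rough illustration of what is lost by merging up front, consider a package that declares two conflicting ranges for the same dependency. The sketch below is a toy model with half-open `(low, high)` ranges over version tuples; `intersect`, `merged_up_front`, and `passed_through` are hypothetical names and do not reflect PubGrub's API.

```python
def intersect(a, b):
    """Intersect two half-open ranges (low, high); None means unbounded."""
    lows = [x for x in (a[0], b[0]) if x is not None]
    highs = [x for x in (a[1], b[1]) if x is not None]
    low = max(lows) if lows else None
    high = min(highs) if highs else None
    if low is not None and high is not None and low >= high:
        return None  # empty range
    return (low, high)


# One package declaring `flask>=2.0` and `flask<1.0` (a hypothetical conflict).
declared = [("flask", ((2, 0), None)), ("flask", (None, (1, 0)))]


def merged_up_front(deps):
    """Old approach: collapse to one range per package before calling the solver."""
    merged = {}
    for name, rng in deps:
        if name not in merged:
            merged[name] = rng
        elif merged[name] is not None:
            merged[name] = intersect(merged[name], rng)
    return merged


def passed_through(deps):
    """New approach: hand every declared range to the solver as-is."""
    return list(deps)


print(merged_up_front(declared))  # the solver only sees an empty range
print(passed_through(declared))   # the solver sees both original ranges
```

With the merged form, the solver can only report an empty range; with the pass-through form, both original ranges are available for the derivation tree.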

The main issue with that PR was around its handling of URL dependencies,

so this PR _also_ refactors how we handle those. Previously, we stored

URL dependencies on `PubGrubPackage`, but they were omitted from the

hash and equality implementations of `PubGrubPackage`. This led to some

really careful codepaths wherein we had to ensure that we always visited

URLs before non-URL packages, so that the URL-inclusive versions were

included in any hashmaps, etc. I considered preserving this approach,

but it would require us to rely on lots of internal details of PubGrub

(since we'd now be relying on PubGrub to merge those packages in the

"right" order).

So, instead, we now _always_ set the URL on a given package, whenever

that package was _given_ a URL upfront. I think this is easier to reason

about: if the user provided a URL for `flask`, then we should just

always add the URL for `flask`. If we see some other URL for `flask`, we

error, like before. If we see some unknown URL for `flask`, we error,

like before.
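The rule described above could be sketched like this. It is an illustration only; `resolve_url`, `UrlError`, the `allowed_urls` mapping, and the example URLs are hypothetical and not taken from the uv codebase.

```python
class UrlError(Exception):
    """Raised for a conflicting or unknown URL."""


def resolve_url(name, requested_url, allowed_urls):
    """Return the URL to attach to `name`, or None for a registry package."""
    allowed = allowed_urls.get(name)
    if allowed is None:
        if requested_url is not None:
            # No URL was given upfront for this package.
            raise UrlError(f"unknown URL for {name}: {requested_url}")
        return None
    if requested_url is not None and requested_url != allowed:
        raise UrlError(f"conflicting URLs for {name}: {allowed} vs. {requested_url}")
    # Always attach the upfront URL, even if this requirement had none.
    return allowed


# Hypothetical URL provided by the user upfront.
allowed_urls = {"flask": "https://example.com/flask-3.0.0-py3-none-any.whl"}
print(resolve_url("flask", None, allowed_urls))
```

Because the URL is attached whenever it was given upfront, the result no longer depends on the order in which URL and non-URL requirements are visited.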

Closes https://github.com/astral-sh/uv/issues/1522.

Closes https://github.com/astral-sh/uv/issues/1821.

Closes https://github.com/astral-sh/uv/issues/1615.

First, replace all usages in files in-place. I used my editor for this.

If someone wants to add a one-liner that'd be fun.
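In lieu of a shell one-liner, the in-place replacement could be scripted along these lines. This is a sketch, not what was actually run: `replace_in_tree` is a hypothetical helper, it only handles the lowercase spelling, and it skips `.git` and files that are not valid UTF-8.

```python
from pathlib import Path


def replace_in_tree(root, old="puffin", new="uv"):
    """Replace `old` with `new` in every UTF-8 text file under `root`."""
    changed = []
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file() or ".git" in path.parts:
            continue
        try:
            # newline="" preserves the file's original line endings.
            with path.open("r", encoding="utf-8", newline="") as f:
                text = f.read()
        except UnicodeDecodeError:
            continue  # skip binary files
        if old in text:
            with path.open("w", encoding="utf-8", newline="") as f:
                f.write(text.replace(old, new))
            changed.append(path)
    return changed
```

Calling `replace_in_tree(".")` from the repository root would rewrite file contents only; directory and file names are left unchanged.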

Then, update directory and file names:

```shell

# Run twice for nested directories

find . -type d -print0 | xargs -0 rename s/puffin/uv/g

find . -type d -print0 | xargs -0 rename s/puffin/uv/g

# Update files

find . -type f -print0 | xargs -0 rename s/puffin/uv/g

```

Then add all the files again:

```shell

# Add all the files again

git add crates

git add python/uv

# This one needs a force-add

git add -f crates/uv-trampoline

```