mirror of

https://github.com/astral-sh/uv

synced 2026-01-23 14:30:14 -05:00

## Introduction

PEP 621 is limited. Specifically, it lacks:

* Relative path support
* Editable support
* Workspace support
* Index pinning or any sort of index specification

The semantics of URLs are a custom extension: PEP 440 does not specify how to use git references or subdirectories; instead, pip has a custom stringly format. We need to support these while still staying compatible with PEP 621.
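To illustrate what "stringly" means here: pip packs the VCS, the repository, the revision and the subdirectory into one string such as `git+https://host/repo@<rev>#subdirectory=<dir>`. The sketch below is a hypothetical, simplified parser (not pip's or uv's code). It shows how much has to be recovered from that single string. It only handles the `<vcs>+<url>[@rev][#subdirectory=...]` shape and ignores `egg=` fragments and other edge cases.

```rust
/// The pieces pip packs into a single VCS requirement URL (simplified sketch).
#[derive(Debug, PartialEq)]
pub struct PipVcsUrl {
    pub vcs: String,
    pub repository: String,
    pub rev: Option<String>,
    pub subdirectory: Option<String>,
}

/// Hypothetical helper: parse `<vcs>+<url>[@rev][#subdirectory=<dir>]`.
pub fn parse_pip_vcs_url(input: &str) -> Option<PipVcsUrl> {
    // The fragment may carry `subdirectory=...` (possibly among other pairs).
    let (without_fragment, fragment) = match input.split_once('#') {
        Some((head, frag)) => (head, Some(frag)),
        None => (input, None),
    };
    let subdirectory = fragment.and_then(|frag| {
        frag.split('&')
            .find_map(|pair| pair.strip_prefix("subdirectory="))
            .map(str::to_string)
    });

    // The scheme prefix names the VCS, e.g. `git+https://...`.
    let (vcs, url) = without_fragment.split_once('+')?;

    // Only look for `@rev` in the path, so that `ssh://git@host/...` keeps
    // its user part: skip `scheme://` and the authority first.
    let authority_start = url.find("://")? + 3;
    let path_start = authority_start + url[authority_start..].find('/')?;
    let (repository, rev) = match url[path_start..].rfind('@') {
        Some(at) => {
            let at = path_start + at;
            (url[..at].to_string(), Some(url[at + 1..].to_string()))
        }
        None => (url.to_string(), None),
    };

    Some(PipVcsUrl {
        vcs: vcs.to_string(),
        repository,
        rev,
        subdirectory,
    })
}
```

An exploded table stores each of these fields under its own key, so none of this splitting is needed.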

## `tool.uv.sources`

Drawing inspiration from cargo, poetry and rye, we add `tool.uv.sources` and (for now stub only) `tool.uv.workspace`:

```toml
[project]
name = "albatross"
version = "0.1.0"
dependencies = [
  "tqdm >=4.66.2,<5",
  "torch ==2.2.2",
  "transformers[torch] >=4.39.3,<5",
  "importlib_metadata >=7.1.0,<8; python_version < '3.10'",
  "mollymawk ==0.1.0"
]

[tool.uv.sources]
tqdm = { git = "https://github.com/tqdm/tqdm", rev = "cc372d09dcd5a5eabdc6ed4cf365bdb0be004d44" }
importlib_metadata = { url = "https://github.com/python/importlib_metadata/archive/refs/tags/v7.1.0.zip" }
torch = { index = "torch-cu118" }
mollymawk = { workspace = true }

[tool.uv.workspace]
include = [
  "packages/mollymawk"
]

[tool.uv.indexes]
torch-cu118 = "https://download.pytorch.org/whl/cu118"
```

See `docs/specifying_dependencies.md` for a detailed explanation of the format. The basic gist is that `project.dependencies` is what ends up on PyPI, while `tool.uv.sources` holds your non-published additions. We do support the full range of PEP 508, but we don't advertise it in the docs and prefer the exploded table: it is easier to read and less easily confused with the actual URL parts.
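The exploded table carries the same information as a PEP 508 direct reference, so most entries can be rendered back into one. The sketch below uses a hypothetical `Source` enum that mirrors the table entries from the example (`git`/`rev`, `url`, `index`, `workspace`). The `path` and `subdirectory` keys are assumed names, and neither the type nor the rendering is uv's implementation. Index and workspace sources, and relative paths, have no PEP 508 spelling, which is part of why the table exists.

```rust
/// Hypothetical model of one `tool.uv.sources` entry (illustrative only;
/// `subdirectory` and `path` are assumed key names).
pub enum Source {
    Git { git: String, rev: Option<String>, subdirectory: Option<String> },
    Url { url: String },
    Path { path: String },
    Index { index: String },
    Workspace,
}

/// Render a dependency name plus its source as a PEP 508 direct reference,
/// or `None` when the source has no PEP 508 spelling at all.
pub fn to_pep508(name: &str, source: &Source) -> Option<String> {
    let location = match source {
        Source::Git { git, rev, subdirectory } => {
            // pip's stringly VCS format: `git+<repo>[@<rev>][#subdirectory=<dir>]`.
            let mut url = format!("git+{git}");
            if let Some(rev) = rev {
                url.push('@');
                url.push_str(rev);
            }
            if let Some(subdirectory) = subdirectory {
                url.push_str("#subdirectory=");
                url.push_str(subdirectory);
            }
            url
        }
        Source::Url { url } => url.clone(),
        // PEP 508 only accepts URLs, so a path needs a `file://` URL; this
        // sketch assumes the path is already absolute.
        Source::Path { path } => format!("file://{path}"),
        // Index and workspace sources cannot be expressed in PEP 508.
        Source::Index { .. } | Source::Workspace => return None,
    };
    Some(format!("{name} @ {location}"))
}
```

For example, the `tqdm` entry above renders to `tqdm @ git+https://github.com/tqdm/tqdm@<rev>`, while `torch = { index = "torch-cu118" }` has no equivalent.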

This format should eventually be able to subsume the current use cases of requirements.txt. While we will continue to support the legacy `uv pip` interface, this is a piece of uv's own top-level interface. Together with `uv run` and a lockfile format, you should only need to write `pyproject.toml` and run `uv run`, which generates, uses and updates your lockfile behind the scenes, with no pip-style requirements involved. It also lays the groundwork for implementing index pinning.

## Changes

This PR implements:

* Reading and lowering `project.dependencies`,

`project.optional-dependencies` and `tool.uv.sources` into a new

requirements format, including:

* Git dependencies

* Url dependencies

* Path dependencies, including relative and editable

* `pip install` integration

* Error reporting for invalid `tool.uv.sources`

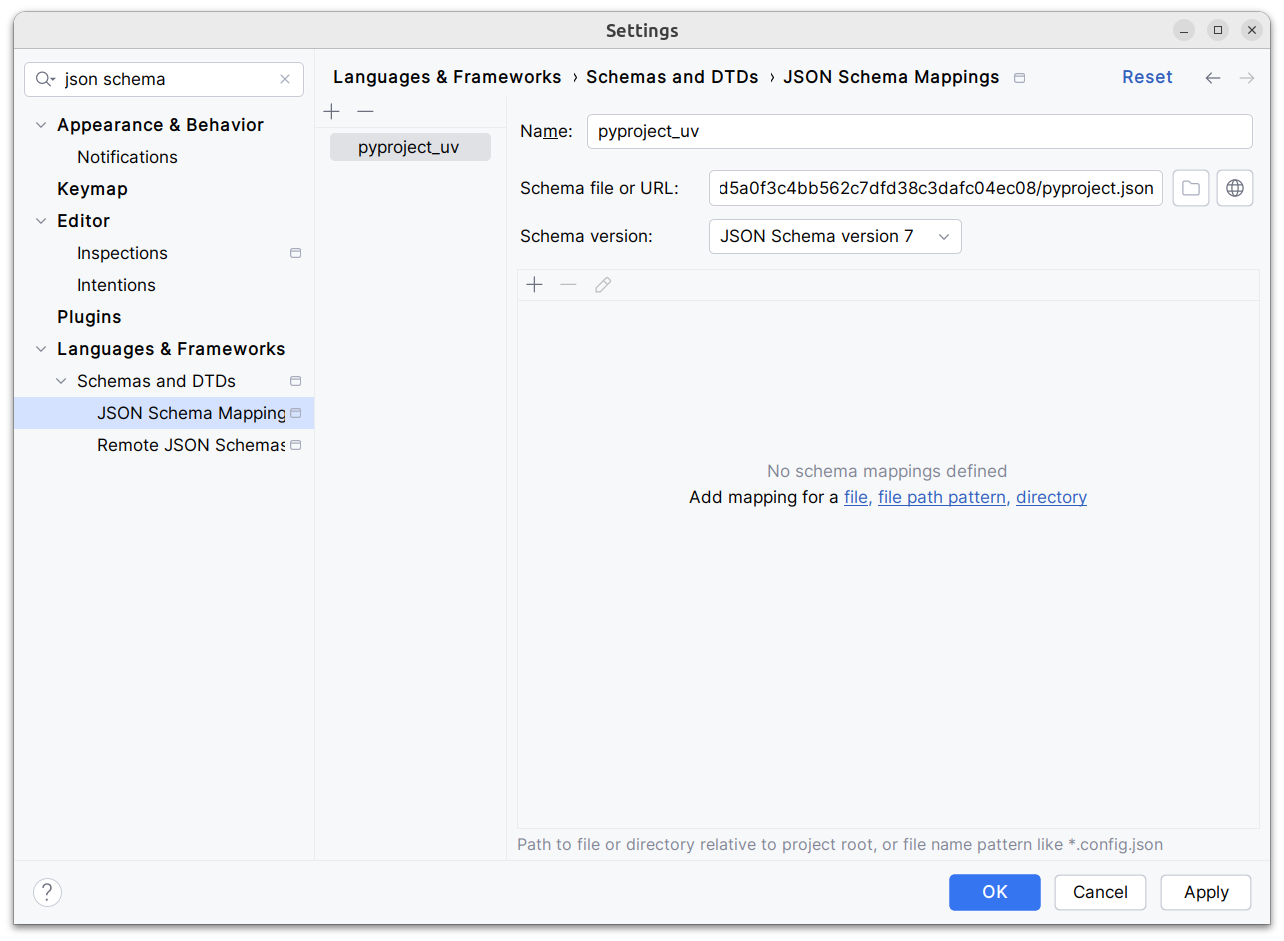

* Json schema integration (works in pycharm, see below)

* Draft user-level docs (see `docs/specifying_dependencies.md`)
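Lowering can be pictured as a join. Each `project.dependencies` entry is looked up by name in `tool.uv.sources`. If a source exists, it supplies the location; otherwise the entry stays a plain registry requirement.

The sketch below is a hypothetical simplification with these limits:
* It uses string-typed names, with no extras, markers or name normalization.
* It omits index and workspace sources.
* It simply drops the version specifier when a source is given; how uv reconciles the two is not shown here.

It also includes one example of the kind of validation error reported for an invalid source, a `rev` without `git`. The real error cases in uv may differ.

```rust
use std::collections::HashMap;

/// A `tool.uv.sources` entry as it might look right after TOML
/// deserialization, with every key optional (hypothetical shape).
#[derive(Default)]
pub struct RawSource {
    pub git: Option<String>,
    pub rev: Option<String>,
    pub url: Option<String>,
    pub path: Option<String>,
    pub editable: Option<bool>,
}

/// The lowered location of a requirement.
#[derive(Debug, PartialEq)]
pub enum Location {
    /// No source entry: resolve the version specifier against the registry.
    Registry { specifier: String },
    Git { repository: String, rev: Option<String> },
    Url(String),
    Path { path: String, editable: bool },
}

/// Lower one `project.dependencies` entry (already split into name and
/// version specifier) using its optional `tool.uv.sources` entry.
pub fn lower(
    name: &str,
    specifier: &str,
    sources: &HashMap<String, RawSource>,
) -> Result<Location, String> {
    let Some(source) = sources.get(name) else {
        // Not listed in `tool.uv.sources`: a plain registry requirement.
        return Ok(Location::Registry { specifier: specifier.to_string() });
    };
    // Example validation: a revision only makes sense for git sources.
    if source.rev.is_some() && source.git.is_none() {
        return Err(format!("`{name}`: `rev` is only allowed together with `git`"));
    }
    // Exactly one location key must be set.
    match (&source.git, &source.url, &source.path) {
        (Some(git), None, None) => Ok(Location::Git {
            repository: git.clone(),
            rev: source.rev.clone(),
        }),
        (None, Some(url), None) => Ok(Location::Url(url.clone())),
        (None, None, Some(path)) => Ok(Location::Path {
            path: path.clone(),
            editable: source.editable.unwrap_or(false),
        }),
        _ => Err(format!("`{name}`: expected exactly one of `git`, `url` or `path`")),
    }
}
```

Returning an error with the dependency name, rather than silently ignoring the entry, is what makes mistakes in the table visible to the user.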

It does not implement:

* `pip compile` testing (deprioritized in favor of our own lockfile)
* Index pinning (stub definitions only)
* Development dependencies
* Workspace support (stub definitions only)
* Overrides in pyproject.toml
* Patching/replacing dependencies

One technically breaking change is that we now require a user-provided pyproject.toml to be valid with respect to PEP 621. Included files still fall back to PEP 517. That means `pip install -r pyproject.toml` requires the file to be valid, while `pip install -r requirements.txt` with `-e .` as its content falls back to PEP 517 as before.
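The rule can be sketched as a small decision, under a simplified model. A `pyproject.toml` passed directly by the user is parsed strictly, and an invalid `[project]` table is an error. A project reached indirectly, for example through an `-e .` line in a requirements file, may still fall back to a PEP 517 build for its metadata. All type and function names here are hypothetical. The sketch also assumes that a valid `[project]` table is read statically in both cases, which the text does not state.

```rust
/// How the `pyproject.toml` was reached (hypothetical classification).
#[derive(Clone, Copy)]
pub enum Origin {
    /// Passed directly by the user.
    UserProvided,
    /// Reached through another input, e.g. `-e .` inside a requirements file.
    Included,
}

#[derive(Debug, PartialEq)]
pub enum MetadataStrategy {
    /// Read dependencies statically from the `[project]` table.
    Pep621,
    /// Ask the build backend for metadata via PEP 517 hooks.
    Pep517Build,
}

/// `pep621_error` is `Some(message)` when the `[project]` table failed validation.
pub fn choose_strategy(
    origin: Origin,
    pep621_error: Option<&str>,
) -> Result<MetadataStrategy, String> {
    match (origin, pep621_error) {
        (_, None) => Ok(MetadataStrategy::Pep621),
        // User-provided files must be valid PEP 621: surface the error.
        (Origin::UserProvided, Some(err)) => Err(format!("invalid `pyproject.toml`: {err}")),
        // Included projects keep the old behavior and fall back to PEP 517.
        (Origin::Included, Some(_)) => Ok(MetadataStrategy::Pep517Build),
    }
}
```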

## Implementation

The `pep508` requirement is replaced by a new `UvRequirement` (name up for bikeshedding; I'm not particularly attached to the uv prefix). The still-existing `pep508_rs::Requirement` type uses a URL format copied from pip's requirements.txt and doesn't adequately capture all the features we want/need to support. The bulk of the diff is changing the requirement type throughout the codebase.
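A sketch of why converting from the old type can fail. A pep508-style requirement carries either a version specifier or one opaque URL string, and turning that string into a decomposed source is not always possible. All names below (`VersionOrUrl`, `RequirementSource`, `from_pep508`) are hypothetical stand-ins, not the real definitions in `pep508_rs` or uv. Only `git+`, `file://` and `http(s)://` URLs are recognized.

```rust
/// pep508-style: either a version specifier or a single opaque URL.
pub enum VersionOrUrl {
    VersionSpecifier(String),
    Url(String),
}

/// Decomposed source, as a `UvRequirement`-like type might store it.
#[derive(Debug, PartialEq)]
pub enum RequirementSource {
    Registry { specifier: String },
    Git { url: String },
    Path { path: String },
    Url { url: String },
}

/// Convert the stringly form into the decomposed one. This is fallible:
/// a URL that is valid PEP 508 may still name a source we cannot handle.
pub fn from_pep508(version_or_url: Option<VersionOrUrl>) -> Result<RequirementSource, String> {
    match version_or_url {
        None => Ok(RequirementSource::Registry { specifier: String::new() }),
        Some(VersionOrUrl::VersionSpecifier(specifier)) => {
            Ok(RequirementSource::Registry { specifier })
        }
        Some(VersionOrUrl::Url(url)) => {
            if let Some(rest) = url.strip_prefix("git+") {
                Ok(RequirementSource::Git { url: rest.to_string() })
            } else if let Some(path) = url.strip_prefix("file://") {
                Ok(RequirementSource::Path { path: path.to_string() })
            } else if url.starts_with("https://") || url.starts_with("http://") {
                Ok(RequirementSource::Url { url })
            } else {
                Err(format!("unsupported URL: {url}"))
            }
        }
    }
}
```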

We still use `VerbatimUrl` in many places where we would expect a parsed/decomposed URL type, specifically:

* Reading core metadata (except for top-level pyproject.toml files): we instead fail one step later if the URL isn't supported.
* Allowed `Urls`.
* `PackageId`, which uses a custom `CanonicalUrl` comparison instead of canonicalizing URLs eagerly.
* `PubGrubPackage`: we eventually convert the `VerbatimUrl` back to a `Dist` (`Dist::from_url`) instead of remembering the URL.
* Source dist types: we use verbatim URLs even though we know, and require, that these are supported URLs that we can parse and have already parsed.
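The `PackageId` point is about comparing URLs that are spelled differently but name the same source, without rewriting the URL the user gave. A minimal sketch of such a lazy comparison: the original string is kept, and a normalized key is computed only when comparing or hashing.

The normalization rules are illustrative assumptions, not `CanonicalUrl`'s exact behavior:
* case-insensitive scheme and host
* an optional trailing `/`
* an optional `.git` suffix

`ComparableUrl` is a hypothetical name.

```rust
use std::hash::{Hash, Hasher};

/// A URL kept exactly as the user wrote it. Equality and hashing go through a
/// normalized key computed on demand instead of rewriting the URL up front.
/// The normalization rules here are illustrative assumptions.
#[derive(Debug, Clone)]
pub struct ComparableUrl {
    pub given: String,
}

impl ComparableUrl {
    fn canonical_key(&self) -> String {
        // Ignore a trailing slash and a trailing `.git` suffix.
        let url = self.given.trim_end_matches('/');
        let url = url.strip_suffix(".git").unwrap_or(url);
        match url.split_once("://") {
            Some((scheme, rest)) => {
                // Scheme and host are case-insensitive; the path may not be.
                let (host, path) = rest.split_once('/').unwrap_or((rest, ""));
                format!(
                    "{}://{}/{}",
                    scheme.to_ascii_lowercase(),
                    host.to_ascii_lowercase(),
                    path
                )
            }
            None => url.to_string(),
        }
    }
}

impl PartialEq for ComparableUrl {
    fn eq(&self, other: &Self) -> bool {
        self.canonical_key() == other.canonical_key()
    }
}

impl Eq for ComparableUrl {}

// Hash the same key used by `eq`, so equal URLs land in the same bucket.
impl Hash for ComparableUrl {
    fn hash<H: Hasher>(&self, state: &mut H) {
        self.canonical_key().hash(state);
    }
}
```

Keeping `Hash` consistent with `Eq` matters because IDs like this are typically used as map keys.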

I tried to improve the situation by replacing `VerbatimUrl`, but that would require massive, invasive changes (see e.g. https://github.com/astral-sh/uv/pull/3253). A main problem is the `VersionOrUrl` type and applying overrides, both of which assume the same requirement/URL type everywhere. In its current form, this PR increases this tech debt.

I've tried to split off PRs and commits, but the main refactoring is still a single monolithic commit, needed to make it compile and the tests pass.

## Demo

By adding d1ae3b85d5/pyproject.json as a JSON schema (v7) for `pyproject.toml` in PyCharm, you can already try out the IDE support:

[dove.webm](https://github.com/astral-sh/uv/assets/6826232/c293c272-c80b-459d-8c95-8c46a8d198a1)

212 lines

7.6 KiB

Rust


```rust
use std::path::PathBuf;
use std::str::FromStr;

use anyhow::Result;
use clap::Parser;
use futures::StreamExt;
use indicatif::ProgressStyle;
use itertools::Itertools;
use tokio::time::Instant;
use tracing::{info, info_span, Span};
use tracing_indicatif::span_ext::IndicatifSpanExt;

use distribution_types::{IndexLocations, Requirement};
use pep440_rs::{Version, VersionSpecifier, VersionSpecifiers};
use pep508_rs::VersionOrUrl;
use uv_cache::{Cache, CacheArgs};
use uv_client::{OwnedArchive, RegistryClient, RegistryClientBuilder};
use uv_configuration::{ConfigSettings, NoBinary, NoBuild, SetupPyStrategy};
use uv_dispatch::BuildDispatch;
use uv_interpreter::PythonEnvironment;
use uv_normalize::PackageName;
use uv_resolver::{FlatIndex, InMemoryIndex};
use uv_types::{BuildContext, BuildIsolation, InFlight};

#[derive(Parser)]
pub(crate) struct ResolveManyArgs {
    /// Path to a file containing one requirement per line.
    requirements: PathBuf,
    #[clap(long)]
    limit: Option<usize>,
    /// Don't build source distributions. This means resolving will not run arbitrary code. The
    /// cached wheels of already built source distributions will be reused.
    #[clap(long)]
    no_build: bool,
    /// Run this many tasks in parallel.
    #[clap(long, default_value = "50")]
    num_tasks: usize,
    /// Force the latest version when no version is given.
    #[clap(long)]
    latest_version: bool,
    #[command(flatten)]
    cache_args: CacheArgs,
}

/// Try to find the latest version of a package, ignoring error because we report them during resolution properly
async fn find_latest_version(
    client: &RegistryClient,
    package_name: &PackageName,
) -> Option<Version> {
    client
        .simple(package_name)
        .await
        .ok()
        .into_iter()
        .flatten()
        .filter_map(|(_index, raw_simple_metadata)| {
            let simple_metadata = OwnedArchive::deserialize(&raw_simple_metadata);
            Some(simple_metadata.into_iter().next()?.version)
        })
        .max()
}

pub(crate) async fn resolve_many(args: ResolveManyArgs) -> Result<()> {
    let cache = Cache::try_from(args.cache_args)?;

    let data = fs_err::read_to_string(&args.requirements)?;

    let tf_models_nightly = PackageName::from_str("tf-models-nightly").unwrap();
    let lines = data
        .lines()
        .map(pep508_rs::Requirement::from_str)
        .filter_ok(|req| req.name != tf_models_nightly);

    let requirements: Vec<pep508_rs::Requirement> = if let Some(limit) = args.limit {
        lines.take(limit).collect::<Result<_, _>>()?
    } else {
        lines.collect::<Result<_, _>>()?
    };
    let total = requirements.len();

    let venv = PythonEnvironment::from_virtualenv(&cache)?;
    let in_flight = InFlight::default();
    let client = RegistryClientBuilder::new(cache.clone()).build();

    let header_span = info_span!("resolve many");
    header_span.pb_set_style(&ProgressStyle::default_bar());
    header_span.pb_set_length(total as u64);
    let _header_span_enter = header_span.enter();

    let no_build = if args.no_build {
        NoBuild::All
    } else {
        NoBuild::None
    };

    let mut tasks = futures::stream::iter(requirements)
        .map(|requirement| {
            async {
                // Use a separate `InMemoryIndex` for each requirement.
                let index = InMemoryIndex::default();
                let index_locations = IndexLocations::default();
                let setup_py = SetupPyStrategy::default();
                let flat_index = FlatIndex::default();
                let config_settings = ConfigSettings::default();

                // Create a `BuildDispatch` for each requirement.
                let build_dispatch = BuildDispatch::new(
                    &client,
                    &cache,
                    venv.interpreter(),
                    &index_locations,
                    &flat_index,
                    &index,
                    &in_flight,
                    setup_py,
                    &config_settings,
                    BuildIsolation::Isolated,
                    install_wheel_rs::linker::LinkMode::default(),
                    &no_build,
                    &NoBinary::None,
                );

                let start = Instant::now();

                let requirement = if args.latest_version && requirement.version_or_url.is_none() {
                    if let Some(version) = find_latest_version(&client, &requirement.name).await {
                        let equals_version = VersionOrUrl::VersionSpecifier(
                            VersionSpecifiers::from(VersionSpecifier::equals_version(version)),
                        );
                        pep508_rs::Requirement {
                            name: requirement.name,
                            extras: requirement.extras,
                            version_or_url: Some(equals_version),
                            marker: None,
                        }
                    } else {
                        requirement
                    }
                } else {
                    requirement
                };

                let result = build_dispatch
                    .resolve(&[
                        Requirement::from_pep508(requirement.clone()).expect("Invalid requirement")
                    ])
                    .await;
                (requirement.to_string(), start.elapsed(), result)
            }
        })
        .buffer_unordered(args.num_tasks);

    let mut success = 0usize;
    let mut errors = Vec::new();
    while let Some(result) = tasks.next().await {
        let (package, duration, result) = result;
        match result {
            Ok(_) => {
                info!(
                    "Success ({}/{}, {} ms): {}",
                    success + errors.len(),
                    total,
                    duration.as_millis(),
                    package,
                );
                success += 1;
            }
            Err(err) => {
                let err_formatted =
                    if err
                        .source()
                        .and_then(|err| err.source())
                        .is_some_and(|err| {
                            err.to_string() == "Building source distributions is disabled"
                        })
                    {
                        "Building source distributions is disabled".to_string()
                    } else {
                        err.chain()
                            .map(|err| {
                                let formatted = err.to_string();
                                // Cut overly long c/c++ compile output
                                if formatted.lines().count() > 20 {
                                    let formatted: Vec<_> = formatted.lines().collect();
                                    formatted[..20].join("\n")
                                        + "\n[...]\n"
                                        + &formatted[formatted.len() - 20..].join("\n")
                                } else {
                                    formatted
                                }
                            })
                            .join("\n Caused by: ")
                    };
                info!(
                    "Error for {} ({}/{}, {} ms): {}",
                    package,
                    success + errors.len(),
                    total,
                    duration.as_millis(),
                    err_formatted
                );
                errors.push(package);
            }
        }
        Span::current().pb_inc(1);
    }

    info!("Errors: {}", errors.join(", "));
    info!("Success: {}, Error: {}", success, errors.len());
    Ok(())
}
```
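The error formatting in `resolve_many` keeps the first and last 20 lines of long messages. For messages of 21 to 39 lines, those two windows overlap, so some lines are printed twice. The standalone sketch below applies the same head/tail truncation but only elides when the head and tail are disjoint. The function name `truncate_middle` and its `keep` parameter are hypothetical.

```rust
/// Keep the first and last `keep` lines of `text`, replacing the middle with
/// a `[...]` marker. Only truncates when there are more than `2 * keep`
/// lines, so the head and tail never overlap.
pub fn truncate_middle(text: &str, keep: usize) -> String {
    let lines: Vec<&str> = text.lines().collect();
    if lines.len() <= 2 * keep {
        return text.to_string();
    }
    format!(
        "{}\n[...]\n{}",
        lines[..keep].join("\n"),
        lines[lines.len() - keep..].join("\n")
    )
}
```

With `keep = 20`, this matches the original output for messages longer than 40 lines and leaves shorter messages untouched.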