## Summary

We'll revert back to the crates.io release once it's up-to-date, but

better to get this out now that Python 3.12 is released.

## Test Plan

`cargo test`

## Summary

This PR enables `ruff format` to format Jupyter notebooks.

Most of the work is contained in a new `format_source` method that

formats a generic `SourceKind`, then returns `Some(transformed)` if the

source required formatting, or `None` otherwise.

Closes https://github.com/astral-sh/ruff/issues/7598.

## Test Plan

Ran `cat foo.py | cargo run -p ruff_cli -- format --stdin-filename

Untitled.ipynb`; verified that the console showed a reasonable error:

```console

warning: Failed to read notebook Untitled.ipynb: Expected a Jupyter Notebook, which must be internally stored as JSON, but this file isn't valid JSON: EOF while parsing a value at line 1 column 0

```

Ran `cat Untitled.ipynb | cargo run -p ruff_cli -- format

--stdin-filename Untitled.ipynb`; verified that the JSON output

contained formatted source code.

## Summary

When writing back notebooks via `stdout`, we need to write back the

entire JSON content, not _just_ the fixed source code. Otherwise,

writing the output _back_ to the file will yield an invalid notebook.

Closes https://github.com/astral-sh/ruff/issues/7747

## Test Plan

`cargo test`

## Summary

It turns out that _some_ identifiers can contain newlines --

specifically, dot-delimited import identifiers, like:

```python

import foo\

.bar

```

At present, we print all identifiers verbatim, which causes us to retain

the `\` in the formatted output. This also leads to violating some debug

assertions (see the linked issue, though that's a symptom of this

formatting failure).

This PR adds detection for import identifiers that contain newlines, and

formats them via `text` (slow) rather than `source_code_slice` (fast) in

those cases.

Closes https://github.com/astral-sh/ruff/issues/7734.

## Test Plan

`cargo test`

## Summary

There's no way for users to fix this warning if they're intentionally

using an "invalid" PEP 593 annotation, as is the case in CPython. This

is a symptom of having warnings that aren't themselves diagnostics. If

we want this to be user-facing, we should add a diagnostic for it!

## Test Plan

Ran `cargo run -p ruff_cli -- check foo.py -n` on:

```python

from typing import Annotated

Annotated[int]

```

## Summary

If we have, e.g.:

```python

sum((

factor.dims for factor in bases

), [])

```

We generate three edits: two insertions (for the `operator` and

`functools` imports), and then one replacement (for the `sum` call

itself). We need to ensure that the insertions come before the

replacement; otherwise, the edits will appear overlapping and

out-of-order.

Closes https://github.com/astral-sh/ruff/issues/7718.

## Summary

This PR fixes a bug where if a Windows newline (`\r\n`) character was

escaped, then only the `\r` was consumed and not `\n` leading to an

unterminated string error.

## Test Plan

Add new test cases to check the newline escapes.

fixes: #7632

## Summary

This PR fixes the bug where the value of a string node type includes the

escaped mac/windows newline character.

Note that the token value still includes them, it's only removed when

parsing the string content.

## Test Plan

Add new test cases for the string node type to check that the escapes

aren't being included in the string value.

fixes: #7723

## Summary

This PR modifies the `line-too-long` and `doc-line-too-long` rules to

ignore lines that are too long due to the presence of a pragma comment

(e.g., `# type: ignore` or `# noqa`). That is, if a line only exceeds

the limit due to the pragma comment, it will no longer be flagged as

"too long". This behavior mirrors that of the formatter, thus ensuring

that we don't flag lines under E501 that the formatter would otherwise

avoid wrapping.

As a concrete example, given a line length of 88, the following would

_no longer_ be considered an E501 violation:

```python

# The string literal is 88 characters, including quotes.

"shape:shape:shape:shape:shape:shape:shape:shape:shape:shape:shape:shape:shape:shape:sh" # type: ignore

```

This, however, would:

```python

# The string literal is 89 characters, including quotes.

"shape:shape:shape:shape:shape:shape:shape:shape:shape:shape:shape:shape:shape:shape:sha" # type: ignore

```

In addition to mirroring the formatter, this also means that adding a

pragma comment (like `# noqa`) won't _cause_ additional violations to

appear (namely, E501). It's very common for users to add a `# type:

ignore` or similar to a line, only to find that they then have to add a

suppression comment _after_ it that was required before, as in `# type:

ignore # noqa: E501`.

Closes https://github.com/astral-sh/ruff/issues/7471.

## Test Plan

`cargo test`

## Summary

This PR fixes the bug where the `NotebookIndex` was not being computed

when

using stdin as the input source.

## Test Plan

On `main`, the diagnostic output won't include the cell number when

using stdin

while it'll be included after this fix.

### `main`

```console

$ cat ~/playground/ruff/notebooks/test.ipynb | cargo run --bin ruff -- check --isolated --no-cache - --stdin-filename ~/playground/ruff/notebooks/test.ipynb

/Users/dhruv/playground/ruff/notebooks/test.ipynb:2:8: F401 [*] `math` imported but unused

/Users/dhruv/playground/ruff/notebooks/test.ipynb:7:8: F811 Redefinition of unused `random` from line 1

/Users/dhruv/playground/ruff/notebooks/test.ipynb:8:8: F401 [*] `pprint` imported but unused

/Users/dhruv/playground/ruff/notebooks/test.ipynb:12:4: F632 [*] Use `==` to compare constant literals

/Users/dhruv/playground/ruff/notebooks/test.ipynb:13:38: F632 [*] Use `==` to compare constant literals

Found 5 errors.

[*] 4 potentially fixable with the --fix option.

```

### `dhruv/notebook-index-stdin`

```console

$ cat ~/playground/ruff/notebooks/test.ipynb | cargo run --bin ruff -- check --isolated --no-cache - --stdin-filename ~/playground/ruff/notebooks/test.ipynb

/Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 3:2:8: F401 [*] `math` imported but unused

/Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 5:1:8: F811 Redefinition of unused `random` from line 1

/Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 5:2:8: F401 [*] `pprint` imported but unused

/Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 6:2:4: F632 [*] Use `==` to compare constant literals

/Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 6:3:38: F632 [*] Use `==` to compare constant literals

Found 5 errors.

[*] 4 potentially fixable with the --fix option.

```

## Summary

This PR implements a variety of optimizations to improve performance of

the Eradicate rule, which always shows up in all-rules benchmarks and

bothers me. (These improvements are not hugely important, but it was

kind of a fun Friday thing to spent a bit of time on.)

The improvements include:

- Doing cheaper work first (checking for some explicit substrings

upfront).

- Using `aho-corasick` to speed an exact substring search.

- Merging multiple regular expressions using a `RegexSet`.

- Removing some unnecessary `\s*` and other pieces from the regular

expressions (since we already trim strings before matching on them).

## Test Plan

I benchmarked this function in a standalone crate using a variety of

cases. Criterion reports that this version is up to 80% faster, and

almost every case is at least 50% faster:

```

Eradicate/Detection/# Warn if we are installing over top of an existing installation. This can

time: [101.84 ns 102.32 ns 102.82 ns]

change: [-77.166% -77.062% -76.943%] (p = 0.00 < 0.05)

Performance has improved.

Found 3 outliers among 100 measurements (3.00%)

3 (3.00%) high mild

Eradicate/Detection/#from foo import eradicate

time: [74.872 ns 75.096 ns 75.314 ns]

change: [-84.180% -84.131% -84.079%] (p = 0.00 < 0.05)

Performance has improved.

Found 1 outliers among 100 measurements (1.00%)

1 (1.00%) high mild

Eradicate/Detection/# encoding: utf8

time: [46.522 ns 46.862 ns 47.237 ns]

change: [-29.408% -28.918% -28.471%] (p = 0.00 < 0.05)

Performance has improved.

Found 7 outliers among 100 measurements (7.00%)

6 (6.00%) high mild

1 (1.00%) high severe

Eradicate/Detection/# Issue #999

time: [16.942 ns 16.994 ns 17.058 ns]

change: [-57.243% -57.064% -56.815%] (p = 0.00 < 0.05)

Performance has improved.

Found 3 outliers among 100 measurements (3.00%)

2 (2.00%) high mild

1 (1.00%) high severe

Eradicate/Detection/# type: ignore

time: [43.074 ns 43.163 ns 43.262 ns]

change: [-17.614% -17.390% -17.152%] (p = 0.00 < 0.05)

Performance has improved.

Found 5 outliers among 100 measurements (5.00%)

3 (3.00%) high mild

2 (2.00%) high severe

Eradicate/Detection/# user_content_type, _ = TimelineEvent.objects.using(db_alias).get_or_create(

time: [209.40 ns 209.81 ns 210.23 ns]

change: [-32.806% -32.630% -32.470%] (p = 0.00 < 0.05)

Performance has improved.

Eradicate/Detection/# this is = to that :(

time: [72.659 ns 73.068 ns 73.473 ns]

change: [-68.884% -68.775% -68.655%] (p = 0.00 < 0.05)

Performance has improved.

Found 9 outliers among 100 measurements (9.00%)

7 (7.00%) high mild

2 (2.00%) high severe

Eradicate/Detection/#except Exception:

time: [92.063 ns 92.366 ns 92.691 ns]

change: [-64.204% -64.052% -63.909%] (p = 0.00 < 0.05)

Performance has improved.

Found 4 outliers among 100 measurements (4.00%)

2 (2.00%) high mild

2 (2.00%) high severe

Eradicate/Detection/#print(1)

time: [68.359 ns 68.537 ns 68.725 ns]

change: [-72.424% -72.356% -72.278%] (p = 0.00 < 0.05)

Performance has improved.

Found 2 outliers among 100 measurements (2.00%)

1 (1.00%) low mild

1 (1.00%) high mild

Eradicate/Detection/#'key': 1 + 1,

time: [79.604 ns 79.865 ns 80.135 ns]

change: [-69.787% -69.667% -69.549%] (p = 0.00 < 0.05)

Performance has improved.

```

## Summary

The parser now uses the raw source code as global context and slices

into it to parse debug text. It turns out we were always passing in the

_old_ source code, so when code was fixed, we were making invalid

accesses. This PR modifies the call to use the _fixed_ source code,

which will always be consistent with the tokens.

Closes https://github.com/astral-sh/ruff/issues/7711.

## Test Plan

`cargo test`

## Summary

This wasn't necessary in the past, since we _only_ applied this rule to

bodies that contained two statements, one of which was a `pass`. Now

that it applies to any `pass` in a block with multiple statements, we

can run into situations in which we remove both passes, and so need to

apply the fixes in isolation.

See:

https://github.com/astral-sh/ruff/issues/7455#issuecomment-1741107573.

## Summary

The markdown documentation was present, but in the wrong place, so was

not displaying on the website. I moved it and added some references.

Related to #2646.

## Test Plan

`python scripts/check_docs_formatted.py`

Previously attempted to repair these tests at

https://github.com/astral-sh/ruff/pull/6992 but I don't think we should

prioritize that and instead I would like to remove this dead code.

## Summary

Extend `unnecessary-pass` (`PIE790`) to trigger on all unnecessary

`pass` statements by checking for `pass` statements in any class or

function body with more than one statement.

Closes#7600.

## Test Plan

`cargo test`

Part of #1646.

## Summary

Implement `S505`

([`weak_cryptographic_key`](https://bandit.readthedocs.io/en/latest/plugins/b505_weak_cryptographic_key.html))

rule from `bandit`.

For this rule, `bandit` [reports the issue

with](https://github.com/PyCQA/bandit/blob/1.7.5/bandit/plugins/weak_cryptographic_key.py#L47-L56):

- medium severity for DSA/RSA < 2048 bits and EC < 224 bits

- high severity for DSA/RSA < 1024 bits and EC < 160 bits

Since Ruff does not handle severities for `bandit`-related rules, we

could either report the issue if we have lower values than medium

severity, or lower values than high one. Two reasons led me to choose

the first option:

- a medium severity issue is still a security issue we would want to

report to the user, who can then decide to either handle the issue or

ignore it

- `bandit` [maps the EC key algorithms to their respective key lengths

in

bits](https://github.com/PyCQA/bandit/blob/1.7.5/bandit/plugins/weak_cryptographic_key.py#L112-L133),

but there is no value below 160 bits, so technically `bandit` would

never report medium severity issues for EC keys, only high ones

Another consideration is that as shared just above, for EC key

algorithms, `bandit` has a mapping to map the algorithms to their

respective key lengths. In the implementation in Ruff, I rather went

with an explicit list of EC algorithms known to be vulnerable (which

would thus be reported) rather than implementing a mapping to retrieve

the associated key length and comparing it with the minimum value.

## Test Plan

Snapshot tests from

https://github.com/PyCQA/bandit/blob/1.7.5/examples/weak_cryptographic_key_sizes.py.

## Summary

Extend the `task-tags` checking logic to ignore TODO tags (with or

without parentheses). For example,

```python

# TODO(tjkuson): Rewrite in Rust

```

is no longer flagged as commented-out code.

Closes#7031.

I also updated the documentation to inform users that the rule is prone

to false positives like this!

EDIT: Accidentally linked to the wrong issue when first opening this PR,

now corrected.

## Test Plan

`cargo test`

## Summary

When lexing a number like `0x995DC9BBDF1939FA` that exceeds our small

number representation, we were only storing the portion after the base

(in this case, `995DC9BBDF1939FA`). When using that representation in

code generation, this could lead to invalid syntax, since

`995DC9BBDF1939FA)` on its own is not a valid integer.

This PR modifies the code to store the full span, including the radix

prefix.

See:

https://github.com/astral-sh/ruff/issues/7455#issuecomment-1739802958.

## Test Plan

`cargo test`

Closes#7434

Replaces the `PREVIEW` selector (removed in #7389) with a configuration

option `explicit-preview-rules` which requires selectors to use exact

rule codes for all preview rules. This allows users to enable preview

without opting into all preview rules at once.

## Test plan

Unit tests

## Summary

At present, `quote-style` is used universally. However, [PEP

8](https://peps.python.org/pep-0008/) and [PEP

257](https://peps.python.org/pep-0257/) suggest that while either single

or double quotes are acceptable in general (as long as they're

consistent), docstrings and triple-quoted strings should always use

double quotes. In our research, the vast majority of Ruff users that

enable the `flake8-quotes` rules only enable them for inline strings

(i.e., non-triple-quoted strings).

Additionally, many Black forks (like Blue and Pyink) use double quotes

for docstrings and triple-quoted strings.

Our decision for now is to always prefer double quotes for triple-quoted

strings (which should include docstrings). Based on feedback, we may

consider adding additional options (e.g., a `"preserve"` mode, to avoid

changing quotes; or a `"multiline-quote-style"` to override this).

Closes https://github.com/astral-sh/ruff/issues/7615.

## Test Plan

`cargo test`

## Summary

Extends the pragma comment detection in the formatter to support

case-insensitive `noqa` (as supposed by Ruff), plus a variety of other

pragmas (`isort:`, `nosec`, etc.).

Also extracts the detection out into the trivia crate so that we can

reuse it in the linter (see:

https://github.com/astral-sh/ruff/issues/7471).

## Test Plan

`cargo test`

## Summary

No-op refactor, but we can evaluate early if the first part of

`preserve_parentheses || has_comments` is `true`, and thus avoid looking

up the node comments.

## Test Plan

`cargo test`

## Summary

The formatting for tuple patterns is now intended to match that of `for`

loops:

- Always parenthesize single-element tuples.

- Don't break on the trailing comma in single-element tuples.

- For other tuples, preserve the parentheses, and insert if-breaks.

Closes https://github.com/astral-sh/ruff/issues/7681.

## Test Plan

`cargo test`

## Summary

`PGH002`, which checks for use of deprecated `logging.warn` calls, did

not check for calls made on the attribute `warn` yet. Since

https://github.com/astral-sh/ruff/pull/7521 we check both cases for

similar rules wherever possible. To be consistent this PR expands PGH002

to do the same.

## Test Plan

Expanded existing fixtures with `logger.warn()` calls

## Issue links

Fixes final inconsistency mentioned in

https://github.com/astral-sh/ruff/issues/7502

## Summary

As we bind the `ast::ExprCall` in the big `match expr` in

`expression.rs`

```rust

Expr::Call(

call @ ast::ExprCall {

...

```

There is no need for additional `let/if let` checks on `ExprCall` in

downstream rules. Found a few older rules which still did this while

working on something else. This PR removes the redundant check from

these rules.

## Test Plan

`cargo test`

## Summary

It's common practice to name derive macros the same as the trait that they implement (`Debug`, `Display`, `Eq`, `Serialize`, ...).

This PR renames the `ConfigurationOptions` derive macro to `OptionsMetadata` to match the trait name.

## Test Plan

`cargo build`

## Summary

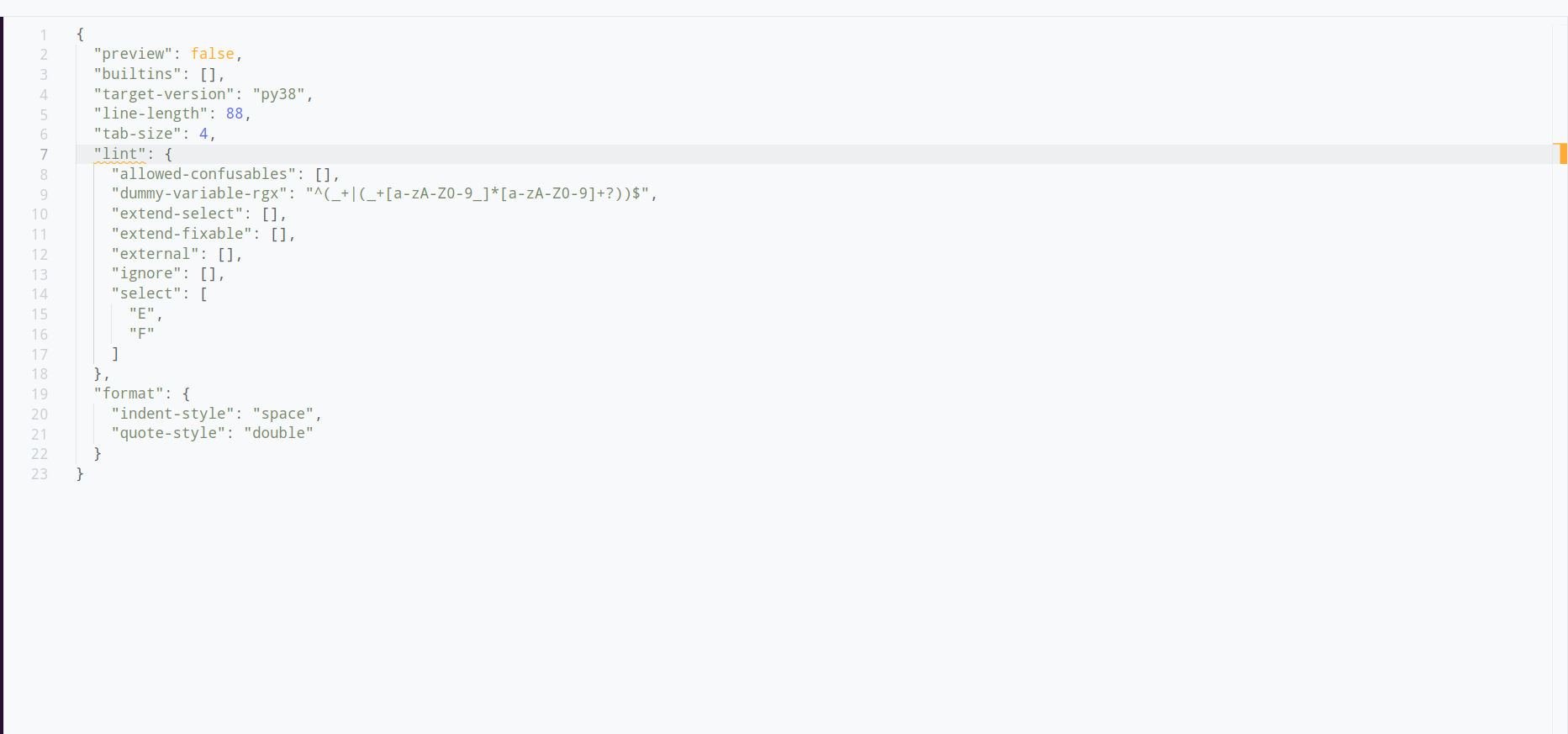

This PR adds a new `lint` section to the configuration that groups all linter-specific settings. The existing top-level configurations continue to work without any warning because the `lint.*` settings are experimental.

The configuration merges the top level and `lint.*` settings where the settings in `lint` have higher precedence (override the top-level settings). The reasoning behind this is that the settings in `lint.` are more specific and more specific settings should override less specific settings.

I decided against showing the new `lint.*` options on our website because it would make the page extremely long (it's technically easy to do, just attribute `lint` with `[option_group`]). We may want to explore adding an `alias` field to the `option` attribute and show the alias on the website along with its regular name.

## Test Plan

* I added new integration tests

* I verified that the generated `options.md` is identical

* Verified the default settings in the playground

## Summary

This PR adds support for named expressions when analyzing `__all__`

assignments, as per https://github.com/astral-sh/ruff/issues/7672. It

also loosens the enforcement around assignments like: `__all__ =

list(some_other_expression)`. We shouldn't flag these as invalid, even

though we can't analyze the members, since we _know_ they evaluate to a

`list`.

Closes https://github.com/astral-sh/ruff/issues/7672.

## Test Plan

`cargo test`

## Summary

Fixes#7616 by ensuring that

[B006](https://docs.astral.sh/ruff/rules/mutable-argument-default/#mutable-argument-default-b006)

fixes are inserted after module imports.

I have created a new test file, `B006_5.py`. This is mainly because I

have been working on this on and off, and the merge conflicts were

easier to handle in a separate file. If needed, I can move it into

another file.

## Test Plan

`cargo test`

## Summary

Expands several rules to also check for `Expr::Name` values. As they

would previously not consider:

```python

from logging import error

error("foo")

```

as potential violations

```python

import logging

logging.error("foo")

```

as potential violations leading to inconsistent behaviour.

The rules impacted are:

- `BLE001`

- `TRY400`

- `TRY401`

- `PLE1205`

- `PLE1206`

- `LOG007`

- `G001`-`G004`

- `G101`

- `G201`

- `G202`

## Test Plan

Fixtures for all impacted rules expanded.

## Issue Link

Refers: https://github.com/astral-sh/ruff/issues/7502

<!--

Thank you for contributing to Ruff! To help us out with reviewing,

please consider the following:

- Does this pull request include a summary of the change? (See below.)

- Does this pull request include a descriptive title?

- Does this pull request include references to any relevant issues?

-->

## Summary

<!-- What's the purpose of the change? What does it do, and why? -->

The note about rules being in preview was not being displayed for legacy

nursery rules.

Adds a link to the new preview documentation as well.

## Test Plan

<!-- How was it tested? -->

Built locally and checked a nursery rule e.g.

http://127.0.0.1:8000/ruff/rules/no-indented-block-comment/

## Summary

Pass around a `Settings` struct instead of individual members to

simplify function signatures and to make it easier to add new settings.

This PR was suggested in [this

comment](https://github.com/astral-sh/ruff/issues/1567#issuecomment-1734182803).

## Note on the choices

I chose which functions to modify based on which seem most likely to use

new settings, but suggestions on my choices are welcome!

## Summary

This PR fixes the bug where the cell indices displayed in the `--diff` output

and the ones in the normal output were different. This was due to the fact that

the `--diff` output was using the `enumerate` function to iterate over

the cells which starts at 0.

## Test Plan

Ran the following command with and without the `--diff` flag:

```console

cargo run --bin ruff -- check --no-cache --isolated ~/playground/ruff/notebooks/test.ipynb

```

### `main`

<details><summary>Diagnostics output:</summary>

<p>

```console

$ cargo run --bin ruff -- check --no-cache --isolated ~/playground/ruff/notebooks/test.ipynb

/Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 3:2:8: F401 [*] `math` imported but unused

/Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 5:1:8: F811 Redefinition of unused `random` from line 1

/Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 5:2:8: F401 [*] `pprint` imported but unused

/Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 6:2:4: F632 [*] Use `==` to compare constant literals

/Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 6:3:38: F632 [*] Use `==` to compare constant literals

Found 5 errors.

[*] 4 potentially fixable with the --fix option.

```

</p>

</details>

<details><summary>Diff output:</summary>

<p>

```console

$ cargo run --bin ruff -- check --no-cache --isolated ~/playground/ruff/notebooks/test.ipynb --diff

--- /Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 2

+++ /Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 2

@@ -1,2 +1 @@

-import random

-import math

+import random

--- /Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 4

+++ /Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 4

@@ -1,4 +1,3 @@

import random

-import pprint

random.randint(10, 20)

--- /Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 5

+++ /Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 5

@@ -1,3 +1,3 @@

foo = 1

-if foo is 2:

- raise ValueError(f"Invalid foo: {foo is 1}")

+if foo == 2:

+ raise ValueError(f"Invalid foo: {foo == 1}")

Would fix 4 errors.

```

</p>

</details>

### `dhruv/consistent-cell-indices`

<details><summary>Diagnostic output:</summary>

<p>

```console

$ cargo run --bin ruff -- check --no-cache --isolated ~/playground/ruff/notebooks/test.ipynb

/Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 3:2:8: F401 [*] `math` imported but unused

/Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 5:1:8: F811 Redefinition of unused `random` from line 1

/Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 5:2:8: F401 [*] `pprint` imported but unused

/Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 6:2:4: F632 [*] Use `==` to compare constant literals

/Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 6:3:38: F632 [*] Use `==` to compare constant literals

Found 5 errors.

[*] 4 potentially fixable with the --fix option.

```

</p>

</details>

<details><summary>Diff output:</summary>

<p>

```console

$ cargo run --bin ruff -- check --no-cache --isolated ~/playground/ruff/notebooks/test.ipynb --diff

--- /Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 3

+++ /Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 3

@@ -1,2 +1 @@

-import random

-import math

+import random

--- /Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 5

+++ /Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 5

@@ -1,4 +1,3 @@

import random

-import pprint

random.randint(10, 20)

--- /Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 6

+++ /Users/dhruv/playground/ruff/notebooks/test.ipynb:cell 6

@@ -1,3 +1,3 @@

foo = 1

-if foo is 2:

- raise ValueError(f"Invalid foo: {foo is 1}")

+if foo == 2:

+ raise ValueError(f"Invalid foo: {foo == 1}")

Would fix 4 errors.

```

</p>

</details>

fixes: #6673

I got confused and refactored a bit, now the naming should be more

consistent. This is the basis for the range formatting work.

Chages:

* `format_module` -> `format_module_source` (format a string)

* `format_node` -> `format_module_ast` (format a program parsed into an

AST)

* Added `parse_ok_tokens` that takes `Token` instead of `Result<Token>`

* Call the source code `source` consistently

* Added a `tokens_and_ranges` helper

* `python_ast` -> `module` (because that's the type)

**Summary** Check that `closefd` and `opener` aren't being used with

`builtin.open()` before suggesting `Path.open()` because pathlib doesn't

support these arguments.

Closes#7620

**Test Plan** New cases in the fixture.

## Summary

This is a follow-up to #7469 that attempts to achieve similar gains, but

without introducing malachite. Instead, this PR removes the `BigInt`

type altogether, instead opting for a simple enum that allows us to

store small integers directly and only allocate for values greater than

`i64`:

```rust

/// A Python integer literal. Represents both small (fits in an `i64`) and large integers.

#[derive(Clone, PartialEq, Eq, Hash)]

pub struct Int(Number);

#[derive(Debug, Clone, PartialEq, Eq, Hash)]

pub enum Number {

/// A "small" number that can be represented as an `i64`.

Small(i64),

/// A "large" number that cannot be represented as an `i64`.

Big(Box<str>),

}

impl std::fmt::Display for Number {

fn fmt(&self, f: &mut std::fmt::Formatter<'_>) -> std::fmt::Result {

match self {

Number::Small(value) => write!(f, "{value}"),

Number::Big(value) => write!(f, "{value}"),

}

}

}

```

We typically don't care about numbers greater than `isize` -- our only

uses are comparisons against small constants (like `1`, `2`, `3`, etc.),

so there's no real loss of information, except in one or two rules where

we're now a little more conservative (with the worst-case being that we

don't flag, e.g., an `itertools.pairwise` that uses an extremely large

value for the slice start constant). For simplicity, a few diagnostics

now show a dedicated message when they see integers that are out of the

supported range (e.g., `outdated-version-block`).

An additional benefit here is that we get to remove a few dependencies,

especially `num-bigint`.

## Test Plan

`cargo test`

## Summary

This is whitespace as per `is_python_whitespace`, and right now it tends

to lead to panics in the formatter. Seems reasonable to treat it as

whitespace in the `SimpleTokenizer` too.

Closes .https://github.com/astral-sh/ruff/issues/7624.

## Summary

Given:

```python

if True:

if True:

pass

else:

pass

# a

# b

# c

else:

pass

```

We want to preserve the newline after the `# c` (before the `else`).

However, the `last_node` ends at the `pass`, and the comments are

trailing comments on the `pass`, not trailing comments on the

`last_node` (the `if`). As such, when counting the trailing newlines on

the outer `if`, we abort as soon as we see the comment (`# a`).

This PR changes the logic to skip _all_ comments (even those with

newlines between them). This is safe as we know that there are no

"leading" comments on the `else`, so there's no risk of skipping those

accidentally.

Closes https://github.com/astral-sh/ruff/issues/7602.

## Test Plan

No change in compatibility.

Before:

| project | similarity index | total files | changed files |

|--------------|------------------:|------------------:|------------------:|

| cpython | 0.76083 | 1789 | 1631 |

| django | 0.99983 | 2760 | 36 |

| transformers | 0.99963 | 2587 | 319 |

| twine | 1.00000 | 33 | 0 |

| typeshed | 0.99979 | 3496 | 22 |

| warehouse | 0.99967 | 648 | 15 |

| zulip | 0.99972 | 1437 | 21 |

After:

| project | similarity index | total files | changed files |

|--------------|------------------:|------------------:|------------------:|

| cpython | 0.76083 | 1789 | 1631 |

| django | 0.99983 | 2760 | 36 |

| transformers | 0.99963 | 2587 | 319 |

| twine | 1.00000 | 33 | 0 |

| typeshed | 0.99983 | 3496 | 18 |

| warehouse | 0.99967 | 648 | 15 |

| zulip | 0.99972 | 1437 | 21 |

## Summary

This PR fixes the autofix behavior for `PT022` to create an additional

edit for the return type if it's present. The edit will update the

return type from `Generator[T, ...]` to `T`. As per the [official

documentation](https://docs.python.org/3/library/typing.html?highlight=typing%20generator#typing.Generator),

the first position is the yield type, so we can ignore other positions.

```python

typing.Generator[YieldType, SendType, ReturnType]

```

## Test Plan

Add new test cases, `cargo test` and review the snapshots.

fixes: #7610

## Summary

Implement

[`simplify-print`](https://github.com/dosisod/refurb/blob/master/refurb/checks/builtin/print.py)

as `print-empty-string` (`FURB105`).

Extends the original rule in that it also checks for multiple empty

string positional arguments with an empty string separator.

Related to #1348.

## Test Plan

`cargo test`

Similar to tuples, a generator _can_ be parenthesized or

unparenthesized. Only search for bracketed comments if it contains its

own parentheses.

Closes https://github.com/astral-sh/ruff/issues/7623.

## Summary

Given:

```python

if True:

if True:

if True:

pass

#a

#b

#c

else:

pass

```

When determining the placement of the various comments, we compute the

indentation depth of each comment, and then compare it to the depth of

the previous statement. It turns out this can lead to reordering

comments, e.g., above, `#b` is assigned as a trailing comment of `pass`,

and so gets reordered above `#a`.

This PR modifies the logic such that when we compute the indentation

depth of `#b`, we limit it to at most the indentation depth of `#a`. In

other words, when analyzing comments at the end of branches, we don't

let successive comments go any _deeper_ than their preceding comments.

Closes https://github.com/astral-sh/ruff/issues/7602.

## Test Plan

`cargo test`

No change in similarity.

Before:

| project | similarity index | total files | changed files |

|--------------|------------------:|------------------:|------------------:|

| cpython | 0.76083 | 1789 | 1631 |

| django | 0.99983 | 2760 | 36 |

| transformers | 0.99963 | 2587 | 319 |

| twine | 1.00000 | 33 | 0 |

| typeshed | 0.99979 | 3496 | 22 |

| warehouse | 0.99967 | 648 | 15 |

| zulip | 0.99972 | 1437 | 21 |

After:

| project | similarity index | total files | changed files |

|--------------|------------------:|------------------:|------------------:|

| cpython | 0.76083 | 1789 | 1631 |

| django | 0.99983 | 2760 | 36 |

| transformers | 0.99963 | 2587 | 319 |

| twine | 1.00000 | 33 | 0 |

| typeshed | 0.99979 | 3496 | 22 |

| warehouse | 0.99967 | 648 | 15 |

| zulip | 0.99972 | 1437 | 21 |

## Summary

Given:

```python

# -*- coding: utf-8 -*-

import random

# Defaults for arguments are defined here

# args.threshold = None;

logger = logging.getLogger("FastProject")

```

We want to count the number of newlines after `import random`, to ensure

that there's _at least one_, but up to two.

Previously, we used the end range of the statement (then skipped

trivia); instead, we need to use the end of the _last comment_. This is

similar to #7556.

Closes https://github.com/astral-sh/ruff/issues/7604.

## Summary

B005 only flags `.strip()` calls for which the argument includes

duplicate characters. This is consistent with bugbear, but isn't

explained in the documentation.

## Summary

Currently, this happens

```sh

$ echo "print()" | ruff format -

#Notice that nothing went to stdout

```

Which does not match `ruff check --fix - ` behavior and deletes my code

every time I format it (more or less 5 times per minute 😄).

I just checked that my example works as the change was very

straightforward.

It is apparently possible to add files to the git index, even if they

are part of the gitignore (see e.g.

https://stackoverflow.com/questions/45400361/why-is-gitignore-not-ignoring-my-files,

even though it's strange that the gitignore entries existed before the

files were added, i wouldn't know how to get them added in that case). I

ran

```

git rm -r --cached .

```

then change the gitignore not actually ignore those files with the

exception of

`crates/ruff_cli/resources/test/fixtures/cache_mutable/source.py`, which

is actually a generated file.

## Summary

This is only used for the `level` field in relative imports (e.g., `from

..foo import bar`). It seems unnecessary to use a wrapper here, so this

PR changes to a `u32` directly.

## Summary

When we format the trailing comments on a clause body, we check if there

are any newlines after the last statement; if not, we insert one.

This logic didn't take into account that the last statement could itself

have trailing comments, as in:

```python

if True:

pass

# comment

else:

pass

```

We were thus inserting a newline after the comment, like:

```python

if True:

pass

# comment

else:

pass

```

In the context of function definitions, this led to an instability,

since we insert a newline _after_ a function, which would in turn lead

to the bug above appearing in the second formatting pass.

Closes https://github.com/astral-sh/ruff/issues/7465.

## Test Plan

`cargo test`

Small improvement in `transformers`, but no regressions.

Before:

| project | similarity index | total files | changed files |

|--------------|------------------:|------------------:|------------------:|

| cpython | 0.76083 | 1789 | 1631 |

| django | 0.99983 | 2760 | 36 |

| transformers | 0.99956 | 2587 | 404 |

| twine | 1.00000 | 33 | 0 |

| typeshed | 0.99983 | 3496 | 18 |

| warehouse | 0.99967 | 648 | 15 |

| zulip | 0.99972 | 1437 | 21 |

After:

| project | similarity index | total files | changed files |

|--------------|------------------:|------------------:|------------------:|

| cpython | 0.76083 | 1789 | 1631 |

| django | 0.99983 | 2760 | 36 |

| **transformers** | **0.99957** | **2587** | **402** |

| twine | 1.00000 | 33 | 0 |

| typeshed | 0.99983 | 3496 | 18 |

| warehouse | 0.99967 | 648 | 15 |

| zulip | 0.99972 | 1437 | 21 |

## Summary

If a function has no parameters (and no comments within the parameters'

`()`), we're supposed to wrap the return annotation _whenever_ it

breaks. However, our `empty_parameters` test didn't properly account for

the case in which the parameters include a newline (but no other

content), like:

```python

def get_dashboards_hierarchy(

) -> Dict[Type['BaseDashboard'], List[Type['BaseDashboard']]]:

"""Get hierarchy of dashboards classes.

Returns:

Dict of dashboards classes.

"""

dashboards_hierarchy = {}

```

This PR fixes that detection. Instead of lexing, it now checks if the

parameters itself is empty (or if it contains comments).

Closes https://github.com/astral-sh/ruff/issues/7457.

## Summary

## Stack Summary

This stack splits `Settings` into `FormatterSettings` and `LinterSettings` and moves it into `ruff_workspace`. This change is necessary to add the `FormatterSettings` to `Settings` without adding `ruff_python_formatter` as a dependency to `ruff_linter` (and the linter should not contain the formatter settings).

A quick overview of our settings struct at play:

* `Options`: 1:1 representation of the options in the `pyproject.toml` or `ruff.toml`. Used for deserialization.

* `Configuration`: Resolved `Options`, potentially merged from multiple configurations (when using `extend`). The representation is very close if not identical to the `Options`.

* `Settings`: The resolved configuration that uses a data format optimized for reading. Optional fields are initialized with their default values. Initialized by `Configuration::into_settings` .

The goal of this stack is to split `Settings` into tool-specific resolved `Settings` that are independent of each other. This comes at the advantage that the individual crates don't need to know anything about the other tools. The downside is that information gets duplicated between `Settings`. Right now the duplication is minimal (`line-length`, `tab-width`) but we may need to come up with a solution if more expensive data needs sharing.

This stack focuses on `Settings`. Splitting `Configuration` into some smaller structs is something I'll follow up on later.

## PR Summary

This PR moves the `ResolverSettings` and `Settings` struct to `ruff_workspace`. `LinterSettings` remains in `ruff_linter` because it gets passed to lint rules, the `Checker` etc.

## Test Plan

`cargo test`

## Stack Summary

This stack splits `Settings` into `FormatterSettings` and `LinterSettings` and moves it into `ruff_workspace`. This change is necessary to add the `FormatterSettings` to `Settings` without adding `ruff_python_formatter` as a dependency to `ruff_linter` (and the linter should not contain the formatter settings).

A quick overview of our settings struct at play:

* `Options`: 1:1 representation of the options in the `pyproject.toml` or `ruff.toml`. Used for deserialization.

* `Configuration`: Resolved `Options`, potentially merged from multiple configurations (when using `extend`). The representation is very close if not identical to the `Options`.

* `Settings`: The resolved configuration that uses a data format optimized for reading. Optional fields are initialized with their default values. Initialized by `Configuration::into_settings` .

The goal of this stack is to split `Settings` into tool-specific resolved `Settings` that are independent of each other. This comes at the advantage that the individual crates don't need to know anything about the other tools. The downside is that information gets duplicated between `Settings`. Right now the duplication is minimal (`line-length`, `tab-width`) but we may need to come up with a solution if more expensive data needs sharing.

This stack focuses on `Settings`. Splitting `Configuration` into some smaller structs is something I'll follow up on later.

## PR Summary

This PR extracts the linter-specific settings into a new `LinterSettings` struct and adds it as a `linter` field to the `Settings` struct. This is in preparation for moving `Settings` from `ruff_linter` to `ruff_workspace`

## Test Plan

`cargo test`

## Stack Summary

This stack splits `Settings` into `FormatterSettings` and `LinterSettings` and moves it into `ruff_workspace`. This change is necessary to add the `FormatterSettings` to `Settings` without adding `ruff_python_formatter` as a dependency to `ruff_linter` (and the linter should not contain the formatter settings).

A quick overview of our settings struct at play:

* `Options`: 1:1 representation of the options in the `pyproject.toml` or `ruff.toml`. Used for deserialization.

* `Configuration`: Resolved `Options`, potentially merged from multiple configurations (when using `extend`). The representation is very close if not identical to the `Options`.

* `Settings`: The resolved configuration that uses a data format optimized for reading. Optional fields are initialized with their default values. Initialized by `Configuration::into_settings` .

The goal of this stack is to split `Settings` into tool-specific resolved `Settings` that are independent of each other. This comes at the advantage that the individual crates don't need to know anything about the other tools. The downside is that information gets duplicated between `Settings`. Right now the duplication is minimal (`line-length`, `tab-width`) but we may need to come up with a solution if more expensive data needs sharing.

This stack focuses on `Settings`. Splitting `Configuration` into some smaller structs is something I'll follow up on later.

## PR Summary

This PR extracts a `ResolverSettings` struct that holds all the resolver-relevant fields (uninteresting for the `Formatter` or `Linter`). This will allow us to move the `ResolverSettings` out of `ruff_linter` further up in the stack.

## Test Plan

`cargo test`

(I'll to more extensive testing at the top of this stack)

## Summary

This PR fixes the way NoQA range is inserted to the `NoqaMapping`.

Previously, the way the mapping insertion logic worked was as follows:

1. If the range which is to be inserted _touched_ the previous range, meaning

that the end of the previous range was the same as the start of the new

range, then the new range was added in addition to the previous range.

2. Else, if the new range intersected the previous range, then the previous

range was replaced with the new _intersection_ of the two ranges.

The problem with this logic is that it does not work for the following case:

```python

assert foo, \

"""multi-line

string"""

```

Now, the comments cannot be added to the same line which ends with a continuation

character. So, the `NoQA` directive has to be added to the next line. But, the

next line is also a triple-quoted string, so the `NoQA` directive for that line

needs to be added to the next line. This creates a **union** pattern instead of an

**intersection** pattern.

But, only union doesn't suffice because (1) means that for the edge case where

the range touch only at the end, the union won't take place.

### Solution

1. Replace '<=' with '<' to have a _strict_ insertion case

2. Use union instead of intersection

## Test Plan

Add a new test case. Run the test suite to ensure that nothing is broken.

### Integration

1. Make a `test.py` file with the following contents:

```python

assert foo, \

"""multi-line

string"""

```

2. Run the following command:

```console

$ cargo run --bin ruff -- check --isolated --no-cache --select=F821 test.py

/Users/dhruv/playground/ruff/fstring.py:1:8: F821 Undefined name `foo`

Found 1 error.

```

3. Use `--add-noqa`:

```console

$ cargo run --bin ruff -- check --isolated --no-cache --select=F821 --add-noqa test.py

Added 1 noqa directive.

```

4. Check that the NoQA directive was added in the correct position:

```python

assert foo, \

"""multi-line

string""" # noqa: F821

```

5. Run the `check` command to ensure that the NoQA directive is respected:

```console

$ cargo run --bin ruff -- check --isolated --no-cache --select=F821 test.py

```

fixes: #7530

## Summary

Implement

[`no-ignored-enumerate-items`](https://github.com/dosisod/refurb/blob/master/refurb/checks/builtin/no_ignored_enumerate.py)

as `unnecessary-enumerate` (`FURB148`).

The auto-fix considers if a `start` argument is passed to the

`enumerate()` function. If only the index is used, then the suggested

fix is to pass the `start` value to the `range()` function. So,

```python

for i, _ in enumerate(xs, 1):

...

```

becomes

```python

for i in range(1, len(xs)):

...

```

If the index is ignored and only the value is ignored, and if a start

value greater than zero is passed to `enumerate()`, the rule doesn't

produce a suggestion. I couldn't find a unanimously accepted best way to

iterate over a collection whilst skipping the first n elements. The rule

still triggers, however.

Related to #1348.

## Test Plan

`cargo test`

---------

Co-authored-by: Dhruv Manilawala <dhruvmanila@gmail.com>

Co-authored-by: Charlie Marsh <charlie.r.marsh@gmail.com>

**Summary** Instead of emitting a bogus token per char, we now only emit

on single last bogus token. This leads to much more concise output.

**Test Plan** Updated fixtures

Closes#5497

Needs MkDocs 1.5 to be released.

- [x] https://github.com/mkdocs/mkdocs/milestone/15

## Summary

Uses MkDocs' `not_in_nav` config to hide spam about files in

`docs/rules/` not being in nav.

Close#7479

The `@override` was already implemented

## Test Plan

Tested the code in the issue. After removing all the noqa's, only one

occurrence of `BadName()` raised a violation.

Added a fixture

## Summary

This PR implements a new rule for `flake8-logging` plugin that checks

for uses of `logging.exception()` with `exc_info` set to `False` or a

falsy value. It suggests using `logging.error` in these cases instead.

I am unsure about the name. Open to suggestions there, went with the

most explicit name I could think of in the meantime.

Refer https://github.com/astral-sh/ruff/issues/7248

## Test Plan

Added a new fixture cases and ran `cargo test`

## Summary

This PR updates the `W191` (`tab-indentation`) rule from a line-based to

a token-based rule.

Earlier, the rule used the `triple_quoted_string_ranges` from the

indexer to skip over any lines _inside_ a triple-quoted string. This was the only

use of the ranges. These ranges were extracted through the tokens, so instead

we can directly use the newline tokens to perform the check.

This would also mean that we can remove the `triple_quoted_string_ranges` from

the indexer but I'll hold that off until we have a better idea on #7326

but I don't think it would be a problem to remove it.

This will also fix#7379 once PEP 701 changes are merged.

## Test Plan

`cargo test`

## Summary

This PR implements a new rule for `flake8-logging` plugin that checks

for

`logging.getLogger` calls with either `__file__` or `__cached__` as the

first

argument and generates a suggested fix to use `__name__` instead.

Refer: #7248

## Test Plan

Add test cases and `cargo test`

## Summary

The tokenizer was split into a forward and a backwards tokenizer. The

backwards tokenizer uses the same names as the forwards ones (e.g.

`next_token`). The backwards tokenizer gets the comment ranges that we

already built to skip comments.

---------

Co-authored-by: Micha Reiser <micha@reiser.io>

## Summary

We're planning to move the documentation from

[https://beta.ruff.rs/docs](https://beta.ruff.rs/docs) to

[https://docs.astral.sh/ruff](https://docs.astral.sh/ruff), for a few

reasons:

1. We want to remove the `beta` from the domain, as Ruff is no longer

considered beta software.

2. We want to migrate to a structure that could accommodate multiple

future tools living under one domain.

The docs are actually already live at

[https://docs.astral.sh/ruff](https://docs.astral.sh/ruff), but later

today, I'll add a permanent redirect from the previous to the new

domain. **All existing links will continue to work, now and in

perpetuity.**

This PR contains the code changes necessary for the updated

documentation. As part of this effort, I moved the playground and

documentation from my personal Cloudflare account to our team Cloudflare

account (hence the new `--project-name` references). After merging, I'll

also update the secrets on this repo.

## Summary

Given a trailing operator comment in a unary expression, like:

```python

if (

not # comment

a):

...

```

We were attaching these to the operand (`a`), but formatting them in the

unary operator via special handling. Parents shouldn't format the

comments of their children, so this instead attaches them as dangling

comments on the unary expression. (No intended change in formatting.)

<!--

Thank you for contributing to Ruff! To help us out with reviewing,

please consider the following:

- Does this pull request include a summary of the change? (See below.)

- Does this pull request include a descriptive title?

- Does this pull request include references to any relevant issues?

-->

## Summary

<!-- What's the purpose of the change? What does it do, and why? -->

Adds the maximum of 320 for the line-length setting to the JSON schema

for better integration with IDEs.

Related https://github.com/astral-sh/ruff/pull/6873

## Test Plan

<!-- How was it tested? -->

<!--

Thank you for contributing to Ruff! To help us out with reviewing,

please consider the following:

- Does this pull request include a summary of the change? (See below.)

- Does this pull request include a descriptive title?

- Does this pull request include references to any relevant issues?

-->

## Summary

The previous reference was “CWE-78: Improper Neutralization of Special

Elements used in an OS Command ('OS Command Injection')”, which

describes another issue. The new reference is “CWE-426: Untrusted Search

Path”, which describes exactly the problem that this rule should warn

about.

## Test Plan

The change was not tested, as it only changes two numbers in the

documentation.

## Summary

Adds `LOG009` from

[flake8-logging](https://github.com/adamchainz/flake8-logging). Also

adds the boilerplate for a new plugin

Checks for usages of undocumented `logging.WARN` constant and suggests

replacement with `logging.WARNING`.

## Test Plan

`cargo test` with fresh fixture

## Issue links

Refers: https://github.com/astral-sh/ruff/issues/7248

## Summary

Extends UP040 to support moving type variables with

bounds/constraints/variance that are used in type aliases to use PEP-695

syntax.

Part of #4617.

## Test Plan

The existing tests added by #6314 already cover the relevant cases.

Rules like D209 and D205 are only intended to apply to multi-line

docstrings. If a docstring is single-quoted, but extends via a

continuation, it should be excluded (it'll be flagged by another rule

anyway). Closes https://github.com/astral-sh/ruff/issues/7058.

## Summary

At some point, we removed these so that they wouldn't be autocompleted

for users, since we wanted to discourage usage of `ALL`. But given that

they're valid values, I think that was a bad idea -- it leads to an even

more confusing experience whereby JSON Schema validators tell you that

you have an error, when you don't.

Closes https://github.com/astral-sh/ruff/issues/7261.

The rule selector is not useful because `--select PREVIEW` only targets

Ruff developers and `--ignore PREVIEW` has no effect due to its low

specificity. We may restore it later if useful.

`ComparableExpr` includes the `ExprContext` field on an expression, so,

e.g., the two tuples in `(a, b) = (a, b)` won't be considered equal.

Similarly, the tuples in `[(a, b) for (a, b) in c]` _also_ wouldn't be

considered equal. I find this behavior surprising, since

`ComparableExpr` is intended to allow you to compare two ASTs, but

`ExprContext` is really encoding information about the broader context

for the expression.

Bumps [shlex](https://github.com/comex/rust-shlex) from 1.1.0 to 1.2.0.

<details>

<summary>Changelog</summary>

<p><em>Sourced from <a

href="https://github.com/comex/rust-shlex/blob/master/CHANGELOG.md">shlex's

changelog</a>.</em></p>

<blockquote>

<h1>1.2.0</h1>

<ul>

<li>Adds <code>bytes</code> module to support operating directly on byte

strings.</li>

</ul>

</blockquote>

</details>

<details>

<summary>Commits</summary>

<ul>

<li>See full diff in <a

href="https://github.com/comex/rust-shlex/commits">compare view</a></li>

</ul>

</details>

<br />

[](https://docs.github.com/en/github/managing-security-vulnerabilities/about-dependabot-security-updates#about-compatibility-scores)

Dependabot will resolve any conflicts with this PR as long as you don't

alter it yourself. You can also trigger a rebase manually by commenting

`@dependabot rebase`.

[//]: # (dependabot-automerge-start)

[//]: # (dependabot-automerge-end)

---

<details>

<summary>Dependabot commands and options</summary>

<br />

You can trigger Dependabot actions by commenting on this PR:

- `@dependabot rebase` will rebase this PR

- `@dependabot recreate` will recreate this PR, overwriting any edits

that have been made to it

- `@dependabot merge` will merge this PR after your CI passes on it

- `@dependabot squash and merge` will squash and merge this PR after

your CI passes on it

- `@dependabot cancel merge` will cancel a previously requested merge

and block automerging

- `@dependabot reopen` will reopen this PR if it is closed

- `@dependabot close` will close this PR and stop Dependabot recreating

it. You can achieve the same result by closing it manually

- `@dependabot show <dependency name> ignore conditions` will show all

of the ignore conditions of the specified dependency

- `@dependabot ignore this major version` will close this PR and stop

Dependabot creating any more for this major version (unless you reopen

the PR or upgrade to it yourself)

- `@dependabot ignore this minor version` will close this PR and stop

Dependabot creating any more for this minor version (unless you reopen

the PR or upgrade to it yourself)

- `@dependabot ignore this dependency` will close this PR and stop

Dependabot creating any more for this dependency (unless you reopen the

PR or upgrade to it yourself)

</details>

Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

Co-authored-by: Micha Reiser <micha@reiser.io>

## Summary

Given a statement like:

```python

result = (

f(111111111111111111111111111111111111111111111111111111111111111111111111111111111)

+ 1

)()

```

When we go to parenthesize the target of the assignment, we use

`maybe_parenthesize_expression` with `Parenthesize::IfBreaks`. This then

checks if the call on the right-hand side needs to be parenthesized, the

implementation of which looks like:

```rust

impl NeedsParentheses for ExprCall {

fn needs_parentheses(

&self,

_parent: AnyNodeRef,

context: &PyFormatContext,

) -> OptionalParentheses {

if CallChainLayout::from_expression(self.into(), context.source())

== CallChainLayout::Fluent

{

OptionalParentheses::Multiline

} else if context.comments().has_dangling(self) {

OptionalParentheses::Always

} else {

self.func.needs_parentheses(self.into(), context)

}

}

}

```

Checking for `self.func.needs_parentheses(self.into(), context)` is

problematic, since, as in the example above, `self.func` may _already_

be parenthesized -- in which case, we _don't_ want to parenthesize the

entire expression. If we do, we end up with this non-ideal formatting:

```python

result = (

(

f(

111111111111111111111111111111111111111111111111111111111111111111111111111111111

)

+ 1

)()

)

```

This PR modifies the `NeedsParentheses` implementations for call chain

expressions to return `Never` if the inner expression has its own

parentheses, in which case, the formatting implementations for those

expressions will preserve them anyway.

Closes https://github.com/astral-sh/ruff/issues/7370.

## Test Plan

Zulip improves a bit, everything else is unchanged.

Before:

| project | similarity index | total files | changed files |

|--------------|------------------:|------------------:|------------------:|

| cpython | 0.76083 | 1789 | 1632 |

| django | 0.99981 | 2760 | 40 |

| transformers | 0.99944 | 2587 | 413 |

| twine | 1.00000 | 33 | 0 |

| typeshed | 0.99983 | 3496 | 18 |

| warehouse | 0.99834 | 648 | 20 |

| zulip | 0.99956 | 1437 | 23 |

After:

| project | similarity index | total files | changed files |

|--------------|------------------:|------------------:|------------------:|

| cpython | 0.76083 | 1789 | 1632 |

| django | 0.99981 | 2760 | 40 |

| transformers | 0.99944 | 2587 | 413 |

| twine | 1.00000 | 33 | 0 |

| typeshed | 0.99983 | 3496 | 18 |

| warehouse | 0.99834 | 648 | 20 |

| **zulip** | **0.99962** | **1437** | **22** |

## Summary

When fixing `reversed(sorted(x, reverse=False))`, we rewrite as

`sorted(x, reverse=True)`. However, if the `reverse` argument isn't

`True` or `False`, we leave it as-is, which is incorrect.

Now, given `reversed(sorted(x, reverse=y))`, we rewrite as `sorted(x,

reverse=not y)`.

## Summary

Adds warnings for cases where:

- A selector does not include any rules because preview is disabled

- A nursery rule is selected without the preview flag

## Test plan

Add integration tests

Moves the new rule from nursery to preview for the upcoming release.

Adds new test coverage for selection of a single preview rule and fixes

a bug where preview rules were incorrectly selectable with exact codes.

## Summary

This PR bumps the pyproject-toml crate to 0.7.0. The only difference is that it now depends on indexmap 2. I reviewed the indexmap 2 changes and they don't seem relevant to us.

I used this opportunity to remove the default features from `serde_with` which removes our indexmap 1 dependency (and some other unused dependencies)

## Test Plan

`cargo test`

## Motivation

The `ast::Arguments` for call argument are split into positional

arguments (args) and keywords arguments (keywords). We currently assume

that call consists of first args and then keywords, which is generally

the case, but not always:

```python

f(*args, a=2, *args2, **kwargs)

class A(*args, a=2, *args2, **kwargs):

pass

```

The consequence is accidentally reordering arguments

(https://github.com/astral-sh/ruff/pull/7268).

## Summary

`Arguments::args_and_keywords` returns an iterator of an `ArgOrKeyword`

enum that yields args and keywords in the correct order. I've fixed the

obvious `args` and `keywords` usages, but there might be some cases with

wrong assumptions remaining.

## Test Plan

The generator got new test cases, otherwise the stacked PR

(https://github.com/astral-sh/ruff/pull/7268) which uncovered this.

## Summary

This PR updates the `FileCache` to include an optional `NotebookIndex`

to support caching for Jupyter Notebooks.

We only require the index to compute the diagnostics and thus we don't

really need to store the entire `Notebook` on the `Diagnostics` struct.

This means we only need the index to be stored in the cache to

reconstruct the `Diagnostics`.

## Test Plan

Update an existing test case to run over the fixtures under

`ruff_notebook` crate where there are multiple Jupyter Notebook.

Locally, the following commands were run in order:

1. Remove the cache: `rm -rf .ruff_cache`

2. Run without cache: `cargo run --bin ruff -- check --isolated

crates/ruff_notebook/resources/test/fixtures/jupyter/unused_variable.ipynb

--no-cache`

3. Run with cache: `cargo run --bin ruff -- check --isolated

crates/ruff_notebook/resources/test/fixtures/jupyter/unused_variable.ipynb`

4. Check whether the `.ruff_cache` directory was created or not

5. Run with cache again and verify: `cargo run --bin ruff -- check

--isolated

crates/ruff_notebook/resources/test/fixtures/jupyter/unused_variable.ipynb`

## Benchmarks

https://github.com/astral-sh/ruff/pull/6863#issuecomment-1715675186fixes: #6671

## Summary

Closes#6958.

If a method has the `override` decorator, there is nothing you can do

about incorrect dunder methods, so they should be ignored.

## Test Plan

Overridden incorrect dunder method was added to the tests to verify ruff

doesn't catch it when evaluating the file. Snapshot changes are all just

line number changes

## Summary

This PR updates the lexer test snapshots to include the range value as

well. This is mainly a mechanical refactor.

### Motivation

The main motivation is so that we can verify that the ranges are valid

and do not overlap.

## Test Plan

`cargo test`

## Summary

This PR updates the remaining lexer test cases to use the snapshots.

This is mainly a mechanical refactor.

## Motivation

The main motivation is so that when we add the token range values to the

test case output, it's easier to update the test cases.

The reason they were not using the snapshots before was because of the usage of

`test_case` macro. The macros is mainly used for different EOL test cases. If we

just generate the snapshots directly, then the snapshot name would be suffixed

with `-1`, `-2`, etc. as the test function is still the same. So, we'll create

the snapshot ourselves with the platform name for the respective EOL

test cases.

## Test Plan

`cargo test`

## Summary

<!-- What's the purpose of the change? What does it do, and why? -->

Extends work in #7046 (some relevant discussion there)

Changes:

- All nursery rules are now referred to as preview rules

- Documentation for the nursery is updated to describe preview

- Adds a "PREVIEW" selector for preview rules

- This is primarily to allow `--preview --ignore PREVIEW --extend-select

FOO001,BAR200`

- Using `--preview` enables preview rules that match selectors

Notable decisions:

- Preview rules are not selectable by their rule code without enabling

preview

- Retains the "NURSERY" selector for backwards compatibility

- Nursery rules are selectable by their rule code for backwards

compatiblity

Additional work:

- Selection of preview rules without the "--preview" flag should display

a warning

- Use of deprecated nursery selection behavior should display a warning

- Nursery selection should be removed after some time

## Test Plan

<!-- How was it tested? -->

Manual confirmation (i.e. we don't have an preview rules yet just

nursery rules so I added a preview rule for manual testing)

New unit tests

---------

Co-authored-by: Charlie Marsh <charlie.r.marsh@gmail.com>

## Summary

Another statement on the same line as the docstring would previous make

the D204 (newline after docstring) fix fail:

```python

class StatementOnSameLineAsDocstring:

"After this docstring there's another statement on the same line separated by a semicolon." ;priorities=1

def sort_services(self):

pass

```

The fix handles this case manually:

```python

class StatementOnSameLineAsDocstring:

"After this docstring there's another statement on the same line separated by a semicolon."

priorities=1

def sort_services(self):

pass

```

Fixes#7088

## Test Plan

Added a new `D` test case

## Summary

Fix all but one empty line differences with the black preview style in

typeshed. The remaining differences are breaking with type comments and

trailing commas in function definitions.

I compared the empty line differences with the preview mode of black

since stable has some oddities that would have been hard to replicate

(https://github.com/psf/black/issues/3861). Additionally, it assumes the

style proposed in https://github.com/psf/black/issues/3862.

An edge case that also surfaced with typeshed are newline before

trailing module comments.

**main**

| project | similarity index | total files | changed files |

|--------------|------------------:|------------------:|------------------:|

| cpython | 0.76083 | 1789 | 1632 |

| django | 0.99966 | 2760 | 58 |

| transformers | 0.99930 | 2587 | 447 |

| twine | 1.00000 | 33 | 0 |

| **typeshed** | 0.99978 | 3496 | **2173** |

| warehouse | 0.99825 | 648 | 22 |

| zulip | 0.99950 | 1437 | 27 |

**PR**

| project | similarity index | total files | changed files |

|--------------|------------------:|------------------:|------------------:|

| cpython | 0.76083 | 1789 | 1632 |

| django | 0.99966 | 2760 | 58 |

| transformers | 0.99930 | 2587 | 447 |

| twine | 1.00000 | 33 | 0 |

| **typeshed** | 0.99983 | 3496 | **18** |

| warehouse | 0.99825 | 648 | 22 |

| zulip | 0.99950 | 1437 | 27 |

Closes#6723

## Test Plan

The main driver was the typeshed diff. I added new test cases for all

kinds of possible empty line combinations in stub files, test cases for

newlines before trailing module comments.

---------

Co-authored-by: Micha Reiser <micha@reiser.io>

## Summary

This PR adds the `--preview` and `--no-preview` options to the `format` command (hidden) and passes it through to the formatte.

## Test Plan

I added the `dbg(f.options().preview())` statement in `FormatNodeRule::fmt` and verified that the option gets correctly passed to the formatter.

Show header for formatter comment decoration info

**Summary** Show a header in the formatter comment decoration debug

output that shows which node is preceding/following/enclosing

(https://github.com/astral-sh/ruff/pull/6813#issuecomment-1708119550). I

kept this intentionally condensed to make it easy to use this is a small

sidebar without vertical scrolling.

```console

$ cargo run --bin ruff_python_formatter -- --emit stdout --print-comments scratch.py

# Comment decoration: Range, Preceding, Following, Enclosing, Comment

17..20, Some((ParameterWithDefault, 6..10)), None, (Parameters, 5..22), "# a"

44..47, Some((StmtExpr, 28..39)), Some((StmtExpr, 52..60)), (StmtFunctionDef, 0..60), "# b"

77..80, None, None, (ExprList, 71..82), "# c"

{

Node {

kind: ParameterWithDefault,

range: 6..10,

source: `x=[]`,

}: {

...

```

**Test Plan** It's debug output.

**Summary** The comment visitor used to rebuild the locator for every

comment. Instead, we now keep the locator on the builder. Follow-up to

#6813.

**Test Plan** No formatting changes.

## Summary

Add a configuration option to extend the list of names that can be

accessed without triggering SLF001.

Fixes issue #7018

## Test Plan

Manually tested by creating a python file (`test.py`):

```python

def foo(obj):

obj._meta

```

and a `ruff.toml` file:

```toml

select = ["SLF"]

[flake8-self]

extend-ignore-names = ["_meta"]

```

Then running `cargo run -p ruff_cli -- check test.py --no-cache` (once

with the `extend-ignore-names` line comment out) to see if the

configuration option works.

---------

Co-authored-by: Charlie Marsh <charlie.r.marsh@gmail.com>