## Summary

This PR fixes a bug introduced in

https://github.com/astral-sh/ruff/pull/12008 which didn't consider the

two character newline after the line continuation character.

For example, consider the following code highlighted with whitespaces:

```py

call(foo # comment \\r\n

\r\n

def bar():\r\n

....pass\r\n

```

The lexer is at `def` when it's running the re-lexing logic and trying

to move back to a newline character. It encounters `\n` and it's being

escaped (incorrect) but `\r` is being escaped, so it moves the lexer to

`\n` character. This creates an overlap in token ranges which causes the

panic.

```

Name 0..4

Lpar 4..5

Name 5..8

Comment 9..20

NonLogicalNewline 20..22 <-- overlap between

Newline 21..22 <-- these two tokens

NonLogicalNewline 22..23

Def 23..26

...

```

fixes: #12028

## Test Plan

Add a test case with line continuation and windows style newline

character.

## Summary

(I'm pretty sure I added this in the parser re-write but must've got

lost in the rebase?)

This PR raises a syntax error if the type parameter list is empty.

As per the grammar, there should be at least one type parameter:

```

type_params:

| invalid_type_params

| '[' type_param_seq ']'

type_param_seq: ','.type_param+ [',']

```

Verified via the builtin `ast` module as well:

```console

$ python3.13 -m ast parser/_.py

Traceback (most recent call last):

[..]

File "parser/_.py", line 1

def foo[]():

^

SyntaxError: Type parameter list cannot be empty

```

## Test Plan

Add inline test cases and update the snapshots.

## Summary

Right now, it's inconsistent... We sometimes match against the name, and

sometimes against the alias (`asname`). I could see a case for always

matching against the name, but matching against both seems fine too,

since the rule is really about the combination of the two?

Closes https://github.com/astral-sh/ruff/issues/12031.

## Summary

This PR fixes a bug where Ruff would raise `E203` for f-string debug

expression. This isn't valid because whitespaces are important for debug

expressions.

fixes: #12023

## Test Plan

Add test case and make sure there are no snapshot changes.

## Summary

This PR updates the parser test infrastructure to validate the token

ranges.

From the code documentation:

```

/// Verifies that:

/// * the ranges are strictly increasing when loop the tokens in insertion order

/// * all ranges are within the length of the source code

```

Follow-up from #12016 and #12017resolves: #11938

## Test Plan

Make sure that there are no failures.

## Summary

This PR updates the unterminated string error range to not include the

final newline character.

This is a follow-up to #12016 and required for #12019

This is not done for when the unterminated string goes till the end of

file (not a newline character). The unterminated f-string range is

correct.

### Why is this required for #12019 ?

Because otherwise the token ranges will overlap. For example:

```py

f"{"

f"{foo!r"

```

Here, the re-lexing logic recovers from an unterminated f-string and

thus emitting a `Newline` token for the one at the end of the first

line. But, currently the `Unknown` and the `Newline` token would overlap

because the `Unknown` token (unterminated string literal) range would

include the newline character.

## Test Plan

Update and validate the snapshot.

## Summary

This PR fixes the range highlighted for the line continuation error.

Previously, it would highlight an incorrect range:

```

1 | call(a, b, \\\

| ^^ Syntax Error: unexpected character after line continuation character

2 |

3 | def bar():

|

```

And now:

```

|

1 | call(a, b, \\\

| ^ Syntax Error: unexpected character after line continuation character

2 |

3 | def bar():

|

```

This is implemented by avoiding to update the token range for the

`Unknown` token which is emitted when there's a lexical error. Instead,

the `push_error` helper method will be responsible to update the range

to the error location.

This actually becomes a requirement which can be seen in follow-up PRs.

## Test Plan

Update and validate the snapshot.

## Summary

This PR fixes a bug where the re-lexing logic didn't consider the line

continuation character being present before the newline character. This

meant that the lexer was being moved back to the newline character which

is actually ignored via `\`.

Considering the following code:

```py

f'middle {'string':\

'format spec'}

```

The old token stream is:

```

...

Colon 18..19

FStringMiddle 19..29 (flags = F_STRING)

Newline 20..21

Indent 21..29

String 29..42

Rbrace 42..43

...

```

Notice how the ranges are overlapping between the `FStringMiddle` token

and the tokens emitted after moving the lexer backwards.

After this fix, the new token stream which is without moving the lexer

backwards in this scenario:

```

FStringStart 0..2 (flags = F_STRING)

FStringMiddle 2..9 (flags = F_STRING)

Lbrace 9..10

String 10..18

Colon 18..19

FStringMiddle 19..29 (flags = F_STRING)

FStringEnd 29..30 (flags = F_STRING)

Name 30..36

Name 37..41

Unknown 41..44

Newline 44..45

```

fixes: #12004

## Test Plan

Add test cases and update the snapshots.

## Summary

This PR updates `F811` rule to include assignment as possible shadowed

binding. This will fix issue: #11828 .

## Test Plan

Add a test file, F811_30.py, which includes a redefinition after an

assignment and a verified snapshot file.

## Summary

Addresses #11974 to add a `RUF` rule to replace `print` expressions in

`assert` statements with the inner message.

An autofix is available, but is considered unsafe as it changes

behaviour of the execution, notably:

- removal of the printout in `stdout`, and

- `AssertionError` instance containing a different message.

While the detection of the condition is a straightforward matter,

deciding how to resolve the print arguments into a string literal can be

a relatively subjective matter. The implementation of this PR chooses to

be as tolerant as possible, and will attempt to reformat any number of

`print` arguments containing single or concatenated strings or variables

into either a string literal, or a f-string if any variables or

placeholders are detected.

## Test Plan

`cargo test`.

## Examples

For ease of discussion, this is the diff for the tests:

```diff

# Standard Case

# Expects:

# - single StringLiteral

-assert True, print("This print is not intentional.")

+assert True, "This print is not intentional."

# Concatenated string literals

# Expects:

# - single StringLiteral

-assert True, print("This print" " is not intentional.")

+assert True, "This print is not intentional."

# Positional arguments, string literals

# Expects:

# - single StringLiteral concatenated with " "

-assert True, print("This print", "is not intentional")

+assert True, "This print is not intentional"

# Concatenated string literals combined with Positional arguments

# Expects:

# - single stringliteral concatenated with " " only between `print` and `is`

-assert True, print("This " "print", "is not intentional.")

+assert True, "This print is not intentional."

# Positional arguments, string literals with a variable

# Expects:

# - single FString concatenated with " "

-assert True, print("This", print.__name__, "is not intentional.")

+assert True, f"This {print.__name__} is not intentional."

# Mixed brackets string literals

# Expects:

# - single StringLiteral concatenated with " "

-assert True, print("This print", 'is not intentional', """and should be removed""")

+assert True, "This print is not intentional and should be removed"

# Mixed brackets with other brackets inside

# Expects:

# - single StringLiteral concatenated with " " and escaped brackets

-assert True, print("This print", 'is not "intentional"', """and "should" be 'removed'""")

+assert True, "This print is not \"intentional\" and \"should\" be 'removed'"

# Positional arguments, string literals with a separator

# Expects:

# - single StringLiteral concatenated with "|"

-assert True, print("This print", "is not intentional", sep="|")

+assert True, "This print|is not intentional"

# Positional arguments, string literals with None as separator

# Expects:

# - single StringLiteral concatenated with " "

-assert True, print("This print", "is not intentional", sep=None)

+assert True, "This print is not intentional"

# Positional arguments, string literals with variable as separator, needs f-string

# Expects:

# - single FString concatenated with "{U00A0}"

-assert True, print("This print", "is not intentional", sep=U00A0)

+assert True, f"This print{U00A0}is not intentional"

# Unnecessary f-string

# Expects:

# - single StringLiteral

-assert True, print(f"This f-string is just a literal.")

+assert True, "This f-string is just a literal."

# Positional arguments, string literals and f-strings

# Expects:

# - single FString concatenated with " "

-assert True, print("This print", f"is not {'intentional':s}")

+assert True, f"This print is not {'intentional':s}"

# Positional arguments, string literals and f-strings with a separator

# Expects:

# - single FString concatenated with "|"

-assert True, print("This print", f"is not {'intentional':s}", sep="|")

+assert True, f"This print|is not {'intentional':s}"

# A single f-string

# Expects:

# - single FString

-assert True, print(f"This print is not {'intentional':s}")

+assert True, f"This print is not {'intentional':s}"

# A single f-string with a redundant separator

# Expects:

# - single FString

-assert True, print(f"This print is not {'intentional':s}", sep="|")

+assert True, f"This print is not {'intentional':s}"

# Complex f-string with variable as separator

# Expects:

# - single FString concatenated with "{U00A0}", all placeholders preserved

condition = "True is True"

maintainer = "John Doe"

-assert True, print("Unreachable due to", condition, f", ask {maintainer} for advice", sep=U00A0)

+assert True, f"Unreachable due to{U00A0}{condition}{U00A0}, ask {maintainer} for advice"

# Empty print

# Expects:

# - `msg` entirely removed from assertion

-assert True, print()

+assert True

# Empty print with separator

# Expects:

# - `msg` entirely removed from assertion

-assert True, print(sep=" ")

+assert True

# Custom print function that actually returns a string

# Expects:

@@ -100,4 +100,4 @@

# Use of `builtins.print`

# Expects:

# - single StringLiteral

-assert True, builtins.print("This print should be removed.")

+assert True, "This print should be removed."

```

## Known Issues

The current implementation resolves all arguments and separators of the

`print` expression into a single string, be it

`StringLiteralValue::single` or a `FStringValue::single`. This:

- potentially joins together strings well beyond the ideal character

limit for each line, and

- does not preserve multi-line strings in their original format, in

favour of a single line `"...\n...\n..."` format.

These are purely formatting issues only occurring in unusual scenarios.

Additionally, the autofix will tolerate `print` calls that were

previously invalid:

```python

assert True, print("this", "should not be allowed", sep=42)

```

This will be transformed into

```python

assert True, f"this{42}should not be allowed"

```

which some could argue is an alteration of behaviour.

---------

Co-authored-by: Charlie Marsh <charlie.r.marsh@gmail.com>

<!--

Thank you for contributing to Ruff! To help us out with reviewing,

please consider the following:

- Does this pull request include a summary of the change? (See below.)

- Does this pull request include a descriptive title?

- Does this pull request include references to any relevant issues?

-->

## Summary

Documentation mentions:

> PEP 563 enabled the use of a number of convenient type annotations,

such as `list[str]` instead of `List[str]`

but it meant [PEP 585](https://peps.python.org/pep-0585/) instead.

[PEP 563](https://peps.python.org/pep-0563/) is the one defining `from

__future__ import annotations`.

## Test Plan

No automated test required, just verify that

https://peps.python.org/pep-0585/ is the correct reference.

## Summary

I look at the token stream a lot, not specifically in the playground but

in the terminal output and it's annoying to scroll a lot to find

specific location. Most of the information is also redundant.

The final format we end up with is: `<kind> <range> (flags = ...)` e.g.,

`String 0..4 (flags = BYTE_STRING)` where the flags part is only

populated if there are any flags set.

## Summary

Fixes#11651.

Fixes#11851.

We were double-closing a notebook document from the index, once in

`textDocument/didClose` and then in the `notebookDocument/didClose`

handler. The second time this happens, taking a snapshot fails.

I've rewritten how we handle snapshots for closing notebooks / notebook

cells so that any failure is simply logged instead of propagating

upwards. This implementation works consistently even if we don't receive

`textDocument/didClose` notifications for each specific cell, since they

get closed (and the diagnostics get cleared) in the notebook document

removal process.

## Test Plan

1. Open an untitled, unsaved notebook with the `Create: New Jupyter

Notebook` command from the VS Code command palette (`Ctrl/Cmd + Shift +

P`)

2. Without saving the document, close it.

3. No error popup should appear.

4. Run the debug command (`Ruff: print debug information`) to confirm

that there are no open documents

## Summary

This PR does some housekeeping into moving certain structs into related

modules. Specifically,

1. Move `LexicalError` from `lexer.rs` to `error.rs` which also contains

the `ParseError`

2. Move `Token`, `TokenFlags` and `TokenValue` from `lexer.rs` to

`token.rs`

## Summary

This PR removes the duplication around `is_trivia` functions.

There are two of them in the codebase:

1. In `pycodestyle`, it's for newline, indent, dedent, non-logical

newline and comment

2. In the parser, it's for non-logical newline and comment

The `TokenKind::is_trivia` method used (1) but that's not correct in

that context. So, this PR introduces a new `is_non_logical_token` helper

method for the `pycodestyle` crate and updates the

`TokenKind::is_trivia` implementation with (2).

This also means we can remove `Token::is_trivia` method and the

standalone `token_source::is_trivia` function and use the one on

`TokenKind`.

## Test Plan

`cargo insta test`

## Summary

Closes#11914.

This PR introduces a snapshot test that replays the LSP requests made

during a document formatting request, and confirms that the notebook

document is updated in the expected way.

## Summary

Fixes#11911.

`shellexpand` is now used on `logFile` to expand the file path, allowing

the usage of `~` and environment variables.

## Test Plan

1. Set `logFile` in either Neovim or Helix to a file path that needs

expansion, like `~/.config/helix/ruff_logs.txt`.

2. Ensure that `RUFF_TRACE` is set to `messages` or `verbose`

3. Open a Python file in Neovim/Helix

4. Confirm that a file at the path specified was created, with the

expected logs.

## Summary

This PR updates the logical line rules entry-point function to only run

the logic if any of the rules within that group is enabled.

Although this shouldn't really give any performance improvements, it's

better not to do additional work if we can. This is also consistent with

how other rules are run.

## Test Plan

`cargo insta test`

## Summary

This PR avoids moving back the lexer for a triple-quoted f-string during

the re-lexing phase.

The reason this is a problem is that for a triple-quoted f-string the

newlines are part of the f-string itself, specifically they'll be part

of the `FStringMiddle` token. So, if we moved the lexer back, there

would be a `Newline` token whose range would be in between an

`FStringMiddle` token. This creates a panic in downstream usage.

fixes: #11937

## Test Plan

Add test cases and validate the snapshots.

## Summary

This PR avoids the `depth` counter when detecting indentation from

non-logical lines because it seems to never be used. It might have been

a leftover when the logic was added originally in #11608.

## Test Plan

`cargo insta test`

## Summary

This PR updates the linter to show all the parse errors as diagnostics

instead of just the first one.

Note that this doesn't affect the parse error displayed as error log

message. This will be removed in a follow-up PR.

### Breaking?

I don't think this is a breaking change even though this might give more

diagnostics. The main reason is that this shouldn't affect any users

because it'll only give additional diagnostics in the case of multiple

syntax errors.

## Test Plan

Add an integration test case which would raise more than one parse

error.

## Summary

This PR updates the re-lexing logic to avoid consuming the trailing

whitespace and move the lexer explicitly to the last newline character

encountered while moving backwards.

Consider the following code snippet as taken from the test case

highlighted with whitespace (`.`) and newline (`\n`) characters:

```py

# There are trailing whitespace before the newline character but those whitespaces are

# part of the comment token

f"""hello {x # comment....\n

# ^

y = 1\n

```

The parser is at `y` when it's trying to recover from an unclosed `{`,

so it calls into the re-lexing logic which tries to move the lexer back

to the end of the previous line. But, as it consumed all whitespaces it

moved the lexer to the location marked by `^` in the above code snippet.

But, those whitespaces are part of the comment token. This means that

the range for the two tokens were overlapping which introduced the

panic.

Note that this is only a bug when there's a comment with a trailing

whitespace otherwise it's fine to move the lexer to the whitespace

character. This is because the lexer would just skip the whitespace

otherwise. Nevertheless, this PR updates the logic to move it explicitly

to the newline character in all cases.

fixes: #11929

## Test Plan

Add test cases and update the snapshot. Make sure that it doesn't panic

on the code snippet in the linked issue.

<!--

Thank you for contributing to Ruff! To help us out with reviewing,

please consider the following:

- Does this pull request include a summary of the change? (See below.)

- Does this pull request include a descriptive title?

- Does this pull request include references to any relevant issues?

-->

## Summary

related to https://github.com/astral-sh/ruff/issues/5306

The check right now only checks in the first 1024 bytes, and that's

really not enough when there's a docstring at the beginning of a file.

A more proper fix might be needed, which might be more complex (and I

don't have the `rust` skills to implement that). But this temporary

"fix" might enable more users to use this.

Context: We want to use this rule in

https://github.com/scikit-learn/scikit-learn/ and we got blocked because

of this hardcoded rule (which TBH took us quite a while to figure out

why it was failing since it's not documented).

## Test Plan

This is already kinda tested, modified the test for the new byte number.

<!-- How was it tested? -->

## Summary

This PR removes most of the syntax errors from the test cases. This

would create noise when https://github.com/astral-sh/ruff/pull/11901 is

complete. These syntax errors are also just noise for the test itself.

## Test Plan

Update the snapshots and verify that they're still the same.

## Summary

This PR is a follow-up on #11845 to add the re-lexing logic for normal

list parsing.

A normal list parsing is basically parsing elements without any

separator in between i.e., there can only be trivia tokens in between

the two elements. Currently, this is only being used for parsing

**assignment statement** and **f-string elements**. Assignment

statements cannot be in a parenthesized context, but f-string can have

curly braces so this PR is specifically for them.

I don't think this is an ideal recovery but the problem is that both

lexer and parser could add an error for f-strings. If the lexer adds an

error it'll emit an `Unknown` token instead while the parser adds the

error directly. I think we'd need to move all f-string errors to be

emitted by the parser instead. This way the parser can correctly inform

the lexer that it's out of an f-string and then the lexer can pop the

current f-string context out of the stack.

## Test Plan

Add test cases, update the snapshots, and run the fuzzer.

## Summary

Fixes https://github.com/astral-sh/ruff-vscode/issues/496.

Cells are no longer removed from the notebook index when a notebook gets

updated, but rather when `textDocument/didClose` is called for them.

This solves an issue where their premature removal from the notebook

cell index would cause their URL to be un-queryable in the

`textDocument/didClose` handler.

## Test Plan

Create and then delete a notebook cell in VS Code. No error should

appear.

## Summary

This PR implements the re-lexing logic in the parser.

This logic is only applied when recovering from an error during list

parsing. The logic is as follows:

1. During list parsing, if an unexpected token is encountered and it

detects that an outer context can understand it and thus recover from

it, it invokes the re-lexing logic in the lexer

2. This logic first checks if the lexer is in a parenthesized context

and returns if it's not. Thus, the logic is a no-op if the lexer isn't

in a parenthesized context

3. It then reduces the nesting level by 1. It shouldn't reset it to 0

because otherwise the recovery from nested list parsing will be

incorrect

4. Then, it tries to find last newline character going backwards from

the current position of the lexer. This avoids any whitespaces but if it

encounters any character other than newline or whitespace, it aborts.

5. Now, if there's a newline character, then it needs to be re-lexed in

a logical context which means that the lexer needs to emit it as a

`Newline` token instead of `NonLogicalNewline`.

6. If the re-lexing gives a different token than the current one, the

token source needs to update it's token collection to remove all the

tokens which comes after the new current position.

It turns out that the list parsing isn't that happy with the results so

it requires some re-arranging such that the following two errors are

raised correctly:

1. Expected comma

2. Recovery context error

For (1), the following scenarios needs to be considered:

* Missing comma between two elements

* Half parsed element because the grammar doesn't allow it (for example,

named expressions)

For (2), the following scenarios needs to be considered:

1. If the parser is at a comma which means that there's a missing

element otherwise the comma would've been consumed by the first `eat`

call above. And, the parser doesn't take the re-lexing route on a comma

token.

2. If it's the first element and the current token is not a comma which

means that it's an invalid element.

resolves: #11640

## Test Plan

- [x] Update existing test snapshots and validate them

- [x] Add additional test cases specific to the re-lexing logic and

validate the snapshots

- [x] Run the fuzzer on 3000+ valid inputs

- [x] Run the fuzzer on invalid inputs

- [x] Run the parser on various open source projects

- [x] Make sure the ecosystem changes are none

## Summary

This PR updates the server capabilities to include the commands that

Ruff supports. This is similar to how there's a list of possible code

actions supported by the server.

I noticed this when I was trying to find whether Helix supported

workspace commands or not based on Jane's comment

(https://github.com/astral-sh/ruff/pull/11831#discussion_r1634984921)

and I found the `:lsp-workspace-command` in the editor but it didn't

show up anything in the picker.

So, I looked at the implementation in Helix

(9c479e6d2d/helix-term/src/commands/typed.rs (L1372-L1384))

which made me realize that Ruff doesn't provide this in its

capabilities. Currently, this does require `ruff` to be first in the

list of language servers in the user config but that should be resolved

by https://github.com/helix-editor/helix/pull/10176. So, the following

config should work:

```toml

[[language]]

name = "python"

# Ruff should come first until https://github.com/helix-editor/helix/pull/10176 is released

language-servers = ["ruff", "pyright"]

```

## Test Plan

1. Neovim's server capabilities output should include the supported

commands:

```

executeCommandProvider = {

commands = { "ruff.applyFormat", "ruff.applyAutofix", "ruff.applyOrganizeImports", "ruff.printDebugInformation" },

workDoneProgress = false

},

```

2. Helix should now display the commands to pick from when

`:lsp-workspace-command` is invoked:

<img width="832" alt="Screenshot 2024-06-13 at 08 47 14"

src="https://github.com/astral-sh/ruff/assets/67177269/09048ecd-c974-4e09-ab56-9482ff3d780b">

## Summary

This PR adds a new enum to determine the kind of terminator token i.e.,

is it actually terminates the list or is it used for error recovery.

This is important because the parser should take the error recovery

route in case the terminator token is used for better error recovery.

This will then try to re-lex the token if it's the case.

I haven't updated any reference to use this new enum as otherwise it'll

update the snapshots. I plan to do that in a follow-up PR so that it's

easier to reason about.

## Test plan

`cargo insta test`

## Summary

This PR separates the terminator token for f-string elements depending

on the context. A list of f-string element can occur either in a regular

f-string or a format spec of an f-string. The terminator token is

different depending on that context.

## Test Plan

`cargo insta test` and verify the updated snapshots.

## Summary

This PR re-uses the `ruff_python_trivia::is_python_whitespace` in the

lexer instead of defining its own. This was mainly to avoid circular

dependency which was resolved in #11261.

## Summary

Add Constraint nodes to flow graph, and narrow types based on that (only

`is None` and `is not None` narrowing supported for now, to prototype

the structure.)

Also add simplification of zero- and one-element unions and

intersections, and flattening of intersections.

There's a lot more normalization logic needed for unions and

intersections (as is obvious from the inferred type in the added

`narrow_none` test), but this will be non-trivial and I'd rather do it

in a separate PR.

Here's a flowchart diagram for the code in the added `narrow_none` test:

The top branch is for the `if` expression in the initial assignment to

`x`; that `Constraint` node would only affect the type of `flag`, which

we don't care about in this test.

The second branch is for the `if` statement, with `Constraint` node

affecting the type of `x`.

## Test Plan

Added tests.

## Summary

Fixes#11744.

We now show a distinct popup message when we fail to get a document

snapshot during command execution. This message more clearly

communicates the issue to the user, instead of a generic "ruff

encountered an error" message.

## Test Plan

Try running `Fix all auto-fixable problems` on an incompatible file (for

example: `settings.json`). You should see the following popup message:

<img width="456" alt="Screenshot 2024-06-11 at 11 47 16 AM"

src="https://github.com/astral-sh/ruff/assets/19577865/3a28e3d7-3896-4dd0-b117-f87300dd3b68">

## Summary

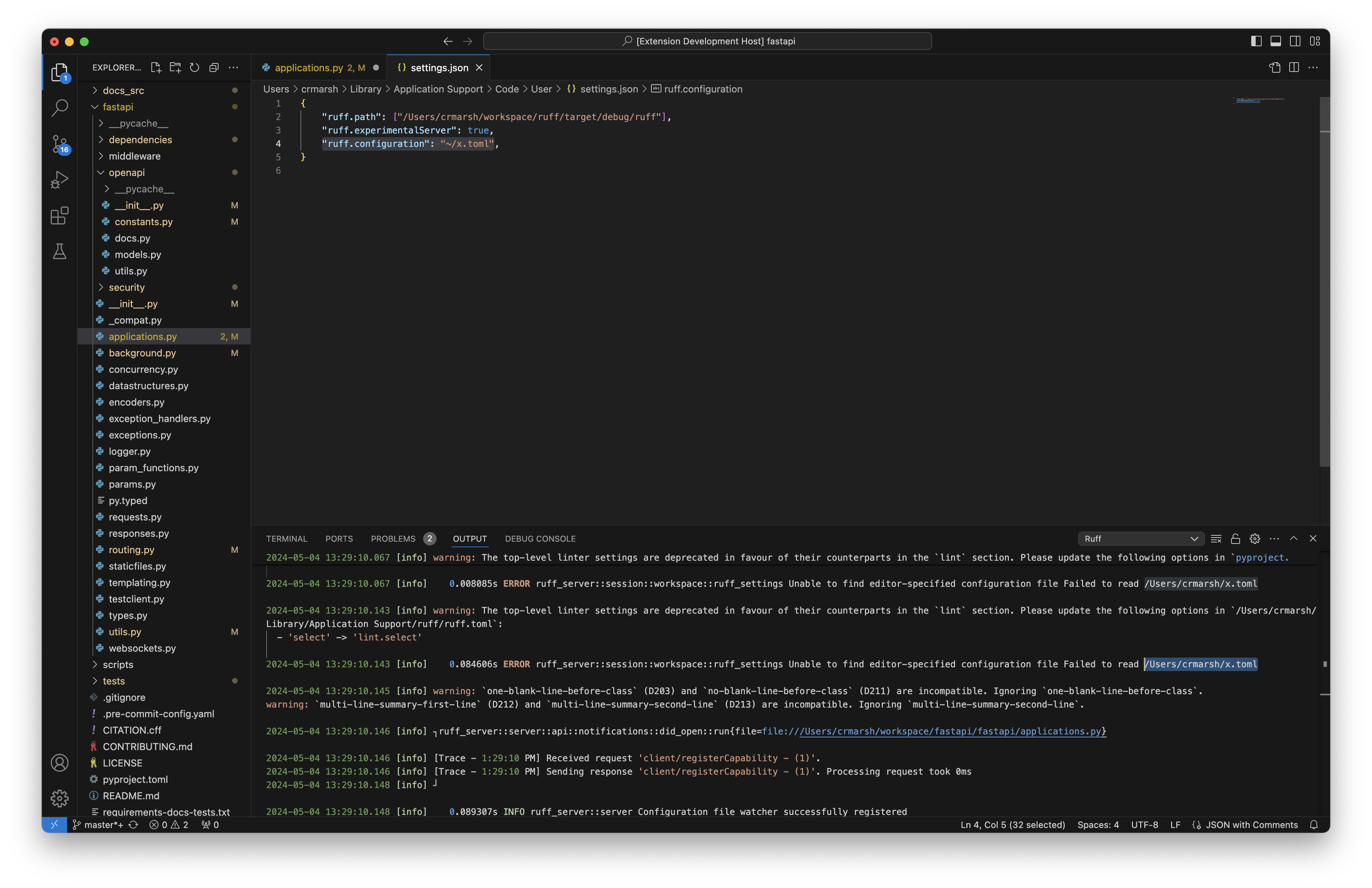

Closes#11715.

Introduces a new command, `ruff.printDebugInformation`. This will print

useful information about the status of the server to `stderr`.

Right now, the information shown by this command includes:

* The path to the server executable

* The version of the executable

* The text encoding being used

* The number of open documents and workspaces

* A list of registered configuration files

* The capabilities of the client

## Test Plan

First, checkout and use [the corresponding `ruff-vscode`

PR](https://github.com/astral-sh/ruff-vscode/pull/495).

Running the `Print debug information` command in VS Code should show

something like the following in the Output channel:

<img width="991" alt="Screenshot 2024-06-11 at 11 41 46 AM"

src="https://github.com/astral-sh/ruff/assets/19577865/ab93c009-bb7b-4291-b057-d44fdc6f9f86">

## Summary

Fixes#10968.

Fixes#11545.

The server's tracing system has been rewritten from the ground up. The

server now has trace level and log level settings which restrict the

tracing events and spans that get logged.

* A `logLevel` setting has been added, which lets a user set the log

level. By default, it is set to `"info"`.

* A `logFile` setting has also been added, which lets the user supply an

optional file to send tracing output (it does not have to exist as a

file yet). By default, if this is unset, tracing output will be sent to

`stderr`.

* A `$/setTrace` handler has also been added, and we also set the trace

level from the initialization options. For editors without direct

support for tracing, the environment variable `RUFF_TRACE` can override

the trace level.

* Small changes have been made to how we display tracing output. We no

longer use `tracing-tree`, and instead use

`tracing_subscriber::fmt::Layer` to format output. Thread names are now

included in traces, and I've made some adjustment to thread worker names

to be more useful.

## Test Plan

In VS Code, with `ruff.trace.server` set to its default value, no logs

from Ruff should appear.

After changing `ruff.trace.server` to either `messages` or `verbose`,

you should see log messages at `info` level or higher appear in Ruff's

output:

<img width="1005" alt="Screenshot 2024-06-10 at 10 35 04 AM"

src="https://github.com/astral-sh/ruff/assets/19577865/6050d107-9815-4bd2-96d0-e86f096a57f5">

In Helix, by default, no logs from Ruff should appear.

To set the trace level in Helix, you'll need to modify your language

configuration as follows:

```toml

[language-server.ruff]

command = "/Users/jane/astral/ruff/target/debug/ruff"

args = ["server", "--preview"]

environment = { "RUFF_TRACE" = "messages" }

```

After doing this, logs of `info` level or higher should be visible in

Helix:

<img width="1216" alt="Screenshot 2024-06-10 at 10 39 26 AM"

src="https://github.com/astral-sh/ruff/assets/19577865/8ff88692-d3f7-4fd1-941e-86fb338fcdcc">

You can use `:log-open` to quickly open the Helix log file.

In Neovim, by default, no logs from Ruff should appear.

To set the trace level in Neovim, you'll need to modify your

configuration as follows:

```lua

require('lspconfig').ruff.setup {

cmd = {"/path/to/debug/executable", "server", "--preview"},

cmd_env = { RUFF_TRACE = "messages" }

}

```

You should see logs appear in `:LspLog` that look like the following:

<img width="1490" alt="Screenshot 2024-06-11 at 11 24 01 AM"

src="https://github.com/astral-sh/ruff/assets/19577865/576cd5fa-03cf-477a-b879-b29a9a1200ff">

You can adjust `logLevel` and `logFile` in `settings`:

```lua

require('lspconfig').ruff.setup {

cmd = {"/path/to/debug/executable", "server", "--preview"},

cmd_env = { RUFF_TRACE = "messages" },

settings = {

logLevel = "debug",

logFile = "your/log/file/path/log.txt"

}

}

```

The `logLevel` and `logFile` can also be set in Helix like so:

```toml

[language-server.ruff.config.settings]

logLevel = "debug"

logFile = "your/log/file/path/log.txt"

```

Even if this log file does not exist, it should now be created and

written to after running the server:

<img width="1148" alt="Screenshot 2024-06-10 at 10 43 44 AM"

src="https://github.com/astral-sh/ruff/assets/19577865/ab533cf7-d5ac-4178-97f1-e56da17450dd">

## Summary

This PR updates the parser to remove building the `CommentRanges` and

instead it'll be built by the linter and the formatter when it's

required.

For the linter, it'll be built and owned by the `Indexer` while for the

formatter it'll be built from the `Tokens` struct and passed as an

argument.

## Test Plan

`cargo insta test`

## Summary

The fix for E203 now produces the same result as ruff format in cases

where a slice ends on a colon and the closing square bracket is on the

following line.

Refers to https://github.com/astral-sh/ruff/issues/10973

## Test Plan

The minimal reproduction case in the ticket was added as test case

producing no error. Additional cases with multiple spaces or a tab

before the colon where added to make sure that the rule still finds

these.

## Summary

As-is, we're using the URL path for all files, leading us to use paths

like:

```

/c%3A/Users/crmar/workspace/fastapi/tests/main.py

```

This doesn't match against per-file ignores and other patterns in Ruff

configuration.

This PR modifies the LSP to use the real file path if available, and the

virtual file path if not.

Closes https://github.com/astral-sh/ruff/issues/11751.

## Test Plan

Ran the LSP on Windows. In the FastAPI repo, added:

```toml

[tool.ruff.lint.per-file-ignores]

"tests/**/*.py" = ["F401"]

```

And verified that an unused import was ignored in `tests` after this

change, but not before.

## Summary

This PR removes the `result-like` dependency and instead implement the

required functionality. The motivation being that `noqa.is_enabled()` is

easier to read than `noqa.into()`.

For context, I was just trying to understand the syntax error workflow

and I saw these flags which were being converted via `into`. I always

find `into` confusing because you never know what's it being converted

into unless you know the type. Later realized that it's just a boolean

flag. After removing the usages from these two flags, it turns out that

the dependency is only being used in one rule so I thought to remove

that as well.

## Test Plan

`cargo insta test`

## Summary

<!-- What's the purpose of the change? What does it do, and why? -->

This PR implements the [consider dict

items](https://pylint.pycqa.org/en/latest/user_guide/messages/convention/consider-using-dict-items.html)

rule from Pylint. Enabling this rule flags:

```python

ORCHESTRA = {

"violin": "strings",

"oboe": "woodwind",

"tuba": "brass",

"gong": "percussion",

}

for instrument in ORCHESTRA:

print(f"{instrument}: {ORCHESTRA[instrument]}")

for instrument in ORCHESTRA.keys():

print(f"{instrument}: {ORCHESTRA[instrument]}")

for instrument in (inline_dict := {"foo": "bar"}):

print(f"{instrument}: {inline_dict[instrument]}")

```

For not using `items()` to extract the value out of the dict. We ignore

the case of an assignment, as you can't modify the underlying

representation with the value in the list of tuples returned.

## Test Plan

<!-- How was it tested? -->

`cargo test`.

---------

Co-authored-by: Charlie Marsh <charlie.r.marsh@gmail.com>

## Summary

Definitions are used in symbol table and in flow graph, and aren't

inherently owned by one or the other; move them into their own

submodule.

## Test Plan

Existing tests.

## Summary

Add support for inferring int literal types from basic arithmetic on int

literals. Just to begin showing examples of resolving more complex

expression types, and because this will be useful in testing walrus

expressions.

## Test Plan

Added test.

## Summary

After looking at this a bit, I think it does make sense to have

`Unbound` as part of the `Definition` enum; if we are modeling `Unbound`

as a type (which currently we are), then every symbol implicitly starts

each scope with a "definition" as unbound, and the cleanest way to model

that is as a real `Definition`. We should be able to handle a definition

of "unbound" anywhere we handle definitions.

But the name `None` wasn't clear enough; changing the name to `Unbound`

and adding a doc comment.

Also change `[first].into_iter()` to `std::iter::once(first)`, from

post-land code review on a prior PR.

## Test Plan

Existing tests.

## Summary

This PR is a follow-up to #11740 to restrict access to the `Parsed`

output by replacing the `parsed` API function with a more specific one.

Currently, that is `comment_ranges` but the linked PR exposes a `tokens`

method.

The main motivation is so that there's no way to get an incorrect

information from the checker. And, it also encapsulates the source of

the comment ranges and the tokens itself. This way it would become

easier to just update the checker if the source for these information

changes in the future.

## Test Plan

`cargo insta test`

## Summary

This PR fixes a bug where the checker would require the tokens for an

invalid offset w.r.t. the source code.

Taking the source code from the linked issue as an example:

```py

relese_version :"0.0is 64"

```

Now, this isn't really a valid type annotation but that's what this PR

is fixing. Regardless of whether it's valid or not, Ruff shouldn't

panic.

The checker would visit the parsed type annotation (`0.0is 64`) and try

to detect any violations. Certain rule logic requests the tokens for the

same but it would fail because the lexer would only have the `String`

token considering original source code. This worked before because the

lexer was invoked again for each rule logic.

The solution is to store the parsed type annotation on the checker if

it's in a typing context and use the tokens from that instead if it's

available. This is enforced by creating a new API on the checker to get

the tokens.

But, this means that there are two ways to get the tokens via the

checker API. I want to restrict this in a follow-up PR (#11741) to only

expose `tokens` and `comment_ranges` as methods and restrict access to

the parsed source code.

fixes: #11736

## Test Plan

- [x] Add a test case for `F632` rule and update the snapshot

- [x] Check all affected rules

- [x] No ecosystem changes

## Summary

This PR updates the return type of `parse_type_annotation` from `Expr`

to `Parsed<ModExpression>`. This is to allow accessing the tokens for

the parsed sub-expression in the follow-up PR.

## Test Plan

`cargo insta test`

## Summary

Fixes https://github.com/astral-sh/ruff-vscode/issues/482.

I've made adjustments to `format` and `format_range` that handle parsing

errors before they become server errors. We'll still log this as a

problem, but there will no longer be a visible popup.

## Test Plan

Instead of seeing a visible error when formatting a document with syntax

issues, you should see this warning in the LSP logs:

<img width="991" alt="Screenshot 2024-06-04 at 3 38 23 PM"

src="https://github.com/astral-sh/ruff/assets/19577865/9d68947d-6462-4ca6-ab5a-65e573c91db6">

Similarly, if you try to format a range with syntax issues, you should

see this warning in the LSP logs instead of a visible error popup:

<img width="1010" alt="Screenshot 2024-06-04 at 3 39 10 PM"

src="https://github.com/astral-sh/ruff/assets/19577865/99fff098-798d-406a-976e-81ead0da0352">

---------

Co-authored-by: Zanie Blue <contact@zanie.dev>

## Summary

This PR fixes a bug where the lexer didn't consider the BOM into the

start offset.

fixes: #11731

## Test Plan

Add multiple test cases which involves BOM character in the source for

the lexer and verify the snapshot.

## Summary

This PR updates the lexer checkpoint to store the cursor offset instead

of cloning the cursor itself. This reduces the size of `LexerCheckpoint`

from 136 to 112 bytes and also removes the need for lifetime.

## Test Plan

`cargo insta test`

## Summary

Ensures that we respect per-file ignores and exemptions for these rules.

Specifically, we allow:

```python

# ruff: noqa: PGH004

```

...to ignore `PGH004`.

## Summary

Should resolve https://github.com/astral-sh/ruff/issues/11454.

This is my first PR to `ruff`, so I may have missed something.

If I understood the suggestion in the issue correctly, rule `PGH004`

should be set to `Preview` again.

## Test Plan

Created two fixtures derived from the issue.

## Summary

Switch name resolution in `infer_expression_type` from resolving the

public type of a symbol, to resolving the reachable definitions of that

symbol from the reference point, using the flow graph.

This surfaced a bug in the flow graph implementation and a bug in symbol

table building, both of which are also fixed here.

The bug in flow graph implementation was that when we pushed and popped

scopes, we didn't maintain a stack of "current flow nodes" in all

stacked scopes, to be restored when we returned to that scope. Now we

do.

The bug in symbol table building that we didn't visit the parts of

functions and class definitions in the correct scopes. E.g. decorators

should be visited in the outer scope, arguments should be visited inside

the type-params scope (if any) but not inside the function body scope,

and only the body itself should actually be visited inside the body

scope. Fixing this requires that we no longer use `walk_stmt` here,

instead we have to visit each individual component.

## Test Plan

Added test.

## Summary

Rename `infer_symbol_type` to `infer_symbol_public_type`, and allow it

to work on symbols with more than one definition. For now, use the most

cautious/sound inference, which is the union of all definitions. We can

prune this union more in future by eliminating definitions if we can

show that they can't be visible (this requires both that the symbol is

definitely later reassigned, and that there is no intervening

call/import that might be able to see the over-written definition).

## Test Plan

Added a test showing inference of union from multiple definitions.

## Summary

This PR fixes a bug where the `Generator` wouldn't add a newline before

a type alias statement. This is because it wasn't using the `statement`

macro which takes care of the newline.

Without this fix, a code like:

```py

type X = int

type Y = str

```

The generator would produce:

```py

type X = inttype Y = str

```

## Test Plan

Add a test case.

## Summary

This PR removes the following dependencies from the `ruff_python_parser`

crate:

* `anyhow` (moved to dev dependencies)

* `is-macro`

* `itertools`

The main motivation is that they aren't used much.

Additionally, it updates the return type of `parse_type_annotation` to

use a more specific `ParseError` instead of the generic `anyhow::Error`.

## Test Plan

`cargo insta test`

## Summary

This PR updates the logic for parsing type annotation to accept a

`ExprStringLiteral` node instead of the string value and the range.

The main motivation of this change is to simplify the implementation of

`parse_type_annotation` function with:

* Use the `opener_len` and `closer_len` from the string flags to get the

raw contents range instead of extracting it via

* `str::leading_quote(expression).unwrap().text_len()`

* `str::trailing_quote(expression).unwrap().text_len()`

* Avoid comparing the string content if we already know that it's

implicitly concatenated

## Test Plan

`cargo insta test`

## Summary

This PR re-orders the lexer methods in the following order:

1. `next_token`

2. `lex_token`

3. `eat_indentation`

4. `handle_indentation`

5. `skip_whitespace`

6. `consume_ascii_character`

7. `try_single_char_prefix`

8. `try_double_char_prefix`

9. `lex_identifier`

10. `lex_fstring_start`

11. `lex_fstring_middle_or_end`

12. `lex_string`

13. `lex_number`

14. `lex_number_radix`

15. `lex_decimal_number`

16. `radix_run`

17. `lex_comment`

18. `lex_ipython_escape_command`

19. `consume_end`

Following was considered for the ordering:

* 1 is the main entry point which delegates to 2

* 3, 4, 5 are all related to whitespace which is done first

* 6 is the entrypoint for an ascii character which delegates to 9, 12,

13, 17, 18, 19

* Others are grouped around similar kind of methods

## Summary

This PR updates the entire parser stack in multiple ways:

### Make the lexer lazy

* https://github.com/astral-sh/ruff/pull/11244

* https://github.com/astral-sh/ruff/pull/11473

Previously, Ruff's lexer would act as an iterator. The parser would

collect all the tokens in a vector first and then process the tokens to

create the syntax tree.

The first task in this project is to update the entire parsing flow to

make the lexer lazy. This includes the `Lexer`, `TokenSource`, and

`Parser`. For context, the `TokenSource` is a wrapper around the `Lexer`

to filter out the trivia tokens[^1]. Now, the parser will ask the token

source to get the next token and only then the lexer will continue and

emit the token. This means that the lexer needs to be aware of the

"current" token. When the `next_token` is called, the current token will

be updated with the newly lexed token.

The main motivation to make the lexer lazy is to allow re-lexing a token

in a different context. This is going to be really useful to make the

parser error resilience. For example, currently the emitted tokens

remains the same even if the parser can recover from an unclosed

parenthesis. This is important because the lexer emits a

`NonLogicalNewline` in parenthesized context while a normal `Newline` in

non-parenthesized context. This different kinds of newline is also used

to emit the indentation tokens which is important for the parser as it's

used to determine the start and end of a block.

Additionally, this allows us to implement the following functionalities:

1. Checkpoint - rewind infrastructure: The idea here is to create a

checkpoint and continue lexing. At a later point, this checkpoint can be

used to rewind the lexer back to the provided checkpoint.

2. Remove the `SoftKeywordTransformer` and instead use lookahead or

speculative parsing to determine whether a soft keyword is a keyword or

an identifier

3. Remove the `Tok` enum. The `Tok` enum represents the tokens emitted

by the lexer but it contains owned data which makes it expensive to

clone. The new `TokenKind` enum just represents the type of token which

is very cheap.

This brings up a question as to how will the parser get the owned value

which was stored on `Tok`. This will be solved by introducing a new

`TokenValue` enum which only contains a subset of token kinds which has

the owned value. This is stored on the lexer and is requested by the

parser when it wants to process the data. For example:

8196720f80/crates/ruff_python_parser/src/parser/expression.rs (L1260-L1262)

[^1]: Trivia tokens are `NonLogicalNewline` and `Comment`

### Remove `SoftKeywordTransformer`

* https://github.com/astral-sh/ruff/pull/11441

* https://github.com/astral-sh/ruff/pull/11459

* https://github.com/astral-sh/ruff/pull/11442

* https://github.com/astral-sh/ruff/pull/11443

* https://github.com/astral-sh/ruff/pull/11474

For context,

https://github.com/RustPython/RustPython/pull/4519/files#diff-5de40045e78e794aa5ab0b8aacf531aa477daf826d31ca129467703855408220

added support for soft keywords in the parser which uses infinite

lookahead to classify a soft keyword as a keyword or an identifier. This

is a brilliant idea as it basically wraps the existing Lexer and works

on top of it which means that the logic for lexing and re-lexing a soft

keyword remains separate. The change here is to remove

`SoftKeywordTransformer` and let the parser determine this based on

context, lookahead and speculative parsing.

* **Context:** The transformer needs to know the position of the lexer

between it being at a statement position or a simple statement position.

This is because a `match` token starts a compound statement while a

`type` token starts a simple statement. **The parser already knows

this.**

* **Lookahead:** Now that the parser knows the context it can perform

lookahead of up to two tokens to classify the soft keyword. The logic

for this is mentioned in the PR implementing it for `type` and `match

soft keyword.

* **Speculative parsing:** This is where the checkpoint - rewind

infrastructure helps. For `match` soft keyword, there are certain cases

for which we can't classify based on lookahead. The idea here is to

create a checkpoint and keep parsing. Based on whether the parsing was

successful and what tokens are ahead we can classify the remaining

cases. Refer to #11443 for more details.

If the soft keyword is being parsed in an identifier context, it'll be

converted to an identifier and the emitted token will be updated as

well. Refer

8196720f80/crates/ruff_python_parser/src/parser/expression.rs (L487-L491).

The `case` soft keyword doesn't require any special handling because

it'll be a keyword only in the context of a match statement.

### Update the parser API

* https://github.com/astral-sh/ruff/pull/11494

* https://github.com/astral-sh/ruff/pull/11505

Now that the lexer is in sync with the parser, and the parser helps to

determine whether a soft keyword is a keyword or an identifier, the

lexer cannot be used on its own. The reason being that it's not

sensitive to the context (which is correct). This means that the parser

API needs to be updated to not allow any access to the lexer.

Previously, there were multiple ways to parse the source code:

1. Passing the source code itself

2. Or, passing the tokens

Now that the lexer and parser are working together, the API

corresponding to (2) cannot exists. The final API is mentioned in this

PR description: https://github.com/astral-sh/ruff/pull/11494.

### Refactor the downstream tools (linter and formatter)

* https://github.com/astral-sh/ruff/pull/11511

* https://github.com/astral-sh/ruff/pull/11515

* https://github.com/astral-sh/ruff/pull/11529

* https://github.com/astral-sh/ruff/pull/11562

* https://github.com/astral-sh/ruff/pull/11592

And, the final set of changes involves updating all references of the

lexer and `Tok` enum. This was done in two-parts:

1. Update all the references in a way that doesn't require any changes

from this PR i.e., it can be done independently

* https://github.com/astral-sh/ruff/pull/11402

* https://github.com/astral-sh/ruff/pull/11406

* https://github.com/astral-sh/ruff/pull/11418

* https://github.com/astral-sh/ruff/pull/11419

* https://github.com/astral-sh/ruff/pull/11420

* https://github.com/astral-sh/ruff/pull/11424

2. Update all the remaining references to use the changes made in this

PR

For (2), there were various strategies used:

1. Introduce a new `Tokens` struct which wraps the token vector and add

methods to query a certain subset of tokens. These includes:

1. `up_to_first_unknown` which replaces the `tokenize` function

2. `in_range` and `after` which replaces the `lex_starts_at` function

where the former returns the tokens within the given range while the

latter returns all the tokens after the given offset

2. Introduce a new `TokenFlags` which is a set of flags to query certain

information from a token. Currently, this information is only limited to

any string type token but can be expanded to include other information

in the future as needed. https://github.com/astral-sh/ruff/pull/11578

3. Move the `CommentRanges` to the parsed output because this

information is common to both the linter and the formatter. This removes

the need for `tokens_and_ranges` function.

## Test Plan

- [x] Update and verify the test snapshots

- [x] Make sure the entire test suite is passing

- [x] Make sure there are no changes in the ecosystem checks

- [x] Run the fuzzer on the parser

- [x] Run this change on dozens of open-source projects

### Running this change on dozens of open-source projects

Refer to the PR description to get the list of open source projects used

for testing.

Now, the following tests were done between `main` and this branch:

1. Compare the output of `--select=E999` (syntax errors)

2. Compare the output of default rule selection

3. Compare the output of `--select=ALL`

**Conclusion: all output were same**

## What's next?

The next step is to introduce re-lexing logic and update the parser to

feed the recovery information to the lexer so that it can emit the

correct token. This moves us one step closer to having error resilience

in the parser and provides Ruff the possibility to lint even if the

source code contains syntax errors.

## Summary

Implement support for RDJson output for `ruff check`, as requested in

#8655.

## Test Plan

Tested using a snapshot test. Same approach as for e.g. the JSON output

formatter.

## Additional info

I tried to keep the implementation close to the JSON implementation.

I had to deviate a bit to make the `suggestions` key work: If there are

no suggestions, then setting `suggestions` to `null` is invalid

according to the JSONSchema. Therefore, I opted for a slightly more

complex implementation, that skips the `suggestions` key entirely if

there are no fixes available for the given diagnostic. Maybe it would

have been easier to set `"suggestions": []`, but I ended up doing it

this way.

I didn't consider notebooks, as I _think_ that RDJson doesn't work with

notebooks. This should be confirmed, and if so, there should be some

form of warning or error emitted when trying to output diagnostics for a

notebook.

I also didn't consider `ruff format`, as this comment:

https://github.com/astral-sh/ruff/issues/8655#issuecomment-1811446160

suggests that that wouldn't be compatible.

I'm new to Rust, any feedback is appreciated. 🙂 I

implemented this in order to have a productive rainy saturday afternoon,

I'm not knowledgeable about RDJson beyond the sources linked in the

issue.

## Summary

This PR implements the rule B901, which is part of the opinionated rules

of `flake8-bugbear`.

This rule seems to be desired in `ruff` as per

https://github.com/astral-sh/ruff/issues/3758 and

https://github.com/astral-sh/ruff/issues/2954#issuecomment-1441162976.

## Test Plan

As this PR was made closely following the

[CONTRIBUTING.md](8a25531a71/CONTRIBUTING.md),

it tests using the snapshot approach, that is described there.

## Sources

The implementation is inspired by [the original implementation in the

`flake8-bugbear`

repository](d1aec4cbef/bugbear.py (L1092)).

The error message and [test

file](d1aec4cbef/tests/b901.py)

where also copied from there.

The documentation I came up with on my own and needs improvement. Maybe

the example given in

https://github.com/astral-sh/ruff/issues/2954#issuecomment-1441162976

could be used, but maybe they are too complex, I'm not sure.

## Open Questions

- [ ] Documentation. (See above.)

- [x] Can I access the parent in a visitor?

The [original

implementation](d1aec4cbef/bugbear.py (L1100))

references the `yield` statement's parent to check if it is an

expression statement. I didn't find a way to do this in `ruff` and used

the `is_expresssion_statement` field on the visitor instead. What are

your thoughts on this? Is it possible and / or desired to access the

parent node here?

- [x] Is `Option::is_some(...)` -> `...unwrap()` the right thing to do?

Referring to [this piece of

code](9d5a280f71/crates/ruff_linter/src/rules/flake8_bugbear/rules/return_x_in_generator.rs?plain=1#L91-L96).

From my understanding, the `.unwrap()` is safe, because it is checked

that `return_` is not `None`. However, I feel like I missed a more

elegant solution that does both in one.

## Other

I don't know a lot about this rule, I just implemented it because I

found it in a

https://github.com/astral-sh/ruff/labels/good%20first%20issue.

I'm new to Rust, so any constructive critisism is appreciated.

---------

Co-authored-by: Charlie Marsh <charlie.r.marsh@gmail.com>

<!--

Thank you for contributing to Ruff! To help us out with reviewing,

please consider the following:

- Does this pull request include a summary of the change? (See below.)

- Does this pull request include a descriptive title?

- Does this pull request include references to any relevant issues?

-->

## Summary

Introduces the skeleton of the flow graph. So far it doesn't actually

handle any non-linear control flow :) But it does show how we can go

from an expression that references a symbol, backward through the flow

graph, to find reachable definitions of that symbol.

Adding non-linear control flow will mean adding flow nodes with multiple

predecessors, which will introduce more complexity into

`ReachableDefinitionsIterator.next()`. But one step at a time.

## Test Plan

Added a (very basic) test.

## Summary

Give red-knot the ability to infer int literal types. This is quick and

easy, mostly because these types are a convenient way to observe

control-flow handling with simple assignments.

## Test Plan

Added test.

## Summary

In the [roadmap for `ruff

server`](https://github.com/astral-sh/ruff/discussions/10581) support

for vim and kate is listed. Therefore I added setup guides for them

based on the neovim guide. As I don't use pyright I wasn't able to

translate the corresponding part from the neovim guide.

## Test Plan

Doesn't apply.

* Potentially resolves#11619 (nondeterministic hashmap order across

different architectures) in F401 by replacing a hashmap with

nondeterministic traversal order with an ordered mapping.

I'm not sure how to test this with our CI/CD. I don't have an s390x

machine at home. Should I try it in Qemu?

## Summary

In an `__init__.py` file, it's not uncommon to lack a logical indent

(since it may just contain imports). In such cases, we were always

falling back to four-space indent. This PR adds detection for indents

within import groups.

Closes https://github.com/astral-sh/ruff/issues/11606.

## Summary

This PR aims to close#10095 by adding an option

`init-allow-undef-export` to the `pyflakes` settings. This option is

currently set to `true` such that behavior is kept identical.

But setting this option to `false` will lead to `F822` warnings to be

shown in all files, **including** `__init__.py` files.

As I've mentioned on #10095, I think `init-allow-undef-export=false`

would be the more user-friendly default option, as it creates fewer

surprises. @charliermarsh what do you think about making that the

default?

With this option in place, it's a single line fix for people that rely

on the old behavior.

And thinking longer term, for future major releases, one could probably

consider deprecating the option and eventually having people just `noqa`

these warnings if they are not wanted.

## Test Plan

I've added a `test_init_f822_enabled` test which repeats the test that

is done in the `init` test but this time with

`init-allow-undef-export=false` and the snap file correctly shows that

ruff will then trigger the otherwise suppressed F822 warning.

closes#10095

## Summary

Removed stray space in sample code snippet that is against ruff's own

default formatting rules.

This documentation appears on

https://docs.astral.sh/ruff/rules/unused-import/

## Test Plan

This is a trivially obvious change, verifiable with `ruff format

--check`

## Summary

Closes https://github.com/astral-sh/ruff/issues/11587.

## Test Plan

- Added a lint error to `test_server.py` in `vscode-ruff`.

- Validated that, prior to this change, diagnostics appeared in the

file.

- Validated that, with this change, no diagnostics were shown.

- Validated that, with this change, no diagnostics were fixed on-save.

## Summary

- Implements `Y066` from `flake8-pyi` as `PYI066`

- Fixes `PYI006` not being raised for `elif` clauses. This would have

conflicted with PYI006's implementation, so decided to do it in the same

PR.

## Test Plan

`cargo test` / `cargo insta review`

* Add a module type, `ModuleTypeId`

* Add an attribute lookup method `get_member` for `Type`

* Only implemented for `ModuleTypeId` and `ClassTypeId`

* [x] Should this be a trait?

*Answer: no*

* [x] Uses `unwrap`, but we should remove that. Maybe add a new variant

to `QueryError`?

*Answer: Return `Option<Type>` as is done elsewhere*

* Add `infer_definition_type` case for `Import`

* Add `infer_expr_type` case for `Attribute`

* Add a test to exercise these

* [x] remove all NOTE/FIXME/TODO after discussing with reviewers

## Summary

This PR ensures that if a variable is bound via `global`, and then the

`global` is read, the originating variable is also marked as read. It's

not perfect, in that it won't detect _rebindings_, like:

```python

from app import redis_connection

def func():

global redis_connection

redis_connection = 1

redis_connection()

```

So, above, `redis_connection` is still marked as unused.

But it does avoid flagging `redis_connection` as unused in:

```python

from app import redis_connection

def func():

global redis_connection

redis_connection()

```

Closes https://github.com/astral-sh/ruff/issues/11518.

## Summary

Follow up to https://github.com/astral-sh/ruff/pull/11521

Removes the extra added complexity for catch all match cases. This

matches the implementation of plain `else` statements.

## Test Plan

Added new test cases.

---------

Co-authored-by: Dhruv Manilawala <dhruvmanila@gmail.com>

## Summary

This PR fixes the bug to avoid flattening the global-only settings for

the new server.

This was added in https://github.com/astral-sh/ruff/pull/11497, possibly

to correctly de-serialize an empty value (`{}`). But, this lead to a bug

where the configuration under the `settings` key was not being read for

global-only variant.

By using #[serde(default)], we ensure that the settings field in the

`GlobalOnly` variant is optional and that an empty JSON object `{}` is

correctly deserialized into `GlobalOnly` with a default `ClientSettings`

instance.

fixes: #11507

## Test Plan

Update the snapshot and existing test case. Also, verify the following

settings in Neovim:

1. Nothing

```lua

ruff = {

cmd = {

'/Users/dhruv/work/astral/ruff/target/debug/ruff',

'server',

'--preview',

},

}

```

2. Empty dictionary

```lua

ruff = {

cmd = {

'/Users/dhruv/work/astral/ruff/target/debug/ruff',

'server',

'--preview',

},

init_options = vim.empty_dict(),

}

```

3. Empty `settings`

```lua

ruff = {

cmd = {

'/Users/dhruv/work/astral/ruff/target/debug/ruff',

'server',

'--preview',

},

init_options = {

settings = vim.empty_dict(),

},

}

```

4. With some configuration:

```lua

ruff = {

cmd = {

'/Users/dhruv/work/astral/ruff/target/debug/ruff',

'server',

'--preview',

},

init_options = {

settings = {

configuration = '/tmp/ruff-repro/pyproject.toml',

},

},

}

```

## Summary

This PR brings back the functionality to remove empty strings when

converting to an f-string in `UP032`.

For context, https://github.com/astral-sh/ruff/pull/8712 added this

functionality to remove _trailing_ empty strings but it got removed in

https://github.com/astral-sh/ruff/pull/8697 possibly unexpectedly so.

There's one difference which is that this PR will remove _any_ empty

strings and not just trailing ones. For example,

```diff

--- /Users/dhruv/playground/ruff/src/UP032.py

+++ /Users/dhruv/playground/ruff/src/UP032.py

@@ -1,7 +1,5 @@

(

- "{a}"

- ""

- "{b}"

- ""

-).format(a=1, b=1)

+ f"{1}"

+ f"{1}"

+)

```

## Test Plan

Run `cargo insta test` and update the snapshots.

## Summary

This PR updates the sequence sorting (`RUF022` and `RUF023`) to avoid

using the owned data from the string token. Instead, we will directly

use the reference to the data on the AST. This does introduce a lot of

lifetimes but that's required.

The main motivation for this is to allow removing the `lex_starts_at`

usage easily.

### Alternatives

1. Extract the raw string content (stripping the prefix and quotes)

using the `Locator` and use that for comparison

2. Build up an

[`IndexVec`](3e30962077/crates/ruff_index/src/vec.rs)

and use the newtype index in place of the string value itself. This also

does require lifetimes so we might as well just use the method in this

PR.

## Test Plan

`cargo insta test` and no ecosystem changes

## Summary

Fixes#11506.

`RuffSettingsIndex::new` now searches for configuration files in parent

directories.

## Test Plan

I confirmed that the original test case described in the issue worked as

expected.

## Summary

Concurrent GitLab runners clone projects into separate directories, e.g.

`{builds_dir}/$RUNNER_TOKEN_KEY/$CONCURRENT_ID/$NAMESPACE/$PROJECT_NAME`.

Since the fingerprint uses the full path to the file, the fingerprints

calculated by Ruff are different depending on which concurrent runner it

executes on, so often an MR will appear to remove all existing issues

and add them with new fingerprints.

I've adjusted the fingerprint function to use the project relative path,

which fixes this. Unfortunately this will have a breaking change for any

current users of this output - the fingerprints will change and appear

in GitLab as all linting messages having been fixed and then created.

## Test Plan

`cargo nextest run`

Running `ruff check --output-format gitlab` in a git repo, moving the

repo and running again, verifying no diffs between the outputs

## Summary

Fixes#11534.

`DocumentQuery::source_type` now returns `PySourceType::Stub` when the

document is a `.pyi` file.

## Test Plan

I confirmed that stub-specific rule violations appeared with a build

from this PR (they were not visible from a `main` build).

<img width="1066" alt="Screenshot 2024-05-24 at 2 15 38 PM"

src="https://github.com/astral-sh/ruff/assets/19577865/cd519b7e-21e4-41c8-bc30-43eb6d4d438e">

Hi!

I left out some of the functions in the migration rule which became

removed in NumPy 2.0:

- `np.alltrue`

- `np.anytrue`

- `np.cumproduct`

- `np.product`

Addressing: https://github.com/numpy/numpy/issues/26493

## Summary

<!-- What's the purpose of the change? What does it do, and why? -->

Current doc says `sys.version[0]` will select the first digit of a major

version number (correct) then as an example says

> e.g., `"3.10"` would evaluate to `"1"`

(would actually evaluate to `"3"`). Changed the example version to a

two-digit number to make the problem more clear.

## Test Plan

<!-- How was it tested? -->

ran the following:

- `cargo run -p ruff -- check

crates/ruff_linter/resources/test/fixtures/flake8_2020/YTT301.py

--no-cache`

- `cargo insta review`

- `cargo test`

which all passed.

## Summary

Rule `logging-warn` (`G010`) prescribes a change from `warn` to

`warning` and has a corresponding autofix, but the autofix is mistakenly

titled ```"Convert to `warn`"``` instead of ```"Convert to `warning`"```

(the latter is what the autofix actually does). Seems to be a plain

typo.

## Summary

Fixes#11516

`ruff server` was sending both regular source actions and notebook

source actions back when passed an empty action filter. This PR makes a

few small changes so that notebook source actions are not sent when

regular source actions are sent, which means that an empty filter will

only return regular source actions.

## Test Plan

I confirmed that duplicate code actions no longer appeared in Neovim,

using a configuration similar to the one from the original issue.

<img width="509" alt="Screenshot 2024-05-23 at 11 48 48 PM"

src="https://github.com/astral-sh/ruff/assets/19577865/9a5d6907-dd41-48bd-b015-8a344c5e0b3f">

## Summary

It turns out that `singledispatch` does end up evaluating all arguments,

even though only the first is used to dispatch.

Closes https://github.com/astral-sh/ruff/issues/11520.

## Summary

Addresses #8451 by implementing rule 116 to add an unsafe fix when sleep

is used with a >24 hour interval to instead consider sleeping forever.

This rule is added as async instead as I my understanding was that these

trio rules would be moved to async anyway.

There are a couple of TODOs, which address further extending the rule by

adding support for lookups and evaluations, and also supporting `anyio`.

## Summary

This PR updates the `FA102` rule logic to use the `Importer` which is

available on the `Checker`.

The main motivation is that this would make updating the `Importer` to

use the `Tokens` struct which will be required to remove the

`lex_starts_at` usage in `Insertion::start_of_block` method.

## Test Plan

`cargo insta test`

## Summary

Fixes https://github.com/astral-sh/ruff/issues/11236.

This PR fixes several issues, most of which relate to non-VS Code

editors (Helix and Neovim).

1. Global-only initialization options are now correctly deserialized

from Neovim and Helix

2. Empty diagnostics are now published correctly for Neovim and Helix.

3. A workspace folder is created at the current working directory if the

initialization parameters send an empty list of workspace folders.

4. The server now gracefully handles opening files outside of any known

workspace, and will use global fallback settings taken from client

editor settings and a user settings TOML, if it exists.

## Test Plan

I've tested to confirm that each issue has been fixed.

* Global-only initialization options are now correctly deserialized from

Neovim and Helix + the server gracefully handles opening files outside

of any known workspace

https://github.com/astral-sh/ruff/assets/19577865/4f33477f-20c8-4e50-8214-6608b1a1ea6b

* Empty diagnostics are now published correctly for Neovim and Helix

https://github.com/astral-sh/ruff/assets/19577865/c93f56a0-f75d-466f-9f40-d77f99cf0637

* A workspace folder is created at the current working directory if the

initialization parameters send an empty list of workspace folders.

https://github.com/astral-sh/ruff/assets/19577865/b4b2e818-4b0d-40ce-961d-5831478cc726

## Summary

Similar to #11414, this PR extends `UP037` to flag quoted annotations

that are located in positions that won't be evaluated at runtime.

For example, the quotes on `Tuple` are unnecessary in:

```python

from typing import TYPE_CHECKING

if TYPE_CHECKING:

from typing import Tuple

def foo():

x: "Tuple[int, int]" = (0, 0)

foo()

```

## Summary

Recent changes made in the [Jupyter Notebook feature

PR](https://github.com/astral-sh/ruff/pull/11206) caused automatic

configuration reloading to stop working. This was because we would check

for paths to reload using the changed path, when we should have been

using the parent path of the changed path (to get the directory it was

changed in).

Additionally, this PR fixes an issue where `ruff.toml` and `.ruff.toml`

files were not being automatically reloaded.

Finally, this PR improves configuration reloading by actively publishing

diagnostics for notebook documents (which won't be affected by the

workspace refresh since they don't use pull diagnostics). It will also

publish diagnostics for text documents if pull diagnostics aren't

supported.

## Test Plan

To test this, open an existing configuration file in a codebase, and

make modifications that will affect one or more open Python / Jupyter

Notebook files. You should observe that the diagnostics for both kinds

of files update automatically when the file changes are saved.

Here's a test video showing what a successful test should look like:

https://github.com/astral-sh/ruff/assets/19577865/7172b598-d6de-4965-b33c-6cb8b911ef6c

## Summary