## Summary

Fixes https://github.com/astral-sh/ruff/issues/11236.

This PR fixes several issues, most of which relate to non-VS Code editors (Helix and Neovim).

1. Global-only initialization options are now correctly deserialized from Neovim and Helix.
2. Empty diagnostics are now published correctly for Neovim and Helix.
3. A workspace folder is created at the current working directory if the initialization parameters send an empty list of workspace folders.
4. The server now gracefully handles opening files outside of any known workspace, and will use global fallback settings taken from client editor settings and a user settings TOML, if it exists.
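Fixes 3 and 4 amount to a small resolution policy. The following is a minimal Python sketch of that policy, not `ruff server`'s Rust code. `resolve_workspace_folders` and `settings_for` are hypothetical names. The sketch assumes settings are plain dicts keyed by workspace root, and that the innermost workspace wins when workspaces are nested.

```python
import os
from pathlib import Path


def resolve_workspace_folders(folders: list) -> list:
    """Fall back to the current working directory when the client sends no folders."""
    if not folders:
        return [Path(os.getcwd())]
    return [Path(folder) for folder in folders]


def settings_for(document: Path, workspaces: dict, fallback: dict) -> dict:
    """Pick the settings of the innermost workspace containing `document`,
    or the global fallback settings if it lies outside every known workspace."""
    candidates = [root for root in workspaces if document.is_relative_to(root)]
    if not candidates:
        return fallback
    # The root with the most path components is the most specific match.
    innermost = max(candidates, key=lambda root: len(root.parts))
    return workspaces[innermost]
```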

## Test Plan

I've tested to confirm that each issue has been fixed.

* Global-only initialization options are now correctly deserialized from Neovim and Helix, and the server gracefully handles opening files outside of any known workspace

https://github.com/astral-sh/ruff/assets/19577865/4f33477f-20c8-4e50-8214-6608b1a1ea6b

* Empty diagnostics are now published correctly for Neovim and Helix

https://github.com/astral-sh/ruff/assets/19577865/c93f56a0-f75d-466f-9f40-d77f99cf0637

* A workspace folder is created at the current working directory if the initialization parameters send an empty list of workspace folders.

https://github.com/astral-sh/ruff/assets/19577865/b4b2e818-4b0d-40ce-961d-5831478cc726

## Summary

Similar to #11414, this PR extends `UP037` to flag quoted annotations that are located in positions that won't be evaluated at runtime.

For example, the quotes on `Tuple` are unnecessary in:

```python
from typing import TYPE_CHECKING

if TYPE_CHECKING:
    from typing import Tuple


def foo():
    x: "Tuple[int, int]" = (0, 0)


foo()
```

## Summary

Recent changes made in the [Jupyter Notebook feature PR](https://github.com/astral-sh/ruff/pull/11206) caused automatic configuration reloading to stop working. This was because we would check for paths to reload using the changed path, when we should have been using the parent path of the changed path (to get the directory it was changed in).

Additionally, this PR fixes an issue where `ruff.toml` and `.ruff.toml` files were not being automatically reloaded.

Finally, this PR improves configuration reloading by actively publishing diagnostics for notebook documents (which won't be affected by the workspace refresh since they don't use pull diagnostics). It will also publish diagnostics for text documents if pull diagnostics aren't supported.
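The parent-path fix can be pictured with a minimal Python sketch. It assumes workspace settings are indexed by the directory that contains each configuration file. In that case, looking up the changed file's own path can never match, and its parent directory has to be used instead. `directory_to_reload` is a hypothetical name. Including `pyproject.toml` in the watched set is an assumption: the PR only says that `ruff.toml` and `.ruff.toml` were previously missed.

```python
from pathlib import Path
from typing import Optional

# Configuration file names that should trigger an automatic reload (assumed set).
CONFIG_FILE_NAMES = {"pyproject.toml", "ruff.toml", ".ruff.toml"}


def directory_to_reload(changed: Path, settings_index: dict) -> Optional[Path]:
    """Return the indexed directory whose settings must be rebuilt, if any.

    `settings_index` is keyed by directory, so the lookup uses `changed.parent`;
    looking up `changed` itself (the old behavior) would never find an entry.
    """
    if changed.name not in CONFIG_FILE_NAMES:
        return None
    directory = changed.parent
    return directory if directory in settings_index else None
```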

## Test Plan

To test this, open an existing configuration file in a codebase, and make modifications that will affect one or more open Python / Jupyter Notebook files. You should observe that the diagnostics for both kinds of files update automatically when the file changes are saved.

Here's a test video showing what a successful test should look like:

https://github.com/astral-sh/ruff/assets/19577865/7172b598-d6de-4965-b33c-6cb8b911ef6c

## Summary

Previously, `ruff.applyFormat`, seen in VS Code as the command `Ruff: Format Document`, would only format the currently active notebook cell inside a notebook document. This PR makes `ruff.applyFormat` format the entire notebook document at once, operating on each code cell in order.

## Test Plan

1. Open a notebook document that has multiple unformatted code cells.

2. Run `Ruff: Format Document` through the Command Palette (`Ctrl/Cmd+Shift+P` by default).

3. Observe that all code cells in the notebook have been formatted.

## Summary

This PR moves the `has_comments` function from `Indexer` to `CommentRanges`. The main motivation is that `CommentRanges` will now be built by the parser, which is shared between the linter and the formatter. Thus, `CommentRanges` will be removed from the `Indexer`.

## Test Plan

`cargo test`

## Summary

Matching Pylint, we now omit the `try` body itself from branch counting. Each `except` counts as a branch, as do the `else` and the `finally`.

Closes https://github.com/astral-sh/ruff/issues/11205.
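The counting rule for `try` statements can be expressed with Python's `ast` module. The following is a minimal sketch of that rule, not Ruff's Rust implementation. For brevity it counts only `try` statements and ignores every other branching construct. `try_branches` and `count_try_branches` are hypothetical helpers.

```python
import ast


def try_branches(node: ast.Try) -> int:
    """Branches contributed by one `try` statement: the body itself is not
    counted, but each `except` handler, the `else`, and the `finally` are."""
    branches = len(node.handlers)
    if node.orelse:
        branches += 1
    if node.finalbody:
        branches += 1
    return branches


def count_try_branches(source: str) -> int:
    """Sum the branches of every `try` statement in `source` (other constructs ignored)."""
    return sum(
        try_branches(node)
        for node in ast.walk(ast.parse(source))
        if isinstance(node, ast.Try)
    )
```

For a `try` with two `except` clauses, an `else`, and a `finally`, this yields four branches.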

## Summary

Closes https://github.com/astral-sh/ruff/issues/10858.

`ruff server` now supports `*.ipynb` (aka Jupyter Notebook) files. Extensive internal changes have been made to facilitate this, which I've done some work to contextualize with documentation and a pre-review that highlights notable sections of the code.

`*.ipynb` cells should behave similarly to `*.py` documents, with one major exception. The format command `ruff.applyFormat` will only apply to the currently selected notebook cell; if you want to format an entire notebook document, use `Format Notebook` from the VS Code context menu.

## Test Plan

The VS Code extension does not yet have Jupyter Notebook support enabled, so you'll first need to enable it manually. To do this, check out the `pre-release` branch and modify `src/common/server.ts` as follows:

Before:

After:

I recommend testing this PR with large, complicated notebook files. I used notebook files from [this popular repository](https://github.com/jakevdp/PythonDataScienceHandbook/tree/master/notebooks) in my preliminary testing.

The main thing to test is ensuring that notebook cells behave the same as Python documents, besides the aforementioned issue with `ruff.applyFormat`. You should also test adding and deleting cells (in particular, deleting all the code cells and ensuring that doesn't break anything), changing the kind of a cell (i.e. from markup -> code or vice versa), and creating a new notebook file from scratch. Finally, you should also test that source actions work as expected (and across the entire notebook).

Note: `ruff.applyAutofix` and `ruff.applyOrganizeImports` are currently broken for notebook files, and I suspect it has something to do with https://github.com/astral-sh/ruff/issues/11248. Once this is fixed, I will update the test plan accordingly.

---------

Co-authored-by: nolan <nolan.king90@gmail.com>

The wording 'negative comparison' is a rather vague description of the 'is not' operation and does not describe what the 'not in' operation does (it was potentially copied from 'is not'). It was replaced with more precise language describing the operators, taken from the official Python docs[1].

Neither rule had strong reasoning beyond 'it's bad, use the other'. The origin of these rules seems to be PEP 8[2], which prefers 'is not' over 'not ... is' for readability. This is now reflected in the description.

[1]: https://docs.python.org/3/reference/expressions.html#membership-test-operations
[2]: https://peps.python.org/pep-0008/#programming-recommendations

## Summary

If an annotation won't be evaluated at runtime, we don't need to flag `from __future__ import annotations` as required. This applies both to quoted annotations and annotations outside of runtime-evaluated positions, like:

```python
def main() -> None:
    a_list: list[str] | None = []
    a_list.append("hello")
```

Closes https://github.com/astral-sh/ruff/issues/11397.

## Summary

* Update documentation for F401 following recent PRs
  * #11168
  * #11314
* Deprecate `ignore_init_module_imports`
  * Add a deprecation pragma to the option and a "warn user once" message when the option is used.
* Restore the old behavior for stable (non-preview) mode:
  * When `ignore_init_module_imports` is set to `true` (default) there are no `__init__.py` fixes (but we get nice fix titles!).
  * When `ignore_init_module_imports` is set to `false` there are unsafe `__init__.py` fixes to remove unused imports.
  * When preview mode is enabled, it overrides `ignore_init_module_imports`.
* Fixed a bug in fix titles where `import foo as bar` would recommend reexporting `bar as bar`. It now says to reexport `foo as foo`. (In this case we don't issue a fix, fwiw; it was just a fix title bug.)
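The three situations in the list reduce to a small decision table. The following minimal Python sketch is only a restatement of the list, not Ruff's code. `init_fix` and the `InitFix` labels are hypothetical names.

```python
from enum import Enum
from typing import Optional


class InitFix(Enum):
    # Hypothetical labels for the kind of fix F401 offers in `__init__.py`.
    UNSAFE_REMOVAL = "unsafe removal of the unused import"
    PREVIEW = "preview behavior (see #11314)"


def init_fix(preview: bool, ignore_init_module_imports: bool = True) -> Optional[InitFix]:
    if preview:
        # Preview mode overrides the deprecated option entirely.
        return InitFix.PREVIEW
    if ignore_init_module_imports:
        # Stable default: diagnostics with fix titles, but no fix.
        return None
    # Stable mode with the option explicitly set to `false`.
    return InitFix.UNSAFE_REMOVAL
```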

## Test plan

Added new fixture tests that reuse the existing fixtures for `__init__.py` files. Each of the three situations listed above has fixture tests. The F401 "stable" tests cover:

> * When `ignore_init_module_imports` is set to `true` (default) there are no `__init__.py` fixes (but we get nice fix titles!).

The F401 "deprecated option" tests cover:

> * When `ignore_init_module_imports` is set to `false` there are unsafe `__init__.py` fixes to remove unused imports.

These complement existing "preview" tests that show the new behavior, which recommends fixes in `__init__.py` according to whether the import is first-party and other circumstances (for more on that behavior, see #11314).

## Summary

This is a follow-up PR to #11445 to update the `E27` rules to consider soft keywords as well.

## Test Plan

Add test cases consisting of soft keywords and update the snapshot.

## Summary

We weren't treating the escaped newline as a valid condition to trigger the safer fix (add an extra backslash before each invalid escape sequence).

Closes https://github.com/astral-sh/ruff/issues/11461.
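Why the escaped newline matters can be shown with a minimal Python sketch. It assumes the fix logic is: prefix `r` when every escape in the literal is invalid, and double the backslash of each invalid escape when any valid escape is present, because a raw prefix would change the meaning of the valid escape. An escaped newline (a backslash at the end of a line) is one such valid escape. `fix_invalid_escapes` is a hypothetical helper. It handles only the contents of a double-quoted, non-bytes, unprefixed literal.

```python
# Characters that may legally follow a backslash in a (non-bytes) string literal.
# "\n" here is a literal newline: a backslash at the end of a line is an escaped newline.
VALID_ESCAPE_CHARS = set("\n\\'\"abfnrtv01234567xNuU")


def fix_invalid_escapes(body: str) -> str:
    """Return a fixed double-quoted literal for the string contents `body`."""
    invalid = set()  # indices of backslashes that start an invalid escape
    has_valid = False
    i = 0
    while i < len(body):
        if body[i] == "\\" and i + 1 < len(body):
            if body[i + 1] in VALID_ESCAPE_CHARS:
                has_valid = True
            else:
                invalid.add(i)
            i += 2  # skip over the escaped character
        else:
            i += 1
    if not invalid:
        return f'"{body}"'
    if not has_valid:
        # No valid escapes: a raw prefix cannot change the meaning.
        return f'r"{body}"'
    # Safer fix: double the backslash of each invalid escape, keep valid ones intact.
    fixed = "".join("\\" + ch if index in invalid else ch for index, ch in enumerate(body))
    return f'"{fixed}"'
```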

## Summary

This PR updates the `TokenKind::is_keyword` check to include soft keywords. To account for this change, it adds a new `is_non_soft_keyword` method.

The usages in the logical line rules were updated to use the `is_non_soft_keyword` method, but they'll be updated to use `is_keyword` in a follow-up PR (#11446).

The parser usages, however, were kept as is. Because of that, the snapshots for two test cases changed, and the new output is an improvement.
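Python's standard `keyword` module draws the same hard/soft distinction. The following sketch is an analogy, not Ruff's `TokenKind` API: `is_keyword` and `is_non_soft_keyword` here are hypothetical Python functions named after the Rust methods. The soft keyword list depends on the Python version. `match`, `case`, and `_` were added in 3.10, and `type` in 3.12.

```python
import keyword


def is_keyword(name: str) -> bool:
    """Hard or soft keyword, mirroring the broadened `is_keyword` check."""
    return keyword.iskeyword(name) or keyword.issoftkeyword(name)


def is_non_soft_keyword(name: str) -> bool:
    """Only the reserved (hard) keywords."""
    return keyword.iskeyword(name)
```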

## Test Plan

`cargo insta test`

## Summary

We already have handling for "references that get quoted within our quoted references", but we were assuming a specific ordering in the way edits were generated.

Closes https://github.com/astral-sh/ruff/issues/11449.

This is useful for extracting the defaults in order to construct equivalent configs with external scripts. This is my first non-hello-world Rust code; comments and suggested tests are appreciated.

## Summary

We already have `ruff linter --output-format json`; this provides `ruff config x --output-format json` as well. I plan to use this to construct an equivalent config snippet to include in some managed repos, so that when we update their version of Ruff and it adds new lints, they get a PR that includes the commented-out new lints.

Note that the no-args form of `ruff config` currently ignores `--output-format`, but it probably should obey it (although an array of strings doesn't seem that useful; I'm looking for input on the format).

## Test Plan

I could use a hand coming up with a typical way to write automated tests for this.

```sh-session
(.venv) [timhatch:ruff ]$ ./target/debug/ruff config lint.select
A list of rule codes or prefixes to enable. Prefixes can specify exact
rules (like `F841`), entire categories (like `F`), or anything in
between.

When breaking ties between enabled and disabled rules (via `select` and
`ignore`, respectively), more specific prefixes override less
specific prefixes.

Default value: ["E4", "E7", "E9", "F"]
Type: list[RuleSelector]
Example usage:
``toml
# On top of the defaults (`E4`, E7`, `E9`, and `F`), enable flake8-bugbear (`B`) and flake8-quotes (`Q`).
select = ["E4", "E7", "E9", "F", "B", "Q"]
``
(.venv) [timhatch:ruff ]$ ./target/debug/ruff config lint.select --output-format json
{
  "Field": {
    "doc": "A list of rule codes or prefixes to enable. Prefixes can specify exact\nrules (like `F841`), entire categories (like `F`), or anything in\nbetween.\n\nWhen breaking ties between enabled and disabled rules (via `select` and\n`ignore`, respectively), more specific prefixes override less\nspecific prefixes.",
    "default": "[\"E4\", \"E7\", \"E9\", \"F\"]",
    "value_type": "list[RuleSelector]",
    "scope": null,
    "example": "# On top of the defaults (`E4`, E7`, `E9`, and `F`), enable flake8-bugbear (`B`) and flake8-quotes (`Q`).\nselect = [\"E4\", \"E7\", \"E9\", \"F\", \"B\", \"Q\"]",
    "deprecated": null
  }
}
```
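An external script could consume the JSON output above roughly as follows. This is a minimal Python sketch: `default_value` and `commented_snippet` are hypothetical helpers, and the commented-out TOML entry format is an assumption about how the managed-repo snippet might look. The `default` field is itself a string. For `lint.select` that string happens to be valid JSON, but this may not hold for every option.

```python
import json

# Abbreviated copy of the `ruff config lint.select --output-format json` output above.
SAMPLE_OUTPUT = r'''
{
  "Field": {
    "default": "[\"E4\", \"E7\", \"E9\", \"F\"]",
    "value_type": "list[RuleSelector]",
    "scope": null,
    "deprecated": null
  }
}
'''


def default_value(raw_output: str) -> list:
    """Decode the `Field` entry, then decode its `default` string a second time."""
    field = json.loads(raw_output)["Field"]
    return json.loads(field["default"])


def commented_snippet(codes: list) -> str:
    """Render rule codes as commented-out TOML array entries."""
    return "\n".join(f'# "{code}",' for code in codes)
```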

## Summary

As discussed in issue #11408, PLR0912 has a broader definition of "branches" than I expected. This updates the documentation to include this definition.

I also updated the example to include several different types of branches, while still maintaining dictionary lookup as an alternative solution. (Crafting a realistic example was quite a challenge 😅).

Closes https://github.com/astral-sh/ruff/issues/11408.

## Summary

This moves the string-prefix enumerations in `ruff_python_ast` to a separate submodule. I think this helps clarify that these prefixes are purely abstract: they only depend on each other, and do not depend on any of the other code in `nodes.rs` in any way. Moreover, while various AST nodes _use_ them, they're not really nodes themselves, so they feel slightly out of place in `nodes.rs`.

I considered moving all of them to `str.rs`, but it felt like enough code that it could be a separate submodule.

## Test Plan

`cargo test`

Follow-up to #11168; resolves #10391.

# User facing changes

* F401 now recommends a fix to add unused import bindings to `__all__` if a single `__all__` list or tuple is found in `__init__.py`.
* If no `__all__` is found in the file, it falls back to recommending redundant aliases.
* If there are multiple `__all__` definitions, or only one but of the wrong type (not a list or tuple), then diagnostics are generated without fixes.
* `fix_title` is updated to reflect what the fix/recommendation is.

Subtlety: For a renamed import such as `import foo as bees`, we can generate a fix to add `bees` to `__all__` but cannot generate a fix to produce a redundant import (because that would break uses of the binding `bees`).

# Implementation changes

* Add a `name` field to `ImportBinding` to contain the name of the _binding_ we want to add to `__all__` (important for the `import foo as bees` case). It previously only contained the `AnyImport`, which can give us information about the import but not the binding.
* Add a `binding` field to `UnusedImport` to contain the same. (Naming note: the `name` field already existed on `UnusedImport` and contains the qualified name of the imported symbol/module.)
* Change `fix_by_reexporting` to branch on the size of `dunder_all: Vec<&Expr>`
  * For length 0, call the edit-producing function `make_redundant_alias`.
  * For length 1, call the edit-producing function `add_to_dunder_all`.
  * Otherwise, produce no fix.
* Implement the edit-producing function `add_to_dunder_all` and add unit tests.
* Implement several fixture tests: empty `__all__ = []`, nonempty `__all__ = ["foo"]`, mis-typed `__all__ = None`, plus-eq `__all__ += ["foo"]`
* The `UnusedImportContext::Init` variant now has two fields: whether the fix is in `__init__.py` and how many `__all__` were found.
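The branching in `fix_by_reexporting` can be approximated in Python with the `ast` module. This is a minimal sketch, not the Rust implementation. `find_dunder_all` and `reexport_fix` are hypothetical helpers that return a description instead of an edit. The sketch only recognizes plain `__all__ = ...` assignments, not `+=`. It also simplifies the redundant alias to the `import x as x` form.

```python
import ast
from typing import Optional


def find_dunder_all(module: ast.Module) -> list:
    """Values assigned to `__all__` at module level (plain assignments only)."""
    return [
        stmt.value
        for stmt in module.body
        if isinstance(stmt, ast.Assign)
        and any(isinstance(t, ast.Name) and t.id == "__all__" for t in stmt.targets)
    ]


def reexport_fix(binding: str, module_name: str, dunder_all: list) -> Optional[str]:
    """Describe the fix for an unused import binding, branching on how many `__all__` exist."""
    if len(dunder_all) == 0:
        # Redundant alias; impossible for a renamed import like `import foo as bees`.
        return f"import {module_name} as {binding}" if binding == module_name else None
    if len(dunder_all) == 1 and isinstance(dunder_all[0], (ast.List, ast.Tuple)):
        return f"add {binding!r} to __all__"
    # Several `__all__` definitions, or one of the wrong type: no fix.
    return None
```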

# Other changes

* Remove a spurious pattern match and use field lookups instead, because the addition of a field would have required changing the unrelated pattern.
* Tweak the input type of `make_redundant_alias`

---------

Co-authored-by: Alex Waygood <Alex.Waygood@Gmail.com>

## Summary

This PR follows up on #11420 to move `UP034` to use `TokenKind` instead of `Tok`.

The main reason to have a separate PR is to make reviewing easier. This required a lot more updates because the rule used an index (`i`) to keep track of the current position in the token vector. Now that it's just an iterator, we use `next` to move the iterator forward and extract the relevant information.

This is part of https://github.com/astral-sh/ruff/issues/11401

## Test Plan

`cargo test`

## Summary

This PR moves the following rules to use `TokenKind` instead of `Tok`:

* `PLE2510`, `PLE2512`, `PLE2513`, `PLE2514`, `PLE2515`

* `E701`, `E702`, `E703`

* `ISC001`, `ISC002`

* `COM812`, `COM818`, `COM819`

* `W391`

I've paused here because the next set of rules (`pyupgrade::rules::extraneous_parentheses`) indexes into the token slice, but we only have an iterator implementation. So, I want to isolate that change to make sure the logic is still the same when I move to using the iterator approach.

This is part of #11401

## Test Plan

`cargo test`

## Summary

Alternative to #11237

This PR adds a new `Tokens` struct, which is a newtype wrapper around a vector of lexer output. This allows us to add a `kinds` method, which returns an iterator over the corresponding `TokenKind`. This iterator is implemented as a separate `TokenKindIter` struct so that the type can be named and additional methods like `peek` can be provided directly on the iterator. This lets the linter access the stream of `TokenKind` instead of `Tok`.

Edit: I've made the necessary downstream changes and plan to merge the entire stack at once.

## Summary

This PR updates the linter benchmark to use the `tokenize` function instead of the lexer.

The linter expects the token list to contain tokens up to and including the first error, which is what the `ruff_python_parser::tokenize` function returns. This was not a problem before because the benchmarks only use valid Python code.

## Summary

This PR adds a newtype wrapper around `Vec<FStringElement>` that derefs to a `&Vec<FStringElement>`.

Both f-strings and format specifiers are made up of `Vec<FStringElement>`. By creating a newtype wrapper around it, we can share the methods between both parent types.

## Summary

This PR adds support for iterating over each part of a string-like expression.

This is similar to the one in the formatter:

128414cd95/crates/ruff_python_formatter/src/string/any.rs (L121-L125)

That said, I don't think it's a 1-1 replacement for the formatter's version, because the formatter's implementation carries additional information for certain variants (as can be seen for `FString`).

The main motivation is to avoid duplication for rules that work only on the parts of the string and don't require any information from the parent node. Here, the parent node is the expression node, which could be an implicitly concatenated string.

This PR also updates certain rule implementations to make use of this and avoid logic duplication.

## Summary

This PR renames `AnyStringKind` to `AnyStringFlags` and `AnyStringFlags` to `AnyStringFlagsInner`.

The main motivation is to have consistent usage of "kind" and "flags". Each string kind has a "flags" type, like `StringLiteralFlags`, `BytesLiteralFlags`, and `FStringFlags`, but the "any" variant used `AnyStringKind`.

## Summary

Changes the `future-rewritable-type-annotation` (`FA100`) message to be less confusing. It uses phrasing from the rule documentation to be consistent.

For example,

```
from_typing_import.py:5:13: FA100 Add `from __future__ import annotations` to rewrite `typing.List` more succinctly
```

Closes #10573.

## Test Plan

`cargo nextest run`

## Summary

Should this consider the decorator only if the name is actually a property, or is the logic in this PR correct?

fixes: #11358

## Test Plan

Add test case.

## Summary

This PR fixes a bug where the auto-fix for `TCH005` would delete the entire `if` statement.

The fix in this PR is to not consider it a violation if there are any `elif`/`else` blocks. This also matches the behavior of the original plugin.
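The new condition can be sketched with Python's `ast` module. This is a minimal illustration, not Ruff's implementation. `empty_type_checking_blocks` is a hypothetical helper that returns the line numbers of empty `if TYPE_CHECKING:` blocks. Any block with an `elif` or `else` is skipped, because deleting the whole `if` statement would also delete that branch.

```python
import ast


def is_type_checking_test(test: ast.expr) -> bool:
    # Accept both `TYPE_CHECKING` and `typing.TYPE_CHECKING`.
    if isinstance(test, ast.Name):
        return test.id == "TYPE_CHECKING"
    return isinstance(test, ast.Attribute) and test.attr == "TYPE_CHECKING"


def empty_type_checking_blocks(source: str) -> list:
    """Line numbers of `if TYPE_CHECKING: pass` blocks that would be flagged."""
    lines = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.If)
            and is_type_checking_test(node.test)
            and all(isinstance(stmt, ast.Pass) for stmt in node.body)
            # An `elif`/`else` branch means the block is not a violation.
            and not node.orelse
        ):
            lines.append(node.lineno)
    return lines
```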

fixes: #11368

## Test plan

Add test cases.

## Summary

Fixes https://github.com/astral-sh/ruff/issues/10594.

Code actions to disable a diagnostic via a `noqa` comment are now available.

https://github.com/astral-sh/ruff/assets/19577865/6d3bcf11-a9d9-499b-8c7f-a10cd39cfbba

`DiagnosticFix` has been changed so that `noqa` code actions appear even for diagnostics with no available quick fix. It can contain quick fix edits, `noqa` comment edits, or both.

## Test Plan

The scenarios that need to be tested are as follows:

* A code action to disable a diagnostic should be available for every diagnostic.
* Using this code action should append to the appropriate line with the diagnostic, or modify an existing `noqa` comment.
* Adding a `noqa` comment manually should make a diagnostic disappear.
* `Fix all auto-fixable problems` should not add `noqa` comments.
* Removing a code from a `noqa` comment should make the diagnostic re-appear.

## Summary

`--add-noqa` now runs in two stages: first, the linter finds all diagnostics that need `noqa` comments and generates edits on a per-line basis. Second, these edits are applied, in order, to the document.

A public-facing function, `generate_noqa_edits`, has also been introduced, which returns `noqa` edits generated on a per-diagnostic basis. This will be used by `ruff server` for `noqa` comment quick-fixes.
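A per-line `noqa` edit either appends a new comment or merges a code into an existing one. The following minimal Python sketch illustrates that behavior. It does not reproduce `generate_noqa_edits`. `add_noqa` is a hypothetical helper, and its regex is a simplification of Ruff's `noqa` parsing.

```python
import re

# Simplified pattern for `# noqa` or `# noqa: A1, B2` (codes optional).
NOQA = re.compile(
    r"#\s*noqa(?::\s*(?P<codes>[A-Z]+[0-9]+(?:[,\s]+[A-Z]+[0-9]+)*))?", re.IGNORECASE
)


def add_noqa(line: str, code: str) -> str:
    """Return `line` with `code` suppressed by a `noqa` comment."""
    match = NOQA.search(line)
    if match is None:
        # No comment yet: append a new one.
        return f"{line.rstrip()}  # noqa: {code}"
    codes_text = match.group("codes")
    if codes_text is None:
        # A blanket `# noqa` already suppresses everything.
        return line
    codes = re.split(r"[,\s]+", codes_text)
    if code in codes:
        return line
    merged = ", ".join(sorted(set(codes) | {code}))
    return line[: match.start()] + f"# noqa: {merged}" + line[match.end():]
```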

## Test Plan

Unit tests have been updated.

## Summary

This PR updates the semantic model to detect attribute docstrings. Refer to [PEP 258](https://peps.python.org/pep-0258/#attribute-docstrings) for the definition of an attribute docstring.

This PR doesn't add full support for it, but only considers string literals as attribute docstrings in the following cases:

1. A string literal following an assignment statement in the **global scope**.
2. A global class attribute.

For an assignment statement, the following string literal is considered an attribute docstring only if the target expression is a name expression (`x = 1`). So chained assignments, multiple assignments or unpacking, and starred expressions, which are all valid in the target position, aren't considered here.

In the `__init__` method, an assignment to an attribute of `self`, like `self.x = 1`, is also a candidate for an attribute docstring. **This PR does not support this position.**
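The supported positions can be illustrated with Python's `ast` module. This minimal sketch is not Ruff's semantic model. `attribute_docstrings` is a hypothetical helper that maps names (class attributes prefixed with the class name) to the string literal that immediately follows their assignment. Only single-name targets in the module body or in a top-level class body count. `self.x = ...` inside `__init__` is deliberately ignored, as in the PR.

```python
import ast
from typing import Optional


def _target_name(stmt: ast.stmt) -> Optional[str]:
    """The assigned name for `x = ...` or `x: T = ...`; None for any other target shape."""
    if isinstance(stmt, ast.Assign) and len(stmt.targets) == 1:
        target = stmt.targets[0]
    elif isinstance(stmt, ast.AnnAssign):
        target = stmt.target
    else:
        return None
    return target.id if isinstance(target, ast.Name) else None


def _docstring(stmt: ast.stmt) -> Optional[str]:
    if isinstance(stmt, ast.Expr) and isinstance(stmt.value, ast.Constant):
        return stmt.value.value if isinstance(stmt.value.value, str) else None
    return None


def attribute_docstrings(source: str) -> dict:
    module = ast.parse(source)
    scopes = [("", module.body)] + [
        (f"{node.name}.", node.body) for node in module.body if isinstance(node, ast.ClassDef)
    ]
    found = {}
    for prefix, body in scopes:
        for stmt, following in zip(body, body[1:]):
            name, doc = _target_name(stmt), _docstring(following)
            if name is not None and doc is not None:
                found[prefix + name] = doc
    return found
```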

## Test Plan

I used the following source code along with a print statement to verify that the attribute docstring detection is correct.

Refer to the PR description for the code snippet.

I'll add this in the follow-up PR (https://github.com/astral-sh/ruff/pull/11302), which uses this method.

## Summary

Lots of TODOs and things to clean up here, but it demonstrates the working lint rule.

## Test Plan

```
➜ cat main.py
from typing import override
from base import B

class C(B):
    @override
    def method(self): pass

➜ cat base.py
class B: pass

➜ cat typing.py
def override(func):
    return func
```

(We provide our own `typing.py` since we don't have typeshed vendored or type stub support yet.)

```
➜ ./target/debug/red_knot main.py
...
1 0.012086s TRACE red_knot Main Loop: Tick
[crates/red_knot/src/main.rs:157:21] diagnostics = [
    "Method C.method is decorated with `typing.override` but does not override any base class method",
]
```

If we add `def method(self): pass` to class `B` in `base.py` and run red_knot again, there is no lint error.
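The check itself is easy to state: a method marked with `override` must exist on some class later in the MRO. red_knot performs this check statically. The following runtime Python sketch is only an analogy. Its `override` stand-in sets `__override__` the way `typing.override` does on Python 3.12+, and `override_diagnostics` is a hypothetical helper that reuses the diagnostic text shown above.

```python
def override(func):
    # Stand-in for `typing.override` (3.12+), which also sets `__override__ = True`.
    func.__override__ = True
    return func


def override_diagnostics(cls: type) -> list:
    """Flag methods marked with `override` that no base class in the MRO defines."""
    diagnostics = []
    for name, member in vars(cls).items():
        if getattr(member, "__override__", False) and not any(
            name in vars(base) for base in cls.__mro__[1:]
        ):
            diagnostics.append(
                f"Method {cls.__name__}.{name} is decorated with `typing.override` "
                "but does not override any base class method"
            )
    return diagnostics


class B:
    pass


class C(B):
    @override
    def method(self):
        pass


class B2:
    def method(self):
        pass


class C2(B2):
    @override
    def method(self):
        pass
```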

---------

Co-authored-by: Micha Reiser <micha@reiser.io>


## Summary

Resolves #11263

Detect `pathlib.Path.open` calls which do not specify a file encoding.
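Without type inference, the shape of such a check can only be approximated. The following minimal Python sketch uses `ast` and only recognizes `.open(...)` called directly on a `Path(...)` or `pathlib.Path(...)` constructor call. `unencoded_path_opens` is a hypothetical helper, not Ruff's rule. It skips binary modes and calls that pass `encoding` either by keyword or as the third positional argument of `Path.open(mode, buffering, encoding, ...)`.

```python
import ast


def unencoded_path_opens(source: str) -> list:
    """Line numbers of `Path(...).open(...)` calls with neither `encoding` nor a binary mode."""
    lines = []
    for node in ast.walk(ast.parse(source)):
        if not (isinstance(node, ast.Call) and isinstance(node.func, ast.Attribute)):
            continue
        receiver = node.func.value
        if node.func.attr != "open" or not isinstance(receiver, ast.Call):
            continue
        ctor = receiver.func
        is_path = (isinstance(ctor, ast.Name) and ctor.id == "Path") or (
            isinstance(ctor, ast.Attribute) and ctor.attr == "Path"
        )
        if not is_path:
            continue
        # `encoding` passed by keyword, or positionally after `mode` and `buffering`.
        if len(node.args) >= 3 or any(kw.arg == "encoding" for kw in node.keywords):
            continue
        mode = node.args[0] if node.args else next(
            (kw.value for kw in node.keywords if kw.arg == "mode"), None
        )
        if isinstance(mode, ast.Constant) and isinstance(mode.value, str) and "b" in mode.value:
            continue  # binary mode takes no encoding
        lines.append(node.lineno)
    return lines
```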

## Test Plan

Test cases added to fixture.

---------

Co-authored-by: Dhruv Manilawala <dhruvmanila@gmail.com>

This PR vendors typeshed!

- The first commit vendors the stdlib directory from typeshed into a new crates/red_knot/vendored_typeshed directory.

- The second commit adjusts various linting config files to make sure that the vendored code is excluded from typo checks, formatting checks, etc.

- The LICENSE and README.md files are also vendored, but all other directories and files (stubs, scripts, tests, test_cases, etc.) are excluded. We should have no need for them (except possibly stubs/, discussed in more depth below).

- Similar to the way pyright has a commit.txt file in its vendored copy of typeshed, to indicate which typeshed commit the vendored code corresponds to, I've also added a crates/red_knot/vendored_typeshed/source_commit.txt file in the third commit of this PR.

One open question is: should we vendor the stdlib and stubs directories, or just the stdlib directory? The stubs/ directory contains stubs for 162 third-party packages outside the stdlib. Mypy and typeshed_client only vendor the stdlib directory; pyright and pyre vendor both the stdlib and stubs directories; pytype vendors the entire typeshed repo (scripts/, tests/ and all).

In this PR, I've chosen to copy mypy and typeshed_client. Unlike vendoring the stdlib, which is unavoidable if we want to do typechecking of the stdlib, it's not strictly necessary to vendor the stubs directory: each subdirectory in stubs is published to PyPI as a standalone stubs distribution that can be (uv)-pip-installed into a virtual environment. It might be useful for our users if we vendored those stubs anyway, but there are costs as well as benefits to doing so (apart from just the sheer amount of vendored code in the ruff repository), so I'd rather consider it separately.

Resolves https://github.com/astral-sh/ruff/issues/11313

## Summary

PLR0912 (`too-many-branches`) did not count branches inside `with` blocks. With this fix, the branches inside `with` statements are also counted.

## Test Plan

Added a new test case.

## Summary

This PR removes the cyclic dev dependency that some of the crates had on the parser crate.

The cyclic dependencies are:

* `ruff_python_ast` has a **dev dependency** on `ruff_python_parser`, and `ruff_python_parser` directly depends on `ruff_python_ast`
* `ruff_python_trivia` has a **dev dependency** on `ruff_python_parser`, and `ruff_python_parser` has an indirect dependency on `ruff_python_trivia` (`ruff_python_parser` - `ruff_python_ast` - `ruff_python_trivia`)

Specifically, this PR does the following:

* Introduce two new crates:
  * `ruff_python_ast_integration_tests`, and move the tests from the `ruff_python_ast` crate that use the parser into this crate
  * `ruff_python_trivia_integration_tests`, and move the tests from the `ruff_python_trivia` crate that use the parser into this crate
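The effect of the restructuring can be checked with an ordinary depth-first cycle search over the crate graph. Dev-dependency edges are treated like normal edges, which is consistent with the `rust-analyzer` log below. The following is a minimal Python sketch: `find_cycle` is a hypothetical helper, and the two graphs are transcribed from the dependency list above rather than from the actual `Cargo.toml` files.

```python
from typing import Optional


def find_cycle(graph: dict) -> Optional[list]:
    """Return one dependency cycle as a list of crate names, or None (recursive DFS)."""
    visiting, done, path = set(), set(), []

    def visit(node):
        visiting.add(node)
        path.append(node)
        for dep in graph.get(node, []):
            if dep in visiting:
                return path[path.index(dep):] + [dep]  # back edge closes a cycle
            if dep not in done:
                cycle = visit(dep)
                if cycle:
                    return cycle
        visiting.remove(node)
        path.pop()
        done.add(node)
        return None

    for node in graph:
        if node not in done:
            cycle = visit(node)
            if cycle:
                return cycle
    return None


BEFORE = {
    "ruff_python_ast": ["ruff_python_trivia", "ruff_python_parser"],  # second edge is a dev dependency
    "ruff_python_parser": ["ruff_python_ast"],
    "ruff_python_trivia": ["ruff_python_parser"],  # dev dependency
}
AFTER = {
    "ruff_python_ast": ["ruff_python_trivia"],
    "ruff_python_parser": ["ruff_python_ast"],
    "ruff_python_trivia": [],
    "ruff_python_ast_integration_tests": ["ruff_python_ast", "ruff_python_parser"],
    "ruff_python_trivia_integration_tests": ["ruff_python_trivia", "ruff_python_parser"],
}
```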

### Motivation

The main motivation for this PR is to help development. Before this PR, `rust-analyzer` wouldn't provide any IntelliSense in the `ruff_python_parser` crate for symbols from the `ruff_python_ast` crate.

```
[ERROR][2024-05-03 13:47:06] .../vim/lsp/rpc.lua:770 "rpc" "/Users/dhruv/.cargo/bin/rust-analyzer" "stderr" "[ERROR project_model::workspace] cyclic deps: ruff_python_parser(Idx::<CrateData>(50)) -> ruff_python_ast(Idx::<CrateData>(37)), alternative path: ruff_python_ast(Idx::<CrateData>(37)) -> ruff_python_parser(Idx::<CrateData>(50))\n"
```

## Test Plan

Check the `rust-analyzer` logs to confirm there are no signs of a cyclic dependency.

## Summary

While I was here, I also updated the rule to use `function_type::classify` rather than hard-coding `staticmethod` and friends.

Per Carl:

> Enum instances are already referred to by the class, forming a cycle that won't get collected until the class itself does. At which point the `lru_cache` itself would be collected, too.

Closes https://github.com/astral-sh/ruff/issues/9912.

## Summary

Historically, we only ignored `flake8-blind-except` if you re-raised or logged the exception as a _direct_ child statement, but it could be nested somewhere. This was just a known limitation at the time of adding the previous logic.
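The difference between "direct child" and "nested somewhere" corresponds to scanning `handler.body` versus walking its whole subtree. The following minimal Python sketch shows the nested variant for the re-raise case only (logging is omitted). `handler_reraises` is a hypothetical helper. Note that `ast.walk` also descends into nested function definitions, which a real implementation would likely want to exclude.

```python
import ast


def handler_reraises(handler: ast.ExceptHandler) -> bool:
    """True if a bare `raise` (or `raise <bound name>`) appears anywhere in the handler,
    including inside nested statements such as `if` blocks."""
    for stmt in handler.body:
        for node in ast.walk(stmt):
            if isinstance(node, ast.Raise):
                if node.exc is None:
                    return True
                if isinstance(node.exc, ast.Name) and node.exc.id == handler.name:
                    return True
    return False
```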

Closes https://github.com/astral-sh/ruff/issues/11289.

## Summary

A follow-up to https://github.com/astral-sh/ruff/pull/11222. `ruff server` stalls during shutdown with Neovim because, after it receives an exit notification and closes the I/O thread, it attempts to log a success message to `stderr`. Removing this log statement fixes this issue.

## Test Plan

Track the instances of `ruff` in the OS task manager as you open and close Neovim. A new instance should appear when Neovim starts, and it should disappear once Neovim is closed.

## Summary

Fixes https://github.com/astral-sh/ruff/issues/11258.

This PR fixes the settings resolver to match the expected behavior when file-based configuration is not available.

## Test Plan

In a workspace with no file-based configuration, set a setting in your editor and confirm that this setting is used instead of the default.

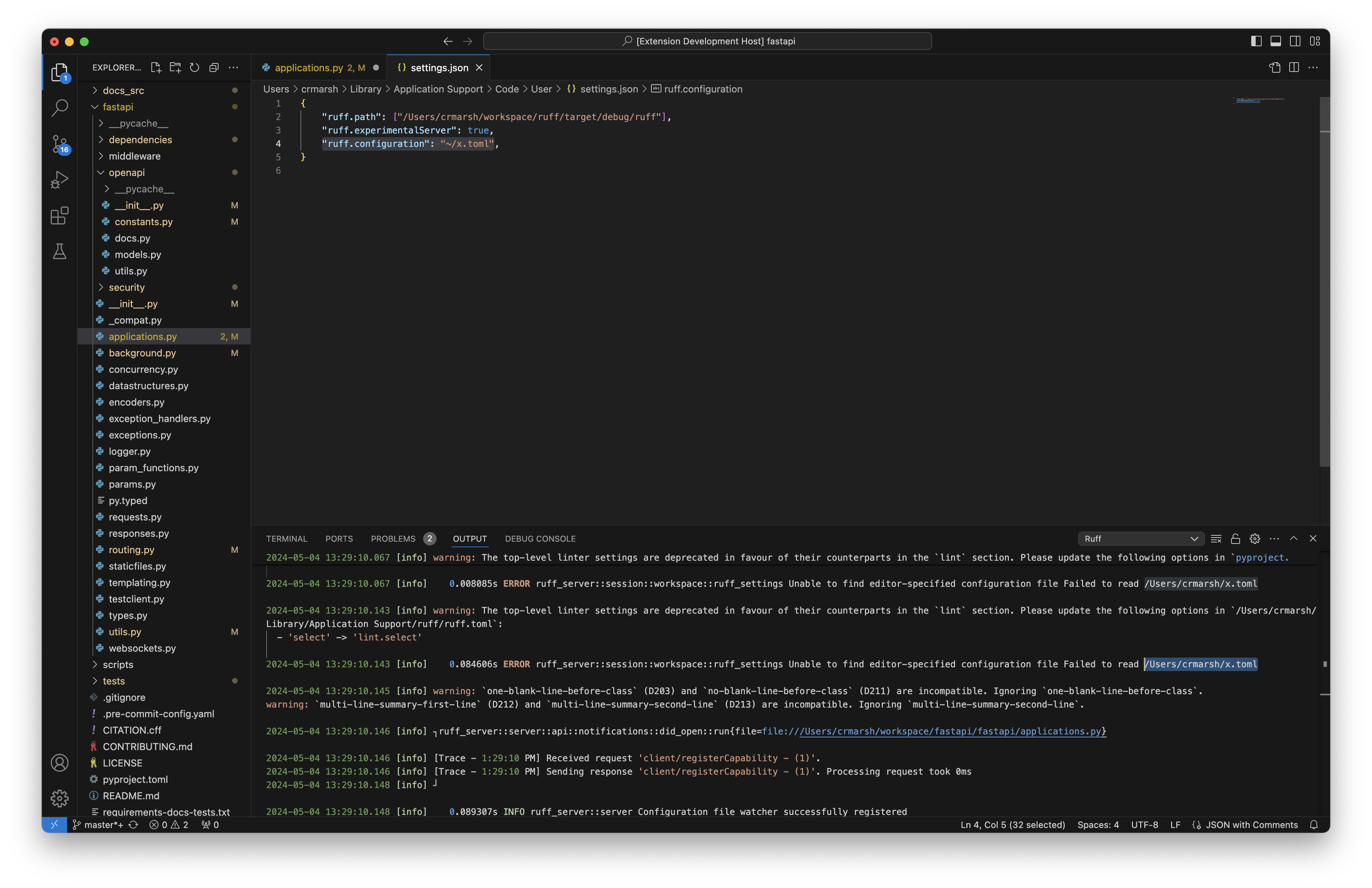

## Summary

Users can now include tildes and environment variables in the provided path, just like with `--config`.
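In Python terms, this kind of expansion corresponds to `os.path.expandvars` followed by `os.path.expanduser`. The following is a minimal sketch for illustration only. `expand_config_path` is a hypothetical helper, and the server's Rust implementation may differ in edge cases such as unset variables or Windows home directories.

```python
import os


def expand_config_path(raw: str) -> str:
    """Expand environment variables, then a leading `~`, in a configuration path."""
    return os.path.expanduser(os.path.expandvars(raw))
```

Usage: with `HOME=/Users/crmarsh`, `expand_config_path("~/x.toml")` yields `/Users/crmarsh/x.toml`, matching the test plan below.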

Closes #11277.

## Test Plan

I set the configuration path with `"ruff.configuration": "~/x.toml"` and verified that the server attempted to read from `/Users/crmarsh/x.toml`.

## Summary

Change `hardcoded-tmp-directory-extend` example to follow the schema:

1e91a09918/ruff.schema.json (L896-L901)


## Summary

In #9218, `Rule::NeverUnion` was partially removed from a `checker.any_enabled` call. This makes the change consistent.

## Test Plan

`cargo test`

## Summary

Fixes https://github.com/astral-sh/ruff/issues/11207.

The server would hang after handling a shutdown request on `IoThreads::join()` because a global sender (`MESSENGER`, used to send `window/showMessage` notifications) would remain allocated even after the event loop finished, which kept the writer I/O thread channel open.

To fix this, I've made a few structural changes to `ruff server`. I've wrapped the send/receive channels and thread join handle behind a new struct, `Connection`, which facilitates message sending and receiving, and also runs `IoThreads::join()` after the event loop finishes. To control the number of sender channels, the `Connection` wraps the sender channel in an `Arc` and only allows the creation of a wrapper type, `ClientSender`, which holds a weak reference to this `Arc` instead of direct channel access. The wrapper type implements the channel methods directly to prevent access to the inner channel (which would allow the channel to be cloned). `ClientSender`'s role is analogous to [`WeakSender` in `tokio`](https://docs.rs/tokio/latest/tokio/sync/mpsc/struct.WeakSender.html).

Additionally, the receiver channel cannot be accessed directly: the `Connection` only exposes an iterator over it.

These changes will guarantee that all channels are closed before the I/O threads are joined.
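The ownership pattern, with one strong owner and many weak handles, can be mimicked in Python with `weakref`. This is an analogy only: the real code uses Rust's `Arc` and weak references. `_Sender`, `client_sender`, and `close` are hypothetical names, and the immediate cleanup on `close` relies on CPython's reference counting.

```python
import queue
import weakref
from typing import Optional


class _Sender:
    """Owns the outgoing queue; the channel counts as open only while this object is alive."""

    def __init__(self, channel: queue.Queue) -> None:
        self.channel = channel

    def send(self, message: str) -> None:
        self.channel.put(message)


class ClientSender:
    """Weak handle: usable while the connection is alive, but never keeps the sender alive."""

    def __init__(self, sender: _Sender) -> None:
        self._sender = weakref.ref(sender)

    def send(self, message: str) -> bool:
        sender = self._sender()  # upgrade the weak reference for this call only
        if sender is None:
            return False  # the connection already dropped its sender
        sender.send(message)
        return True


class Connection:
    """Holds the only strong reference to the sender."""

    def __init__(self) -> None:
        self.outgoing: queue.Queue = queue.Queue()
        self._sender: Optional[_Sender] = _Sender(self.outgoing)

    def client_sender(self) -> ClientSender:
        if self._sender is None:
            raise RuntimeError("connection is closed")
        return ClientSender(self._sender)

    def close(self) -> None:
        # Dropping the only strong reference closes the channel even if
        # ClientSender handles still exist.
        self._sender = None
```

Because no `ClientSender` can extend the sender's lifetime, closing the `Connection` is enough to close the channel. This mirrors why the I/O threads can then be joined without hanging.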

## Test Plan

Repeatedly open and close an editor utilizing `ruff server` while observing the task monitor. The net total number of open `ruff` instances should be zero once all editor windows have closed.

The following logs should also appear after the server is shut down:

<img width="835" alt="Screenshot 2024-04-30 at 3 56 22 PM" src="https://github.com/astral-sh/ruff/assets/19577865/404b74f5-ef08-4bb4-9fa2-72e72b946695">

This can be tested in VS Code by changing the settings and then checking `Output`.

* Add `decorators: Vec<Type>` to `FunctionType` struct

* Thread decorators through two `add_function` definitions

* Populate decorators at the callsite in `infer_symbol_type`

* Small test

Resolves #10390 and starts to address #10391

# Changes to behavior

* In `__init__.py` we now offer some fixes for unused imports.
  * If the import binding is first-party, this PR suggests a fix to turn it into a redundant alias.
  * If the import binding is not first-party, this PR suggests a fix to remove it from the `__init__.py`.
  * The fix titles are specific to these new suggested fixes.
* The `checker.settings.ignore_init_module_imports` setting is deprecated/ignored. A documentation change is probably needed to make that complete, which I haven't done.

---

<details><summary>Old description of implementation changes</summary>

# Changes to the implementation

* In the body of the loop over import statements that contain unused bindings, the bindings are partitioned into `to_reexport` and `to_remove` (according to how we want to resolve the fact they're unused) with the following predicate:

  ```rust
  in_init && is_first_party(checker, &import.qualified_name().to_string())
  // true means make it a reexport
  ```

* Instead of generating a single fix per import statement, we now generate up to two fixes per import statement:

  ```rust
  (fix_by_removing_imports(checker, node_id, &to_remove, in_init).ok(),
   fix_by_reexporting(checker, node_id, &to_reexport, dunder_all).ok())
  ```

  * The `to_remove` fixes are unsafe when `in_init`.
  * The `to_explicit` fixes are safe. Currently, until a future PR, we make them redundant aliases (e.g. `import a` would become `import a as a`).

## Other changes

* `checker.settings.ignore_init_module_imports` is deprecated/ignored. Instead, all fixes are gated on `checker.settings.preview.is_enabled()`.
* Got rid of the pattern match on the import binding bound by the inner loop because it seemed less readable than referencing fields on the binding.
* [x] `// FIXME: rename "imports" to "bindings"` if reviewer agrees (see code)
* [x] `// FIXME: rename "node_id" to "import_statement"` if reviewer agrees (see code)

<details>

<summary><h2>Scope cut until a future PR</h2></summary>

* (Not implemented) The `to_explicit` fixes will be added to `__all__` unless it doesn't exist. When `__all__` doesn't exist, they're resolved by converting to redundant aliases (e.g. `import a` would become `import a as a`).

---

</details>

# Test plan

* [x] `crates/ruff_linter/resources/test/fixtures/pyflakes/F401_24` contains an `__init__.py` with*out* `__all__` that exercises the features in this PR, but it doesn't pass.
* [x] `crates/ruff_linter/resources/test/fixtures/pyflakes/F401_25_dunder_all` contains an `__init__.py` *with* `__all__` that exercises the features in this PR, but it doesn't pass.
* [x] Write unit tests for the new edit functions in `fix::edits::make_redundant_alias`.

</details>

---------

Co-authored-by: Micha Reiser <micha@reiser.io>