## Summary

Largely based on rustup's implementation (linked in the source).

Closes #5027.

## Test Plan

- Changed the executable directory to `uv/foo`.

- Ran script; verified that I could access executables in `foo`.

## Summary

I'll open follow-up tickets for Windows support.

Closes https://github.com/astral-sh/uv/issues/4953.

## Test Plan

```

❯ cargo run tool install flask

Resolved 7 packages in 353ms

Prepared 7 packages in 392ms

Installed 7 packages in 17ms

+ blinker==1.8.2

+ click==8.1.7

+ flask==3.0.3

+ itsdangerous==2.2.0

+ jinja2==3.1.4

+ markupsafe==2.1.5

+ werkzeug==3.0.3

Installed 1 executable: flask

warning: /Users/crmarsh/.local/bin is not on your PATH. To use installed tools, run:

export PATH="/Users/crmarsh/.local/bin:$PATH"

```

Then:

```

❯ which flask

flask not found

```

Then:

```

❯ cargo run tool ensurepath

warning: `uv tool ensurepath` is experimental and may change without warning.

Updated configuration file: /Users/crmarsh/workspace/puffin/bar

Restart your shell for the changes to take effect.

```

Then:

```

❯ which flask

/Users/crmarsh/.local/bin/flask

```
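The check behind the `PATH` warning in the transcript above can be sketched roughly as follows. This is a simplified illustration rather than the code in this PR: `is_on_path` and `export_line` are hypothetical helper names, and the real implementation (modelled on rustup) also has to edit shell configuration files, which is not shown.

```rust
use std::env;
use std::ffi::OsStr;
use std::path::Path;

/// Hypothetical helper: returns `true` if `dir` is an entry of a `PATH`-style value.
fn is_on_path(dir: &Path, path_var: &OsStr) -> bool {
    // `split_paths` uses the platform separator (`:` on Unix, `;` on Windows).
    env::split_paths(path_var).any(|entry| entry.as_path() == dir)
}

/// Hypothetical helper: the shell line to suggest when `dir` is missing from `PATH`.
fn export_line(dir: &Path) -> String {
    format!("export PATH=\"{}:$PATH\"", dir.display())
}
```

A caller would typically pass `std::env::var_os("PATH")` as `path_var` and only print the suggestion when `is_on_path` returns `false`.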

## Summary

Move completely off tokio's multi-threaded runtime. We've slowly been

making changes to be smarter about scheduling in various places instead

of depending on tokio's general purpose work-stealing, notably

https://github.com/astral-sh/uv/pull/3627 and

https://github.com/astral-sh/uv/pull/4004. We now no longer benefit from

the multi-threaded runtime, as we run all I/O on the main thread.

There's one remaining instance of `block_in_place` that can be swapped

for `rayon::spawn`.

This change is a small performance improvement due to removing some

unnecessary overhead of the multi-threaded runtime (e.g. spawning

threads), but nothing major. It also removes some noise from profiles.
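As a rough illustration of the pattern (not the code in this PR), CPU-bound work can be handed to another thread and its result received over a channel, instead of blocking the runtime in place. The sketch below uses only `std::thread` and `std::sync::mpsc` to stay dependency-free; with `rayon::spawn` the closure would run on rayon's pool instead, and in async code the result would usually travel over an async oneshot channel so the main thread keeps driving I/O. `offload` is a hypothetical name.

```rust
use std::sync::mpsc;
use std::thread;

/// Hypothetical helper: runs a CPU-bound closure on a separate thread and
/// returns a receiver that yields its result.
fn offload<T, F>(work: F) -> mpsc::Receiver<T>
where
    T: Send + 'static,
    F: FnOnce() -> T + Send + 'static,
{
    let (tx, rx) = mpsc::channel();
    thread::spawn(move || {
        // A send error only means the caller dropped the receiver, so ignore it.
        let _ = tx.send(work());
    });
    rx
}
```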

## Test Plan

```

Benchmark 1: ./target/profiling/uv (resolve-warm)

Time (mean ± σ): 14.9 ms ± 0.3 ms [User: 3.0 ms, System: 17.3 ms]

Range (min … max): 14.1 ms … 15.8 ms 169 runs

Benchmark 2: ./target/profiling/baseline (resolve-warm)

Time (mean ± σ): 16.1 ms ± 0.3 ms [User: 3.9 ms, System: 18.7 ms]

Range (min … max): 15.1 ms … 17.3 ms 162 runs

Summary

./target/profiling/uv (resolve-warm) ran

1.08 ± 0.03 times faster than ./target/profiling/baseline (resolve-warm)

```

Whew this is a lot.

The user-facing changes are:

- `uv toolchain` to `uv python` e.g. `uv python find`, `uv python

install`, ...

- `UV_TOOLCHAIN_DIR` to `UV_PYTHON_INSTALL_DIR`

- `<UV_STATE_DIR>/toolchains` to `<UV_STATE_DIR>/python` (with

[automatic

migration](https://github.com/astral-sh/uv/pull/4735/files#r1663029330))

- User-facing messages no longer refer to toolchains, instead using

"Python", "Python versions" or "Python installations"

The internal changes are:

- `uv-toolchain` crate to `uv-python`

- `Toolchain` no longer referenced in type names

- Dropped unused `SystemPython` type (previously replaced)

- Clarified the type names for "managed Python installations"

- (more little things)

In #3514 and #2755, users had intermittent network errors, but it was

not always clear whether we had already retried these requests or not.

Building upon https://github.com/TrueLayer/reqwest-middleware/pull/159,

this PR adds the number of retries to the error message, so we can see

at first glance where we're missing retries and where we might need to

change retry settings.

Example error trace:

```

Could not connect, are you offline?

Caused by: Request failed after 3 retries

Caused by: error sending request for url (https://pypi.org/simple/uv/)

Caused by: client error (Connect)

Caused by: dns error: failed to lookup address information: Name or service not known

Caused by: failed to lookup address information: Name or service not known

```

This code is ugly since I'm missing a better pattern for attaching

context to reqwest middleware errors in

https://github.com/TrueLayer/reqwest-middleware/pull/159.
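One way to picture the idea of attaching a retry count to an error chain, using only the standard library: a wrapper error that reports the count in its `Display` output and exposes the underlying failure through `source()`. This is a minimal sketch under assumptions; `RetryError` and `render_chain` are hypothetical names and are not the types used by uv or reqwest-middleware.

```rust
use std::error::Error;
use std::fmt;

/// Hypothetical wrapper that records how many retries were attempted.
#[derive(Debug)]
struct RetryError {
    retries: u32,
    source: Box<dyn Error>,
}

impl fmt::Display for RetryError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        write!(f, "Request failed after {} retries", self.retries)
    }
}

impl Error for RetryError {
    fn source(&self) -> Option<&(dyn Error + 'static)> {
        // Expose the wrapped error so callers can walk the chain.
        Some(&*self.source)
    }
}

/// Renders an error followed by each of its sources on a "Caused by" line.
fn render_chain(err: &dyn Error) -> String {
    let mut out = err.to_string();
    let mut current = err.source();
    while let Some(cause) = current {
        out.push_str("\n  Caused by: ");
        out.push_str(&cause.to_string());
        current = cause.source();
    }
    out
}
```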

## Summary

See: https://github.com/axodotdev/cargo-dist/releases/tag/v0.17.0.

Relevant:

> The only reason you might want to override this setting is if you're

using [dispatch-releases =

true](https://opensource.axo.dev/cargo-dist/book/reference/config.html#dispatch-releases)

and you really want your git tag to be the last operation in your

release process (because creating a GitHub Release necessarily creates

the git tag if it doesn't yet exist, and many organizations really don't

like when you delete/change git tags). In this case setting

github-release = "announce" will accomplish that, but the above race

conditions would then apply.

We _do_ use `dispatch-releases = true`, and we _do_ want the git tag to

be the last operation, so we need to set `github-release = "announce"`

to preserve our current behavior.

This is the minimal "working" implementation. In summary, we:

- Resolve the requested requirements

- Create an environment at `$UV_STATE_DIR/tools/$name`

- Inspect the `dist-info` for the main requirement to determine its

entry points scripts

- Link the entry points from a user-executable directory

(`$XDG_BIN_HOME`) to the environment bin

- Create an entry at `$UV_STATE_DIR/tools/tools.toml` tracking the

user's request

The idea with `tools.toml` is that it allows us to perform upgrades and

syncs, retaining the original user request (similar to declarations in a

`pyproject.toml`). I imagine using a similar schema in the

`pyproject.toml` in the future if/when we add project-level tools. I'm

also considering exposing `tools.toml` in the standard uv configuration

directory instead of the state directory, but it seems nice to tuck it

away for now while we iterate on it. Installing a tool won't perform a

sync of other tool environments, we'll probably have an explicit `uv

tool sync` command for that?

I've split out todos into follow-up pull requests:

- #4509 (failing on Windows)

- #4501

- #4504

Closes #4485

## Summary

This PR moves all the CLI code into its own crate, separate from the

`uv` crate. The `uv` crate is iterated on frequently, and the CLI code

comprises a significant portion of it but rarely changes. Removing the

CLI code reduces the `uv` crate size from 1.4MiB to 1.0MiB.

To support diverging urls, we have to check urls when adding

dependencies (after forking). To prepare for this, I've moved adding

dependencies for the current version to

`SolveState::add_package_version_dependencies` and removed the

duplication when checking for self-dependencies.

This change is joined with a change in pubgrub

(https://github.com/astral-sh/pubgrub/pull/27) that simplifies the same

code path.

## Summary

In a workspace, we now read configuration from the workspace root.

Previously, we read configuration from the first `pyproject.toml` or

`uv.toml` file in path -- but in a workspace, that would often be the

_project_ rather than the workspace configuration.

We need to read configuration from the workspace root, rather than its

members, because we lock the workspace globally, so all configuration

applies to the workspace globally.

As part of this change, the `uv-workspace` crate has been renamed to

`uv-settings` and its purpose has been narrowed significantly (it no

longer discovers a workspace; instead, it just reads the settings from a

directory).

If a user has a `uv.toml` in their directory or in a parent directory

but is _not_ in a workspace, we will still respect that use-case as

before.

Closes #4249.

Closes https://github.com/astral-sh/uv/issues/3857

Instead of using custom `Arch`, `Os`, and `Libc` types I just use

`target-lexicon`'s which enumerate way more variants and implement

display and parsing. We use a wrapper type to represent a couple special

cases to support the "x86" alias for "i686" and "macos" for "darwin".

Alternatively we could try to use our `platform-tags` types but those

capture more information (like operating system versions) that we don't

have for downloads.
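The alias handling described above might look roughly like the following. This is a std-only sketch: the real change wraps `target-lexicon`'s types, whereas `Arch` and `Os` here are small hypothetical enums, and the set of variants is an assumption chosen for illustration.

```rust
use std::str::FromStr;

/// Hypothetical stand-in for the wrapped architecture type.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum Arch {
    X86_64,
    I686,
    Aarch64,
}

/// Hypothetical stand-in for the wrapped operating system type.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum Os {
    Linux,
    Darwin,
    Windows,
}

impl FromStr for Arch {
    type Err = String;

    fn from_str(s: &str) -> Result<Self, Self::Err> {
        match s {
            "x86_64" => Ok(Arch::X86_64),
            // "x86" is accepted as an alias for "i686".
            "i686" | "x86" => Ok(Arch::I686),
            "aarch64" => Ok(Arch::Aarch64),
            other => Err(format!("unknown architecture: {other}")),
        }
    }
}

impl FromStr for Os {
    type Err = String;

    fn from_str(s: &str) -> Result<Self, Self::Err> {
        match s {
            "linux" => Ok(Os::Linux),
            // "macos" is accepted as an alias for "darwin".
            "darwin" | "macos" => Ok(Os::Darwin),
            "windows" => Ok(Os::Windows),
            other => Err(format!("unknown operating system: {other}")),
        }
    }
}
```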

As discussed in https://github.com/astral-sh/uv/pull/4160, this is not

sufficient for proper libc detection but that work is larger and will be

handled separately.

This PR re-adds the `aarch64-unknown-linux-gnu` binary, which will also

add the `manylinux_2_28` wheel for `aarch64` (in addition to the now

dual-tagged `musllinux_1_1` and `manylinux_2_17` wheel for `aarch64`).

We can consider dropping that _wheel_, but in my assessment removing a

release asset should now be treated as a breaking change -- so removing

it in a patch release was incorrect.

Closes https://github.com/astral-sh/uv/issues/4122.

We had previously changed the signature of

`DependencyProvider::get_dependencies` to return an iterator instead of

a hashmap to avoid the conversion cost from our dependencies `Vec` to

the pubgrub's hashmap. These changes are difficult to make in pubgrub

since they complicate the public api. But we don't actually use

`DependencyProvider::get_dependencies`, so we rolled those

customizations back in https://github.com/pubgrub-rs/pubgrub/pull/226

and instead opted to change only the internal

`add_incompatibility_from_dependencies` method that we exposed in our

fork. This aligns us closer with upstream, removes the design questions

about `DependencyProvider` from our concerns and reduces our diff (not

counting the github action) to +36 -12.

Fixes these two warnings on nightly:

```

warning: unexpected `cfg` condition name: `codspeed`

--> crates/bench/src/lib.rs:5:15

|

5 | #[cfg(not(codspeed))]

| ^^^^^^^^ help: found config with similar value: `feature = "codspeed"`

|

= help: expected names are: `clippy`, `debug_assertions`, `doc`, `docsrs`, `doctest`, `feature`, `miri`, `overflow_checks`, `panic`, `proc_macro`, `relocation_model`, `rustfmt`, `sanitize`, `sanitizer_cfi_generalize_pointers`, `sanitizer_cfi_normalize_integers`, `target_abi`, `target_arch`, `target_endian`, `target_env`, `target_family`, `target_feature`, `target_has_atomic`, `target_has_atomic_equal_alignment`, `target_has_atomic_load_store`, `target_os`, `target_pointer_width`, `target_thread_local`, `target_vendor`, `test`, `ub_checks`, `unix`, and `windows`

= help: consider using a Cargo feature instead

= help: or consider adding in `Cargo.toml` the `check-cfg` lint config for the lint:

[lints.rust]

unexpected_cfgs = { level = "warn", check-cfg = ['cfg(codspeed)'] }

= help: or consider adding `println!("cargo::rustc-check-cfg=cfg(codspeed)");` to the top of the `build.rs`

= note: see <https://doc.rust-lang.org/nightly/rustc/check-cfg/cargo-specifics.html> for more information about checking conditional configuration

= note: `#[warn(unexpected_cfgs)]` on by default

warning: unexpected `cfg` condition name: `codspeed`

--> crates/bench/src/lib.rs:8:11

|

8 | #[cfg(codspeed)]

| ^^^^^^^^ help: found config with similar value: `feature = "codspeed"`

|

= help: consider using a Cargo feature instead

= help: or consider adding in `Cargo.toml` the `check-cfg` lint config for the lint:

[lints.rust]

unexpected_cfgs = { level = "warn", check-cfg = ['cfg(codspeed)'] }

= help: or consider adding `println!("cargo::rustc-check-cfg=cfg(codspeed)");` to the top of the `build.rs`

= note: see <https://doc.rust-lang.org/nightly/rustc/check-cfg/cargo-specifics.html> for more information about checking conditional configuration

```

```

warning: unexpected `cfg` condition value: `unix`

--> crates/uv-extract/src/tar.rs:6:16

|

6 | #[cfg_attr(not(target_os = "unix"), allow(dead_code))]

| ^^^^^^^^^^^^^^^^^^

|

= note: expected values for `target_os` are: `aix`, `android`, `cuda`, `dragonfly`, `emscripten`, `espidf`, `freebsd`, `fuchsia`, `haiku`, `hermit`, `horizon`, `hurd`, `illumos`, `ios`, `l4re`, `linux`, `macos`, `netbsd`, `none`, `nto`, `openbsd`, `psp`, `redox`, `solaris`, `solid_asp3`, `teeos`, `tvos`, `uefi`, `unknown`, `visionos`, `vita`, `vxworks`, `wasi`, `watchos`, and `windows` and 2 more

= note: see <https://doc.rust-lang.org/nightly/rustc/check-cfg/cargo-specifics.html> for more information about checking conditional configuration

= note: requested on the command line with `-W unexpected-cfgs`

```
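For the second warning, the underlying issue is that `unix` is a target family rather than a `target_os` value, so the condition can never match. A sketch of a spelling the check accepts is shown below; whether the PR uses `cfg(unix)` or `target_family = "unix"` is not stated here, and `is_executable_mode` is a hypothetical function used only to have something to annotate.

```rust
/// Hypothetical helper that is only called on Unix-like targets; on other
/// targets the attribute silences the dead-code lint.
#[cfg_attr(not(unix), allow(dead_code))]
fn is_executable_mode(mode: u32) -> bool {
    // True if any of the owner/group/other execute bits is set.
    mode & 0o111 != 0
}

#[cfg(unix)]
fn describe(mode: u32) -> &'static str {
    if is_executable_mode(mode) {
        "executable"
    } else {
        "regular"
    }
}

#[cfg(not(unix))]
fn describe(_mode: u32) -> &'static str {
    "regular"
}
```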


## Summary

We currently rely on libgit2 for most git-related functionality.

However, libgit2 has long-standing performance issues, as well as lags

significantly behind git in terms of new features. For these reasons we

now use the git CLI by default for fetching repositories

(https://github.com/astral-sh/uv/pull/1781). This PR completely drops

libgit2 in favor of the git CLI for all git-related functionality, which

should allow us to use features such as partial clones and sparse

checkouts in the future for performance.

There is also a lot of technical debt in the current git code as it's

mostly taken from Cargo. Switching to the git CLI *vastly* simplifies

the `uv-git` codebase.
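To give a sense of why shelling out is simpler, a fetch via the git CLI reduces to building a `std::process::Command`. The sketch below is an assumption-laden illustration and not the `uv-git` code: `git_fetch_command` is a hypothetical helper, and the exact flags uv passes are not shown in this description.

```rust
use std::path::Path;
use std::process::Command;

/// Hypothetical helper: builds (but does not run) a `git fetch` invocation
/// for `refspec` from `url`, executed inside the repository at `repo`.
fn git_fetch_command(repo: &Path, url: &str, refspec: &str) -> Command {
    let mut cmd = Command::new("git");
    cmd.arg("fetch")
        .arg("--force")
        .arg(url)
        .arg(refspec)
        .current_dir(repo);
    cmd
}
```

Calling `.status()` or `.output()` on the returned command would run it; errors then surface as a non-zero exit status plus whatever git wrote to stderr.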

Eventually we might want to look into switching to

[`gitoxide`](https://github.com/Byron/gitoxide), but it's currently too

immature for our use case.

## Summary

This PR changes the lock-file format to use inline tables for wheels and

source distributions, which currently use separate tables that make the

file harder to follow.

```diff

[[distribution]]

name = "typing-extensions"

version = "4.10.0"

source = "registry+https://pypi.org/simple"

- [distribution.sdist]

- url = "0d26ce356c7c323176620b7b483e44/typing_extensions-4.10.0.tar.gz"

- hash = "sha256:b0abd7c89e8fb96f98db18d86106ff1d90ab692004eb746cf6eda2682f91b3cb"

- size = 77558

-

- [[distribution.wheel]]

- url = "dc04a3ea60b9bc8074b5d6b7ab90bb/typing_extensions-4.10.0-py3-none-any.whl"

- hash = "sha256:69b1a937c3a517342112fb4c6df7e72fc39a38e7891a5730ed4985b5214b5475"

- size = 33926

+ sdist = { url = "0d26ce356c7c323176620b7b483e44/typing_extensions-4.10.0.tar.gz", hash = "sha256:b0abd7c89e8fb96f98db18d86106ff1d90ab692004eb746cf6eda2682f91b3cb", size = 77558 }

+ wheel = [{ url = "dc04a3ea60b9bc8074b5d6b7ab90bb/typing_extensions-4.10.0-py3-none-any.whl", hash = "sha256:69b1a937c3a517342112fb4c6df7e72fc39a38e7891a5730ed4985b5214b5475", size = 33926 }]

```

The downside is that the inline-tables end up quite long and TOML

doesn't support line breaks in inline tables, yet.

Part of https://github.com/astral-sh/uv/issues/3611.

Extends #3726

Moves toolchain storage out of `UV_BOOTSTRAP_DIR` (`./bin`) into the

proper user data directory as defined by #3726.

Replaces `UV_BOOTSTRAP_DIR` with `UV_TOOLCHAIN_DIR` for customization.

Installed toolchains will be discovered without opt-in, but the idea is

still that these are not yet user-facing.

## Summary

I haven't tested on Windows yet, but the idea here is that we should use

a portable representation when printing paths.

I decided to limit the scope here to paths that we write to output

files.
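A portable representation here essentially means always joining path components with `/`, regardless of platform. The following is a minimal sketch of that idea, assuming a hypothetical `portable_display` helper; the actual PR may rely on a crate or handle more edge cases (for example, Windows verbatim prefixes).

```rust
use std::path::{Component, Path};

/// Hypothetical helper: renders `path` with `/` as the separator on every platform.
fn portable_display(path: &Path) -> String {
    let mut out = String::new();
    for component in path.components() {
        match component {
            // The root is always written as a forward slash.
            Component::RootDir => out.push('/'),
            other => {
                // Add a separator unless we are at the start or just wrote one.
                if !out.is_empty() && !out.ends_with('/') {
                    out.push('/');
                }
                out.push_str(&other.as_os_str().to_string_lossy());
            }
        }
    }
    out
}
```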

Closes https://github.com/astral-sh/uv/issues/3800.

## Summary

Restore API-compatibility with pre-1.1.0 versions of the `zip` crate,

and pin the dependency to the 0.6 series, due to concerns discussed in

https://github.com/astral-sh/uv/issues/3642.

## Test Plan

```

cargo run -p uv-dev -- fetch-python

cargo test

```

Pubgrub got a new feature where all unavailability reasons use a custom type, instead

of the reasonless `UnavailableDependencies` and our custom `String` type

previously (https://github.com/pubgrub-rs/pubgrub/pull/208). This PR

introduces an `UnavailableReason` that tracks either an entire package

being unusable, or a specific version. The error messages now also track

this difference properly.
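The shape of such a reason type could be sketched as below. Only the name `UnavailableReason` comes from this description; the variants, their payloads, and the message wording are assumptions made for illustration.

```rust
use std::fmt;

/// Sketch of a reason type; the variants and payloads are hypothetical.
#[derive(Debug, Clone, PartialEq, Eq)]
enum UnavailableReason {
    /// Nothing published under this name can be used.
    Package(String),
    /// Only the single version under consideration cannot be used.
    Version(String),
}

impl fmt::Display for UnavailableReason {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            UnavailableReason::Package(why) => write!(f, "the package is unusable: {why}"),
            UnavailableReason::Version(why) => write!(f, "this version is unusable: {why}"),
        }
    }
}
```

Keeping the two cases as separate variants is what lets error reporting phrase them differently instead of formatting one opaque string.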

The pubgrub commit is our main rebased onto the merged

https://github.com/pubgrub-rs/pubgrub/pull/208, I'll push

`konsti/main-rebase-generic-reason` to `main` after checking for rebase

problems.

## Introduction

PEP 621 is limited. Specifically, it lacks

* Relative path support

* Editable support

* Workspace support

* Index pinning or any sort of index specification

The semantics of urls are a custom extension, PEP 440 does not specify

how to use git references or subdirectories, instead pip has a custom

stringly format. We need to somehow support these while still staying

compatible with PEP 621.

## `tool.uv.sources`

Drawing inspiration from cargo, poetry and rye, we add `tool.uv.sources`

or (for now stub only) `tool.uv.workspace`:

```toml
[project]
name = "albatross"
version = "0.1.0"
dependencies = [
  "tqdm >=4.66.2,<5",
  "torch ==2.2.2",
  "transformers[torch] >=4.39.3,<5",
  "importlib_metadata >=7.1.0,<8; python_version < '3.10'",
  "mollymawk ==0.1.0"
]

[tool.uv.sources]
tqdm = { git = "https://github.com/tqdm/tqdm", rev = "cc372d09dcd5a5eabdc6ed4cf365bdb0be004d44" }
importlib_metadata = { url = "https://github.com/python/importlib_metadata/archive/refs/tags/v7.1.0.zip" }
torch = { index = "torch-cu118" }
mollymawk = { workspace = true }

[tool.uv.workspace]
include = [
  "packages/mollymawk"
]

[tool.uv.indexes]
torch-cu118 = "https://download.pytorch.org/whl/cu118"
```

See `docs/specifying_dependencies.md` for a detailed explanation of the

format. The basic gist is that `project.dependencies` is what ends up on

pypi, while `tool.uv.sources` are your non-published additions. We do

support the full range of PEP 508, we just hide it in the docs and

prefer the exploded table for easier readability and less confusion with

actual url parts.

This format should eventually be able to subsume requirements.txt's

current use cases. While we will continue to support the legacy `uv pip`

interface, this is a piece of uv's own top-level interface. Together

with `uv run` and a lockfile format, you should only need to write

`pyproject.toml` and do `uv run`, which generates/uses/updates your

lockfile behind the scenes, no more pip-style requirements involved. It

also lays the groundwork for implementing index pinning.

## Changes

This PR implements:

* Reading and lowering `project.dependencies`,

`project.optional-dependencies` and `tool.uv.sources` into a new

requirements format, including:

* Git dependencies

* Url dependencies

* Path dependencies, including relative and editable

* `pip install` integration

* Error reporting for invalid `tool.uv.sources`

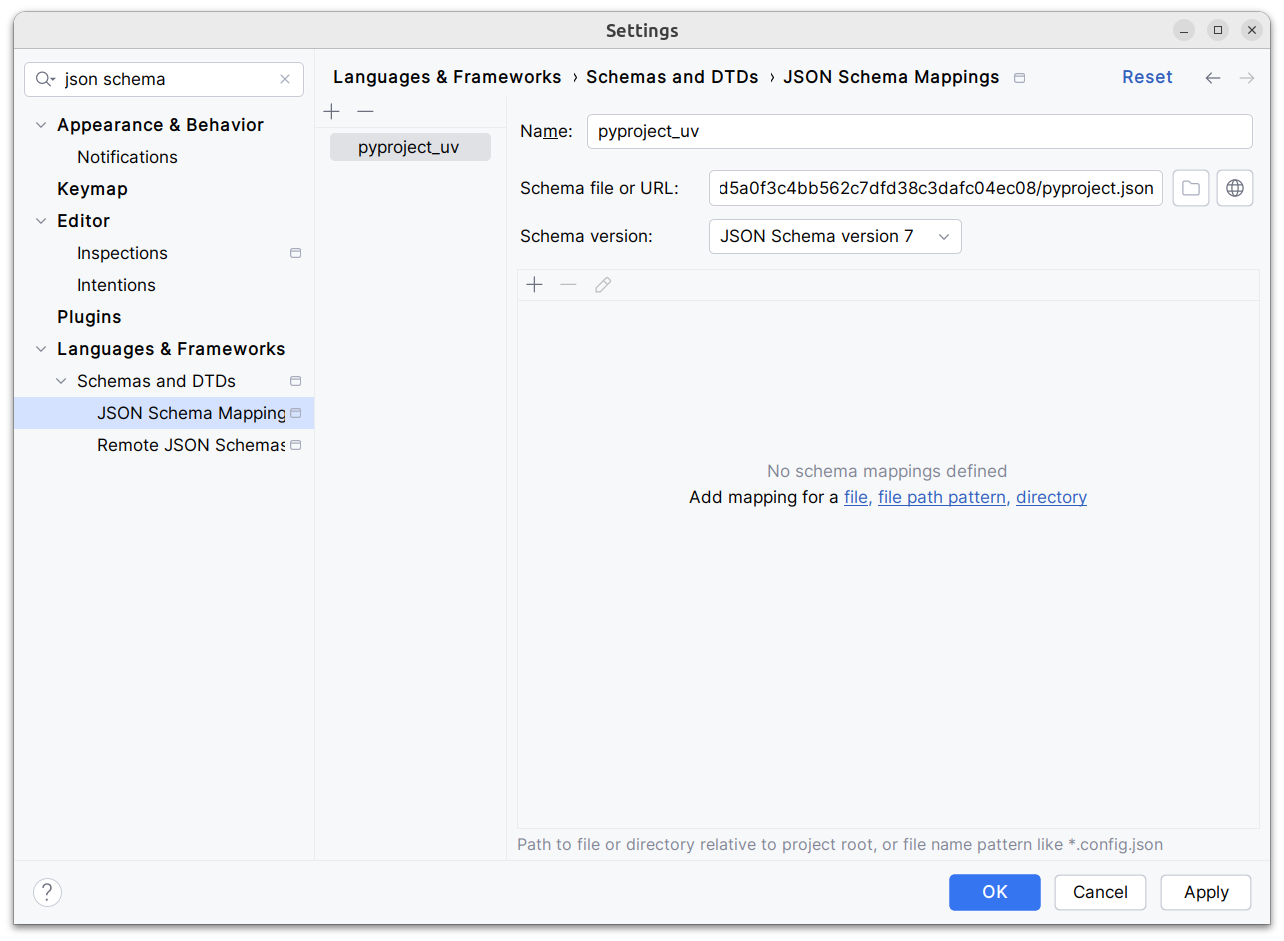

* Json schema integration (works in pycharm, see below)

* Draft user-level docs (see `docs/specifying_dependencies.md`)

It does not implement:

* `pip compile` testing (deprioritized in favor of our own lockfile)

* Index pinning (stub definitions only)

* Development dependencies

* Workspace support (stub definitions only)

* Overrides in pyproject.toml

* Patching/replacing dependencies

One technically breaking change is that we now require user provided

pyproject.toml to be valid with respect to PEP 621. Included files still fall

back to PEP 517. That means `pip install -r pyproject.toml` requires

it to be valid while `pip install -r requirements.txt` with `-e .` as

content falls back to PEP 517 as before.

## Implementation

The `pep508` requirement is replaced by a new `UvRequirement` (name up

for bikeshedding, not particularly attached to the uv prefix). The still

existing `pep508_rs::Requirement` type is a url format copied from pip's

requirements.txt and doesn't appropriately capture all features we

want/need to support. The bulk of the diff is changing the requirement

type throughout the codebase.

We still use `VerbatimUrl` in many places, where we would expect a

parsed/decomposed url type, specifically:

* Reading core metadata except top level pyproject.toml files, we fail a

step later instead if the url isn't supported.

* Allowed `Urls`.

* `PackageId` with a custom `CanonicalUrl` comparison, instead of

canonicalizing urls eagerly.

* `PubGrubPackage`: We eventually convert the `VerbatimUrl` back to a

`Dist` (`Dist::from_url`), instead of remembering the url.

* Source dist types: We use verbatim url even though we know and require

that these are supported urls we can and have parsed.

I tried to improve the situation by replacing `VerbatimUrl`, but

these changes would require massive invasive changes (see e.g.

https://github.com/astral-sh/uv/pull/3253). A main problem is the ref

`VersionOrUrl` and applying overrides, which assume the same

requirement/url type everywhere. In its current form, this PR increases

this tech debt.

I've tried to split off PRs and commits, but the main refactoring is

still a single monolith commit to make it compile and the tests pass.

## Demo

Adding

d1ae3b85d5/pyproject.json

as json schema (v7) to pycharm for `pyproject.toml`, you can try the IDE

support already:

[dove.webm](https://github.com/astral-sh/uv/assets/6826232/c293c272-c80b-459d-8c95-8c46a8d198a1)

Moves all of `uv-toolchain` into `uv-interpreter`. We may split these

out in the future, but the refactoring I want to do for interpreter

discovery is easier if I don't have to deal with entanglement. Includes

some restructuring of `uv-interpreter`.

Part of #2386

Previously, uv-auth would fail to compile due to a missing process

feature. I chose to make all tokio features we use top level features,

so we can share the tokio cache between all test invocations.

## Summary

Enables `uv` to read configuration from (e.g.)

`/Users/crmarsh/.config/uv/uv.toml` on macOS and Linux, and

`C:\Users\Charlie\AppData\Roaming\uv\uv.toml` on Windows.

This differs slightly from Ruff, which uses the `Application Support`

directory on macOS. But I've deviated here based on the preferences

expressed in https://github.com/astral-sh/ruff/issues/10739.
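For the macOS and Linux case, the lookup can be sketched as follows. This is an illustration under assumptions: `user_config_file` is a hypothetical helper, honoring `XDG_CONFIG_HOME` with a `~/.config` fallback follows the XDG Base Directory convention and is assumed here rather than confirmed by this description, and the Windows branch is omitted.

```rust
use std::path::PathBuf;

/// Hypothetical helper: resolves the user-level `uv.toml` on Unix-like systems.
/// The environment values are passed in explicitly to keep the function pure.
fn user_config_file(xdg_config_home: Option<&str>, home: Option<&str>) -> Option<PathBuf> {
    let base = match xdg_config_home {
        // Assumption: a non-empty `XDG_CONFIG_HOME` takes precedence.
        Some(dir) if !dir.is_empty() => PathBuf::from(dir),
        // Otherwise fall back to `$HOME/.config`; give up if `HOME` is unknown.
        _ => PathBuf::from(home?).join(".config"),
    };
    Some(base.join("uv").join("uv.toml"))
}
```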

## Summary

This PR adds basic struct definitions along with a "workspace" concept

for discovering settings. (The "workspace" terminology is used to match

Ruff; I did not invent it.)

A few notes:

- We discover any `pyproject.toml` or `uv.toml` file in any parent

directory of the current working directory. (We could adjust this to

look at the directories of the input files.)

- We don't actually do anything with the configuration yet; but those

PRs are large and I want this to be reviewed in isolation.
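The discovery rule in the first note amounts to walking up from the working directory and stopping at the first directory that contains a configuration file. Below is a minimal sketch with a hypothetical `find_config` helper; the existence check is injected as a closure so the logic can be exercised without touching the filesystem, and checking `uv.toml` before `pyproject.toml` within a directory is an assumption.

```rust
use std::path::{Path, PathBuf};

/// Hypothetical helper: returns the first configuration file found in `start`
/// or any of its ancestors, using `exists` to test candidate paths.
fn find_config(start: &Path, exists: impl Fn(&Path) -> bool) -> Option<PathBuf> {
    // `ancestors()` yields `start` itself first, then each parent in turn.
    for dir in start.ancestors() {
        for name in ["uv.toml", "pyproject.toml"] {
            let candidate = dir.join(name);
            if exists(&candidate) {
                return Some(candidate);
            }
        }
    }
    None
}
```

In real use the closure would be something like `|p| p.is_file()`, with `start` set to the current working directory.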

## Summary

It turns out that `normalize_path` (sourced from Cargo) has a subtle

bug. If you pass it a relative path that traverses beyond the root, it

silently drops components. So, e.g., passing `../foo/bar`, it will just

drop the leading `..` and return `foo/bar`.

This PR encodes that behavior as a `Result` and avoids using it in such

cases.
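A fallible variant of such a normalization might look like the sketch below. It is not the code from this PR: the error type `EscapesRoot` is a hypothetical name, and treating `..` directly under an absolute root as an error (rather than as the root itself) is a choice made for this example.

```rust
use std::path::{Component, Path, PathBuf};

/// Hypothetical error: the path tried to traverse above its starting point.
#[derive(Debug, PartialEq, Eq)]
struct EscapesRoot;

/// Lexically normalizes `path`, failing instead of silently dropping a `..`
/// that has no preceding component to cancel out.
fn normalize_path(path: &Path) -> Result<PathBuf, EscapesRoot> {
    let mut out = PathBuf::new();
    // Number of normal components currently in `out` that a `..` may remove.
    let mut depth = 0usize;
    for component in path.components() {
        match component {
            Component::Prefix(_) | Component::RootDir => out.push(component.as_os_str()),
            Component::CurDir => {}
            Component::ParentDir => {
                if depth == 0 {
                    return Err(EscapesRoot);
                }
                out.pop();
                depth -= 1;
            }
            Component::Normal(name) => {
                out.push(name);
                depth += 1;
            }
        }
    }
    Ok(out)
}
```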

Closes https://github.com/astral-sh/uv/issues/3012.

## Summary

This PR adds support for hash-checking mode in `pip install` and `pip

sync`. It's a large change, both in terms of the size of the diff and

the modifications in behavior, but it's also one that's hard to merge in

pieces (at least, with any test coverage) since it needs to work

end-to-end to be useful and testable.

Here are some of the most important highlights:

- We store hashes in the cache. Where we previously stored pointers to

unzipped wheels in the `archives` directory, we now store pointers with

a set of known hashes. So every pointer to an unzipped wheel also

includes its known hashes.

- By default, we don't compute any hashes. If the user runs with

`--require-hashes`, and the cache doesn't contain those hashes, we

invalidate the cache, redownload the wheel, and compute the hashes as we

go. For users that don't run with `--require-hashes`, there will be no

change in performance. For users that _do_, the only change will be if

they don't run with `--generate-hashes` -- then they may see some

repeated work between resolution and installation, if they use `pip

compile` then `pip sync`.

- Many of the distribution types now include a `hashes` field, like

`CachedDist` and `LocalWheel`.

- Our behavior is similar to pip, in that we enforce hashes when pulling

any remote distributions, and when pulling from our own cache. Like pip,

though, we _don't_ enforce hashes if a distribution is _already_

installed.

- Hash validity is enforced in a few different places:

1. During resolution, we enforce hash validity based on the hashes

reported by the registry. If we need to access a source distribution,

though, we then enforce hash validity at that point too, prior to

running any untrusted code. (This is enforced in the distribution

database.)

2. In the install plan, we _only_ add cached distributions that have

matching hashes. If a cached distribution is missing any hashes, or the

hashes don't match, we don't return them from the install plan.

3. In the downloader, we _only_ return distributions with matching

hashes.

4. The final combination of "things we install" are: (1) the wheels from

the cache, and (2) the downloaded wheels. So this ensures that we never

install any mismatching distributions.

- Like pip, if `--require-hashes` is provided, we require that _all_

distributions are pinned with either `==` or a direct URL. We also

require that _all_ distributions have hashes.
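The install-plan rule from the list above (only reuse a cached distribution when its known hashes satisfy the requirement) can be sketched as follows. All names here (`HashDigest`, `HashPolicy`, `is_usable`) are hypothetical, and the sketch assumes that one matching digest is enough, which mirrors how pip treats multiple `--hash` options; the real types in this PR may differ.

```rust
/// Hypothetical digest record, e.g. algorithm "sha256" plus a hex digest.
#[derive(Debug, Clone, PartialEq, Eq)]
struct HashDigest {
    algorithm: String,
    digest: String,
}

/// Hypothetical policy: either no checking, or validation against required digests.
enum HashPolicy<'a> {
    None,
    Validate(&'a [HashDigest]),
}

/// Convenience constructor for a SHA-256 digest.
fn sha256(digest: &str) -> HashDigest {
    HashDigest {
        algorithm: "sha256".to_string(),
        digest: digest.to_string(),
    }
}

/// Returns `true` if a distribution with the given known hashes may be used.
fn is_usable(policy: &HashPolicy<'_>, known: &[HashDigest]) -> bool {
    match policy {
        // Without hash checking, every distribution is acceptable.
        HashPolicy::None => true,
        // Assumption: at least one known digest must match a required digest,
        // so a distribution with no recorded hashes is rejected.
        HashPolicy::Validate(required) => known.iter().any(|hash| required.contains(hash)),
    }
}
```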

There are a few notable TODOs:

- We don't support hash-checking mode for unnamed requirements. These

should be _somewhat_ rare, though? Since `pip compile` never outputs

unnamed requirements. I can fix this, it's just some additional work.

- We don't automatically enable `--require-hashes` when a hash exists in

the requirements file. We require `--require-hashes`.

Closes #474.

## Test Plan

I'd like to add some tests for registries that report incorrect hashes,

but otherwise: `cargo test`

See https://github.com/astral-sh/uv/issues/2617

Note this also includes:

- #2918

- #2931 (pending)

A first step towards Python toolchain management in Rust.

First, we add a new crate to manage Python download metadata:

- Adds a new `uv-toolchain` crate

- Adds Rust structs for Python version download metadata

- Duplicates the script which downloads Python version metadata

- Adds a script to generate Rust code from the JSON metadata

- Adds a utility to download and extract the Python version

I explored some alternatives like a build script using things like

`serde` and `uneval` to automatically construct the code from our

structs but deemed it too heavy. Unlike Rye, I don't generate the Rust

directly from the web requests and have an intermediate JSON layer to

speed up iteration on the Rust types.

Next, we add a `uv-dev` command `fetch-python` to download Python

versions per the bootstrapping script.

- Downloads a requested version or reads from `.python-versions`

- Extracts to `UV_BOOTSTRAP_DIR`

- Links executables for path extension

This command is not really intended to be user facing, but it's a good

PoC for the `uv-toolchain` API. Hash checking (via the sha256) isn't

implemented yet, we can do that in a follow-up.

Finally, we remove the `scripts/bootstrap` directory, update CI to use

the new command, and update the CONTRIBUTING docs.

<img width="1023" alt="Screenshot 2024-04-08 at 17 12 15"

src="https://github.com/astral-sh/uv/assets/2586601/57bd3cf1-7477-4bb8-a8e9-802a00d772cb">

To get more insights into test performance, allow instrumenting tests

with tracing-durations-export.

Usage:

```shell

# A single test

TRACING_DURATIONS_TEST_ROOT=$(pwd)/target/test-traces cargo test --features tracing-durations-export --test pip_install_scenarios no_binary -- --exact

# All tests

TRACING_DURATIONS_TEST_ROOT=$(pwd)/target/test-traces cargo nextest run --features tracing-durations-export

```

Then we can e.g. look at

`target/test-traces/pip_install_scenarios::no_binary.svg` and see the

builds it performs.

## Summary

This updates to the version of axoupdater used in cargo-dist 0.13.0's

own selfupdate command, with all relevant fixes for platforms. It also

tentatively introduces a mildly dangerous self-runtest that runs `uv

self update` and checks that the binary is installed and executable.

I *believe* some adjustments need to be made to your CI to have this new

test run, because it requires the `self-update` feature to be enabled,

and I didn't want to just start messing with how you do feature coverage

in your CI. **As a result I haven't yet had a chance to actually fully

run this in CI**, though I've locally tested it on windows (with the

guard disabled).

## Test Plan

Most of the machinery here is provided by axoupdater itself (cargo-dist

also includes a variant of these tests in its codebase). This initial

implementation has a couple major limitations:

* This is For Reals modifying the system that runs the test (so it's off

unless it detects it's running in CI, and if you want variations on this

test they'll need to be [run in

serial](5e7826f7b0/cargo-dist/tests/cli-tests.rs (L235))).

Since many of the testing issues were surrounding precise details of

Actual Deployed Executions, this seemed worth the tradeoff.

* The actual installer *script* it's ultimately invoking is the one you

last published, and *not* the one that cargo-dist will make when you

next publish.

We're already working on implementing some logic for "get cargo-dist to

generate a fresh installer script too", which is in fact the basis of a

huge amount of cargo-dist's own testsuite. Now that we're dogfooding

this stuff, it should be quite hard for this stuff to break without

cargo-dist's own codebase noticing it first.


Needed to prevent circular dependencies in my toolchain work (#2931). I

think this is probably a reasonable change as we move towards persistent

configuration too?

Unfortunately `BuildIsolation` needs to be in `uv-types` to avoid

circular dependencies still. We might be able to resolve that in the

future.

## Summary

Upgrading `rs-async-zip` enables us to support data descriptors in

streaming. This both greatly improves performance for indexes that use

data descriptors _and_ ensures that we support them in a few other

places (e.g., zipped source distributions created in Finder).

Closes #2808.

This is driving me a little crazy and is becoming a larger problem in

#2596 where I need to move more types (like `Upgrade` and `Reinstall`)

into this crate. Anything that's shared across our core resolver,

install, and build crates needs to be defined in this crate to avoid

cyclic dependencies. We've outgrown it being a single file with some

shared traits.

There are no behavioral changes here.

Add a compile option `--profile fast-build` for a 16% incremental compile

speedup (linux) at the cost of having no debuginfo.

After trying various rust compiler speedup suggestions, setting mold as

my default linker and removing debuginfo are the only ones showing a

speedup.

```

hyperfine --warmup 1 --runs 3 --prepare "touch crates/uv-resolver/src/resolver/mod.rs" \

"cargo +nightly build --bin uv" \

"cargo +nightly build --bin uv --profile fast-build"

Benchmark 1: cargo +nightly build --bin uv

Time (mean ± σ): 1.569 s ± 0.008 s [User: 1.179 s, System: 0.369 s]

Range (min … max): 1.560 s … 1.576 s 3 runs

Benchmark 2: cargo +nightly build --bin uv --profile fast-build

Time (mean ± σ): 1.353 s ± 0.020 s [User: 1.109 s, System: 0.301 s]

Range (min … max): 1.338 s … 1.375 s 3 runs

Summary

cargo +nightly build --bin uv --profile fast-build ran

1.16 ± 0.02 times faster than cargo +nightly build --bin uv

```

With https://github.com/pubgrub-rs/pubgrub/pull/190, pubgrub attaches

all types to a dependency provider to reduce the number of generics. We

need a dummy dependency provider now to emulate this. On the plus side,

pep440_rs drops its pubgrub dependency.

This should address (fix?) #2442, it blocks building with a version that

doesn't support return type impl trait.

```

$ cargo +1.74 check

error: package `pep508_rs v0.4.2 (/home/konsti/projects/uv/crates/pep508-rs)` cannot be built because it requires rustc 1.76 or newer, while the currently active rustc version is 1.74.1

```

While we should encourage our dependencies to set a msrv, if we set our

rust-toolchain.toml version as cargo msrv our users on no-wheel

no-installer platforms will also be fine (or at least get helpful error

messages).

Scott Schafer gave me the idea: we can avoid repeating the path for

workspace dependencies everywhere if we declare them in the virtual

package once and treat them as workspace dependencies from there on.

## Summary

I tried out `cargo shear` to see if there are any unused dependencies

that `cargo udeps` isn't reporting. It turned out, there are a few. This

PR removes those dependencies.

## Test Plan

`cargo build`

Bumps [clap](https://github.com/clap-rs/clap) from 4.5.2 to 4.5.3.

<details>

<summary>Release notes</summary>

<p><em>Sourced from <a

href="https://github.com/clap-rs/clap/releases">clap's

releases</a>.</em></p>

<blockquote>

<h2>v4.5.3</h2>

<h2>[4.5.3] - 2024-03-15</h2>

<h3>Internal</h3>

<ul>

<li><em>(derive)</em> Update <code>heck</code></li>

</ul>

</blockquote>

</details>

<details>

<summary>Changelog</summary>

<p><em>Sourced from <a

href="https://github.com/clap-rs/clap/blob/master/CHANGELOG.md">clap's

changelog</a>.</em></p>

<blockquote>

<h2>[4.5.3] - 2024-03-15</h2>

<h3>Internal</h3>

<ul>

<li><em>(derive)</em> Update <code>heck</code></li>

</ul>

</blockquote>

</details>

<details>

<summary>Commits</summary>

<ul>

<li><a

href="4e07b43858"><code>4e07b43</code></a>

chore: Release</li>

<li><a

href="8247c7ddf0"><code>8247c7d</code></a>

docs: Update changelog</li>

<li><a

href="677c52ce08"><code>677c52c</code></a>

chore: Update <code>heck</code> requirement (<a

href="https://redirect.github.com/clap-rs/clap/issues/5396">#5396</a>)</li>

<li>See full diff in <a

href="https://github.com/clap-rs/clap/compare/v4.5.2...v4.5.3">compare

view</a></li>

</ul>

</details>

<br />


Dependabot will resolve any conflicts with this PR as long as you don't

alter it yourself. You can also trigger a rebase manually by commenting

`@dependabot rebase`.

[//]: # (dependabot-automerge-start)

[//]: # (dependabot-automerge-end)

---

<details>

<summary>Dependabot commands and options</summary>

<br />

You can trigger Dependabot actions by commenting on this PR:

- `@dependabot rebase` will rebase this PR

- `@dependabot recreate` will recreate this PR, overwriting any edits

that have been made to it

- `@dependabot merge` will merge this PR after your CI passes on it

- `@dependabot squash and merge` will squash and merge this PR after

your CI passes on it

- `@dependabot cancel merge` will cancel a previously requested merge

and block automerging

- `@dependabot reopen` will reopen this PR if it is closed

- `@dependabot close` will close this PR and stop Dependabot recreating

it. You can achieve the same result by closing it manually

- `@dependabot show <dependency name> ignore conditions` will show all

of the ignore conditions of the specified dependency

- `@dependabot ignore this major version` will close this PR and stop

Dependabot creating any more for this major version (unless you reopen

the PR or upgrade to it yourself)

- `@dependabot ignore this minor version` will close this PR and stop

Dependabot creating any more for this minor version (unless you reopen

the PR or upgrade to it yourself)

- `@dependabot ignore this dependency` will close this PR and stop

Dependabot creating any more for this dependency (unless you reopen the

PR or upgrade to it yourself)

</details>

Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

## Summary

Closes #1958

This adds linehaul metadata to uv's user-agent when PEP 508 markers are

provided to the RegistryClientBuilder. Thanks to #2381, we were able to

leverage most information from markers and avoid inconsistency.

Linehaul is the accompanying metadata that pip sends in its user

agent when talking to registries. You can see this output by running

something like `python -c 'from pip._internal.network.session import

user_agent; print(user_agent())'`.

On PyPI, this metadata is processed by the

[linehaul-cloud-function](https://github.com/pypi/linehaul-cloud-function).

More info about linehaul can be found in #1958.

Below are some examples from pip:

* Linux GHA: `pip/24.0

{"ci":true,"cpu":"x86_64","distro":{"id":"jammy","libc":{"lib":"glibc","version":"2.35"},"name":"Ubuntu","version":"22.04"},"implementation":{"name":"CPython","version":"3.12.2"},"installer":{"name":"pip","version":"24.0"},"openssl_version":"OpenSSL

3.0.2 15 Mar

2022","python":"3.12.2","rustc_version":"1.76.0","system":{"name":"Linux","release":"6.5.0-1016-azure"}}`

* Windows GHA: `pip/24.0

{"ci":true,"cpu":"AMD64","implementation":{"name":"CPython","version":"3.12.2"},"installer":{"name":"pip","version":"24.0"},"openssl_version":"OpenSSL

3.0.13 30 Jan

2024","python":"3.12.2","rustc_version":"1.76.0","system":{"name":"Windows","release":"2022Server"}}`

* OSX GHA: `pip/24.0

{"ci":true,"cpu":"arm64","distro":{"name":"macOS","version":"14.2.1"},"implementation":{"name":"CPython","version":"3.12.2"},"installer":{"name":"pip","version":"24.0"},"openssl_version":"OpenSSL

3.0.13 30 Jan

2024","python":"3.12.2","rustc_version":"1.76.0","system":{"name":"Darwin","release":"23.2.0"}}`

Here's what uv's results look like (sorry for the keys not having the same

order):

* Linux GHA: `uv/0.1.21

{"installer":{"name":"uv","version":"0.1.21"},"python":"3.12.2","implementation":{"name":"CPython","version":"3.12.2"},"distro":{"name":"Ubuntu","version":"22.04","id":"jammy","libc":null},"system":{"name":"Linux","release":"6.5.0-1016-azure"},"cpu":"x86_64","openssl_version":null,"setuptools_version":null,"rustc_version":null,"ci":true}`

* Windows GHA: `uv/0.1.21

{"installer":{"name":"uv","version":"0.1.21"},"python":"3.12.2","implementation":{"name":"CPython","version":"3.12.2"},"distro":null,"system":{"name":"Windows","release":"2022Server"},"cpu":"AMD64","openssl_version":null,"setuptools_version":null,"rustc_version":null,"ci":true}`

* OSX GHA: `uv/0.1.21

{"installer":{"name":"uv","version":"0.1.21"},"python":"3.12.2","implementation":{"name":"CPython","version":"3.12.2"},"distro":{"name":"macOS","version":"14.2.1","id":null,"libc":null},"system":{"name":"Darwin","release":"23.2.0"},"cpu":"arm64","openssl_version":null,"setuptools_version":null,"rustc_version":null,"ci":true}`
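
A minimal sketch of how such a user-agent string could be assembled, assuming a reduced field set (installer, python, cpu, ci) and hand-rolled JSON. The `json_str` and `user_agent` helpers are hypothetical and are not the code added in this PR. Missing values serialize as `null`, as in the uv output above.

```rust
/// Serialize an optional string as a JSON string or `null`.
fn json_str(value: Option<&str>) -> String {
    match value {
        // Escape backslashes first, then double quotes.
        Some(v) => format!("\"{}\"", v.replace('\\', "\\\\").replace('"', "\\\"")),
        None => "null".to_string(),
    }
}

/// Build a `uv/<version> {json}` user-agent with a subset of the linehaul fields.
fn user_agent(uv_version: &str, python: Option<&str>, cpu: Option<&str>, ci: Option<bool>) -> String {
    let ci = match ci {
        Some(flag) => flag.to_string(),
        None => "null".to_string(),
    };
    format!(
        r#"uv/{} {{"installer":{{"name":"uv","version":{}}},"python":{},"cpu":{},"ci":{}}}"#,
        uv_version,
        json_str(Some(uv_version)),
        json_str(python),
        json_str(cpu),
        ci
    )
}
```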

Distro information (which pip retrieves via `from pip._vendor import

distro` rather than the `platform` module) was not retrieved from

markers. Instead, the Linux release codename/name/version uses the

`sys-info` crate, adding about 50us of extra overhead on Linux. The

distro osx version re-used the [mac_os version

implementation](99c992e38b/crates/platform-host/src/mac_os.rs)

from #2381 which adds about 20us of overhead on osx. I tried to use

other crates to avoid re-introducing `mac_os.rs` but most of them didn't

yield satisfactory performance (roughly 40-60ms) or had the wrong values

needed (e.g. darwin version vs osx version).

I also didn't add libc retrieval or rustc retrieval as those seem to add

substantial overhead due to querying `ldd` or `rustc`. PyPy version

detection was also not added to avoid adding extra overhead to [support

PyPy for

linehaul](https://github.com/pypa/pip/blob/24.0/src/pip/_internal/network/session.py#L123).

All other behavior was kept 1-1 to match what pip's linehaul

implementation does (as of 24.0). This also aligns with what was

discussed in #1958.

## Test Plan

Added new integration test to uv-client.

---------

Co-authored-by: konstin <konstin@mailbox.org>

This update pulls in https://github.com/pubgrub-rs/pubgrub/pull/177,

optimizing common range operations in pubgrub. Please refer to this PR

for a more extensive description and discussion of the changes.

The changes optimize the last remaining pathological case,

`bio_embeddings[all]` on python 3.12, which has to try 100k versions,

from 12s to 3s in the cached case. It should also enable smarter

prefetching in batches (https://github.com/astral-sh/uv/issues/170),

even though a naive attempt didn't show better network usage.

**before** 12s

**after** 3s

```

$ taskset -c 0 hyperfine --warmup 1 "../uv/target/profiling/main-uv pip compile ../uv/scripts/requirements/bio_embeddings.in" "../uv/target/profiling/branch-uv pip compile ../uv/scripts/requirements/bio_embeddings.in"

Benchmark 1: ../uv/target/profiling/main-uv pip compile ../uv/scripts/requirements/bio_embeddings.in

Time (mean ± σ): 12.321 s ± 0.064 s [User: 12.014 s, System: 0.300 s]

Range (min … max): 12.224 s … 12.406 s 10 runs

Benchmark 2: ../uv/target/profiling/branch-uv pip compile ../uv/scripts/requirements/bio_embeddings.in

Time (mean ± σ): 3.109 s ± 0.004 s [User: 2.782 s, System: 0.321 s]

Range (min … max): 3.103 s … 3.116 s 10 runs

Summary

../uv/target/profiling/branch-uv pip compile ../uv/scripts/requirements/bio_embeddings.in ran

3.96 ± 0.02 times faster than ../uv/target/profiling/main-uv pip compile ../uv/scripts/requirements/bio_embeddings.in

```

It also adds `bio_embeddings[all]` as a requirements test case.


## Summary


Adds basic keyring auth support for `uv` commands. Adds a clone of `pip`'s

`--keyring-provider subprocess` argument (using CLI `keyring` tool).

See issue: https://github.com/astral-sh/uv/issues/1520

## Test Plan


It is hard to write full-suite unit tests due to the reliance on

`process::Command` for the `keyring` CLI.

Manually tested end-to-end in a project with GCP artifact registry using

keyring password:

```bash

➜ uv pip uninstall watchdog

Uninstalled 1 package in 46ms

- watchdog==4.0.0

➜ cargo run -- pip install --index-url https://<redacted>/python/simple/ --extra-index-url https://<redacted>/pypi-mirror/simple/ watchdog

Finished dev [unoptimized + debuginfo] target(s) in 0.18s

Running `target/debug/uv pip install --index-url 'https://<redacted>/python/simple/' --extra-index-url 'https://<redacted>/pypi-mirror/simple/' watchdog`

error: HTTP status client error (401 Unauthorized) for url (https://<redacted>/pypi-mirror/simple/watchdog/)

➜ cargo run -- pip install --keyring-provider subprocess --index-url https://<redacted>/python/simple/ --extra-index-url https://<redacted>/pypi-mirror/simple/ watchdog

Finished dev [unoptimized + debuginfo] target(s) in 0.17s

Running `target/debug/uv pip install --keyring-provider subprocess --index-url 'https://<redacted>/python/simple/' --extra-index-url 'https://<redacted>/pypi-mirror/simple/' watchdog`

Resolved 1 package in 2.34s

Installed 1 package in 27ms

+ watchdog==4.0.0

```

`requirements.txt`

```

#

# This file is autogenerated by pip-compile with Python 3.10

# by the following command:

#

# .bin/generate-requirements

#

--index-url https://<redacted>/python/simple/

--extra-index-url https://<redacted>/pypi-mirror/simple/

...

```

```bash

➜ cargo run -- pip install --keyring-provider subprocess -r requirements.txt

Finished dev [unoptimized + debuginfo] target(s) in 0.19s

Running `target/debug/uv pip install --keyring-provider subprocess -r requirements.txt`

Resolved 205 packages in 23.52s

Built <redacted>

...

Downloaded 47 packages in 19.32s

Installed 195 packages in 276ms

+ <redacted>

...

```

---------

Co-authored-by: Thomas Gilgenast <thomas@vant.ai>

Co-authored-by: Zanie Blue <contact@zanie.dev>

## Summary

This may be required elsewhere, but all the traces in that issue are

related to persisting the temporary directory to our persistent cache,

so let's start there.

See: https://github.com/astral-sh/uv/issues/1491.

The architecture of uv does not necessarily match that of the python

interpreter (#2326). In cross compiling/testing scenarios the operating

system can also mismatch. To solve this, we move arch and os detection

to python, vendoring the relevant pypa/packaging code, preventing

mismatches between what the python interpreter was compiled for and what

uv was compiled for.

To make the scripts more manageable, they are now a directory in a

tempdir and we run them with `python -m` . I've simplified the

pypa/packaging code since we're still building the tags in rust. A

`Platform` is now instantiated by querying the python interpreter for

its platform. The pypa/packaging files are copied verbatim for easier

updates except a `lru_cache()` python 3.7 backport.

Error handling is done by a `"result": "success|error"` field that allows

passing error details to rust:

```console

$ uv venv --no-cache

× Can't use Python at `/home/konsti/projects/uv/.venv/bin/python3`

╰─▶ Unknown operation system `linux`

```

I've used the [maturin sysconfig

collection](855f6d2cb1/sysconfig)

as reference. I'm unsure how to test these changes across the wide

variety of platforms.

Fixes #2326

## Summary

It turns out that on macOS, reading the native certificates can add

hundreds of milliseconds to client initialization. This PR makes

`--native-tls` a command-line flag, to toggle (at runtime) the choice of

the `webpki` roots or the native system roots.

You can't accomplish this kind of configuration with the `reqwest`

builder API, so instead, I pulled out the heart of that logic from the

crate

(e319263851/src/async_impl/client.rs (L498)),

and modified it to allow toggling a choice of root.

Note that there's an open PR for this in reqwest

(https://github.com/seanmonstar/reqwest/pull/1848), along with an issue

(https://github.com/seanmonstar/reqwest/issues/1843), which I may ping,

but it's been around for a while and I believe reqwest is focused on its

next major release.

Closes https://github.com/astral-sh/uv/issues/2346.

Bumps [walkdir](https://github.com/BurntSushi/walkdir) from 2.4.0 to

2.5.0.

<details>

<summary>Commits</summary>

<ul>

<li><a

href="4f26be4d45"><code>4f26be4</code></a>

2.5.0</li>

<li><a

href="3be5734033"><code>3be5734</code></a>

api: implement <code>FusedIterator</code></li>

<li><a

href="b0d16b759a"><code>b0d16b7</code></a>

ci: fix it</li>

<li>See full diff in <a

href="https://github.com/BurntSushi/walkdir/compare/2.4.0...2.5.0">compare

view</a></li>

</ul>

</details>


Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

## Summary

The netrc middleware we added in

https://github.com/astral-sh/uv/pull/2241 has a slight problem. If you

include credentials in your index URL, _and_ in the netrc file, the

crate blindly adds the netrc credentials as a header. And given the

`ReqwestBuilder` API, this means you end up with _two_ `Authorization`

headers, which always leads to an invalid request, though the exact

failure can take different forms.

This PR removes the middleware crate in favor of our own middleware.

Instead of using the `RequestInitialiser` API, we have to use the

`Middleware` API, so that we can remove the header on the request

itself.
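
The intended behavior can be sketched without any HTTP crate. This is a simplified model, assuming headers are a plain list of name/value pairs and that credentials already present on the request take precedence. `apply_netrc` is a hypothetical helper, not the middleware in this PR.

```rust
/// Add netrc credentials only when the request carries no `Authorization` header yet.
///
/// `netrc_authorization` is the already-encoded header value (e.g. `Basic ...`),
/// or `None` when the netrc file has no entry for the host.
fn apply_netrc(headers: &mut Vec<(String, String)>, netrc_authorization: Option<&str>) {
    // Header names are case-insensitive.
    let already_authorized = headers
        .iter()
        .any(|(name, _)| name.eq_ignore_ascii_case("authorization"));
    if already_authorized {
        // Never emit a second `Authorization` header.
        return;
    }
    if let Some(value) = netrc_authorization {
        headers.push(("Authorization".to_string(), value.to_string()));
    }
}
```

With this shape, a request ends up with at most one `Authorization` header, regardless of which sources supply credentials.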

Closes https://github.com/astral-sh/uv/issues/2323.

## Test Plan

- Verified that running against a private index with credentials in the

URL (but no netrc file) worked without error.

- Verified that running against a private index with credentials in the

netrc file (but not the URL) worked without error.

- Verified that running against a private index with a mix of

credentials in both _also_ worked without error.

This PR tweaks uv to support reading `requirements.txt` regardless of

whether it is encoded as UTF-8 or UTF-16. This is particularly relevant

on Windows where `uv pip freeze > requirements.txt` will likely write a

UTF-16 encoded `requirements.txt` file.

There is some discussion on #1666 where it's suggested that perhaps

we should explicitly not support this. I didn't see that until I

had already put this PR together, but even so, I think it's worth

considering this. UTF-16 is predominant on Windows. It is very easy

to produce a UTF-16 encoded file. Moreover, there is an easy and well

specified way to recognize and transcode UTF-16 encoded data to UTF-8.
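
For illustration, recognizing and transcoding by byte order mark can be done with the standard library alone. This is a minimal sketch, not the code in this PR: `decode_requirements` and `decode_utf16` are hypothetical names, and input without a BOM is assumed to be UTF-8.

```rust
/// Decode file contents as UTF-8, or as UTF-16 when a UTF-16 byte order mark is present.
fn decode_requirements(bytes: &[u8]) -> Result<String, String> {
    match bytes {
        // UTF-16 little-endian BOM.
        [0xFF, 0xFE, rest @ ..] => decode_utf16(rest, u16::from_le_bytes),
        // UTF-16 big-endian BOM.
        [0xFE, 0xFF, rest @ ..] => decode_utf16(rest, u16::from_be_bytes),
        // UTF-8 BOM: strip it and decode the remainder.
        [0xEF, 0xBB, 0xBF, rest @ ..] => {
            String::from_utf8(rest.to_vec()).map_err(|err| err.to_string())
        }
        // No BOM: assume UTF-8.
        _ => String::from_utf8(bytes.to_vec()).map_err(|err| err.to_string()),
    }
}

/// Transcode UTF-16 code units (without BOM) into a UTF-8 `String`.
fn decode_utf16(bytes: &[u8], to_u16: fn([u8; 2]) -> u16) -> Result<String, String> {
    if bytes.len() % 2 != 0 {
        return Err("odd number of bytes in UTF-16 input".to_string());
    }
    let units: Vec<u16> = bytes
        .chunks_exact(2)
        .map(|pair| to_u16([pair[0], pair[1]]))
        .collect();
    String::from_utf16(&units).map_err(|err| err.to_string())
}
```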

I think the downside of this is that it could encourage the use of UTF-16

encoded `requirements.txt` files *in addition* to UTF-8 encoded

files, and it would probably be nice to converge and standardize on

one encoding. One possible alternative to this PR is that we provide

a better error message. Another alternative is to ensure that a

`-o/--output` flag exists for all commands (neither `uv pip freeze` nor

`pip freeze` have such a flag) so that users can always write output

to a file without relying on their environment's piping behavior.

(Although this last alternative seems a little sad to me.)

It's also worth noting that [PEP-0508] doesn't seem to mention file

encoding at all. So I think from a "do the standards allow this"

perspective, this change is OK.

Finally, `pip` itself seems to work with UTF-16 encoded

`requirements.txt` files.

I think I personally overall lean towards supporting UTF-16 for

`requirements.txt` files. In part because I think it smooths out the

UX a little bit, in part because there is no obvious specification

(that I'm aware of) that mandates that these files are UTF-8, and

finally in part because `pip` supports it too.

Fixes #1666, Fixes #2276

[PEP-0508]: https://peps.python.org/pep-0508/

## Summary

This PR enables use of the Windows Store Pythons even when `py` is not

installed. Specifically, we need to ensure that the `python.exe` and

`python3.exe` executables installed into the

`C:\Users\crmar\AppData\Local\Microsoft\WindowsApps` directory _are_ used

when they're not "App execution aliases" (which merely open the Windows

Store, to help you install Python).

When `py` is installed, this isn't strictly necessary, since the

"resolved" executables are discovered via `py`. These look like

`C:\Users\crmar\AppData\Local\Microsoft\WindowsApps\PythonSoftwareFoundation.Python.3.11_qbs5n2kfra8p0\python.exe`.

Closes https://github.com/astral-sh/uv/issues/2264.

## Test Plan

- Removed all Python installations from my Windows machine.

- Uninstalled `py`.

- Enabled "App execution aliases".

- Verified that for both `cargo run venv --python python.exe` and `cargo

run venv --python python3.exe`, `uv` exited with a failure that no

Python could be found.

- Installed Python 3.10 via the Windows Store.

- Verified that the above commands succeeded without error.

- Verified that `cargo run venv --python python3.10.exe` _also_

succeeded.

## Summary

Add netrc support to the uv-client.

Closes #1405

## Test Plan

I've added a corresponding test case to validate the correct header.

Furthermore, I tested it against a real-world private repository.

## Summary

Allow using http(s) urls for constraints and requirements files handed

to the CLI, by handling paths starting with `http://` or `https://`

differently. This allows commands such as: `uv pip install -c

https://raw.githubusercontent.com/apache/airflow/constraints-2.8.1/constraints-3.8.txt

requests`.

Closes #1332

## Test Plan

Testing install using a `constraints.txt` file hosted on github in the

airflow repository:

fbdc2eba8e/crates/uv/tests/pip_install.rs (L1440-L1484)

## Advice Needed

- filesystem/http dispatch is implemented at a relatively low level (at

`crates/uv-fs/src/lib.rs#read_to_string`). Should I change some naming

here so it is obvious that the function is able to dispatch?

- I kept the CLI argument for -c and -r as a PathBuf, even though now it

is technically either a path or a url. We could either keep this as is

for now, or implement a new enum for this case? The enum could then

handle dispatch to files/http.

- Using another abstraction layer like

https://docs.rs/object_store/latest/object_store/ for the

files/urls/[s3] could work as well, though I ran into a bug during

testing which I couldn't debug.
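
The enum option from the second point could look roughly like this. `RequirementsLocation` and its `parse` method are hypothetical names for illustration, not what the PR implements (it keeps `PathBuf`).

```rust
use std::path::PathBuf;

/// Where a `-r`/`-c` argument points: a remote file or a local path.
#[derive(Debug, PartialEq)]
enum RequirementsLocation {
    Url(String),
    Path(PathBuf),
}

impl RequirementsLocation {
    /// Treat arguments starting with `http://` or `https://` as URLs, everything else as a path.
    fn parse(arg: &str) -> Self {
        if arg.starts_with("http://") || arg.starts_with("https://") {
            Self::Url(arg.to_string())
        } else {
            Self::Path(PathBuf::from(arg))
        }
    }
}
```

The dispatch to filesystem or HTTP reads would then be a `match` on this enum at the call site instead of living inside `read_to_string`.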

Add a `--compile` option to `pip install` and `pip sync`.

I chose to implement this as a separate pass over the entire venv. If we

wanted to compile during installation, we'd have to make sure that

writing is exclusive, to avoid concurrent processes writing broken

`.pyc` files. Additionally, this ensures that the entire site-packages

are bytecode compiled, even if there are packages that aren't from this

`uv` invocation. The disadvantage is that we do not update RECORD and

rely on this comment from [PEP 491](https://peps.python.org/pep-0491/):

> Uninstallers should be smart enough to remove .pyc even if it is not

mentioned in RECORD.

If this is a problem we can change it to run during installation and

write RECORD entries.

Internally, this is implemented as an async work-stealing subprocess

worker pool. The producer is a directory traversal over site-packages,

sending each `.py` file to a bounded async FIFO queue/channel. Each

worker has a long-running python process. It pops the queue to get a

single path (or exits if the channel is closed), then sends it to

stdin, waits until it's informed that the compilation is done through a

line on stdout, and repeats. This is fast: e.g., when installing `jupyter

plotly` on Python 3.12, it processes 15876 files in 319ms with 32 threads

(vs. 3.8s with a single core). The Python processes internally call

`compileall.compile_file`, the same as pip.
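
The producer/worker shape can be sketched with standard-library threads and a bounded channel. This is a simplified model rather than the actual implementation: it uses OS threads instead of async tasks, and the hypothetical `compile_stub` stands in for the round trip to a long-running Python process.

```rust
use std::sync::mpsc::sync_channel;
use std::sync::{Arc, Mutex};
use std::thread;

/// Placeholder for sending the path to a Python process and waiting for its reply.
fn compile_stub(_path: &str) {}

/// Feed every `.py` path through a bounded queue to `workers` threads.
/// Returns the number of files processed.
fn compile_all(paths: Vec<String>, workers: usize, capacity: usize) -> usize {
    let (tx, rx) = sync_channel::<String>(capacity);
    // The receiver is shared, so whichever worker is free takes the next path.
    let rx = Arc::new(Mutex::new(rx));

    let handles: Vec<_> = (0..workers.max(1))
        .map(|_| {
            let rx = Arc::clone(&rx);
            thread::spawn(move || {
                let mut compiled = 0usize;
                loop {
                    // The lock is released at the end of this statement.
                    let next = rx.lock().unwrap().recv();
                    match next {
                        Ok(path) => {
                            compile_stub(&path);
                            compiled += 1;
                        }
                        // The channel is closed and drained: exit the worker.
                        Err(_) => break,
                    }
                }
                compiled
            })
        })
        .collect();

    // Producer: blocks whenever the bounded queue is full.
    for path in paths.into_iter().filter(|path| path.ends_with(".py")) {
        tx.send(path).expect("all workers exited early");
    }
    // Closing the channel tells the workers to stop.
    drop(tx);

    handles.into_iter().map(|handle| handle.join().unwrap()).sum()
}
```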

Like pip, we ignore and silence all compilation errors

(https://github.com/astral-sh/uv/issues/1559). There is a 10s timeout to

handle the case when the workers get stuck. For the reviewers, please

check whether I missed any spots where we could deadlock; this is the hardest

part of this PR.

I've added `uv-dev compile <dir>` and `uv-dev clear-compile <dir>`

commands, mainly for my own benchmarking. I don't want to expose them in

`uv`; they're almost certainly not the correct workflow and we don't want

to support them.

Fixes #1788. Closes #1559. Closes #1928.

## Summary

I think Camino is nice but it makes it much harder to work in

`uv-virtualenv`, since it's the _only_ crate that uses it. If we want to

use Camino, we should use it everywhere IMO.

## Summary

This PR adds a `--python` flag that allows users to provide a specific

Python interpreter into which `uv` should install packages. This would

replace the `VIRTUAL_ENV=` workaround that folks have been using to

install into arbitrary, system environments, while _also_ actually being

correct for installing into non-virtual environments, where the bin and

site-packages paths can differ.

The approach taken here is to use `sysconfig.get_paths()` to get the

correct paths from the interpreter, and then use those for determining

the `bin` and `site-packages` directories, rather than constructing them

based on hard-coded expectations for each platform.

Closes https://github.com/astral-sh/uv/issues/1396.

Closes https://github.com/astral-sh/uv/issues/1779.

Closes https://github.com/astral-sh/uv/issues/1988.

## Test Plan

- Verified that, on my Windows machine, I was able to install `requests`

into a global environment with: `cargo run pip install requests --python

'C:\\Users\\crmarsh\\AppData\\Local\\Programs\\Python\\Python3.12\\python.exe'`,

then `python` and `import requests`.

- Verified that, on macOS, I was able to install `requests` into a

global environment installed via Homebrew with: `cargo run pip install

requests --python $(which python3.8)`.

## Summary

When a `pyproject.toml` is provided directly to `uv pip compile`, we

were failing to resolve recursive extras. The solution I settled on here

is to flatten them recursively when determining the requirements

upfront.
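
A minimal sketch of recursive flattening, assuming extras are stored as a map from extra name to raw requirement strings and that a self-reference is written as `project[extra1,extra2]`. `flatten_extra` and `self_reference` are hypothetical helpers, not uv's implementation, and real requirement parsing is more involved than this string matching.

```rust
use std::collections::{BTreeMap, BTreeSet};

/// Collect the requirements of `extra`, expanding self-references like `project[test]`.
fn flatten_extra(
    project: &str,
    extra: &str,
    extras: &BTreeMap<String, Vec<String>>,
    seen: &mut BTreeSet<String>,
    out: &mut Vec<String>,
) {
    // Guard against cycles and repeated work.
    if !seen.insert(extra.to_string()) {
        return;
    }
    let Some(requirements) = extras.get(extra) else {
        return;
    };
    for requirement in requirements {
        match self_reference(project, requirement) {
            Some(nested) => {
                for name in nested {
                    flatten_extra(project, name, extras, seen, out);
                }
            }
            None => out.push(requirement.clone()),
        }
    }
}

/// If `requirement` is `project[a,b]`, return the referenced extras.
fn self_reference<'a>(project: &str, requirement: &'a str) -> Option<Vec<&'a str>> {
    let rest = requirement.strip_prefix(project)?.trim();
    let inner = rest.strip_prefix('[')?.strip_suffix(']')?;
    Some(inner.split(',').map(str::trim).collect())
}
```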

Closes https://github.com/astral-sh/uv/issues/1987.

## Test Plan

`cargo test`

## Summary

Closes #1943

Makes sure `build-binaries` and `publish-pypi` workflows are compatible

with `actions/{download,upload}-artifact@v4`. In nature, this PR is very

similar to the changes in https://github.com/astral-sh/ruff/pull/10105.

This PR also updates cargo-dist.

## Test Plan

I ran a small non-dry-run [smoke

test](https://github.com/samypr100/uv/actions/runs/8027864059) on my own

fork CI with only linux builds (for speed) and those jobs seem to work

at a glance.

## Summary

This revives a PR from long ago

(https://github.com/astral-sh/uv/pull/383 and

https://github.com/zanieb/pubgrub/pull/24) that modifies how we deal

with dependencies that are declared multiple times within a single

package.

To quote from the originating PR:

> Uses an experimental pubgrub branch (#370) that allows us to handle

multiple version ranges for a single dependency to the solver which

results in better error messages because the derivation tree contains

all of the relevant versions. Previously, the version ranges were merged

(by us) in the resolver before handing them to pubgrub since only one

range could be provided per package. Since we don't merge the versions

anymore, we no longer give the solver an empty range for conflicting

requirements; instead the solver comes to that conclusion from the

provided versions. You can see the improved error message for direct

dependencies in [this

snapshot](https://github.com/astral-sh/puffin/pull/383/files#diff-a0437f2c20cde5e2f15199a3bf81a102b92580063268417847ec9c793a115bd0).

The main issue with that PR was around its handling of URL dependencies,

so this PR _also_ refactors how we handle those. Previously, we stored

URL dependencies on `PubGrubPackage`, but they were omitted from the

hash and equality implementations of `PubGrubPackage`. This led to some

really careful codepaths wherein we had to ensure that we always visited

URLs before non-URL packages, so that the URL-inclusive versions were

included in any hashmaps, etc. I considered preserving this approach,

but it would require us to rely on lots of internal details of PubGrub

(since we'd now be relying on PubGrub to merge those packages in the

"right" order).

So, instead, we now _always_ set the URL on a given package, whenever

that package was _given_ a URL upfront. I think this is easier to reason

about: if the user provided a URL for `flask`, then we should just

always add the URL for `flask`. If we see some other URL for `flask`, we

error, like before. If we see some unknown URL for `flask`, we error,

like before.
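
The rule can be sketched as a lookup against the set of URLs given upfront. `AllowedUrls` and `url_for` are hypothetical names for this illustration, and URLs are compared as plain strings here, which the real resolver may not do.

```rust
use std::collections::HashMap;

/// URLs the user provided upfront, keyed by package name.
struct AllowedUrls(HashMap<String, String>);

impl AllowedUrls {
    /// Decide which URL (if any) to attach to `package`, given the URL a requirement asked for.
    fn url_for(&self, package: &str, requested: Option<&str>) -> Result<Option<&str>, String> {
        match (self.0.get(package), requested) {
            // A different URL for a package that already has one is a conflict.
            (Some(allowed), Some(url)) if allowed.as_str() != url => Err(format!(
                "conflicting URLs for `{package}`: `{allowed}` vs. `{url}`"
            )),
            // The package was given a URL upfront: always attach it.
            (Some(allowed), _) => Ok(Some(allowed.as_str())),
            // A URL that was never provided upfront is rejected.
            (None, Some(url)) => Err(format!("unknown URL for `{package}`: `{url}`")),
            // A plain registry package.
            (None, None) => Ok(None),
        }
    }
}
```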

Closes https://github.com/astral-sh/uv/issues/1522.

Closes https://github.com/astral-sh/uv/issues/1821.

Closes https://github.com/astral-sh/uv/issues/1615.

## Summary

We currently maintain separate untar methods for sync and async, but we

only use the sync version when the user provides a local source

distribution. (Otherwise, we untar as we download the distribution.) In

my testing, this is actually slower anyway:

```

❯ python -m scripts.bench \

--uv-path ./target/release/main \

--uv-path ./target/release/uv \

./requirements.in --benchmark resolve-cold --min-runs 50

Benchmark 1: ./target/release/main (resolve-cold)

Time (mean ± σ): 835.2 ms ± 107.4 ms [User: 346.0 ms, System: 151.3 ms]

Range (min … max): 639.2 ms … 1051.0 ms 50 runs

Benchmark 2: ./target/release/uv (resolve-cold)

Time (mean ± σ): 750.7 ms ± 91.9 ms [User: 345.7 ms, System: 149.4 ms]

Range (min … max): 637.9 ms … 905.7 ms 50 runs

Summary

'./target/release/uv (resolve-cold)' ran

1.11 ± 0.20 times faster than './target/release/main (resolve-cold)'

```


## Summary

Adds a CLI command / flag (`generate-shell-completion <SHELL>` /

`--generate-shell-completion <SHELL>`) to generate the completion script

for the given shell. Implemented in exactly the same way as it is done

in ruff

(https://github.com/astral-sh/ruff/blob/main/crates/ruff/src/lib.rs#L197)

Closes https://github.com/astral-sh/uv/issues/1654

## Test Plan

I've tested the generated script manually, and only for the bash shell

on Ubuntu 22.04.3.

```bash

$ uv --generate-shell-completion bash > /usr/share/bash-completion/completions/uv

$ uv # <TAB>

-q -h --verbose --no-cache --version clean

-v -V --no-color --cache-dir pip generate-shell-completion

-n --quiet --color --help venv help

$ uv pip # <TAB>

-q -n -V --verbose --color --cache-dir --version sync uninstall help

-v -h --quiet --no-color --no-cache --help compile install freeze

```

First, replace all usages in files in-place. I used my editor for this.

If someone wants to add a one-liner that'd be fun.

Then, update directory and file names:

```

# Run twice for nested directories

find . -type d -print0 | xargs -0 rename s/puffin/uv/g

find . -type d -print0 | xargs -0 rename s/puffin/uv/g

# Update files

find . -type f -print0 | xargs -0 rename s/puffin/uv/g

```

Then add all the files again:

```

# Add all the files again

git add crates

git add python/uv

# This one needs a force-add

git add -f crates/uv-trampoline

```

That is, a `PrioritizedDistribution` for a specific version of a

package is not actually materialized in memory until a corresponding

`VersionMap::get` call is made for that version. Similarly, iteration

lazily materializes distributions as it moves through the map. It

specifically does not materialize everything first.
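
The lazy behavior can be modeled with `std::cell::OnceCell`. This is a sketch under simplifying assumptions: versions are plain strings, the "raw" form is a filename rather than archived bytes, and `LazyVersionMap`, `LazyEntry`, and `Distribution` are hypothetical types, not uv's `VersionMap`.

```rust
use std::cell::OnceCell;
use std::collections::BTreeMap;

/// The fully materialized form of an entry.
#[derive(Debug, PartialEq)]
struct Distribution {
    filename: String,
}

/// Raw data plus a slot that is filled on first access.
struct LazyEntry {
    raw: String,
    dist: OnceCell<Distribution>,
}

struct LazyVersionMap {
    entries: BTreeMap<String, LazyEntry>,
}

impl LazyVersionMap {
    /// Construction only records the raw data; nothing is materialized yet.
    fn new(raw: &[(&str, &str)]) -> Self {
        let entries = raw
            .iter()
            .map(|(version, filename)| {
                let entry = LazyEntry { raw: filename.to_string(), dist: OnceCell::new() };
                (version.to_string(), entry)
            })
            .collect();
        Self { entries }
    }

    /// Materialize the distribution for `version` on first access, then reuse it.
    fn get(&self, version: &str) -> Option<&Distribution> {
        let entry = self.entries.get(version)?;
        Some(entry.dist.get_or_init(|| Distribution { filename: entry.raw.clone() }))
    }

    /// Number of entries that have been materialized so far.
    fn materialized(&self) -> usize {
        self.entries.values().filter(|entry| entry.dist.get().is_some()).count()
    }
}
```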

The main reason why this is effective is that an

`OwnedArchive<SimpleMetadata>` represents a zero-copy (other than

reading the source file) version of `SimpleMetadata` that is really just

a `Vec<u8>` internally. The problem with `VersionMap` construction

previously is that it had to eagerly materialize a `SimpleMetadata` in

memory before anything else, which defeats a large part of the purpose

of zero-copy deserialization. By making more of `VersionMap`

construction itself lazy, we permit doing some parts of resolution

without necessarily fully deserializing a `SimpleMetadata` into memory.

Indeed, with this commit, in the warm cached case, a `SimpleMetadata` is

itself never materialized fully in memory.

This does not fully realize the benefits of

zero-copy deserialization. For example, we are likely still building

lots of distributions in memory that we don't actually need in some

cases. Perhaps in cases where no resolution exists, or when one needs to

iterate over large portions of the total versions published for a

package.

## Summary

This PR adds an `--offline` flag to Puffin that disables network

requests (implemented as a Reqwest middleware on our registry client).

When `--offline` is provided, we also allow the HTTP cache to return

stale data.
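
To make the shape of this concrete, here is a minimal std-only sketch of a wrapper layer that short-circuits requests when offline. It does not use Reqwest; the `Fetch` trait, `Network`, `OfflineGuard`, and `FetchError` are hypothetical names for illustration only, and the stale-cache behavior is not modeled.

```rust
/// Hypothetical error returned when a request is attempted while offline.
#[derive(Debug, PartialEq)]
enum FetchError {
    Offline { url: String },
}

/// Hypothetical stand-in for one layer of an HTTP client stack.
trait Fetch {
    fn fetch(&self, url: &str) -> Result<String, FetchError>;
}

/// Innermost layer; a real client would perform the request here.
struct Network;

impl Fetch for Network {
    fn fetch(&self, url: &str) -> Result<String, FetchError> {
        Ok(format!("response from {url}"))
    }
}

/// Wraps another layer and rejects every request when `offline` is set.
struct OfflineGuard<F> {
    inner: F,
    offline: bool,
}

impl<F: Fetch> Fetch for OfflineGuard<F> {
    fn fetch(&self, url: &str) -> Result<String, FetchError> {
        if self.offline {
            // Never reach the inner layer, so no network request is made.
            return Err(FetchError::Offline { url: url.to_string() });
        }
        self.inner.fetch(url)
    }
}

fn main() {
    let client = OfflineGuard { inner: Network, offline: true };
    match client.fetch("https://example.invalid/simple/flask/") {
        Ok(body) => println!("{body}"),
        Err(err) => println!("blocked: {err:?}"),
    }
}
```

Putting the check in a wrapper layer means callers do not need to know about the flag.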

Closes #942.

## Summary

These add and remove dependencies from a `pyproject.toml` -- but they're

currently hidden, and don't match the rest of the workflow. We can

re-add them when the time is right.

(Please review this PR commit by commit.)

This PR closes an initial loop on zero-copy deserialization. That

is, it provides a way to get an `Archived<SimpleMetadata>` (spelled

`OwnedArchive<SimpleMetadata>` in the code) from a `CachedClient`. The

main benefit of zero-copy deserialization is that we can read bytes

from a file, cast those bytes to a structured representation without

cost, and then start using that type as any other Rust type. The

"catch" is that the structured representation is not the actual type

you started with, but the "archived" version of it.

In order to make all this work, we ended up needing to shave a rather

large yak: we had to re-implement HTTP cache semantics. Previously,

we were using the `http-cache-semantics` crate. While it does support

Serde, it doesn't support `rkyv`. Moreover, even simple support for

`rkyv` wouldn't be enough. What we actually want is for the HTTP cache

semantics to be implemented on the *archived* type so that we can

decide whether our cached response is stale or not without needing to

do a full deserialization into the unarchived type. This is why, in

this PR, you'll see `impl ArchivedCachePolicy { ... }` instead of

`impl CachePolicy { ... }`. (The `derive(rkyv::Archive)` macro

automatically introduces the `ArchivedCachePolicy` type into the

current namespace.)
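
To illustrate why the logic lives on the archived form, here is a std-only sketch that answers a freshness question directly from bytes, without building an owned struct first. It does not use `rkyv`, and the 16-byte layout, `ArchivedPolicyView`, and `encode` are hypothetical; the real `ArchivedCachePolicy` is generated by the derive macro and has a different layout and far more logic.

```rust
/// Hypothetical fixed layout, little-endian:
///   bytes 0..8  = response time (seconds since the epoch)
///   bytes 8..16 = max-age (seconds)
struct ArchivedPolicyView<'a> {
    bytes: &'a [u8],
}

impl<'a> ArchivedPolicyView<'a> {
    const LEN: usize = 16;

    /// Borrows the buffer; nothing is copied or parsed up front.
    fn new(bytes: &'a [u8]) -> Option<Self> {
        (bytes.len() >= Self::LEN).then_some(Self { bytes })
    }

    fn read_u64(&self, offset: usize) -> u64 {
        let mut buf = [0u8; 8];
        buf.copy_from_slice(&self.bytes[offset..offset + 8]);
        u64::from_le_bytes(buf)
    }

    fn response_time(&self) -> u64 {
        self.read_u64(0)
    }

    fn max_age(&self) -> u64 {
        self.read_u64(8)
    }

    /// Freshness is decided from the borrowed bytes alone.
    fn is_fresh(&self, now: u64) -> bool {
        now.saturating_sub(self.response_time()) < self.max_age()
    }
}

/// Writes the hypothetical layout, standing in for serialization to the cache.
fn encode(response_time: u64, max_age: u64) -> Vec<u8> {
    let mut out = Vec::with_capacity(ArchivedPolicyView::LEN);
    out.extend_from_slice(&response_time.to_le_bytes());
    out.extend_from_slice(&max_age.to_le_bytes());
    out
}

fn main() {
    let cached = encode(1_000, 600);
    let policy = ArchivedPolicyView::new(&cached).expect("buffer too short");
    println!("fresh at t=1200: {}", policy.is_fresh(1_200));
    println!("fresh at t=1700: {}", policy.is_fresh(1_700));
}
```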

Unfortunately, this PR does not fully realize the dream that is

zero-copy deserialization. Namely, while a `CachedClient` can now

provide an `OwnedArchive<SimpleMetadata>`, the rest of our code

doesn't really make use of it. Indeed, as soon as we go to build a

`VersionMap`, we eagerly convert our archived metadata into an owned

`SimpleMetadata` via deserialization (that *isn't* zero-copy). After

this change, a lot of the work now shifts to `rkyv` deserialization

and `VersionMap` construction. More precisely, the main thing we drop

here is `CachePolicy` deserialization (which is now truly zero-copy)

and the parsing of the MessagePack format for `SimpleMetadata`. But we

are still paying for deserialization. We're just paying for it in a

different place.

This PR does seem to bring a speed-up, but it is somewhat underwhelming.

My measurements have been pretty noisy, but I get a 1.1x speedup fairly

often:

```

$ hyperfine -w5 "puffin-main pip compile --cache-dir ~/astral/tmp/cache-main ~/astral/tmp/reqs/home-assistant-reduced.in -o /dev/null" "puffin-test pip compile --cache-dir ~/astral/tmp/cache-test ~/astral/tmp/reqs/home-assistant-reduced.in -o /dev/null"

Benchmark 1: puffin-main pip compile --cache-dir ~/astral/tmp/cache-main ~/astral/tmp/reqs/home-assistant-reduced.in -o /dev/null

Time (mean ± σ): 164.4 ms ± 18.8 ms [User: 427.1 ms, System: 348.6 ms]

Range (min … max): 131.1 ms … 190.5 ms 18 runs

Benchmark 2: puffin-test pip compile --cache-dir ~/astral/tmp/cache-test ~/astral/tmp/reqs/home-assistant-reduced.in -o /dev/null

Time (mean ± σ): 148.3 ms ± 10.2 ms [User: 357.1 ms, System: 319.4 ms]

Range (min … max): 136.8 ms … 184.4 ms 19 runs

Summary

puffin-test pip compile --cache-dir ~/astral/tmp/cache-test ~/astral/tmp/reqs/home-assistant-reduced.in -o /dev/null ran

1.11 ± 0.15 times faster than puffin-main pip compile --cache-dir ~/astral/tmp/cache-main ~/astral/tmp/reqs/home-assistant-reduced.in -o /dev/null

```
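
For reference, the `1.11 ± 0.15` figure can be reproduced from the two means and standard deviations above. The sketch below assumes the uncertainty comes from standard first-order error propagation for a ratio of two independent measurements; the function name `relative_speed` is made up for this example.

```rust
/// Ratio of two mean times and its uncertainty, assuming first-order
/// error propagation for a quotient of independent measurements.
/// Each argument is `(mean, standard deviation)` in the same unit.
fn relative_speed(slow: (f64, f64), fast: (f64, f64)) -> (f64, f64) {
    let (slow_mean, slow_sd) = slow;
    let (fast_mean, fast_sd) = fast;
    let ratio = slow_mean / fast_mean;
    // Relative errors add in quadrature for a ratio.
    let relative_error =
        ((slow_sd / slow_mean).powi(2) + (fast_sd / fast_mean).powi(2)).sqrt();
    (ratio, ratio * relative_error)
}

fn main() {
    // Values taken from the benchmark output above (milliseconds).
    let (ratio, err) = relative_speed((164.4, 18.8), (148.3, 10.2));
    println!("{ratio:.2} ± {err:.2}"); // prints "1.11 ± 0.15"
}
```

The uncertainty is larger than the gain itself (0.15 vs. 0.11), which is consistent with the measurements being noisy.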

One downside is that this does increase cache size (`rkyv`'s

serialization format is not as compact as MessagePack). On-disk size

increases by about 1.8x for our `simple-v0` cache.

```

$ sort-filesize cache-main

4.0K cache-main/CACHEDIR.TAG

4.0K cache-main/.gitignore

8.0K cache-main/interpreter-v0

8.7M cache-main/wheels-v0

18M cache-main/archive-v0

59M cache-main/simple-v0

109M cache-main/built-wheels-v0

193M cache-main

193M total

$ sort-filesize cache-test

4.0K cache-test/CACHEDIR.TAG

4.0K cache-test/.gitignore

8.0K cache-test/interpreter-v0

8.7M cache-test/wheels-v0

18M cache-test/archive-v0

107M cache-test/simple-v0

109M cache-test/built-wheels-v0

242M cache-test

242M total

```

Also, while I initially intended to do a simplistic implementation of

HTTP cache semantics, I found that everything was somewhat

inter-connected. I could have written code that _specifically_ only worked

with the present behavior of PyPI, but then it would need to be special

cased and everything else would need to continue to use

`http-cache-semantics`. By implementing what we need based on what Puffin

actually is (which is still less than what `http-cache-semantics` does),

we can avoid special casing and use zero-copy deserialization for our

cache policy in _all_ cases.

Previously, whenever we encountered a missing package we would throw an

error without information about why the package was requested. This

meant that if a transitive dependency required a missing package, the

user would have no idea why it was even selected. Here, we track

`NotFound` and `NoIndex` errors as `NoVersions` incompatibilities with

an attached reason. Improves our test coverage for `--no-index` without

`--find-links`.
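
A minimal sketch of the idea, assuming simplified types: a failed lookup becomes a "no versions" record that carries its reason, which can then be combined with the dependency edge that led to it. `Reason`, `NoVersions`, `check`, `explain`, and the message wording are all hypothetical and are not the resolver's actual types or output.

```rust
use std::fmt;

/// Hypothetical reasons a package has no usable versions.
#[derive(Debug, Clone, Copy, PartialEq)]
enum Reason {
    NotFound,
    NoIndex,
}

/// Hypothetical "no versions" incompatibility carrying its reason.
#[derive(Debug, PartialEq)]
struct NoVersions {
    package: String,
    reason: Reason,
}

impl fmt::Display for NoVersions {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self.reason {
            Reason::NotFound => write!(f, "{} was not found in the index", self.package),
            Reason::NoIndex => write!(f, "{} was not found and no index is in use", self.package),
        }
    }
}

/// Looks up a package; a miss becomes an incompatibility instead of a hard error.
/// `index` is `None` when no index is configured.
fn check(package: &str, index: Option<&[&str]>) -> Option<NoVersions> {
    let reason = match index {
        None => Reason::NoIndex,
        Some(known) if known.contains(&package) => return None,
        Some(_) => Reason::NotFound,
    };
    Some(NoVersions { package: package.to_string(), reason })
}

/// Combines the incompatibility with the dependency edge that requested it.
fn explain(dependent: &str, incompatibility: &NoVersions) -> String {
    format!(
        "Because {incompatibility} and {dependent} depends on {}, {dependent} cannot be used.",
        incompatibility.package
    )
}

fn main() {
    let index: &[&str] = &["flask", "click"];
    if let Some(missing) = check("foo", Some(index)) {
        println!("{}", explain("bar", &missing));
    }
}
```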

The

[snapshots](https://github.com/astral-sh/puffin/pull/1241/files#diff-3eea1658f165476252f1f061d0aa9f915aabdceafac21611cdf45019447f60ec)

show a nice improvement.

I think this will also enable backtracking to another version if some

version of a transitive dependency has a missing dependency. I'll write a

scenario for that next.

Requires https://github.com/zanieb/pubgrub/pull/22

## Summary

Previously, we were blocking operations that could run in parallel. We

would send requests through our main requests channel, but not yield, so

that the receiver could only start processing requests much later than

necessary. We solve this by switching to the async

`tokio::sync::mpsc::channel`, where `send` is an async function that

yields.

Due to the increased parallelism, cache deserialization and the

conversion from simple API request to version map became bottlenecks, so

I moved them to `spawn_blocking`. Together these result in a 30-60%

speedup for larger warm cache resolution. Small cases such as black

already resolve in 5.7 ms on my machine, so there's no speedup to be

gained; refresh and no-cache runs were too noisy to get signal from.

Note for the future: Revisit the bounded channel if we want to produce

requests from `process_request`, too, (this would be good for

prefetching) to avoid deadlocks.
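
The deadlock concern can be sketched with the standard library's bounded channel as a stand-in. This is an analogy, not the tokio code: `std::sync::mpsc::sync_channel` blocks where the async channel would await, and `enqueue_follow_up` is a hypothetical helper. If the only consumer tries to push into a full buffer with a waiting send, nobody is left to drain it.

```rust
use std::sync::mpsc::{sync_channel, SyncSender, TrySendError};

/// Tries to enqueue a follow-up request from inside the consumer.
/// Hands the item back if the bounded buffer is full (or the channel is closed)
/// instead of waiting for space that only the caller itself could free.
fn enqueue_follow_up(tx: &SyncSender<u32>, item: u32) -> Result<(), u32> {
    match tx.try_send(item) {
        Ok(()) => Ok(()),
        Err(TrySendError::Full(item)) | Err(TrySendError::Disconnected(item)) => Err(item),
    }
}

fn main() {
    // Capacity 1: one request fits in the buffer.
    let (tx, rx) = sync_channel::<u32>(1);
    tx.send(1).unwrap(); // the buffer is now full

    // The consumer (this thread, the only receiver) wants to queue a prefetch.
    // A blocking `tx.send(2)` here would never return, because the only code
    // that could free a slot is the code that is waiting.
    assert_eq!(enqueue_follow_up(&tx, 2), Err(2));

    // After the consumer drains one request, there is room again.
    assert_eq!(rx.recv().unwrap(), 1);
    assert_eq!(enqueue_follow_up(&tx, 2), Ok(()));
    println!("follow-up queued after draining one request");
}
```

Options if this comes up include a non-waiting send as above, a separate queue for consumer-produced requests, or an unbounded channel.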

## Details

We can look at the behavior change through the spans:

```

RUST_LOG=puffin=info TRACING_DURATIONS_FILE=target/traces/jupyter-warm-branch.ndjson cargo run --features tracing-durations-export --bin puffin-dev --profile profiling -- resolve jupyter 2> /dev/null

```

Below, you can see how on main, we have discrete phases: All (cached)

simple api requests in parallel, then all (cached) metadata requests in

parallel, repeat until done. The solver is mostly waiting until it has

its version map from the simple API query to be able to choose a

version. The main thread is blocked by process requests.

In the PR branch, the simple API requests succeed much earlier,

allowing the solver to advance and also to schedule more prefetching.

Due to that, `parse_cache` and `from_metadata` became bottlenecks, so I

moved them off the main thread (green color, and their spans can now

overlap because they can run on multiple threads in parallel). The main