Add support for declared types to the semantic index. This involves a

lot of renaming to clarify the distinction between bindings and

declarations. The Definition (or more specifically, the DefinitionKind)

becomes responsible for determining which definitions are bindings,

which are declarations, and which are both, and the symbol table

building is refactored a bit so that the `IS_BOUND` (renamed from

`IS_DEFINED` for consistent terminology) flag is always set when a

binding is added, rather than being set separately (and requiring us to

ensure it is set properly).

The `SymbolState` is split into two parts, `SymbolBindings` and

`SymbolDeclarations`, because we need to store live bindings for every

declaration and live declarations for every binding; the split lets us

do this without storing more than we need.

The massive doc comment in `use_def.rs` is updated to reflect bindings

vs declarations.

The `UseDefMap` gains some new APIs which are allow-unused for now,

since this PR doesn't yet update type inference to take declarations

into account.

## Summary

Follow-up from #13268, this PR updates the test case to use

`assert_snapshot` now that the output is limited to only include the

rules with diagnostics.

## Test Plan

`cargo insta test`

Add `::is_empty` and `::union` methods to the `BitSet` implementation.

Allowing unused for now, until these methods become used later with the

declared-types implementation.

---------

Co-authored-by: Alex Waygood <Alex.Waygood@Gmail.com>

These are quite incomplete, but I needed to start stubbing them out in

order to build and test declared-types.

Allowing unused for now, until they are used later in the declared-types

PR.

---------

Co-authored-by: Alex Waygood <Alex.Waygood@Gmail.com>

## Summary

This PR adds a new `Type` variant called `TupleType` which is used for

heterogeneous elements.

### Display notes

* For an empty tuple, I'm using `tuple[()]` as described in the docs:

https://docs.python.org/3/library/typing.html#annotating-tuples

* For nested elements, it'll use the literal type instead of builtin

type unlike Pyright which does `tuple[Literal[1], tuple[int, int]]`

instead of `tuple[Literal[1], tuple[Literal[2], Literal[3]]]`. Also,

mypy would give `tuple[builtins.int, builtins.int]` instead of

`tuple[Literal[1], Literal[2]]`

## Test Plan

Update test case to account for the display change and add cases for

multiple elements and nested tuple elements.

---------

Co-authored-by: Alex Waygood <Alex.Waygood@Gmail.com>

Co-authored-by: Carl Meyer <carl@astral.sh>

## Summary

This PR adds support for control flow for match statement.

It also adds the necessary infrastructure required for narrowing

constraints in case blocks and implements the logic for

`PatternMatchSingleton` which is either `None` / `True` / `False`. Even

after this the inferred type doesn't get simplified completely, there's

a TODO for that in the test code.

## Test Plan

Add test cases for control flow for (a) when there's a wildcard pattern

and (b) when there isn't. There's also a test case to verify the

narrowing logic.

---------

Co-authored-by: Carl Meyer <carl@astral.sh>

When a type of the form `Literal["..."]` would be constructed with too

large of a string, this PR converts it to `LiteralString` instead.

We also extend inference for binary operations to include the case where

one of the operands is `LiteralString`.

Closes#13224

Pull the tests from `types.rs` into `infer.rs`.

All of these are integration tests with the same basic form: create a

code sample, run type inference or check on it, and make some assertions

about types and/or diagnostics. These are the sort of tests we will want

to move into a test framework with a low-boilerplate custom textual

format. In the meantime, having them together (and more importantly,

their helper utilities together) means that it's easy to keep tests for

related language features together (iterable tests with other iterable

tests, callable tests with other callable tests), without an artificial

split based on tests which test diagnostics vs tests which test

inference. And it allows a single test to more easily test both

diagnostics and inference. (Ultimately in the test framework, they will

likely all test diagnostics, just in some cases the diagnostics will

come from `reveal_type()`.)

My plan for handling declared types is to introduce a `Declaration` in

addition to `Definition`. A `Declaration` is an annotation of a name

with a type; a `Definition` is an actual runtime assignment of a value

to a name. A few things (an annotated function parameter, an

annotated-assignment with an RHS) are both a `Definition` and a

`Declaration`.

This more cleanly separates type inference (only cares about

`Definition`) from declared types (only impacted by a `Declaration`),

and I think it will work out better than trying to squeeze everything

into `Definition`. One of the tests in this PR

(`annotation_only_assignment_transparent_to_local_inference`)

demonstrates one reason why. The statement `x: int` should have no

effect on local inference of the type of `x`; whatever the locally

inferred type of `x` was before `x: int` should still be the inferred

type after `x: int`. This is actually quite hard to do if `x: int` is

considered a `Definition`, because a core assumption of the use-def map

is that a `Definition` replaces the previous value. To achieve this

would require some hackery to effectively treat `x: int` sort of as if

it were `x: int = x`, but it's not really even equivalent to that, so

this approach gets quite ugly.

As a first step in this plan, this PR stops treating AnnAssign with no

RHS as a `Definition`, which fixes behavior in a couple added tests.

This actually makes things temporarily worse for the ellipsis-type test,

since it is defined in typeshed only using annotated assignments with no

RHS. This will be fixed properly by the upcoming addition of

declarations, which should also treat a declared type as sufficient to

import a name, at least from a stub.

Initially I had deferred annotation name lookups reuse the "public

symbol type", since that gives the correct "from end of scope" view of

reaching definitions that we want. But there is a key difference; public

symbol types are based only on definitions in the queried scope (or

"name in the given namespace" in runtime terms), they don't ever look up

a name in nonlocal/global/builtin scopes. Deferred annotation resolution

should do this lookup.

Add a test, and fix deferred name resolution to support

nonlocal/global/builtin names.

Fixes#13176

## Summary

Part of #13085, this PR updates the comprehension definition to handle

multiple targets.

## Test Plan

Update existing semantic index test case for comprehension with multiple

targets. Running corpus tests shouldn't panic.

Add support for non-local name lookups.

There's one TODO around annotated assignments without a RHS; these need

a fair amount of attention, which they'll get in an upcoming PR about

declared vs inferred types.

Fixes#11663

Test coverage for #13131 wasn't as good as I thought it was, because

although we infer a lot of types in stubs in typeshed, we don't check

typeshed, and therefore we don't do scope-level inference and pull all

types for a scope. So we didn't really have good test coverage for

scope-level inference in a stub. And because of this, I got the code for

supporting that wrong, meaning that if we did scope-level inference with

deferred types, we'd end up never populating the deferred types in the

scope's `TypeInference`, which causes panics like #13160.

Here I both add test coverage by running the corpus tests both as `.py`

and as `.pyi` (which reveals the panic), and I fix the code to support

deferred types in scope inference.

This also revealed a problem with deferred types in generic functions,

which effectively span two scopes. That problem will require a bit more

thought, and I don't want to block this PR on it, so for now I just

don't defer annotations on generic functions.

Fixes#13160.

## Summary

Follow-up to #13147, this PR implements the `AstNode` for `Identifier`.

This makes it easier to create the `NodeKey` in red knot because it uses

a generic method to construct the key from `AnyNodeRef` and is important

for definitions that are created only on identifiers instead of

`ExprName`.

## Test Plan

`cargo test` and `cargo clippy`

## Summary

This PR adds definition for match patterns.

## Test Plan

Update the existing test case for match statement symbols to verify that

the definitions are added as well.

This PR contains the following updates:

| Package | Type | Update | Change |

|---|---|---|---|

| [quick-junit](https://redirect.github.com/nextest-rs/quick-junit) |

workspace.dependencies | minor | `0.4.0` -> `0.5.0` |

---

### Release Notes

<details>

<summary>nextest-rs/quick-junit (quick-junit)</summary>

###

[`v0.5.0`](https://redirect.github.com/nextest-rs/quick-junit/blob/HEAD/CHANGELOG.md#050---2024-09-01)

[Compare

Source](https://redirect.github.com/nextest-rs/quick-junit/compare/quick-junit-0.4.0...quick-junit-0.5.0)

##### Changed

- The `Output` type, which strips invalid XML characters from a string,

has been renamed to

`XmlString`.

- All internal storage now uses `XmlString` rather than `String`.

</details>

---

### Configuration

📅 **Schedule**: Branch creation - "before 4am on Monday" (UTC),

Automerge - At any time (no schedule defined).

🚦 **Automerge**: Disabled by config. Please merge this manually once you

are satisfied.

♻ **Rebasing**: Whenever PR becomes conflicted, or you tick the

rebase/retry checkbox.

🔕 **Ignore**: Close this PR and you won't be reminded about this update

again.

---

- [ ] <!-- rebase-check -->If you want to rebase/retry this PR, check

this box

---

This PR was generated by [Mend Renovate](https://mend.io/renovate/).

View the [repository job

log](https://developer.mend.io/github/astral-sh/ruff).

<!--renovate-debug:eyJjcmVhdGVkSW5WZXIiOiIzOC41Ni4wIiwidXBkYXRlZEluVmVyIjoiMzguNTkuMiIsInRhcmdldEJyYW5jaCI6Im1haW4iLCJsYWJlbHMiOlsiaW50ZXJuYWwiXX0=-->

---------

Co-authored-by: renovate[bot] <29139614+renovate[bot]@users.noreply.github.com>

Co-authored-by: Dhruv Manilawala <dhruvmanila@gmail.com>

## Summary

The `SequenceIndexVisitor` currently does not recurse into

subexpressions of subscripts when searching for subscript accesses that

would trigger this rule. That means that we don't currently detect

violations of the rule on snippets like this:

```py

data = {"a": 1, "b": 2}

column_names = ["a", "b"]

for index, column_name in enumerate(column_names):

_ = data[column_names[index]]

```

Fixes#13183

## Test Plan

`cargo test -p ruff_linter`

The `UnionBuilder` builds `builtins.bool` when handed `Literal[True]`

and `Literal[False]`.

Caveat: If the builtins module is unfindable somehow, the builder falls

back to the union type of these two literals.

First task from #12694

---------

Co-authored-by: Carl Meyer <carl@astral.sh>

## Summary

Adds basic support for inferring the type resulting from a call

expression. This only works for the *result* of call expressions; it

performs no inference on parameters. It also intentionally does nothing

with class instantiation, `__call__` implementors, or lambdas.

## Test Plan

Adds a test that it infers the right thing!

---------

Co-authored-by: Carl Meyer <carl@astral.sh>

## Summary

- Introduce methods for inferring annotation and type expressions.

- Correctly infer explicit return types from functions where they are

simple names that can be resolved in scope.

Contributes to #12701 by way of helping unlock call expressions (this

does not remotely finish that, as it stands, but it gets us moving that

direction).

## Test Plan

Added a test for function return types which use the name form of an

annotation expression, since this is aiming toward call expressions.

When we extend this to working for other annotation and type expression

positions, we should add explicit tests for those as well.

---------

Co-authored-by: Alex Waygood <alex.waygood@gmail.com>

Co-authored-by: Carl Meyer <carl@astral.sh>

## Summary

Extends deletions for RUF100, deleting trailing text from noqa

directives, while preserving upcoming comments on the same line if any.

In cases where it deletes a comment up to another comment on the same

line, the whitespace between them is now shown to be in the autofix in

the diagnostic as well. Leading whitespace before the removed comment is

not, though.

Fixes#12251

## Test Plan

`cargo test`

Prototype deferred evaluation of type expressions by deferring

evaluation of class bases in a stub file. This allows self-referential

class definitions, as occur with the definition of `str` in typeshed

(which inherits `Sequence[str]`).

---------

Co-authored-by: Alex Waygood <Alex.Waygood@Gmail.com>

## Summary

Just what it says on the tin: adds basic `EllipsisType` inference for

any time `...` appears in the AST.

## Test Plan

Test that `x = ...` produces exactly what we would expect.

---------

Co-authored-by: Carl Meyer <carl@oddbird.net>

## Summary

The resulting type when multiplying a string literal by an integer

literal is one of two types:

- `StringLiteral`, in the case where it is a reasonably small resulting

string (arbitrarily bounded here to 4096 bytes, roughly a page on many

operating systems), including the fully expanded string.

- `LiteralString`, matching Pyright etc., for strings larger than that.

Additionally:

- Switch to using `Box<str>` instead of `String` for the internal value

of `StringLiteral`, saving some non-trivial byte overhead (and keeping

the total number of allocations the same).

- Be clearer and more accurate about which types we ought to defer to in

`StringLiteral` and `LiteralString` member lookup.

## Test Plan

Added a test case covering multiplication times integers: positive,

negative, zero, and in and out of bounds.

---------

Co-authored-by: Alex Waygood <alex.waygood@gmail.com>

Co-authored-by: Carl Meyer <carl@astral.sh>

## Summary

This fixes the outstanding TODO and make it easier to work with new

cases. (Tidy first, *then* implement, basically!)

## Test Plan

After making this change all the existing tests still pass. A classic

refactor win. 🎉

# Summary

Add support for the first unary operator: negating integer literals. The

resulting type is another integer literal, with the value being the

negated value of the literal. All other types continue to return

`Type::Unknown` for the present, but this is designed to make it easy to

extend easily with other combinations of operator and operand.

Contributes to #12701.

## Test Plan

Add tests with basic negation, including of very large integers and

double negation.

## Summary

Introduce a `StringLiteralType` with corresponding `Display` type and a

relatively basic test that the resulting representation is as expected.

Note: we currently always allocate for `StringLiteral` types. This may

end up being a perf issue later, at which point we may want to look at

other ways of representing `value` here, i.e. with some kind of smarter

string structure which can reuse types. That is most likely to show up

with e.g. concatenation.

Contributes to #12701.

## Test Plan

Added a test for individual strings with both single and double quotes

as well as concatenated strings with both forms.

## Summary

Now that Ruff provides a formatter, there is no need to rely on Black to

check that the docs are formatted correctly in

`check_docs_formatted.py`. This PR swaps out Black for the Ruff

formatter and updates inconsistencies between the two.

This PR will be a precursor to another PR

([branch](https://github.com/calumy/ruff/tree/format-pyi-in-docs)),

updating the `check_docs_formatted.py` script to check for pyi files,

fixing #11568.

## Test Plan

- CI to check that the docs are formatted correctly using the updated

script.

This PR has the `SemanticIndexBuilder` visit function definition

annotations before adding the function symbol/name to the builder.

For example, the following snippet no longer causes a panic:

```python

def bool(x) -> bool:

Return True

```

Note: This fix changes the ordering of the global symbol table.

Closes#13069

## Summary

This PR adds symbols introduced by `for` loops to red-knot:

- `x` in `for x in range(10): pass`

- `x` and `y` in `for x, y in d.items(): pass`

- `a`, `b`, `c` and `d` in `for [((a,), b), (c, d)] in foo: pass`

## Test Plan

Several tests added, and the assertion in the benchmarks has been

updated.

---------

Co-authored-by: Micha Reiser <micha@reiser.io>

## Summary

This PR simplifies the virtual file support in the red knot core,

specifically:

* Update `File::add_virtual_file` method to `File::virtual_file` which

will always create a new virtual file and override the existing entry in

the lookup table

* Add `VirtualFile` which is a wrapper around `File` and provides

methods to increment the file revision / close the virtual file

* Add a new `File::try_virtual_file` to lookup the `VirtualFile` from

`Files`

* Add `File::sync_virtual_path` which takes in the `SystemVirtualPath`,

looks up the `VirtualFile` for it and calls the `sync` method to

increment the file revision

* Removes the `virtual_path_metadata` method on `System` trait

## Test Plan

- [x] Make sure the existing red knot tests pass

- [x] Updated code works well with the LSP

## Summary

This PR adds support for `textDocument/didChange` notification.

There seems to be a bug (probably in Salsa) where it panics with:

```

2024-08-22 15:33:38.802 [info] panicked at /Users/dhruv/.cargo/git/checkouts/salsa-61760caba2b17ca5/f608ff8/src/tracked_struct.rs:377:9:

two concurrent writers to Id(4800), should not be possible

```

## Test Plan

https://github.com/user-attachments/assets/81055feb-ba8e-4acf-ad2f-94084a3efead

## Summary

This PR adds basic support for files outside of any workspace in the red

knot server.

This also limits the red knot server to only work in a single workspace.

The server will not start if there are multiple workspaces.

## Test Plan

https://github.com/user-attachments/assets/de601387-0ad5-433c-9d2c-7b6ae5137654

## Summary

This PR adds the `bytes` type to red-knot:

- Added the `bytes` type

- Added support for bytes literals

- Support for the `+` operator

Improves on #12701

Big TODO on supporting and normalizing r-prefixed bytestrings

(`rb"hello\n"`)

## Test Plan

Added a test for a bytes literals, concatenation, and corner values

The `SemanticIndexBuilder` was causing a cycle in a salsa query by

attempting to resolve the target before the value in a named expression

(e.g. `x := x+1`). This PR swaps the order, avoiding a panic.

Closes#13012.

## Summary

This PR removes notebook sync support from server capabilities because

it isn't tested, it'll be added back once we actually add full support

for notebook.

## Summary

This PR adds symbols and definitions introduced by `with` statements.

The symbols and definitions are introduced for each with item. The type

inference is updated to call the definition region type inference

instead.

## Test Plan

Add test case to check for symbol table and definitions.

## Summary

This PR adds symbols introduced by `match` statements.

There are three patterns that introduces new symbols:

* `as` pattern

* Sequence pattern

* Mapping pattern

The recursive nature of the visitor makes sure that all symbols are

added.

## Test Plan

Add test case for all types of patterns that introduces a symbol.

## Summary

This PR adds definition for augmented assignment. This is similar to

annotated assignment in terms of implementation.

An augmented assignment should also record a use of the variable but

that's a TODO for now.

## Test Plan

Add test case to validate that a definition is added.

## Summary

As suggested by @MichaReiser in

https://github.com/astral-sh/ruff/pull/12886#pullrequestreview-2237679793,

this adds an exemption to `RUF027` for `fastAPI` paths, which require

template strings rather than eagerly evaluated f-strings.

## Test Plan

I added a fixture that causes Ruff to emit a false-positive error on

`main` but no longer does with this PR.

Extend the `UseDefMap` to also track which constraints (provided by e.g.

`if` tests) apply to each visible definition.

Uses a custom `BitSet` and `BitSetArray` to track which constraints

apply to which definitions, while keeping data inline as much as

possible.

## Summary

This PR is a pure refactor to simplify some of the logic for `RUF027`.

This will make it easier to file some followup PRs to help reduce the

false positives from this rule. I'm separating the refactor out into a

separate PR so it's easier to review, and so I can double-check from the

ecosystem report that this doesn't have any user-facing impact.

## Test Plan

`cargo test -p ruff_linter --lib`

## Summary

This PR adds support for adding symbols and definitions for function and

lambda parameters to the semantic index.

### Notes

* The default expression of a parameter is evaluated in the enclosing

scope (not the type parameter or function scope).

* The annotation expression of a parameter is evaluated in the type

parameter scope if they're present other in the enclosing scope.

* The symbols and definitions are added in the function parameter scope.

### Type Inference

There are two definitions `Parameter` and `ParameterWithDefault` and

their respective `*_definition` methods on the type inference builder.

These methods are preferred and are re-used when checking from a

different region.

## Test Plan

Add test case for validating that the parameters are defined in the

function / lambda scope.

### Benchmark update

Validated the difference in diagnostics for benchmark code between

`main` and this branch. All of them are either directly or indirectly

referencing one of the function parameters. The diff is in the PR description.

This adds the `fast-api-unused-path-parameter` lint rule, as described

in #12632.

I'm still pretty new to rust, so the code can probably be improved, feel

free to tell me if there's any changes i should make.

Also, i needed to add the `add_parameter` edit function, not sure if it

was in the scope of the PR or if i should've made another one.

If a builtin is conditionally shadowed by a global, we didn't correctly

fall back to builtins for the not-defined-in-globals path (see added

test for an example.)

List and set comprehensions using `async for` cannot be replaced with

underlying generators; this PR modifies C419 to skip such

comprehensions.

Closes#12891.

## Summary

Occasionally, we receive bug reports that imports in `src` directories

aren't correctly detected. The root of the problem is that we default to

`src = ["."]`, so users have to set `src = ["src"]` explicitly. This PR

extends the default to cover _both_ of them: `src = [".", "src"]`.

Closes https://github.com/astral-sh/ruff/issues/12454.

## Test Plan

I replicated the structure described in

https://github.com/astral-sh/ruff/issues/12453, and verified that the

imports were considered sorted, but that adding `src = ["."]` showed an

error.

## Summary

This PR adds very basic support for using the line / column information

from the diagnostic message. This makes it easier to validate

diagnostics in an editor as oppose to going through the diff one

diagnostic at a time and confirming it at the location.

## Summary

This PR adds a fallback logic for `is_python_notebook` to check the

`kernelspec.language` field.

Reference implementation in VS Code:

1c31e75898/extensions/ipynb/src/deserializers.ts (L20-L22)

It's also required for the kernel to provide the `language` they're

implementing based on

https://jupyter-client.readthedocs.io/en/stable/kernels.html#kernel-specs

reference although that's for the `kernel.json` file but is also

included in the notebook metadata.

Closes: #12281

## Test Plan

Add a test case for `is_python_notebook` and include the test notebook

for round trip validation.

The test notebook contains two cells, one is JavaScript (denoted via the

`vscode.languageId` metadata) and the other is Python (no metadata). The

notebook metadata only contains `kernelspec` and the `language_info` is

absent.

I also verified that this is a valid notebook by opening it in Jupyter

Lab, VS Code and using `nbformat` validator.

## Summary

This PR adds support for VS Code specific cell metadata to consider when

collecting valid code cells.

For context, Ruff only runs on valid code cells. These are the code

cells that doesn't contain cell magics. Previously, Ruff only used the

notebook's metadata to determine whether it's a Python notebook. But, in

VS Code, a notebook's preferred language might be Python but it could

still contain code cells for other languages. This can be determined

with the `metadata.vscode.languageId` field.

### References:

* https://code.visualstudio.com/docs/languages/identifiers

* e6c009a3d4/extensions/ipynb/src/serializers.ts (L104-L107)

*

e6c009a3d4/extensions/ipynb/src/serializers.ts (L117-L122)

This brings us one step closer to fixing #12281.

## Test Plan

Add test cases for `is_valid_python_code_cell` and an integration test

case which showcase running it end to end. The test notebook contains a

JavaScript code cell and a Python code cell.

## Summary

This PR fixes a bug in the semantic model where it would evaluate the

default parameter value in the type parameter scope. For example,

```py

def foo[T1: int](a = T1):

pass

```

Here, the `T1` in `a = T1` is undefined but Ruff doesn't flag it

(https://play.ruff.rs/ba2f7c2f-4da6-417e-aa2a-104aa63e6d5e).

The fix here is to evaluate the default parameter value in the

_enclosing_ scope instead.

## Test Plan

Add a test case which includes the above code under `F821`

(`undefined-name`) and validate the snapshot.

## Summary

See #12703. This only addresses the first bullet point, adding a space

after the comma in the suggested fix from list/tuple to string.

## Test Plan

Updated the snapshots and compared.

## Summary

This PR adds scope and definition for comprehension nodes. This includes

the following nodes:

* List comprehension

* Dictionary comprehension

* Set comprehension

* Generator expression

### Scope

Each expression here adds it's own scope with one caveat - the `iter`

expression of the first generator is part of the parent scope. For

example, in the following code snippet the `iter1` variable is evaluated

in the outer scope.

```py

[x for x in iter1]

```

> The iterable expression in the leftmost for clause is evaluated

directly in the enclosing scope and then passed as an argument to the

implicitly nested scope.

>

> Reference:

https://docs.python.org/3/reference/expressions.html#displays-for-lists-sets-and-dictionaries

There's another special case for assignment expressions:

> There is one special case: an assignment expression occurring in a

list, set or dict comprehension or in a generator expression (below

collectively referred to as “comprehensions”) binds the target in the

containing scope, honoring a nonlocal or global declaration for the

target in that scope, if one exists.

>

> Reference: https://peps.python.org/pep-0572/#scope-of-the-target

For example, in the following code snippet, the variables `a` and `b`

are available after the comprehension while `x` isn't:

```py

[a := 1 for x in range(2) if (b := 2)]

```

### Definition

Each comprehension node adds a single definition, the "target" variable

(`[_ for target in iter]`). This has been accounted for and a new

variant has been added to `DefinitionKind`.

### Type Inference

Currently, type inference is limited to a single scope. It doesn't

_enter_ in another scope to infer the types of the remaining expressions

of a node. To accommodate this, the type inference for a **scope**

requires new methods which _doesn't_ infer the type of the `iter`

expression of the leftmost outer generator (that's defined in the

enclosing scope).

The type inference for the scope region is split into two parts:

* `infer_generator_expression` (similarly for comprehensions) infers the

type of the `iter` expression of the leftmost outer generator

* `infer_generator_expression_scope` (similarly for comprehension)

infers the type of the remaining expressions except for the one

mentioned in the previous point

The type inference for the **definition** also needs to account for this

special case of leftmost generator. This is done by defining a `first`

boolean parameter which indicates whether this comprehension definition

occurs first in the enclosing expression.

## Test Plan

New test cases were added to validate multiple scenarios. Refer to the

documentation for each test case which explains what is being tested.

Make `cargo doc -p red_knot_python_semantic --document-private-items`

run warning-free. I'd still like to do this for all of ruff and start

enforcing it in CI (https://github.com/astral-sh/ruff/issues/12372) but

haven't gotten to it yet. But in the meantime I'm trying to maintain it

for at least `red_knot_python_semantic`, as it helps to ensure our doc

comments stay up to date.

A few of the comments I just removed or shortened, as their continued

relevance wasn't clear to me; please object in review if you think some

of them are important to keep!

Also remove a no-longer-needed `allow` attribute.

For type narrowing, we'll need intersections (since applying type

narrowing is just a type intersection.)

Add `IntersectionBuilder`, along with some tests for it and

`UnionBuilder` (renamed from `UnionTypeBuilder`).

We use smart builders to ensure that we always keep these types in

disjunctive normal form (DNF). That means that we never have deeply

nested trees of unions and intersections: unions flatten into unions,

intersections flatten into intersections, and intersections distribute

over unions, so the most complex tree we can ever have is a union of

intersections. We also never have a single-element union or a

single-positive-element intersection; these both just simplify to the

contained type.

Maintaining these invariants means that `UnionBuilder` doesn't

necessarily end up building a `Type::Union` (e.g. if you only add a

single type to the union, it'll just return that type instead), and

`IntersectionBuilder` doesn't necessarily build a `Type::Intersection`

(if you add a union to the intersection, we distribute the intersection

over that union, and `IntersectionBuilder` will end up returning a

`Type::Union` of intersections).

We also simplify intersections by ensuring that if a type and its

negation are both in an intersection, they simplify out. (In future this

should also respect subtyping, not just type identity, but we don't have

subtyping yet.) We do implement subtyping of `Never` as a special case

for now.

Most of this PR is unused for now until type narrowing lands; I'm just

breaking it out to reduce the review fatigue of a single massive PR.

## Summary

I'm not sure if this is useful but this is a hacky implementation to add

the filename and row / column numbers to the current Red Knot

diagnostics.

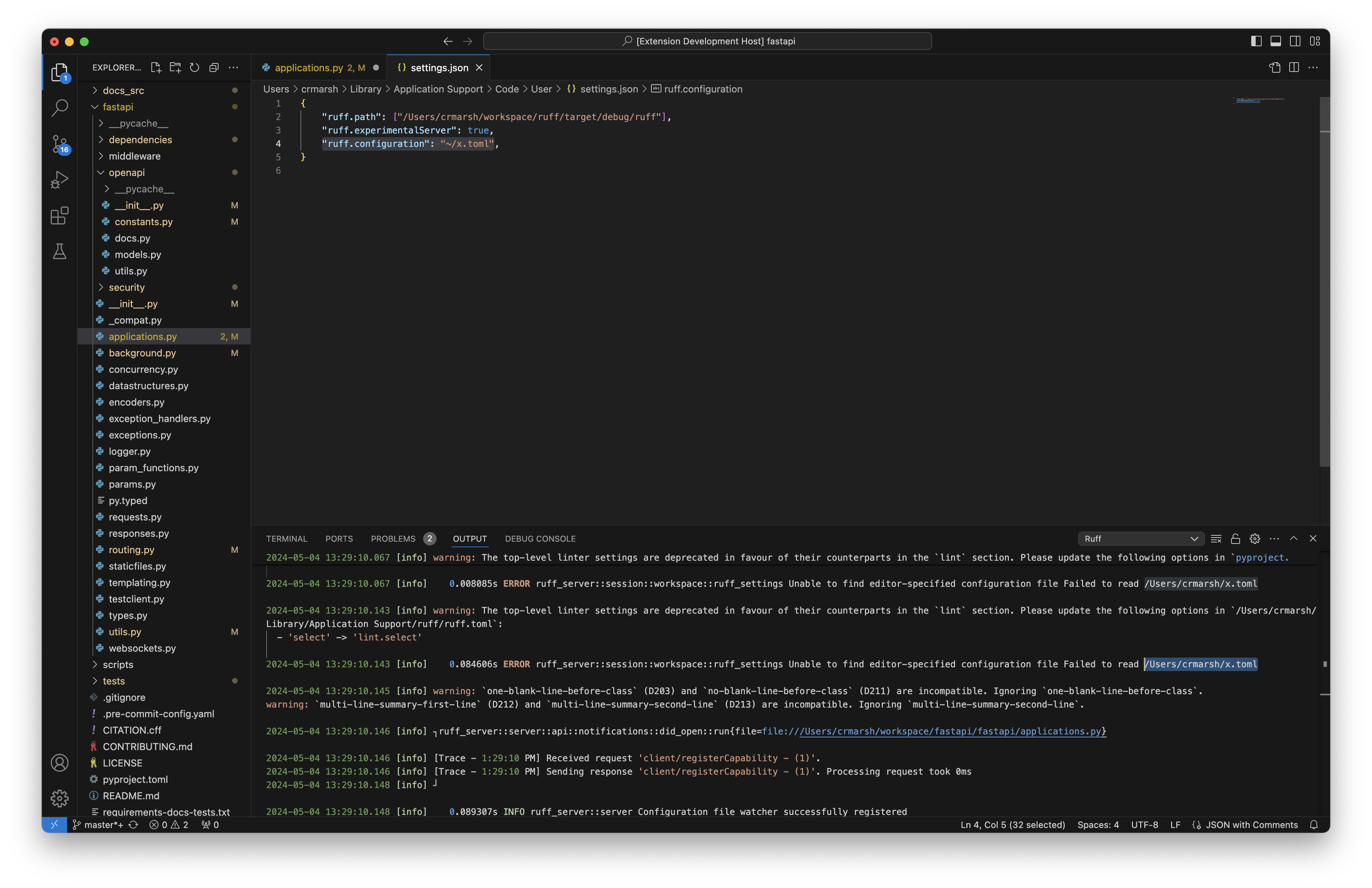

## Summary

Related to https://github.com/astral-sh/ruff-vscode/issues/571, this PR

updates the settings index builder to trace all the errors it

encountered. Without this, there's no way for user to know that

something failed and some of the capability might not work as expected.

For example, in the linked PR, the settings were invalid which means

notebooks weren't included and there were no log messages for it.

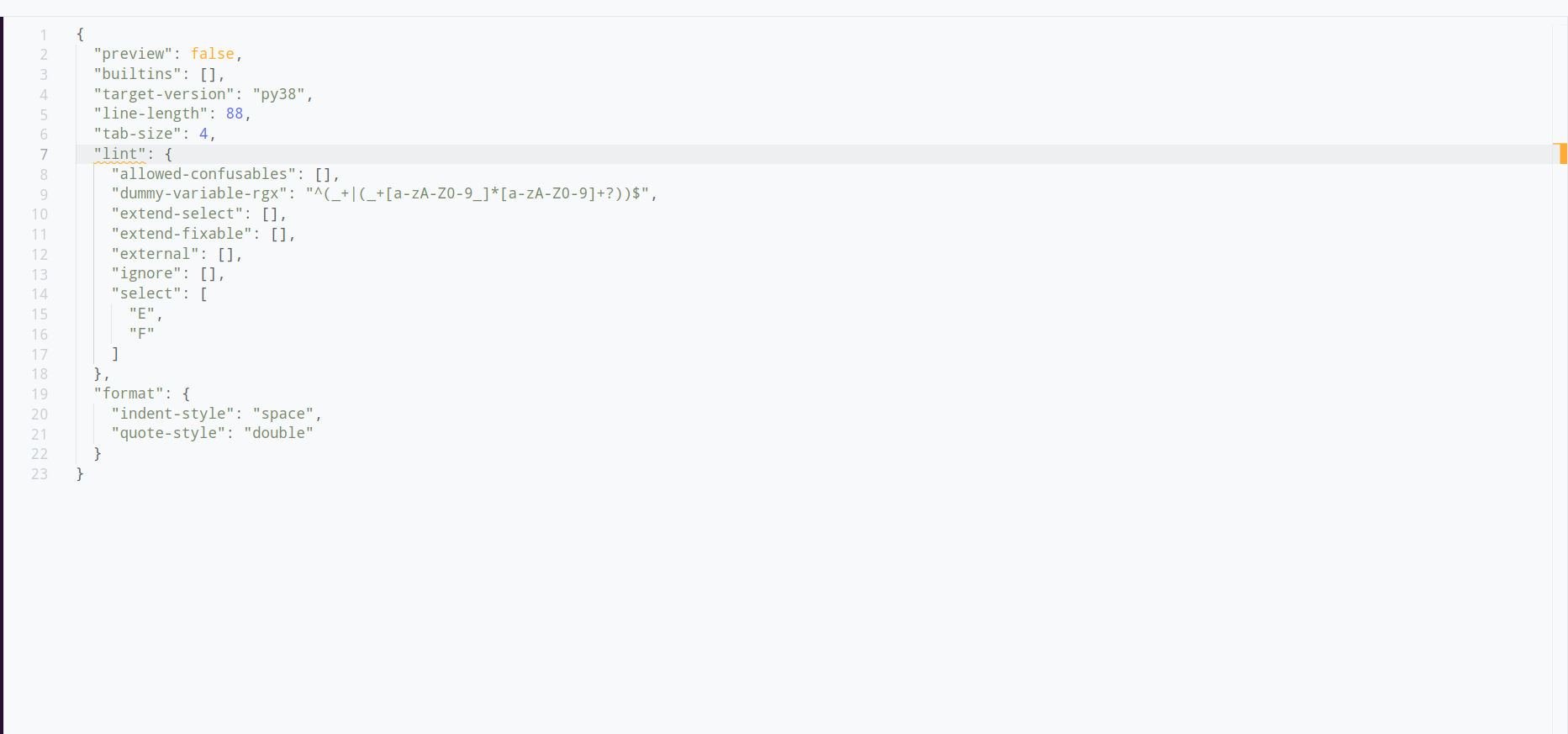

## Test Plan

Create an invalid `ruff.toml` file:

```toml

[tool.ruff]

extend-exclude = ["*.ipynb"]

```

Logs:

```

2024-08-12 18:33:09.873 [info] [Trace - 6:33:09 PM] 12.217043000s ERROR ruff:main ruff_server::session::index::ruff_settings: Failed to parse /Users/dhruv/playground/ruff/pyproject.toml

```

Notification Preview:

<img width="483" alt="Screenshot 2024-08-12 at 18 33 20"

src="https://github.com/user-attachments/assets/a4f303e5-f073-454f-bdcd-ba6af511e232">

Another way to trigger is to provide an invalid `cache-dir` value:

```toml

[tool.ruff]

cache-dir = "$UNKNOWN"

```

Same notification preview but different log message:

```

2024-08-12 18:41:37.571 [info] [Trace - 6:41:37 PM] 21.700112208s ERROR ThreadId(30) ruff_server::session::index::ruff_settings: Error while resolving settings from /Users/dhruv/playground/ruff/pyproject.toml: Invalid `cache-dir` value: error looking key 'UNKNOWN' up: environment variable not found

```

With multiple `pyproject.toml` file:

```

2024-08-12 18:41:15.887 [info] [Trace - 6:41:15 PM] 0.016636833s ERROR ThreadId(04) ruff_server::session::index::ruff_settings: Error while resolving settings from /Users/dhruv/playground/ruff/pyproject.toml: Invalid `cache-dir` value: error looking key 'UNKNOWN' up: environment variable not found

2024-08-12 18:41:15.888 [info] [Trace - 6:41:15 PM] 0.017378833s ERROR ThreadId(13) ruff_server::session::index::ruff_settings: Failed to parse /Users/dhruv/playground/ruff/tools/pyproject.toml

```

In most cases we should suggest a ternary operator, but there are three

edge cases where a binary operator is more appropriate.

Given an if-else block of the form

```python

if test:

target_var = body_value

else:

target_var = else_value

```

This PR updates the check for SIM108 to the following:

- If `test == body_value` and preview enabled, suggest to replace with

`target_var = test or else_value`

- If `test == not body_value` and preview enabled, suggest to replace

with `target_var = body_value and else_value`

- If `not test == body_value` and preview enabled, suggest to replace

with `target_var = body_value and else_value`

- Otherwise, suggest to replace with `target_var = body_value if test

else else_value`

Closes#12189.

## Summary

Adding parentheses to a tuple in a subscript with elements that include

slice expressions causes a syntax error. For example, `d[(1,2,:)]` is a

syntax error.

So, when `lint.ruff.parenthesize-tuple-in-subscript = true` and the

tuple includes a slice expression, we skip this check and fix.

Closes#12766.

> ~Builtins are also more efficient than `for` loops.~

Let's not promise performance because this code transformation does not

deliver.

Benchmark written by @dcbaker

> `any()` seems to be about 1/3 as fast (Python 3.11.9, NixOS):

```python

loop = 'abcdef'.split()

found = 'f'

nfound = 'g'

def test1():

for x in loop:

if x == found:

return True

return False

def test2():

return any(x == found for x in loop)

def test3():

for x in loop:

if x == nfound:

return True

return False

def test4():

return any(x == nfound for x in loop)

if __name__ == "__main__":

import timeit

print('for loop (found) :', timeit.timeit(test1))

print('for loop (not found):', timeit.timeit(test3))

print('any() (found) :', timeit.timeit(test2))

print('any() (not found) :', timeit.timeit(test4))

```

```

for loop (found) : 0.051076093994197436

for loop (not found): 0.04388196699437685

any() (found) : 0.15422860698890872

any() (not found) : 0.15568504799739458

```

I have retested with longer lists and on multiple Python versions with

similar results.

Implements the new fixable lint rule `RUF031` which checks for the use or omission of parentheses around tuples in subscripts, depending on the setting `lint.ruff.parenthesize-tuple-in-getitem`. By default, the use of parentheses is considered a violation.

## Summary

Follow-up from https://github.com/astral-sh/ruff/pull/12725, this is

just a small refactor to use a wrapper struct instead of type alias for

workspace settings index. This avoids the need to have the

`register_workspace_settings` as a static method on `Index` and instead

is a method on the new struct itself.

## Summary

This PR updates the server to ignore non-file workspace URL.

This is to avoid crashing the server if the URL scheme is not "file".

We'd still raise an error if the URL to file path conversion fails.

Also, as per the docs of

[`to_file_path`](https://docs.rs/url/2.5.2/url/struct.Url.html#method.to_file_path):

> Note: This does not actually check the URL’s scheme, and may give

nonsensical results for other schemes. It is the user’s responsibility

to check the URL’s scheme before calling this.

resolves: #12660

## Test Plan

I'm not sure how to test this locally but the change is small enough to

validate on its own.

## Summary

This PR updates the `red_knot` CLI to make the subcommand optional.

## Test Plan

Run the following commands:

* `cargo run --bin red_knot --

--current-directory=~/playground/ruff/type_inference` (no subcommand

requirement)

* `cargo run --bin red_knot -- server` (should start the server)

<!--

Thank you for contributing to Ruff! To help us out with reviewing,

please consider the following:

- Does this pull request include a summary of the change? (See below.)

- Does this pull request include a descriptive title?

- Does this pull request include references to any relevant issues?

-->

## Summary

Resolves#12636

Consider docstrings which begin with the word "Returns" as having

satisfactorily documented they're returns. For example

```python

def f():

"""Returns 1."""

return 1

```

is valid.

## Test Plan

Added example to test fixture.

---------

Co-authored-by: Dhruv Manilawala <dhruvmanila@gmail.com>

## Summary

Removes set comprehension as a violation for `sum` when checking `C419`,

because set comprehension may de-duplicate entries in a generator,

thereby modifying the value of the sum.

Closes#12690.

## Summary

Make it a violation of `C409` to call `tuple` with a list or set

comprehension, and

implement the (unsafe) fix of calling the `tuple` with the underlying

generator instead.

Closes#12648.

## Test Plan

Test fixture updated, cargo test, docs checked for updated description.

## Summary

Adds autofix for `RUF007`

## Test Plan

`cargo test`, however I get errors for `test resolver::tests::symlink

... FAILED` which seems to not be my fault

## Summary

Fixes#12630.

DOC501 and DOC502 now understand functions with constructs like this to

be explicitly raising `TypeError` (which should be documented in a

function's docstring):

```py

try:

foo():

except TypeError:

...

raise

```

I made an exception for `Exception` and `BaseException`, however.

Constructs like this are reasonably common, and I don't think anybody

would say that it's worth putting in the docstring that it raises "some

kind of generic exception":

```py

try:

foo()

except BaseException:

do_some_logging()

raise

```

## Test Plan

`cargo test -p ruff_linter --lib`

## Summary

Please see

https://github.com/astral-sh/ruff/pull/12605#discussion_r1699957443 for

a description of the issue.

They way I fixed it is to get the *last* timeout item in the `with`, and

if it's an `async with` and there are items after it, then don't trigger

the lint.

## Test Plan

Updated the fixture with some more cases.

Changes the red-knot benchmark to run on the stdlib "tomllib" library

(which is self-contained, four files, uses type annotations) instead of

on very small bits of handwritten code.

Also remove the `without_parse` benchmark: now that we are running on

real code that uses typeshed, we'd either have to pre-parse all of

typeshed (slow) or find some way to determine which typeshed modules

will be used by the benchmark (not feasible with reasonable complexity.)

## Test Plan

`cargo bench -p ruff_benchmark --bench red_knot`

## Summary

This PR separates the current `red_knot` crate into two crates:

1. `red_knot` - This will be similar to the `ruff` crate, it'll act as

the CLI crate

2. `red_knot_workspace` - This includes everything except for the CLI

functionality from the existing `red_knot` crate

Note that the code related to the file watcher is in

`red_knot_workspace` for now but might be required to extract it out in

the future.

The main motivation for this change is so that we can have a `red_knot

server` command. This makes it easier to test the server out without

making any changes in the VS Code extension. All we need is to specify

the `red_knot` executable path in `ruff.path` extension setting.

## Test Plan

- `cargo build`

- `cargo clippy --workspace --all-targets --all-features`

- `cargo shear --fix`

## Summary

There's still a problem here. Given:

```python

class Class():

pass

# comment

# another comment

a = 1

```

We only add one newline before `a = 1` on the first pass, because

`max_precedling_blank_lines` is 1... We then add the second newline on

the second pass, so it ends up in the right state, but the logic is

clearly wonky.

Closes https://github.com/astral-sh/ruff/issues/11508.

I hit this `todo!` trying to run type inference over some real modules.

Since it's a one-liner to implement it, I just did that rather than

changing to `Type::Unknown`.

## Summary

@zanieb noticed while we were discussing #12595 that this flag is now

unnecessary, so remove it and the flags which reference it.

## Test Plan

Question for maintainers: is there a test to add *or* remove here? (I’ve

opened this as a draft PR with that in view!)

## Summary

This pull request adds support for logging via `$/logTrace` RPC

messages. It also enables that code path for when a client is Zed editor

or VS Code (as there's no way for us to generically tell whether a client prefers

`$/logTrace` over stderr.

Related to: #12523

## Test Plan

I've built Ruff from this branch and tested it manually with Zed.

---------

Co-authored-by: Dhruv Manilawala <dhruvmanila@gmail.com>

## Summary

<!-- What's the purpose of the change? What does it do, and why? -->

Extend `flake8-builtins` to imports, lambda-arguments, and modules to be

consistent with original checker

[flake8_builtins](https://github.com/gforcada/flake8-builtins/blob/main/flake8_builtins.py).

closes#12540

## Details

- Implement builtin-import-shadowing (A004)

- Stop tracking imports shadowing in builtin-variable-shadowing (A001)

in preview mode.

- Implement builtin-lambda-argument-shadowing (A005)

- Implement builtin-module-shadowing (A006)

- Add new option `linter.flake8_builtins.builtins_allowed_modules`

## Test Plan

cargo test

## Summary

If an import is marked as "required", we should never flag it as unused.

In practice, this is rare, since required imports are typically used for

`__future__` annotations, which are always considered "used".

Closes https://github.com/astral-sh/ruff/issues/12458.

Now that we have builtins available, resolve some simple cases to the

right builtin type.

We should also adjust the display for types to include their module

name; that's not done yet here.

## Summary

This PR adds support for untitled files in the Red Knot project.

Refer to the [design

discussion](https://github.com/astral-sh/ruff/discussions/12336) for

more details.

### Changes

* The `parsed_module` always assumes that the `SystemVirtual` path is of

`PySourceType::Python`.

* For the module resolver, as suggested, I went ahead by adding a new

`SystemOrVendoredPath` enum and renamed `FilePathRef` to

`SystemOrVendoredPathRef` (happy to consider better names here).

* The `file_to_module` query would return if it's a

`FilePath::SystemVirtual` variant because a virtual file doesn't belong

to any module.

* The sync implementation for the system virtual path is basically the

same as that of system path except that it uses the

`virtual_path_metadata`. The reason for this is that the system

(language server) would provide the metadata on whether it still exists

or not and if it exists, the corresponding metadata.

For point (1), VS Code would use `Untitled-1` for Python files and

`Untitled-1.ipynb` for Jupyter Notebooks. We could use this distinction

to determine whether the source type is `Python` or `Ipynb`.

## Test Plan

Added test cases in #12526

Extend red-knot type inference to cover all syntax, so that inferring

types for a scope gives all expressions a type. This means we can run

the red-knot semantic lint on all Python code without panics. It also

means we can infer types for `builtins.pyi` without panics.

To keep things simple, this PR intentionally doesn't add any new type

inference capabilities: the expanded coverage is all achieved with

`Type::Unknown`. But this puts the skeleton in place for adding better

inference of all these language features.

I also had to add basic Salsa cycle recovery (with just `Type::Unknown`

for now), because some `builtins.pyi` definitions are cyclic.

To test this, I added a comprehensive corpus of test snippets sourced

from Cinder under [MIT

license](https://github.com/facebookincubator/cinder/blob/cinder/3.10/cinderx/LICENSE),

which matches Ruff's license. I also added to this corpus some

additional snippets for newer language features: all the

`27_func_generic_*` and `73_class_generic_*` files, as well as

`20_lambda_default_arg.py`, and added a test which runs semantic-lint

over all these files. (The test doesn't assert the test-corpus files are

lint-free; just that they are able to lint without a panic.)

## Summary

Right now, in the isort comment model, there's nowhere for trailing

comments on the _statement_ to go, as in:

```python

from mylib import (

MyClient,

MyMgmtClient,

) # some comment

```

If the comment is on the _alias_, we do preserve it, because we attach

it to the alias, as in:

```python

from mylib import (

MyClient,

MyMgmtClient, # some comment

)

```

Similarly, if the comment is trailing on an import statement

(non-`from`), we again attach it to the alias, because it can't be

parenthesized, as in:

```python

import foo # some comment

```

This PR adds logic to track and preserve those trailing comments.

We also no longer drop several other comments, like:

```python

from mylib import (

# some comment

MyClient

)

```

Closes https://github.com/astral-sh/ruff/issues/12487.

## Summary

When working on improving Ruff integration with Zed I noticed that it

errors out when we try to resolve a code action of a `QUICKFIX` kind;

apparently, per @dhruvmanila we shouldn't need to resolve it, as the

edit is provided in the initial response for the code action. However,

it's possible for the `resolve` call to fill out other fields (such as

`command`).

AFAICT Helix also tries to resolve the code actions unconditionally (as

in, when either `edit` or `command` is absent); so does VSC. They can

still apply the quickfixes though, as they do not error out on a failed

call to resolve code actions - Zed does. Following suit on Zed's side

does not cut it though, as we still get a log request from Ruff for that

failure (which is surfaced in the UI).

There are also other language servers (such as

[rust-analyzer](c1c9e10f72/crates/rust-analyzer/src/handlers/request.rs (L1257)))

that fill out both `command` and `edit` fields as a part of code action

resolution.

This PR makes the resolve calls for quickfix actions return the input

value.

## Test Plan

N/A

Add support for while-loop control flow.

This doesn't yet include general support for terminals and reachability;

that is wider than just while loops and belongs in its own PR.

This also doesn't yet add support for cyclic definitions in loops; that

comes with enough of its own complexity in Salsa that I want to handle

it separately.

Add a lint rule to detect if a name is definitely or possibly undefined

at a given usage.

If I create the file `undef/main.py` with contents:

```python

x = int

def foo():

z

return x

if flag:

y = x

y

```

And then run `cargo run --bin red_knot -- --current-directory

../ruff-examples/undef`, I get the output:

```

Name 'z' used when not defined.

Name 'flag' used when not defined.

Name 'y' used when possibly not defined.

```

If I modify the file to add `y = 0` at the top, red-knot re-checks it

and I get the new output:

```

Name 'z' used when not defined.

Name 'flag' used when not defined.

```

Note that `int` is not flagged, since it's a builtin, and `return x` in

the function scope is not flagged, since it refers to the global `x`.

## Summary

This PR fixes a bug to raise a syntax error when an unparenthesized

generator expression is used as an argument to a call when there are

more than one argument.

For reference, the grammar is:

```

primary:

| ...

| primary genexp

| primary '(' [arguments] ')'

| ...

genexp:

| '(' ( assignment_expression | expression !':=') for_if_clauses ')'

```

The `genexp` requires the parenthesis as mentioned in the grammar. So,

the grammar for a call expression is either a name followed by a

generator expression or a name followed by a list of argument. In the

former case, the parenthesis are excluded because the generator

expression provides them while in the later case, the parenthesis are

explicitly provided for a list of arguments which means that the

generator expression requires it's own parenthesis.

This was discovered in https://github.com/astral-sh/ruff/issues/12420.

## Test Plan

Add test cases for valid and invalid syntax.

Make sure that the parser from CPython also raises this at the parsing

step:

```console

$ python3.13 -m ast parser/_.py

File "parser/_.py", line 1

total(1, 2, x for x in range(5), 6)

^^^^^^^^^^^^^^^^^^^

SyntaxError: Generator expression must be parenthesized

$ python3.13 -m ast parser/_.py

File "parser/_.py", line 1

sum(x for x in range(10), 10)

^^^^^^^^^^^^^^^^^^^^

SyntaxError: Generator expression must be parenthesized

```

## Summary

Fix panic reported in #12428. Where a string would sometimes get split

within a character boundary. This bypasses the need to split the string.

This does not guarantee the correct formatting of the docstring, but

neither did the previous implementation.

Resolves#12428

## Test Plan

Test case added to fixture

## Summary

These are the first rules implemented as part of #458, but I plan to

implement more.

Specifically, this implements `docstring-missing-exception` which checks

for raised exceptions not documented in the docstring, and

`docstring-extraneous-exception` which checks for exceptions in the

docstring not present in the body.

## Test Plan

Test fixtures added for both google and numpy style.

When poring over traces, the ones that just include a definition or

symbol or expression ID aren't very useful, because you don't know which

file it comes from. This adds that information to the trace.

I guess the downside here is that if calling `.file(db)` on a

scope/definition/expression would execute other traced code, it would be

marked as outside the span? I don't think that's a concern, because I

don't think a simple field access on a tracked struct should ever

execute our code. If I'm wrong and this is a problem, it seems like the

tracing crate has this feature where you can record a field as

`tracing::field::Empty` and then fill in its value later with

`span.record(...)`, but when I tried this it wasn't working for me, not

sure why.

I think there's a lot more we can do to make our tracing output more

useful for debugging (e.g. record an event whenever a

definition/symbol/expression/use id is created with the details of that

definition/symbol/expression/use), this is just dipping my toes in the

water.

<!--

Thank you for contributing to Ruff! To help us out with reviewing,

please consider the following:

- Does this pull request include a summary of the change? (See below.)

- Does this pull request include a descriptive title?

- Does this pull request include references to any relevant issues?

-->

## Summary

<!-- What's the purpose of the change? What does it do, and why? -->

This PR updates D301 rule to allow inclduing escaped docstring, e.g.

`\"""Foo.\"""` or `\"\"\"Bar.\"\"\"`, within a docstring.

Related issue: #12152

## Test Plan

Add more test cases to D301.py and update the snapshot file.

<!-- How was it tested? -->

In preparation for supporting resolving builtins, simplify the benchmark

so it doesn't look up `str`, which is actually a complex builtin to deal

with because it inherits `Sequence[str]`.

Co-authored-by: Alex Waygood <alex.waygood@gmail.com>

Per comments in https://github.com/astral-sh/ruff/pull/12269, "module

global" is kind of long, and arguably redundant.

I tried just using "module" but there were too many cases where I felt

this was ambiguous. I like the way "global" works out better, though it

does require an understanding that in Python "global" generally means

"module global" not "globally global" (though in a sense module globals

are also globally global since modules are singletons).

Support falling back to a global name lookup if a name isn't defined in

the local scope, in the cases where that is correct according to Python

semantics.

In class scopes, a name lookup checks the local namespace first, and if

the name isn't found there, looks it up in globals.

In function scopes (and type parameter scopes, which are function-like),

if a name has any definitions in the local scope, it is a local, and

accessing it when none of those definitions have executed yet just

results in an `UnboundLocalError`, it does not fall back to a global. If

the name does not have any definitions in the local scope, then it is an

implicit global.

Public symbol type lookups never include such a fall back. For example,

if a name is not defined in a class scope, it is not available as a

member on that class, even if a name lookup within the class scope would

have fallen back to a global lookup.

This PR makes the `@override` lint rule work again.

Not yet included/supported in this PR:

* Support for free variables / closures: a free symbol in a nested

function-like scope referring to a symbol in an outer function-like

scope.

* Support for `global` and `nonlocal` statements, which force a symbol

to be treated as global or nonlocal even if it has definitions in the

local scope.

* Module-global lookups should fall back to builtins if the name isn't

found in the module scope.

I would like to expose nicer APIs for the various kinds of symbols

(explicit global, implicit global, free, etc), but this will also wait

for a later PR, when more kinds of symbols are supported.

Adds inference tests sufficient to give full test coverage of the

`UseDefMapBuilder::merge` method.

In the process I realized that we could implement visiting of if

statements in `SemanticBuilder` with fewer `snapshot`, `restore`, and

`merge` operations, so I restructured that visit a bit.

I also found one correctness bug in the `merge` method (it failed to

extend the given snapshot with "unbound" for any missing symbols,

meaning we would just lose the fact that the symbol could be unbound in

the merged-in path), and two efficiency bugs (if one of the ranges to

merge is empty, we can just use the other one, no need for copies, and

if the ranges are overlapping -- which can occur with nested branches --

we can still just merge them with no copies), and fixed all three.

## Summary

This PR allows us to fix both expressions in `foo == "a" or foo == "b"

or ("c" != bar and "d" != bar)`, but limits the rule to consecutive

comparisons, following https://github.com/astral-sh/ruff/issues/7797.

I think this logic was _probably_ added because of

https://github.com/astral-sh/ruff/pull/12368 -- the intent being that

we'd replace the _entire_ expression.

## Summary

This PR adds documentation for the Ruff language server.

It mainly does the following:

1. Combines various READMEs containing instructions for different editor

setup in their respective section on the online docs

2. Provide an enumerated list of server settings. Additionally, it also

provides a section for VS Code specific options.

3. Adds a "Features" section which enumerates all the current

capabilities of the native server

For (2), the settings documentation is done manually but a future

improvement (easier after `ruff-lsp` is deprecated) is to move the docs

in to Rust struct and generate the documentation from the code itself.

And, the VS Code extension specific options can be generated by diffing

against the `package.json` in `ruff-vscode` repository.

### Structure

1. Setup: This section contains the configuration for setting up the

language server for different editors

2. Features: This section contains a list of capabilities provided by

the server along with short GIF to showcase it

3. Settings: This section contains an enumerated list of settings in a

similar format to the one for the linter / formatter

4. Migrating from `ruff-lsp`

> [!NOTE]

>

> The settings page is manually written but could possibly be

auto-generated via a macro similar to `OptionsMetadata` on the

`ClientSettings` struct

resolves: #11217

## Test Plan

Generate and open the documentation locally using:

1. `python scripts/generate_mkdocs.py`

2. `mkdocs serve -f mkdocs.insiders.yml`

## Summary

This PR removes the requirement of `--preview` flag to run the `ruff

server` and instead considers it to be an indicator to turn on preview

mode for the linter and the formatter.

resolves: #12161

## Test Plan

Add test cases to assert the `preview` value is updated accordingly.

In an editor context, I used the local `ruff` executable in Neovim with

the `--preview` flag and verified that the preview-only violations are

being highlighted.

Running with:

```lua

require('lspconfig').ruff.setup({

cmd = {

'/Users/dhruv/work/astral/ruff/target/debug/ruff',

'server',

'--preview',

},

})

```

The screenshot shows that `E502` is highlighted with the below config in

`pyproject.toml`:

<img width="877" alt="Screenshot 2024-07-17 at 16 43 09"

src="https://github.com/user-attachments/assets/c7016ef3-55b1-4a14-bbd3-a07b1bcdd323">

## Summary

This PR updates the settings index building logic in the language server

to consider the fallback settings for applying ignore filters in

`WalkBuilder` and the exclusion via `exclude` / `extend-exclude`.

This flow matches the one in the `ruff` CLI where the root settings is

built by (1) finding the workspace setting in the ancestor directory (2)

finding the user configuration if that's missing and (3) fallback to

using the default configuration.

Previously, the index building logic was being executed before (2) and

(3). This PR reverses the logic so that the exclusion /

`respect_gitignore` is being considered from the default settings if

there's no workspace / user settings. This has the benefit that the

server no longer enters the `.git` directory or any other excluded

directory when a user opens a file in the home directory.

Related to #11366

## Test plan

Opened a test file from the home directory and confirmed with the debug

trace (removed in #12360) that the server excludes the `.git` directory

when indexing.

## Summary

Add new rule and implement for `unnecessary default type arguments`

under the `UP` category (`UP043`).

```py

// < py313

Generator[int, None, None]

// >= py313

Generator[int]

```

I think that as Python 3.13 develops, there might be more default type

arguments added besides `Generator` and `AsyncGenerator`. So, I made

this more flexible to accommodate future changes.

related issue: #12286

## Test Plan

snapshot included..!

## Summary

Pretty sure this should still be an error, but also, I think I added

this because of ecosystem CI? So want to see what pops up.

Closes https://github.com/astral-sh/ruff/issues/12164.

## Summary

This is the _intended_ default that PEP 597 _wants_, but it's not

backwards compatible. The fix is already unsafe, so it's better for us

to recommend the desired and expected behavior.

Closes https://github.com/astral-sh/ruff/issues/12069.

Improve semantic index tests with better assertions than just `.len()`,

and re-add use-definition test that was commented out in the switch to

Salsa initially.

Implements definition-level type inference, with basic control flow

(only if statements and if expressions so far) in Salsa.

There are a couple key ideas here:

1) We can do type inference queries at any of three region

granularities: an entire scope, a single definition, or a single

expression. These are represented by the `InferenceRegion` enum, and the

entry points are the salsa queries `infer_scope_types`,

`infer_definition_types`, and `infer_expression_types`. Generally

per-scope will be used for scopes that we are directly checking and

per-definition will be used anytime we are looking up symbol types from

another module/scope. Per-expression should be uncommon: used only for

the RHS of an unpacking or multi-target assignment (to avoid

re-inferring the RHS once per symbol defined in the assignment) and for

test nodes in type narrowing (e.g. the `test` of an `If` node). All

three queries return a `TypeInference` with a map of types for all

definitions and expressions within their region. If you do e.g.

scope-level inference, when it hits a definition, or an

independently-inferable expression, it should use the relevant query

(which may already be cached) to get all types within the smaller

region. This avoids double-inferring smaller regions, even though larger

regions encompass smaller ones.

2) Instead of building a control-flow graph and lazily traversing it to

find definitions which reach a use of a name (which is O(n^2) in the

worst case), instead semantic indexing builds a use-def map, where every

use of a name knows which definitions can reach that use. We also no

longer track all definitions of a symbol in the symbol itself; instead

the use-def map also records which defs remain visible at the end of the

scope, and considers these the publicly-visible definitions of the

symbol (see below).

Major items left as TODOs in this PR, to be done in follow-up PRs:

1) Free/global references aren't supported yet (only lookup based on

definitions in current scope), which means the override-check example

doesn't currently work. This is the first thing I'll fix as follow-up to

this PR.

2) Control flow outside of if statements and expressions.

3) Type narrowing.

There are also some smaller relevant changes here:

1) Eliminate `Option` in the return type of member lookups; instead

always return `Type::Unbound` for a name we can't find. Also use

`Type::Unbound` for modules we can't resolve (not 100% sure about this

one yet.)

2) Eliminate the use of the terms "public" and "root" to refer to

module-global scope or symbols. Instead consistently use the term

"module-global". It's longer, but it's the clearest, and the most

consistent with typical Python terminology. In particular I don't like

"public" for this use because it has other implications around author

intent (is an underscore-prefixed module-global symbol "public"?). And

"root" is just not commonly used for this in Python.

3) Eliminate the `PublicSymbol` Salsa ingredient. Many non-module-global

symbols can also be seen from other scopes (e.g. by a free var in a

nested scope, or by class attribute access), and thus need to have a

"public type" (that is, the type not as seen from a particular use in

the control flow of the same scope, but the type as seen from some other

scope.) So all symbols need to have a "public type" (here I want to keep

the use of the term "public", unless someone has a better term to

suggest -- since it's "public type of a symbol" and not "public symbol"

the confusion with e.g. initial underscores is less of an issue.) At

least initially, I would like to try not having special handling for

module-global symbols vs other symbols.

4) Switch to using "definitions that reach end of scope" rather than

"all definitions" in determining the public type of a symbol. I'm

convinced that in general this is the right way to go. We may want to

refine this further in future for some free-variable cases, but it can

be changed purely by making changes to the building of the use-def map

(the `public_definitions` index in it), without affecting any other

code. One consequence of combining this with no control-flow support

(just last-definition-wins) is that some inference tests now give more

wrong-looking results; I left TODO comments on these tests to fix them

when control flow is added.

And some potential areas for consideration in the future:

1) Should `symbol_ty` be a Salsa query? This would require making all

symbols a Salsa ingredient, and tracking even more dependencies. But it

would save some repeated reconstruction of unions, for symbols with

multiple public definitions. For now I'm not making it a query, but open

to changing this in future with actual perf evidence that it's better.

## Summary

I believe these should always bind more tightly -- e.g., in:

```python

for _ in bar(baz for foo in [1]):

pass

```

The inner `baz` and `foo` should be considered comprehension variables,

not for loop bindings.

We need to revisit this more holistically. In some of these cases,

`BindingKind` should probably be a flag, not an enum, since the values

aren't mutually exclusive. Separately, we should probably be more

precise in how we set it (e.g., by passing down from the parent rather

than sniffing in `handle_node_store`).

Closes https://github.com/astral-sh/ruff/issues/12339

When there is a function or class definition at the end of a suite

followed by the beginning of an alternative block, we have to insert a

single empty line between them.

In the if-else-statement example below, we insert an empty line after

the `foo` in the if-block, but none after the else-block `foo`, since in

the latter case the enclosing suite already adds empty lines.

```python

if sys.version_info >= (3, 10):

def foo():

return "new"

else:

def foo():

return "old"

class Bar:

pass

```

To do so, we track whether the current suite is the last one in the

current statement with a new option on the suite kind.

Fixes#12199

---------

Co-authored-by: Micha Reiser <micha@reiser.io>

## Summary

This PR updates the server to build the settings index in parallel using

similar logic as `python_files_in_path`.

This should help with https://github.com/astral-sh/ruff/issues/11366 but

ideally we would want to build it lazily.

## Test Plan

`cargo insta test`

## Summary

I don't know that there's more to do here. We could consider not raising

the violation at all for arguments, but that would have some false

negatives and could also be surprising to users.

Closes https://github.com/astral-sh/ruff/issues/12267.

## Summary

Ensures that, e.g., the following is not considered a

redefinition-without-use:

```python

import contextlib

foo = None

with contextlib.suppress(ImportError):

from some_module import foo

```

Closes https://github.com/astral-sh/ruff/issues/12309.

## Summary

Closes https://github.com/astral-sh/ruff/issues/12291.

## Test Plan

```shell

❯ cargo run check ../uv/foo --select INP

/Users/crmarsh/workspace/uv/foo/bar/baz.py:1:1: INP001 File `/Users/crmarsh/workspace/uv/foo/bar/baz.py` is part of an implicit namespace package. Add an `__init__.py`.

Found 1 error.

```

## Summary

I don't fully understand the purpose of this. In #7905, it was just

copied over from the previous non-preview implementation. But it means

that (e.g.) we don't treat `type(self.foo)` as a type -- which is wrong.

Closes https://github.com/astral-sh/ruff/issues/12290.

## Summary

Update the name of `ASYNC109` to match

[upstream](https://flake8-async.readthedocs.io/en/latest/rules.html).

Also update to the functionality to match upstream by supporting

additional context managers from `asyncio` and `anyio`. This doesn't

change any of the detection functionality, but recommends additional

context managers from `asyncio` and `anyio` depending on context.

Part of https://github.com/astral-sh/ruff/issues/12039.

## Test Plan

Added fixture for asyncio recommendation

## Summary

S113 exists because `requests` doesn't have a default timeout, so

request without timeout may hang indefinitely

> B113: Test for missing requests timeout

This plugin test checks for requests or httpx calls without a timeout

specified.

>

> Nearly all production code should use this parameter in nearly all

requests, **Failure to do so can cause your program to hang

indefinitely.**

But httpx has default timeout 5s, so S113 for httpx request without

`timeout` argument is a false positive, only valid case would be

`timeout=None`.

https://www.python-httpx.org/advanced/timeouts/

> HTTPX is careful to enforce timeouts everywhere by default.

>

> The default behavior is to raise a TimeoutException after 5 seconds of

network inactivity.

## Test Plan

snap updated

Intern types using Salsa interning instead of in the `TypeInference`

result.

This eliminates the need for `TypingContext`, and also paves the way for

finer-grained type inference queries.

## Summary

This PR fixes the bug where the server was not considering the

`cells.structure.didOpen` field to sync up the new content of the newly

added cells.

The parameters corresponding to this request provides two fields to get

the newly added cells:

1. `cells.structure.array.cells`: This is a list of `NotebookCell` which

doesn't contain any cell content. The only useful information from this

array is the cell kind and the cell document URI which we use to

initialize the new cell in the index.

2. `cells.structure.didOpen`: This is a list of `TextDocumentItem` which

corresponds to the newly added cells. This actually contains the text

content and the version.

This wasn't a problem before because we initialize the cell with an

empty string and this isn't a problem when someone just creates an empty

cell. But, when someone copy-pastes a cell, the cell needs to be

initialized with the content.

fixes: #12201

## Test Plan

First, let's see the panic in action:

1. Press <kbd>Esc</kbd> to allow using the keyboard to perform cell

actions (move around, copy, paste, etc.)

2. Copy the second cell with <kbd>c</kbd> key

3. Delete the second cell with <kbd>dd</kbd> key

4. Paste the copied cell with <kbd>p</kbd> key

You can see that the content isn't synced up because the `unused-import`

for `sys` is still being highlighted but it's being used in the second

cell. And, the hover isn't working either. Then, as I start editing the

second cell, it panics.

https://github.com/astral-sh/ruff/assets/67177269/fc58364c-c8fc-4c11-a917-71b6dd90c1ef

Now, here's the preview of the fixed version:

https://github.com/astral-sh/ruff/assets/67177269/207872dd-dca6-49ee-8b6e-80435c7ef22e

This reverts commit b28dc9ac14.

We're not ready to stabilize the server yet. There's some pending work

for the VS Code extension and documentation improvements.

This change is to unblock Ruff release.

## Summary

<!-- What's the purpose of the change? What does it do, and why? -->

This is the implementation for the new rule of `pycodestyle (E204)`. It

follows the guidlines described in the contributing site, and as such it

has a new file named `whitespace_after_decorator.rs`, a new test file

called `E204.py`, and as such invokes the `function` in the `AST

statement checker` for functions and functions in classes. Linking #2402

because it has all the pycodestyle rules.

## Test Plan

<!-- How was it tested? -->